A Deep Learning Application for Building Damage Assessment Using Ultra-High-Resolution Remote Sensing Imagery in Turkey Earthquake

Haobin Xia · Jianjun Wu · Jiaqi Yao · Hong Zhu · Adu Gong · Jianhua Yang · Liuru Hu · Fan Mo

Abstract Rapid building damage assessment following an earthquake is important for humanitarian relief and disaster emergency response. In February 2023, two magnitude-7.8 earthquakes struck Turkey in quick succession, affecting more than 30 major cities across nearly 300 km. A quick and comprehensive understanding of the distribution of building damage is essential for efficiently deploying rescue forces during the critical rescue period. This article presents the training of a two-stage convolutional neural network, BDANet, that integrates image features captured before and after the disaster to evaluate the extent of building damage in Islahiye. Using high-resolution WorldView2 remote sensing data, BDANet first extracted building outlines from the pre-disaster imagery and then combined pre- and post-disaster image features to assess building damage. We optimized these results to improve the accuracy of building edges, analyzed the damage to each building, and used population distribution data to estimate the number of people affected and the urgency of rescue at different damage levels. The results indicate that the building area in the Islahiye region was 156.92 ha, of which 26.60 ha was affected. Severely damaged buildings accounted for 15.67% of the total building area in the affected areas. WorldPop population distribution data indicated approximately 253, 297, and 1,246 people in the collapsed, severely damaged, and lightly damaged areas, respectively. Accuracy verification showed that the BDANet model performed well on high-resolution images, and its trained weights can be used to directly assess building damage and provide rapid information for rescue operations in future disasters.

Keywords BDANet · Building damage assessment · Deep learning · Disaster assessment · Emergency rescue · Ultra-high-resolution remote sensing

1 Introduction

Disasters are defined as losses and impacts on people, materials, economies, and the environment resulting from the interaction of hazard events with exposure, vulnerability, and capacity conditions (Uprety and Yamazaki 2012). An integrated disaster management system comprises a pre-disaster prediction phase and a post-disaster management phase, both aimed at minimizing the losses and impacts of disasters. Remote sensing data can provide valuable information for both phases. In the post-disaster management phase, which includes disaster assessment and response, a rapid and comprehensive understanding of the damage distribution is crucial for responding rationally and effectively (Ge et al. 2020). Assessing building damage in the immediate aftermath of a disaster is important because the extent of damage directly affects the exposed population and can considerably affect rescue efforts (Wu et al. 2016). It is therefore crucial to shorten the time needed to identify damaged buildings, because individuals trapped under collapsed structures usually survive for approximately 48 h (Karimzadeh et al. 2017). Surveying an entire disaster area shortly after a major earthquake is challenging and hazardous. Moreover, high-magnitude earthquakes can severely damage communication and transportation systems, further complicating the process. Remote sensing enables rapid, wide-area observation and response, thereby avoiding the difficulties of on-site investigations. Remote sensing data can be used effectively for post-disaster building damage assessment, provided that the data are selected appropriately based on factors such as sensor type, spatial resolution, revisit time, and the availability of pre-disaster data and auxiliary maps (Yamazaki and Matsuoka 2007).

Accurate and rapid assessment of building damage after an earthquake is crucial for effective humanitarian relief and disaster response (Mangalathu et al. 2020). With the continuous advancement of remote sensing technology, it has become possible to monitor large areas at high resolution (Silva et al. 2022). High-resolution remote sensing imagery provides comprehensive regional information. However, analyzing disaster areas with traditional remote sensing methods requires professional expertise, and the larger the area, the more time- and resource-intensive the analysis becomes, often taking several months to complete. During the brief window for rescue after a disaster, damaged buildings must be located rapidly and accurately, which calls for deep-learning-based recognition methods. Such methods can be automated to deliver results within a few hours, significantly enhancing the effectiveness of rescue operations.

In recent years, deep learning has been widely used in computer vision, where it has achieved significant breakthroughs. Its advantage is the ability to automatically extract image features from shallow to deep layers. Consequently, many researchers have applied it to building damage assessment. Xu et al. (2019) investigated the effectiveness of convolutional neural networks (CNNs) in detecting building damage. Wang et al. (2019) evaluated a Faster R-CNN model with a ResNet101 backbone for assessing damage to historic buildings. Weber and Kané (2020) treated building damage assessment as a semantic segmentation task in which damage levels were assigned to different class labels; their model was trained on the xBD dataset (Gupta et al. 2019). Beyond image-based deep learning methods, Hansapinyo et al. (2020) developed a GIS-based adaptive neuro-fuzzy inference system for automatically assessing building damage. In deep learning models, image segmentation is vital for building damage assessment. These methods can be roughly divided into two categories: those that use only post-disaster imagery and those that use pre- and post-disaster imagery together. Ci et al. (2019) framed building damage assessment after the 2014 Ludian Earthquake as a multi-class classification problem and trained a CNN on post-disaster imagery to predict the extent of damage. Similarly, Nex et al. (2019) developed three models for three platforms (satellites, manned aircraft, and drones) to assess earthquake damage. However, segmentation based on post-disaster images alone cannot accurately extract the original building outlines and therefore cannot provide comprehensive information on pre- to post-disaster changes. Building damage assessment with paired remote sensing images resembles change detection, as both aim to identify changes in images. However, building damage assessment requires not only identifying the changed area but also classifying the degree of damage.

The model used in this study identifies building cells individually while preserving information on undamaged buildings, yielding richer results. Although paired images provide contrasting information, their features must be fused by deep learning models to exploit this information effectively. BDANet, the model used in this study, is a two-stage CNN framework (Shen et al. 2021). In stage one, a U-Net network (Ronneberger et al. 2015), an architecture commonly used for high-spatial-resolution building segmentation (Liu et al. 2020), extracts the locations of buildings. In stage two, the model applies a two-branch multiscale U-Net structure to the building segmentation results of stage one to assess the extent of building damage. Because buildings vary in scale, the model incorporates a multi-scale feature fusion (MFF) module to merge features from different scales. To strengthen the correlation between pre- and post-disaster images, BDANet introduces an attention mechanism (Liu et al. 2020), which is used to construct a cross-directional attention (CDA) module that fuses and enhances the features of both images. In addition, some building damage levels are visually similar, such as no damage and minor damage, which can cause confusion between categories (Shen et al. 2021). Data augmentation is an effective strategy for separating hard-to-distinguish classes and improving model performance (Shorten and Khoshgoftaar 2019), and it is widely used in dataset preprocessing. The CutMix technique (Yun et al. 2019) combines two image samples to create new images, thereby improving the generalization ability of the model. In this study, only the hard-to-distinguish categories were augmented, so that the model was trained on more varied samples of these categories.

This study addresses the scarcity of image samples depicting building damage in Islahiye, Turkey, which hampers comprehensive disaster assessment. To overcome this limitation, we trained the BDANet model on the xBD submeter building damage dataset. xBD is a large-scale building damage assessment dataset released by Carnegie Mellon University's Software Engineering Institute and the U.S. Defense Innovation Unit; it includes pre- and post-event satellite imagery for various disaster events, along with building polygons, ordinal labels indicating the level of damage, and the corresponding satellite metadata (Gupta et al. 2019). Using WorldView2 high-resolution remote sensing imagery, this study rapidly evaluated building damage in the area, starting from the pre-disaster building locations. BDANet trained on xBD showed good transferability: the model maintained high accuracy in both building identification and damage assessment in the study area. The trained model weights can be applied to building damage assessment with remote sensing images of similar resolution, reducing the time required for sample collection and model training and thus facilitating rapid post-disaster assessment. This study used morphological methods to optimize the model results so that they closely resemble the actual building contours. On this basis, the number of damaged cells in each building was counted, and damage was assessed with the building as the basic unit. Finally, by combining these results with WorldPop data, the number of affected people can be estimated to provide a rapid evaluation of disaster severity.

2 Materials and Methods

As shown in Fig. 1, the study area was the town of Islahiye (37°1′N, 36°38′E) in Gaziantep Province, southern Turkey. The town covers an area of 821 km² and was struck by the two magnitude-7.8 earthquakes in Kahramanmaraş on 6 February 2023, which caused considerable damage to buildings. The earthquakes critically affected a large area and were followed by frequent aftershocks. Figure 1a depicts ongoing rescue efforts, and Fig. 1b shows the extent of building damage imaged from a drone platform. Figure 1c captures the clearing of building debris and the rescue of survivors, illustrating the relationship between damaged buildings and rescue operations. Figure 1d shows Islahiye in a WorldView2 image.

Fig. 1 An overview of the affected area. a Rescue efforts. b Severely damaged buildings imaged using a drone platform. c Collapsed buildings in Islahiye. d Overview of Islahiye

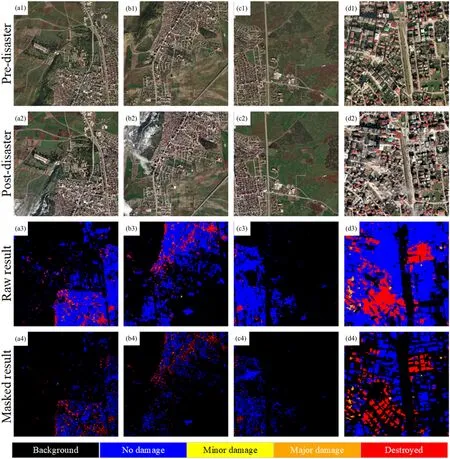

The pre- and post-disaster optical remote sensing images used in this study were acquired by the WorldView2 high-resolution satellite. The images have a resolution of 0.5 m; the pre-disaster data for the Islahiye area were captured on 27 December 2022 and the post-disaster data on 8 February 2023. High-resolution imagery provides significant ground-level detail for accurately assessing the condition of buildings, as shown in Fig. 2, where the two rows display the pre- and post-disaster images. Because of the viewing angle and image quality, the image captured on 8 February 2023 was strongly tilted, as shown in Fig. 2 c2, and relatively blurry, which may interfere with subsequent processing. Manual identification of building damage is time-consuming and can easily miss subtle damage. Therefore, this study used a deep learning model to automatically extract deep features, analyze each area, and make rapid judgments. Because the earthquake caused substantial and highly variable damage to buildings, this study used the pre-disaster imagery as the reference for the original extent of the buildings.

2.1 Building Damage Assessment and Analysis Method Based on Optical Imagery

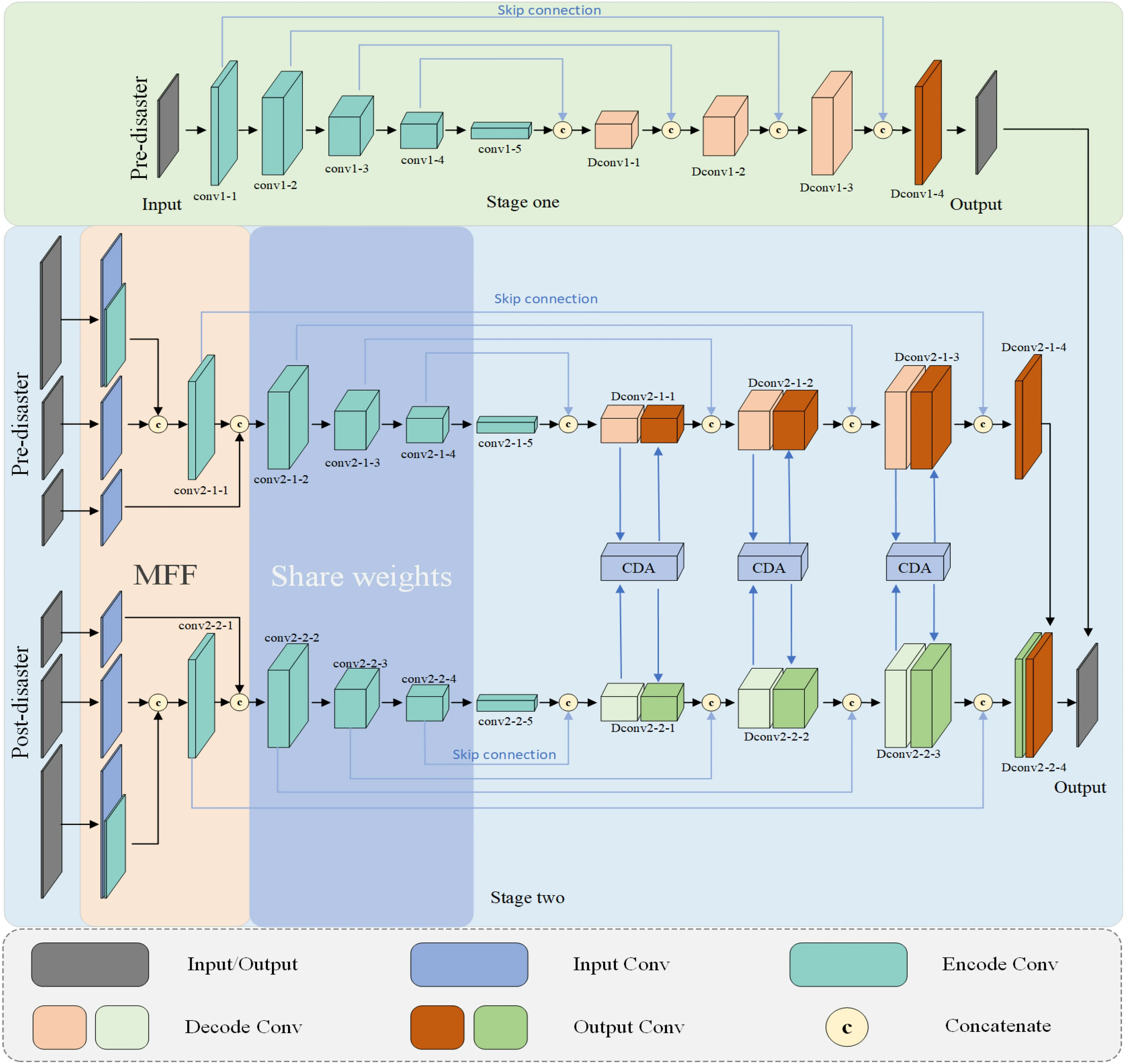

Building damage analysis consists of three steps: detection, segmentation, and evaluation. Several building damage analysis algorithms are now relatively mature. Using very high-resolution (VHR) optical and synthetic aperture radar (SAR) images, Brunner et al. (2010) predicted the post-disaster appearance of buildings from their pre-disaster three-dimensional parameters and compared these predictions with the buildings' actual post-disaster characteristics. Zhu et al. (2021) converted aerial video into a dataset, proposed the MSNet architecture, and segmented building damage instances. Whereas most models rely primarily on post-disaster imagery for damage assessment, this study used the two-stage neural network BDANet to overcome the resulting difficulties; its overall architecture is shown in Fig. 3.

Fig. 3 BDANet model structure. MFF = Multi-scale feature fusion; CDA = Cross-directional attention. Source: Adapted from Shen et al. (2021)

In the first stage, as depicted in Fig. 3, only pre-disaster imagery was used as input to train the network to identify building objects. The first-stage architecture is based on U-Net (Ronneberger et al. 2015), whose skip connections retain the original image features and fuse them with deep features, helping to prevent gradient explosion and other training problems. The encoder uses ResNet (He et al. 2016) as the backbone. It applies a strided convolution to downsample the input image to half its original resolution, followed by multiple convolutional layers that extract high-dimensional image features. The decoder receives the low-level features passed through the skip connections and gradually upsamples the high-dimensional feature map back to the original image size, recovering details lost during high-dimensional feature extraction. Finally, the first-stage network outputs an image segmentation probability map $M_b \in \mathbb{R}^{2\times H\times W}$, where $H$ and $W$ are the height and width of the map, respectively. The first channel is the probability of background and the second channel the probability of a building. As in conventional image segmentation tasks, this stage uses a cross-entropy loss function.
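To make the first-stage output and loss concrete, the following dependency-free sketch computes a per-pixel softmax over the two channels of $M_b$ and the mean pixel-wise cross-entropy. It assumes the network emits raw logits for each channel; it is an illustration, not the BDANet code, which is written in PyTorch (where `torch.nn.CrossEntropyLoss` performs the same computation).

```python
import math


def softmax2(bg_logit, bld_logit):
    """Numerically stable two-class softmax for one pixel -> (p_background, p_building)."""
    m = max(bg_logit, bld_logit)
    e_bg = math.exp(bg_logit - m)
    e_bld = math.exp(bld_logit - m)
    total = e_bg + e_bld
    return e_bg / total, e_bld / total


def pixel_cross_entropy(logits, labels):
    """Mean pixel-wise cross-entropy.

    logits: nested lists shaped [2][H][W] (channel 0 = background, channel 1 = building),
            mirroring the 2 x H x W layout of M_b.
    labels: nested lists shaped [H][W] holding 0 (background) or 1 (building).
    """
    h, w = len(labels), len(labels[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            probs = softmax2(logits[0][y][x], logits[1][y][x])
            total -= math.log(probs[labels[y][x]])
    return total / (h * w)


# Toy 1 x 2 map: pixel 0 leans towards "building", pixel 1 towards "background".
logits = [[[0.0, 2.0]], [[2.0, 0.0]]]
labels = [[1, 0]]
loss = pixel_cross_entropy(logits, labels)
```

Both toy pixels are predicted correctly with the same margin, so the loss equals log(1 + e^-2) for each pixel and for the mean.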

Because post-disaster imagery contains many damaged buildings, it is difficult for the second-stage network to handle both building localization and damage assessment. Therefore, the weights from the first stage were used to support the second-stage predictions.

In the second stage, pre- and post-disaster images were fed into two branches. Because the backbone was the same as in the first stage, the second-stage backbone was initialized directly with the weights of the trained first-stage model, and the backbones of the two branches shared weights. BDANet incorporates an MFF module in the second-stage encoder to capture building characteristics at various scales and enhance the robustness of the model. As shown in the MFF section of the second stage in Fig. 4, the input image was represented at three scales (native resolution, 0.5× resolution, and 0.25× resolution), creating three input pathways. In the first pathway, the native-resolution image passes through a convolutional layer with a stride of two, producing a feature map half the size of the original image. In the second pathway, a convolutional layer matched to the input size of the backbone network converts the 0.5× resolution image directly into a feature map of identical dimensions, which is then concatenated with the feature map from the first pathway. To match the number of input channels expected by the original ResNet backbone, the concatenated feature map is then reduced in channel dimension. In the third pathway, the 0.25× resolution image is concatenated directly with the output of the first convolutional layer of ResNet and serves as the input to the second convolutional layer. Through these three pathways, features of different scales are incorporated into the network to enhance its multiscale robustness.
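The sketch below illustrates, under stated assumptions, how the three MFF inputs can be produced and why the first two pathways line up spatially. The 2 × 2 average pooling used for downsampling and the 3 × 3 kernel with padding 1 are assumptions; the paper does not state the resampling method or kernel sizes. Pure-Python lists are used so that it runs without dependencies.

```python
def avg_pool2(img):
    """Halve a single-band image (list of rows, even size) with 2 x 2 average pooling."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(w)
        ]
        for y in range(h)
    ]


def three_scale_inputs(img):
    """Native, 0.5x and 0.25x versions of a tile, one per MFF pathway."""
    half = avg_pool2(img)
    quarter = avg_pool2(half)
    return img, half, quarter


def conv_out_size(size, kernel, stride, padding):
    """Standard output-size formula for a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# A 512-pixel training tile: pathway 1 (native, stride 2) and pathway 2 (0.5x, stride 1)
# yield feature maps of the same spatial size, so they can be concatenated.
# The 3 x 3 kernel with padding 1 is an assumption.
p1 = conv_out_size(512, kernel=3, stride=2, padding=1)
p2 = conv_out_size(512 // 2, kernel=3, stride=1, padding=1)
```

Both pathways give 256 × 256 maps for a 512-pixel tile, which is what makes channel-wise concatenation possible before the channel reduction.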

The second-stage module must further exploit the correlation between pre- and post-disaster features. Building on squeeze-and-excitation (SE) blocks (Roy et al. 2018), BDANet uses a CDA module to recalibrate features along both the channel and spatial dimensions. The CDA module combines feature information from the pre- and post-disaster images and integrates it into the network, as illustrated in Fig. 4.
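The CDA module itself is specified in Shen et al. (2021). As a hedged illustration of the building block it extends, the sketch below implements concurrent channel and spatial SE recalibration in the spirit of Roy et al. (2018) on a single feature map. How CDA exchanges attention between the pre- and post-disaster branches is not reproduced, and merging the two gated maps by element-wise addition is an assumption of this sketch. The weights are passed in as plain lists; in a real network they would be learned.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_se(feat, w1, w2):
    """Channel SE: squeeze each channel to its mean, excite via FC-ReLU-FC-sigmoid, rescale.

    feat: [C][H][W]; w1: [R][C] and w2: [C][R] are the two fully connected layers.
    """
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    z = [sum(sum(row) for row in ch) / (h * w) for ch in feat]
    hidden = [max(0.0, sum(w1[r][k] * z[k] for k in range(c))) for r in range(len(w1))]
    s = [sigmoid(sum(w2[k][r] * hidden[r] for r in range(len(hidden)))) for k in range(c)]
    return [[[v * s[k] for v in row] for row in feat[k]] for k in range(c)]


def spatial_se(feat, wc):
    """Spatial SE: a 1 x 1 convolution (one weight per channel) + sigmoid gives a per-pixel gate."""
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    q = [[sigmoid(sum(wc[k] * feat[k][y][x] for k in range(c))) for x in range(w)]
         for y in range(h)]
    return [[[feat[k][y][x] * q[y][x] for x in range(w)] for y in range(h)] for k in range(c)]


def scse(feat, w1, w2, wc):
    """Concurrent channel and spatial recalibration, merged by element-wise addition (assumed)."""
    a, b = channel_se(feat, w1, w2), spatial_se(feat, wc)
    return [[[av + bv for av, bv in zip(ar, br)] for ar, br in zip(ac, bc)]
            for ac, bc in zip(a, b)]
```

With all weights at zero, both gates equal sigmoid(0) = 0.5, so the summed output reproduces the input; non-zero weights shift emphasis toward informative channels and pixels.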

Based on this approach, the model was trained on the xBD dataset (Gupta et al. 2019). The xBD dataset, built from imagery of the DigitalGlobe Open Data Program, is currently the only publicly available large-scale satellite imagery dataset for both building segmentation and damage assessment. It offers high-resolution image pairs of buildings around the world before and after disasters, together with their annotations. Each image is 1,024 × 1,024 pixels, has three RGB bands, and has a resolution of 0.8 m. The dataset covers 19 disaster events. Building damage is categorized into four levels: no damage, minor damage, major damage, and destroyed. No damage is by far the largest class, accounting for 76.04% of the samples; minor damage accounts for 8.98%, and major damage and destroyed for 7.29% and 7.69%, respectively (Shen et al. 2021). Because of this class imbalance, we used CutMix (Yun et al. 2019) data augmentation to increase the sample sizes of the difficult classes, such as minor and major damage. Because the resolution of this dataset is similar to that of WorldView2 images, a model trained on its pairs of disaster-damaged building images and annotations is suitable for assessing damage in the remote sensing images of Islahiye.
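A minimal sketch of CutMix adapted to segmentation, assuming single-band images and per-cell label masks as Python lists: a box whose area fraction is about 1 − λ, with λ ~ Beta(α, α) as in Yun et al. (2019), is copied from one sample into another, and the label mask is pasted with the same box, so no λ-weighted label mixing is needed. In the bi-temporal setting, the same box would presumably be applied to the pre-disaster image, the post-disaster image, and the mask; restricting augmentation to hard classes could be done by drawing the second sample only from tiles containing minor or major damage. Both points are assumptions, not details given in the paper.

```python
import math
import random


def cutmix(img_a, mask_a, img_b, mask_b, alpha=1.0, rng=random):
    """Paste one random box from (img_b, mask_b) into copies of (img_a, mask_a).

    All inputs are H x W lists of rows with identical shapes. Returns the mixed image,
    the mixed label mask and the box as (y0, y1, x0, x1), half-open.
    """
    h, w = len(img_a), len(img_a[0])
    lam = rng.betavariate(alpha, alpha)            # lambda ~ Beta(alpha, alpha)
    cut_ratio = math.sqrt(1.0 - lam)               # box covers ~(1 - lambda) of the area before clipping
    cut_h, cut_w = int(h * cut_ratio), int(w * cut_ratio)
    cy, cx = rng.randrange(h), rng.randrange(w)    # box centre, uniform over the image
    y0, y1 = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    x0, x1 = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    out_img = [row[:] for row in img_a]
    out_mask = [row[:] for row in mask_a]
    for y in range(y0, y1):
        for x in range(x0, x1):
            out_img[y][x] = img_b[y][x]
            out_mask[y][x] = mask_b[y][x]          # labels are pasted with the same box
    return out_img, out_mask, (y0, y1, x0, x1)
```

Passing a seeded `random.Random` instance as `rng` makes the augmentation reproducible.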

2.2 Model Result Optimization and Building-by-Building Damage Assessment

After training, the weights with the highest accuracy were saved and used to predict the extent of building damage. To allow the results to be optimized, we did not take the model's final classification output directly. Instead, we obtained the probability maps of building location and of the four damage levels, denoted $M_p \in \mathbb{R}^{5\times H\times W}$. As shown in Fig. 5, a pre-disaster image block was input in stage one, and the output building layer, denoted $M_{p1}$, gives the probability that each pixel is a building. In the second stage, the pre- and post-disaster image blocks were input simultaneously, and four layers of damage-level probabilities (Level 1, Level 2, Level 3, and Level 4) were output, denoted $M_{p2}$, $M_{p3}$, $M_{p4}$, and $M_{p5}$.
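The following sketch shows one way the five layers of $M_p$ can be turned into a per-cell damage map: a cell is kept only if its building probability exceeds a threshold, and kept cells receive the damage level with the highest probability. It assumes probabilities on the [0, 255] scale described in this section and a strict `>` comparison; the function name is hypothetical.

```python
def classify_pixels(mp, building_threshold):
    """Turn a 5-layer probability stack into a per-cell label map.

    mp[0] is the building layer (M_p1); mp[1]..mp[4] are damage levels 1-4 (M_p2..M_p5).
    Cells whose building probability does not exceed the threshold get label 0;
    other cells get the damage level (1-4) with the highest probability.
    """
    h, w = len(mp[0]), len(mp[0][0])
    labels = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mp[0][y][x] > building_threshold:
                damage = [mp[k][y][x] for k in range(1, 5)]
                labels[y][x] = 1 + damage.index(max(damage))
    return labels
```

Keeping the full probability stack, rather than the argmax alone, is what allows the threshold and morphological steps to be tuned afterwards without rerunning the network.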

The first layer of $M_p$, produced by the U-Net-based stage one with a ResNet backbone, is the building probability map $M_{p1}$. If every cell with a nonzero building probability is treated as a building, the edges may not be sufficiently smooth, adjacent buildings may merge, or many small patches may appear. Therefore, after the predicted image blocks were merged, a threshold was first applied to eliminate features with a low probability of being a building. The building probability values in the image range from 0 to 255. To find the threshold with the highest accuracy, we generated building masks for thresholds starting at 10 with a step size of 10, binarizing the image at each threshold so that the cell at horizontal and vertical coordinates $(x, y)$ was set to 1 if its building probability exceeded the threshold and to 0 otherwise. We used Intersection over Union (IoU) on the samples to measure the accuracy of the results at each threshold. Figure 6 shows that the IoU was highest at a threshold of 70.
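The threshold search described above can be sketched as follows: binarize the building probability map at thresholds 10, 20, …, 250 and keep the threshold with the highest IoU against a reference mask. The strict `>` comparison and the tie-breaking toward the smaller threshold are assumptions; the toy data are illustrative, not the study's samples.

```python
def binarize(prob, threshold):
    """M(x, y) = 1 if prob(x, y) > threshold else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in prob]


def iou(pred, truth):
    """Intersection over Union of two binary masks (1.0 if both are empty)."""
    inter = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    union = sum(p | t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    return inter / union if union else 1.0


def best_threshold(prob, truth, start=10, stop=255, step=10):
    """Sweep thresholds start, start + step, ... (< stop); return (best_threshold, best_iou).

    Ties are resolved in favour of the smallest threshold.
    """
    scores = {t: iou(binarize(prob, t), truth) for t in range(start, stop, step)}
    best = max(scores, key=scores.get)
    return best, scores[best]


prob = [[85, 65, 200], [10, 75, 30]]
truth = [[1, 0, 1], [0, 1, 0]]
result = best_threshold(prob, truth)
```

In this toy example, a threshold of 60 still admits the 65-valued cell and 80 drops the 75-valued one, so only 70 reproduces the reference mask exactly.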

Figures 6a, b, and c show the results at thresholds of 20, 70, and 200, respectively. As the threshold increased, the image patches shrank until the building location map aligned with the building outlines in the pre-disaster imagery. Figure 6a shows that when the threshold was small, the output extended far beyond the real building extent, whereas when the threshold was much larger than 70, as in Fig. 6c, the output shrank substantially. An erosion operation was then applied to the building outlines using the erode function.

Here, erode(X, Y) denotes the erosion function and the kernel denotes the structuring element (erosion kernel). The kernel traverses the entire image with a fixed step, which removes cells at building edges and reduces connectivity between adjacent buildings. In this study, an 11 × 11 square kernel was constructed using the image-processing functions provided by OpenCV. Because building boundaries are typically straight, a square kernel flattens the distribution of building pixels and brings it closer to the real building distribution. After these preprocessing steps, we multiplied the matrix $M_{res}$ element-wise by $M_{eroded}$. This set all non-building cells to zero and removed features incorrectly identified as buildings.
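A dependency-free sketch of the erosion and masking steps: binary erosion with a k × k square structuring element (k = 11 in the study), followed by the element-wise product that zeroes result cells outside the eroded mask. With OpenCV, the erosion step would look roughly like `cv2.erode(mask, cv2.getStructuringElement(cv2.MORPH_RECT, (11, 11)))`. Ignoring out-of-image positions at the borders is a choice of this sketch, and reading $M_{res}$ as the per-cell result map is our interpretation.

```python
def erode(mask, k=11):
    """Binary erosion with a k x k square structuring element (k odd).

    A cell stays 1 only if every in-image cell of the k x k window centred on it is 1;
    positions outside the image are ignored.
    """
    r = k // 2
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = int(all(
                mask[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ))
    return out


def apply_mask(result, mask):
    """Element-wise product: zero every result cell outside the (eroded) building mask."""
    return [[v * m for v, m in zip(rv, mv)] for rv, mv in zip(result, mask)]
```

For example, eroding a 5 × 5 block of building cells with a 3 × 3 kernel leaves its 3 × 3 core, and multiplying a result map by that mask keeps only those nine cells.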

Fig. 6 Intersection over Union (IoU) at different thresholds

Building contours were extracted from $M_{eroded}$ (Suzuki and Abe 1985) and used to count the cells in each damage class within each structure, which allowed us to grade the degree of damage to individual buildings. The grade of each building was computed from $P_1$, $P_2$, $P_3$, and $P_4$, the numbers of cells in each of the four damage classes within a single building contour, using the rounding-up function ceiling(X). After compiling these statistics for every building, a map was created to assess the damage to each building.
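The per-building grading step can be sketched as below. Two substitutions are made and should be read as assumptions: OpenCV's `cv2.findContours` implements the Suzuki and Abe (1985) border-following algorithm, but to stay dependency-free the sketch uses 4-connected component labelling to delimit buildings; and because the paper's grading formula is not reproduced here, the rule used (ceiling of the count-weighted mean damage level) is a hypothetical stand-in that merely uses $P_1$ to $P_4$ and a ceiling, as the text describes.

```python
import math
from collections import deque


def label_components(mask):
    """4-connected component labelling of a binary mask -> (label map, number of components)."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    current = 0
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] and not labels[sy][sx]:
                current += 1
                labels[sy][sx] = current
                queue = deque([(sy, sx)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = current
                            queue.append((ny, nx))
    return labels, current


def building_grades(labels, n_buildings, damage):
    """One grade per building from per-class cell counts P1..P4.

    damage: per-cell damage level (1-4, 0 = none).
    HYPOTHETICAL rule: grade = ceiling of the count-weighted mean damage level.
    """
    counts = {b: [0, 0, 0, 0] for b in range(1, n_buildings + 1)}
    for lrow, drow in zip(labels, damage):
        for b, d in zip(lrow, drow):
            if b and 1 <= d <= 4:
                counts[b][d - 1] += 1
    grades = {}
    for b, (p1, p2, p3, p4) in counts.items():
        total = p1 + p2 + p3 + p4
        grades[b] = math.ceil((p1 + 2 * p2 + 3 * p3 + 4 * p4) / total) if total else 0
    return grades
```

Under this rule, a building with cells at levels 1, 1, 1, and 2 averages 1.25 and is graded 2, so any damaged cells push the building grade upward.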

3 Results

The deep-learning-based building assessment algorithm enables large-scale, rapid damage evaluation of buildings, and the accuracy of its results can be further improved by optimization. However, because the algorithm evaluates each pixel individually, we additionally identified building contours and re-evaluated the damage for each building to better reflect the impact on individual buildings. Finally, this study integrated population distribution data with the evaluation results to quantify the extent of the disaster.

3.1 Building Segmentation and Damage Assessment

The model was implemented in PyTorch. Because of the model's complexity, the experiments were run on a computer equipped with an Intel i9-9920X CPU and two NVIDIA TITAN V GPUs. Samples were resized to 512 × 512 pixels for training, whereas testing was performed on original images of 1,024 × 1,024 pixels. In addition to augmenting the sample data with CutMix in the initial stage, basic data augmentation, such as flipping and rotation, was applied during training. Owing to GPU limitations, all compared networks used ResNet-50 as the backbone and were initialized with pretrained weights provided by PyTorch at the start of training. The convolutional blocks in the building segmentation and damage assessment stages had identical sizes. Both stages used cross-entropy as the loss function and were optimized with Adam. A confusion matrix was generated on the xBD test set. Each WorldView2 scene measured 17,408 × 17,408 pixels, and four scenes, overlapping by 1,024 pixels, were required to cover the entire town of Islahiye. The images were therefore cropped into 1,024 × 1,024 pixel tiles. The model requires pairs of pre- and post-disaster images for prediction, and its output is 1,024 × 1,024 × 5, where the first channel represents the building location and the second to fifth channels represent the probabilities of the four building damage levels.
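The tiling arithmetic is simple but worth making explicit: 17,408 / 1,024 = 17, so each scene splits into 17 × 17 = 289 tiles, or 1,156 tiles for four scenes. The sketch below enumerates tile origins and reassembles per-tile predictions into a full-scene map. It assumes tiles do not overlap within a scene and ignores the overlap between scenes, which would be handled when mosaicking; the function names are hypothetical.

```python
def tile_origins(size, tile=1024):
    """Top-left (row, col) offsets of non-overlapping tile x tile crops of a square scene."""
    if size % tile:
        raise ValueError("scene size must be a multiple of the tile size")
    return [(y, x) for y in range(0, size, tile) for x in range(0, size, tile)]


def stitch(tiles, size, tile=1024):
    """Reassemble per-tile predictions {origin: tile rows} into one size x size map."""
    out = [[0] * size for _ in range(size)]
    for (oy, ox), block in tiles.items():
        for dy, row in enumerate(block):
            out[oy + dy][ox:ox + tile] = row
    return out


tiles_per_scene = len(tile_origins(17408))   # 17 x 17 = 289 tiles per scene
tiles_total = 4 * tiles_per_scene            # four scenes cover Islahiye
```

Restitching before thresholding and erosion keeps buildings that straddle tile borders in one piece.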

Based on the output of the first layer, cells with a building probability greater than five were selected, and each selected cell was assigned the damage level with the highest probability among the four damage-level probability images. However, because BDANet uses many convolutional layers, its direct classification results suffer from uneven edges. In addition, the high-resolution images retain fine details such as vehicles and small clouds, which produce many small patches in the final map. To minimize this interference, highlight building outlines, and reduce the merging of adjacent patches, we restitched the tile predictions into four images of the same size as the original scenes, which minimizes the splitting of building contours caused by cropping. First, a threshold was applied to the first layer; a value of 70 was chosen after stepwise testing. Cells with low building probability were then removed, and building outlines were obtained through binarization. Next, the area of each footprint was calculated, and any patch smaller than 25 m² was removed. In addition, the erosion (erode) function was used to separate touching buildings, bringing the patches closer to the true building contours. This process lays the foundation for a more accurate assessment of building damage. After the mask for all buildings was obtained, the output was clipped with it again to obtain the optimized result shown in Fig. 7, whose rows show the pre-disaster image, the post-disaster image, the raw model output, and the morphologically optimized result. Columns a–c show these at a large scale, and column d shows the situation at a small scale. Evidently, the initial building identification was inadequate: some plots between buildings were incorrectly identified as buildings, causing individual building patches to merge, so the street boundaries in Fig. 7 a3 and b3 appear blurred. After morphological optimization, the building edges became clearer because cells with a low probability of being buildings, such as roads, courtyards, and bare ground, were eliminated. As Fig. 7 d4 shows, the optimization improves the detailed representation and positioning accuracy of the buildings and reproduces their pre-earthquake form.
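At the 0.5 m resolution used here, each cell covers 0.25 m², so the 25 m² minimum patch area corresponds to 100 cells. The sketch below removes connected patches smaller than that. The use of 4-connectivity is an assumption, since the paper does not state which connectivity was used.

```python
from collections import deque

PIXEL_SIZE_M = 0.5                                  # WorldView2 cell size used in this study
MIN_AREA_M2 = 25.0                                  # patches smaller than this are discarded
MIN_CELLS = int(MIN_AREA_M2 / PIXEL_SIZE_M ** 2)    # 25 / 0.25 = 100 cells


def remove_small_patches(mask, min_cells=MIN_CELLS):
    """Return a copy of a binary mask with 4-connected patches of < min_cells cells set to 0."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if out[sy][sx] and not seen[sy][sx]:
                seen[sy][sx] = True
                queue, cells = deque([(sy, sx)]), []
                while queue:                         # breadth-first flood fill of one patch
                    y, x = queue.popleft()
                    cells.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and out[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if len(cells) < min_cells:
                    for y, x in cells:
                        out[y][x] = 0
    return out
```

Expressing the cutoff as an area rather than a cell count keeps the rule meaningful if imagery with a different resolution is used.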

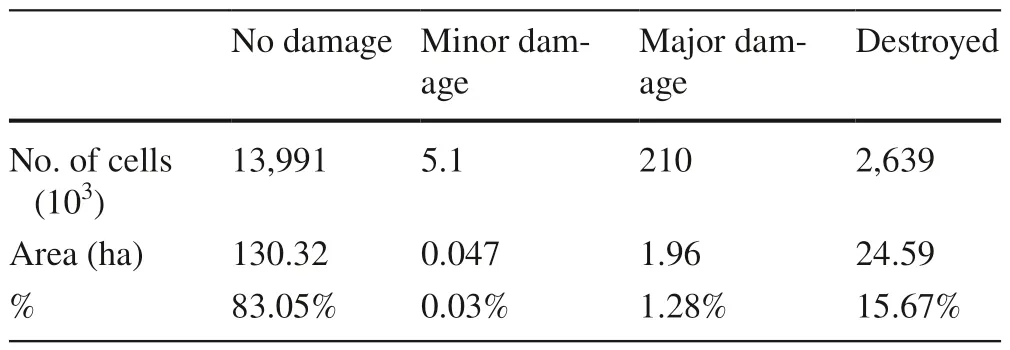

Based on the optimized results, Table 1 lists the built-up area of Islahiye and the area in each damage class. Many severely damaged buildings collapsed completely, so most of the damaged building pixels were classified as destroyed, which makes it difficult to reflect the overall damage to individual buildings. Further analyses were therefore performed to obtain more useful information on building damage.
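Areas such as those in Table 1 follow from per-class cell counts by a unit conversion. Assuming the areas were computed on the 0.5 m grid (0.25 m² per cell), the 156.92 ha built-up area corresponds to 6,276,800 cells and the 26.60 ha affected area to 1,064,000 cells. The sketch shows the arithmetic; the class counts in the usage example are placeholders, not the study's data.

```python
PIXEL_SIZE_M = 0.5                      # WorldView2 cell size used in this study
PIXEL_AREA_M2 = PIXEL_SIZE_M ** 2       # 0.25 m^2 per cell
M2_PER_HA = 10_000


def cells_to_ha(n_cells):
    """Convert a cell count on the 0.5 m grid to hectares."""
    return n_cells * PIXEL_AREA_M2 / M2_PER_HA


def ha_to_cells(area_ha):
    """Convert hectares to the nearest whole number of 0.5 m cells."""
    return round(area_ha * M2_PER_HA / PIXEL_AREA_M2)


def class_shares(cells_by_class):
    """Area (ha) and share of the total for each class, from per-class cell counts."""
    total = sum(cells_by_class.values())
    return {name: (cells_to_ha(n), n / total) for name, n in cells_by_class.items()}


# Placeholder counts for illustration only.
shares = class_shares({"undamaged": 3000, "destroyed": 1000})
```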

3.2 Building-by-Building Damage Assessment and Affected Population Analysis

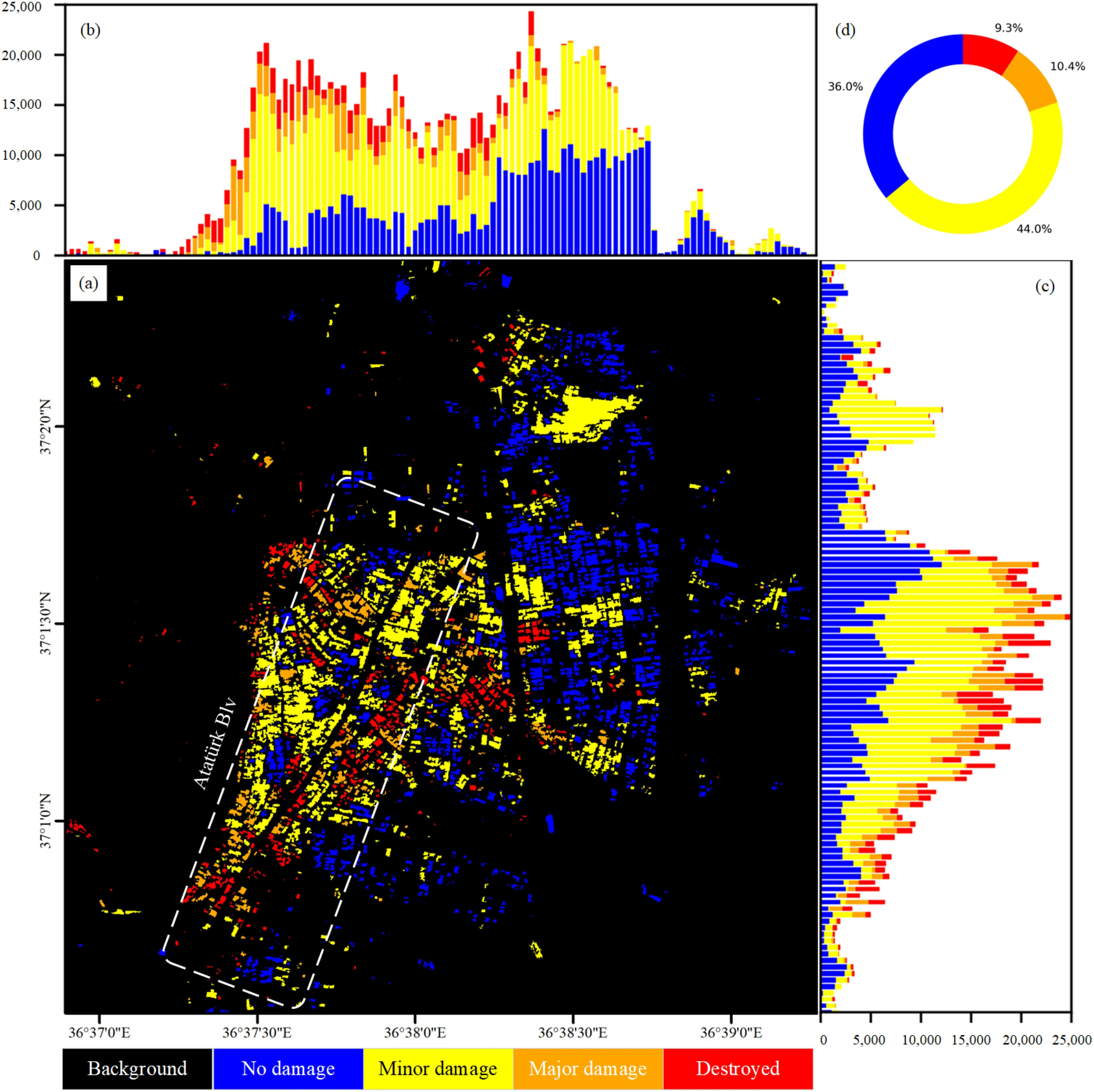

This study further assessed the extent of damage to individual buildings in Islahiye. Figure 8a presents a damage assessment for each building, which is clearer and more straightforward than the per-pixel results in Fig. 7. Figures 8b and c show the spatial distribution of damaged buildings in the town as cell counts per 100-pixel-wide strip along the horizontal and vertical directions, respectively. Figure 8d shows the proportion of cells in each damage class across the entire image. Figure 8a also indicates that most of the damaged buildings along Atatürk Boulevard, in the primary residential area of Islahiye, were older and less earthquake-resistant.
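Profiles like those in Figs. 8b and c can be produced by counting cells of a given damage level in consecutive 100-cell-wide strips. The sketch below is a minimal illustration; encoding damage levels as integers 1 to 4 in the label map is an assumption, and it does not reproduce the figure's styling.

```python
def strip_counts(labels, level, bin_width=100, axis="x"):
    """Count cells of one damage level in consecutive strips bin_width cells wide.

    axis="x" bins by column index (a profile along the horizontal direction);
    axis="y" bins by row index (a profile along the vertical direction).
    """
    h, w = len(labels), len(labels[0])
    n = w if axis == "x" else h
    counts = [0] * ((n + bin_width - 1) // bin_width)   # ceiling division: last strip may be narrower
    for y in range(h):
        for x in range(w):
            if labels[y][x] == level:
                counts[(x if axis == "x" else y) // bin_width] += 1
    return counts
```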

Because the distribution of damaged buildings correlates strongly with the affected population, this study analyzed the impact on the population using WorldPop (Sorichetta et al. 2015). WorldPop is a raster product of global population distribution with a resolution of 100 × 100 m. It results from a collaboration between the University of Southampton, Université Libre de Bruxelles, and the University of Louisville, and is supported by the Bill and Melinda Gates Foundation. Global population data were provided for 2010, 2015, and 2020. This study used the 2020 population data for the Islahiye region, and the analysis results are presented in Table 2.

Most of Islahiye's population is concentrated in the town center and the western part of the town. As illustrated in Fig. 8, this area suffered the most severe housing damage during the earthquake, affecting a large number of people. Although the distribution of buildings is strongly correlated with the population distribution, the relationship does not always hold; in this study area, for instance, the population density of the eastern industrial area is notably lower than that of the western residential area. To respond more accurately to the disaster and emergency, it is therefore critical to multiply the damage-grade raster by the population distribution raster. This identifies areas with high population density and severe damage that require urgent attention during rescue operations. As shown in Fig. 9, although there were more houses on the east side, the emergency situation there was less severe than in the old residential area on the west side. Therefore, rescue efforts should focus on the central and western areas, which have higher population densities and suffered more extensive building damage.

Because the resolution of the building data is much finer than that of the population density data, the final output was presented on a 100 m × 100 m grid, which gives a rough indication of the urgency of rescue efforts in the Islahiye region.
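A hedged sketch of the raster multiplication at the population grid's resolution: the 0.5 m damage-grade raster is averaged over 200 × 200 blocks (one 100 m WorldPop cell) and multiplied by the population in that cell. The specific index (mean grade times population) and the assumption that the two grids are already co-registered are ours; the paper does not give the exact formula behind Fig. 9.

```python
def block_mean(grid, block):
    """Average a fine raster over non-overlapping block x block windows (remainder dropped)."""
    h, w = len(grid) // block, len(grid[0]) // block
    return [
        [
            sum(grid[by * block + dy][bx * block + dx]
                for dy in range(block) for dx in range(block)) / block ** 2
            for bx in range(w)
        ]
        for by in range(h)
    ]


CELLS_PER_POP_CELL = int(100 / 0.5)   # 200 damage cells of 0.5 m span one 100 m WorldPop cell


def urgency_index(damage_grades, population, block=CELLS_PER_POP_CELL):
    """HYPOTHETICAL index: mean damage grade in each 100 m cell times that cell's population."""
    mean_grade = block_mean(damage_grades, block)
    return [[g * p for g, p in zip(grow, prow)] for grow, prow in zip(mean_grade, population)]
```

Aggregating the fine raster up to the coarse grid, rather than resampling population down to 0.5 m, avoids implying population detail that the 100 m data do not contain.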

4 Discussion

To validate the reliability of this method and confirm the consistency between building distribution and population distribution in the area, this section verifies the accuracy of the results and examines the correlation between the two distributions.

Fig. 7 Comparison between raw and optimized results. The first and second rows show the pre- and post-disaster images, respectively. The third row shows the raw results from BDANet, and the fourth row shows the optimized results. a–c Images and results on a large scale. d Images and results on a small scale

Table 1 Building areas

4.1 Accuracy Verification

Fig. 8 Building-by-building damage assessment. a Damage assessment for each building. b–c Histograms of pixels along the meridian and parallel directions. d Overall statistics of pixels

Table 2 Population affected by the earthquake

This study used BDANet’s building prediction probability map and applied a threshold to eliminate patches with low building probabilities, after which an erosion-dilation operation was used to further refine patch shapes. Erosion removes small protruding pixels from patch edges as well as small, anomalous patches, causing patch edges to shrink inward. After the subsequent dilation, the edges become relatively smooth. This process provides a more accurate basis for subsequent building damage assessment. Within the study area, the proposed method can effectively separate most adjoining building patches. However, some buildings may have colors similar to those of the surrounding roads or bare ground, resulting in a lower probability of being identified as buildings. These areas may be eliminated during thresholding, leading to incomplete building patches or the removal of some buildings altogether. Moreover, the probability threshold is set empirically, and its effectiveness can only be evaluated through iterative trial and error, an approach that lacks theoretical support.
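The thresholding and erosion-dilation refinement can be sketched with NumPy alone. Erosion followed by dilation with the same structuring element is the morphological opening operation (SciPy offers it as `scipy.ndimage.binary_opening`). The 0.5 threshold and the 3 × 3 square structuring element are illustrative assumptions, since the values used in this study are not given here, and all helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _neighborhoods(mask: np.ndarray) -> np.ndarray:
    """Return every pixel's 3 x 3 neighborhood; pixels outside the image count as False."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)


def erode(mask: np.ndarray) -> np.ndarray:
    # A pixel stays "building" only if its whole 3 x 3 neighborhood is building.
    return _neighborhoods(mask).all(axis=(-2, -1))


def dilate(mask: np.ndarray) -> np.ndarray:
    # A pixel becomes "building" if any pixel in its 3 x 3 neighborhood is building.
    return _neighborhoods(mask).any(axis=(-2, -1))


def refine_building_mask(prob: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Threshold a building-probability map, then erode and dilate the result."""
    mask = prob >= threshold
    return dilate(erode(mask))


prob = np.zeros((7, 7))
prob[1:4, 1:4] = 0.9   # a compact 3 x 3 building
prob[2, 4] = 0.8       # a one-pixel protrusion on its edge
prob[5, 5] = 0.9       # an isolated speck
prob[6, 0] = 0.3       # low-probability pixel removed by the threshold
refined = refine_building_mask(prob)
print(refined.astype(int))
```

In the toy example, the protrusion and the isolated speck disappear while the compact 3 × 3 building survives intact, mirroring the edge-smoothing effect described above. It also illustrates the drawback noted above: any genuine building narrower than the structuring element would be removed as well.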

Fig. 9 Rescue urgency

In this study, we transferred BDANet, trained on the xBD dataset, to the Islahiye region of Turkey for building damage assessment without requiring additional training samples. The results were relatively reliable, demonstrating the high versatility of models trained on submeter-resolution imagery. Because the study area is small, there were too few local building damage samples to train a model. Transferring a pretrained model addresses this shortage and saves the time required for sample production, so the overall workflow is shorter than the conventional one of producing training samples, training a model, and predicting regional results. Using a pretrained submeter-resolution model to rapidly predict building damage can therefore significantly reduce model training time, which is crucial for promptly assessing disaster situations. However, the model performed poorly on atypical buildings, such as those with brown-gray roofs in Turkey, so prediction accuracy is not ideal in certain areas. In the future, we plan to optimize the model to address this issue.

The BDANet model enables rapid evaluation of high-resolution images acquired before and after disasters while maintaining accuracy comparable to that of other building extraction models. Owing to the complexity of the disaster-stricken areas in Turkey, this study lacked ground-truth data on building damage. To verify the results, this study therefore used visual interpretation of sample areas to check the positioning accuracy of buildings. Accuracy (precision) and recall were computed as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

where TP represents true positives, FP false positives, and FN false negatives.
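Given a predicted building mask and a visually interpreted reference mask on the same grid, the counts TP, FP, and FN, and hence precision and recall, can be computed pixel-wise as below. This is a sketch assuming both masks are binary NumPy arrays; `precision_recall` is a hypothetical helper, and the toy masks are illustrative only.

```python
import numpy as np


def precision_recall(pred: np.ndarray, truth: np.ndarray) -> tuple:
    """Pixel-wise precision and recall of a predicted building mask."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    tp = np.count_nonzero(pred & truth)    # building in both masks
    fp = np.count_nonzero(pred & ~truth)   # predicted building, absent in reference
    fn = np.count_nonzero(~pred & truth)   # reference building that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


pred = np.array([[1, 1, 0],
                 [1, 0, 0]])
truth = np.array([[1, 0, 0],
                  [1, 1, 1]])
p, r = precision_recall(pred, truth)
print(f"precision={p:.3f}, recall={r:.3f}")
```

True negatives never enter either ratio, which is why the large background share of a remote sensing scene does not inflate these scores the way it would inflate overall pixel accuracy.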

Three building areas in different parts of Islahiye, namely a residential area, the town center, and the suburbs, were chosen for visual interpretation to capture the town’s overall characteristics. The verification results are presented in Fig. 10.

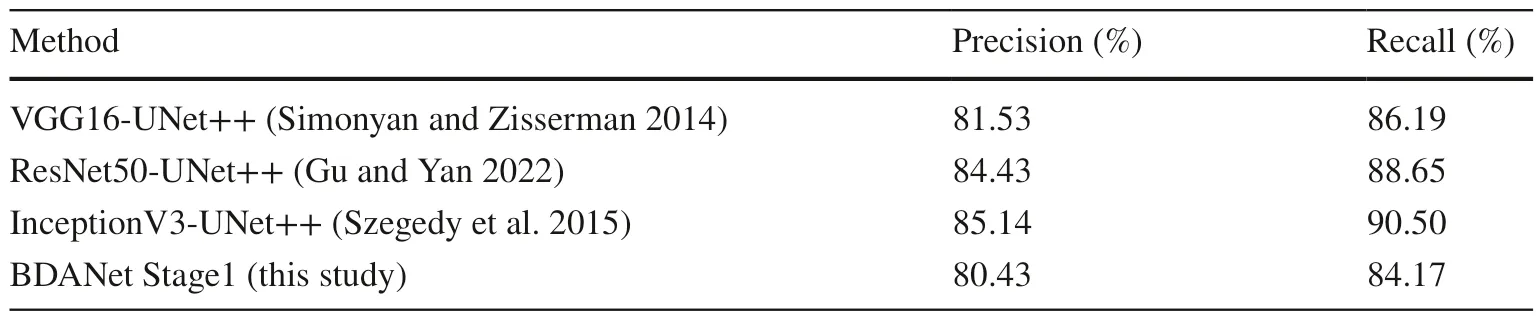

Based on cell counts, the prediction accuracy was 80.43% and the recall was 84.17%. Owing to unique architectural features and the lighting and shadow conditions at the time of image acquisition, the model may fail to identify certain buildings accurately, as illustrated in Fig. 10c. The deep learning model used here extracts buildings from high-resolution remote sensing images about as effectively as other models, including building extraction models trained without transfer, such as the UNet++ series. UNet++ (Zhou et al. 2018) applied to the Mnih building dataset achieved an accuracy of approximately 85% and a recall of approximately 90% (Gu and Yan 2022). The models and their accuracies are listed in Table 3.

In general, building identification was relatively accurate and reflected the actual distribution of buildings in Islahiye well.

4.2 Building Damage Assessment

Table 3 Comparison of different methods for building extraction

As human activities are typically centered around buildings, assessing damage on a building-by-building basis, in combination with existing remote sensing products for population distribution, can estimate the number of earthquake victims more accurately, provide quick reference data, and guide rescue efforts. Early deep learning approaches to building damage assessment treated the recognition of damaged building areas as an image segmentation task. Damaged buildings, however, often lose their original outlines, and models without reference to pre-disaster imagery may fail to extract the precise extent of each building. This limitation makes it difficult to support the building-by-building analysis proposed here. The two-stage BDANet used in this study instead uses the building localization results of the first stage to guide damage assessment in the second stage. Through the CDA mechanism, it integrates the image channels and location features of the pre- and post-disaster images, providing more accurate building location information and an objective comparison of each building before and after the disaster. The overall damage to each building was calculated from the building location probability and the damage assessment results provided by BDANet. The damage to each building was then combined with population distribution data to identify areas with significant building damage and high population density. To demonstrate the relationship between the distribution of damaged buildings and the affected population, we quantified the relationship between the distributions of buildings and population. After counting the building pixels that fall within each pixel of the population image, we reclassified both the building pixel counts and the population into three levels and created a bivariate graduated statistical chart (Fig. 11).
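The text does not spell out how pixel-level outputs are turned into a single damage level per building. One plausible scheme, shown here as an assumption rather than the authors' method, is a majority vote of the pixel damage grades inside each building patch, weighted by the building probability. Building patches are assumed to be labeled already (for instance with `scipy.ndimage.label` on the refined mask), and `per_building_damage` is a hypothetical name.

```python
import numpy as np


def per_building_damage(labels: np.ndarray, damage: np.ndarray,
                        building_prob: np.ndarray, n_grades: int = 4) -> np.ndarray:
    """Assign one damage grade to each labeled building patch.

    labels: int array, 0 = background, 1..N = building patch IDs.
    damage: int array of per-pixel damage grades in [0, n_grades).
    building_prob: per-pixel building probability, used as the vote weight.
    Returns an array of length N + 1 whose entry k is the grade of patch k;
    entry 0 (background) is set to -1.
    """
    n_labels = int(labels.max()) + 1
    votes = np.zeros((n_labels, n_grades))
    # Accumulate probability-weighted votes for every (patch, grade) pair.
    np.add.at(votes, (labels.ravel(), damage.ravel()), building_prob.ravel())
    grades = votes.argmax(axis=1)
    grades[0] = -1
    return grades


labels = np.array([[1, 1, 0, 2],
                   [1, 1, 0, 2]])
damage = np.array([[3, 3, 0, 1],
                   [2, 3, 0, 0]])
prob = np.array([[0.9, 0.8, 0.1, 0.7],
                 [0.6, 0.9, 0.2, 0.4]])
grades = per_building_damage(labels, damage, prob)
print(grades)
```

Weighting by building probability lets confident building pixels dominate the vote, so uncertain edge pixels that may belong to roads or rubble have less influence on the grade assigned to the whole building.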

In Fig. 11, cells whose color matches the diagonal of the legend represent locations where the class of building pixel count matches the class of population density. This indicates that areas with dense buildings also have larger populations; therefore, building damage during earthquakes is closely related to casualties.
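The bivariate classification behind Fig. 11 can be sketched as follows. The building pixel count per population cell (obtainable by block-summing a binary building mask) and the population per cell are each reclassified into three levels, and the pair of levels selects one of the nine legend cells; cells on the legend diagonal are those where the two levels agree. Splitting at the 1/3 and 2/3 quantiles (terciles) is an assumption, since the class breaks are not stated, and the function names are hypothetical.

```python
import numpy as np


def tercile_classes(values: np.ndarray) -> np.ndarray:
    """Reclassify values into levels 0, 1, 2 using the 1/3 and 2/3 quantiles."""
    breaks = np.quantile(values, [1 / 3, 2 / 3])
    # digitize: < breaks[0] -> 0, [breaks[0], breaks[1]) -> 1, >= breaks[1] -> 2
    return np.digitize(values, breaks)


def bivariate_classes(building_pixels: np.ndarray, population: np.ndarray):
    """Combine two 3-level classifications into a 3 x 3 bivariate code."""
    b = tercile_classes(building_pixels)
    p = tercile_classes(population)
    combined = 3 * b + p        # 0..8, one code per legend cell
    on_diagonal = b == p        # building level matches population level
    return combined, on_diagonal


building_pixels = np.array([10, 200, 5000, 50, 3000, 400])
population = np.array([1, 20, 300, 2, 150, 400])
combined, on_diagonal = bivariate_classes(building_pixels, population)
print(combined, on_diagonal)
```

With terciles, each variable contributes roughly equal numbers of cells to each level; natural breaks or fixed thresholds would be equally valid choices and could change which cells fall on the diagonal.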

However, we approximated the population density by resampling the population distribution data at a lower resolution. Population estimates derived in this way are less accurate and can serve only as a reference for short-term decision making. Although the current population distribution data have low resolution, they provide a general idea of the location of disaster-stricken areas. Higher-resolution, more accurate population data would give a more precise picture of the affected population and the most severely impacted areas.

5 Conclusion

The BDANet model was trained on the xBD dataset. Using high-resolution WorldView2 remote sensing imagery captured before and after the 2023 Turkey earthquake, we conducted a building damage assessment and optimized the results to obtain accurate building positioning data for a building-by-building damage assessment. After optimization, we compared the accuracy of the results with that of mainstream building-identification models and found that the method still maintains a high level of precision, indicating its robustness. After assessing the damage to each building, we analyzed the affected population using population distribution data. Subsequently, we examined areas with many collapsed buildings and dense populations to determine the urgency of rescue efforts.

Fig. 11 Bivariate graduated statistical chart of the distribution of buildings and population

Based on the building positioning probability map provided by the model, a probability threshold was set to remove patches with low building probabilities. An image erosion-dilation operation was then performed to sharpen the building boundaries in the original model output. This improved the overall accuracy of the results and provided a more reliable basis for assessing the damage to each building.

This study used the optimized results to assess building damage on an individual basis, analyzed the impact on the population using demographic data, and categorized the urgency of rescue efforts in Islahiye. The study verified the model's versatility and showed that the building damage assessment process can easily be replicated using the weights of the trained model. This avoids the time that would otherwise be spent producing training samples and training a model, an efficiency that is significant for disaster impact assessment in the immediate aftermath of a disaster.

Acknowledgments This work was supported by the Third Xinjiang Scientific Expedition Program (Grant 2022xjkk0600).

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

International Journal of Disaster Risk Science, 2023, Issue 6
