A Future Perspective on In-Sensor Computing

Wen Pan, Jiyuan Zheng, Lai Wang, Yi Luo

a Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

b Beijing National Research Center for Information Science and Technology, Tsinghua University, Beijing 100084, China

The use of artificial intelligence (AI) is escalating rapidly in most applications, thanks to breakthroughs in biology and mathematics. Novel hardware systems are urgently needed to meet the computing-capacity and energy-efficiency requirements of AI. One of the major aims of AI is to mimic the functions of the human brain, which arise from the massive interconnection of neurons. For example, the visual cortex is the region of the brain that processes visual information. The human vision system, which includes the visual cortex, is highly compact and energy efficient. The retina contains hundreds of millions of light-sensitive neurons interconnected by preprocessing and control neurons, which enhance image quality, extract features, and recognize objects. Once light-sensitive neurons have detected trivial signals, they are subsequently disabled, and only the critical information is transferred to the cortex for deep processing.

However, the artificial imaging hardware systems in common use at present do not function like the human visual system. Sensors such as charge-coupled device (CCD) arrays and complementary metal oxide semiconductor (CMOS) arrays are connected serially to memory and processing units through bus lines (i.e., the von Neumann architecture). Although current imaging hardware has advantages over the human visual system in sensing-unit density, response time, and sensitive wavelength range, its power consumption and processing latency become problematic when a complex AI task is performed. In most image processing applications, more than 90% of the data generated by sensors is redundant and useless [1]. As the number of pixels increases rapidly, the volume of unnecessary data multiplies, imposing a severe burden on analog-to-digital conversion (ADC) and data movement and limiting the development of real-time image processing technology [2]. As a result, AI workloads rapidly exhaust hardware resources. Thus, there is strong demand for a breakthrough in hardware systems, which is expected to emerge soon.
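To make the scaling argument concrete, the sketch below estimates the raw data rate that a conventional sensor must digitize and move, and the upper bound on the useful part of that stream if more than 90% of it is redundant. The frame sizes, 10-bit ADC depth, and 30 fps frame rate are hypothetical example values, not figures from the cited works, and the helper names are illustrative.

```python
"""Back-of-the-envelope raw data rate of a conventional image sensor.

Hypothetical example values (not from the cited works): the frame sizes,
a 10-bit ADC, and 30 frames per second.
"""


def raw_data_rate_bits(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Bits per second that must be digitized and moved off the sensor array."""
    return width * height * bits_per_pixel * fps


def useful_data_rate_bits(raw_rate: float, redundant_fraction: float) -> float:
    """Upper bound on the useful part of the stream, given a redundant fraction."""
    if not 0.0 <= redundant_fraction <= 1.0:
        raise ValueError("redundant_fraction must lie in [0, 1]")
    return raw_rate * (1.0 - redundant_fraction)


if __name__ == "__main__":
    BITS = 10        # hypothetical ADC resolution
    FPS = 30         # hypothetical frame rate
    REDUNDANT = 0.9  # "more than 90%" redundant, so 10% useful is an upper bound
    for w, h in [(1280, 720), (1920, 1080), (3840, 2160)]:
        raw = raw_data_rate_bits(w, h, BITS, FPS)
        useful = useful_data_rate_bits(raw, REDUNDANT)
        print(f"{w}x{h}: raw {raw / 1e9:.2f} Gb/s, useful <= {useful / 1e9:.2f} Gb/s")
```

Going from 1920 × 1080 to 3840 × 2160 quadruples the pixel count and therefore the raw stream, so the redundant traffic through the ADCs and bus lines grows in proportion; this is the burden that in-sensor computing tries to remove at the source.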

Inspired by the human vision system, researchers have attempted to shift some processing tasks to the sensors, thereby allowing in situ computing and reducing data movement. For example, Mead and Mahowald [3] at the California Institute of Technology proposed the AI vision chip in the 1990s. They envisioned a semiconductor chip that could capture images, directly carry out parallel processing of visual information, and output the processing results. Early vision chips aimed to imitate the retina's preprocessing function but could only achieve low-level processing, such as image filtering and edge detection [2]. Low-level processing gradually proved insufficient, and high-level processing, including recognition and classification, became the goal for AI vision chips. Around 2006, researchers proposed programmable vision chips, with the goal of flexibly handling various processing scenarios through software control [4]. In 2021, Liao et al. [5] summarized the principles of the biological retina and discussed developments in neuromorphic vision sensors based on emerging devices. Wan et al. [6] provided an overview of electronic, optical, and hybrid optoelectronic computing technologies for neuromorphic sensory computing.

There are currently two main types of vision chip architecture [2,4,7].

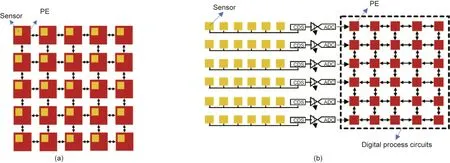

(1) Architectures with computing inside the sensing units. In this type of architecture, the photodetector is integrated directly with the analog memory and computing unit to form a processing element (PE) [4,8,9]. Each PE therefore senses in situ and processes the analog signals obtained by its own sensor. This type of architecture, illustrated in Fig. 1(a) [10], has the advantage of highly parallel, high-speed processing. However, the analog memory and computing unit occupies a large area, which makes each PE much larger than its sensor; this results in a low pixel fill factor and limits the image resolution.

(2) Architectures with computing near the sensing units. Because of the low fill factor, most vision chips cannot adopt the in situ sensing-and-computing architecture. Instead, the pixel array and the processing circuits are physically separated while still being connected in parallel on the same chip [4,7], which allows each to be designed independently according to the system's requirements. This type of architecture is illustrated in Fig. 1(b) [10]. The (analog) sensing data are first read out of the sensor array through the bus lines and converted into digital signals, which are then processed in the nearby processing unit. This architecture supports wide-area image processing, high resolution, and large-scale parallel processing. In addition, AI algorithms, including artificial neural networks, can run in the digital processing circuits of this architecture.

Fig. 1. Vision chip architecture. (a) Computing inside the sensing unit; (b) computing near the sensing unit. CDS: correlated double sampling. Reproduced from Ref. [10] with permission of IEEE, ©2014.
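A minimal sketch of the near-sensor data path in Fig. 1(b) follows, assuming an ideal uniform n-bit ADC and using a 3 × 3 Laplacian edge filter as a stand-in for the digital processing unit (edge detection being one of the low-level tasks early vision chips targeted). The function names, the 1 V reference, and the 8-bit resolution are hypothetical, and real readout chains include steps such as CDS that are omitted here.

```python
"""Near-sensor data path: analog readout -> ADC -> digital edge detection.

Hypothetical assumptions: pixel voltages lie in [0, V_REF], the ADC is an ideal
uniform quantizer, and a 3x3 Laplacian stands in for the digital processing unit.
"""

from typing import List

V_REF = 1.0  # hypothetical ADC full-scale voltage (V)

LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]


def adc(voltage: float, bits: int = 8) -> int:
    """Map a voltage to an n-bit code, clipping to the [0, V_REF] input range."""
    levels = (1 << bits) - 1
    clipped = min(max(voltage, 0.0), V_REF)
    return round(clipped / V_REF * levels)


def read_out(analog: List[List[float]], bits: int = 8) -> List[List[int]]:
    """Digitize the array row by row, as data leave the pixel array over the bus."""
    return [[adc(v, bits) for v in row] for row in analog]


def edge_map(codes: List[List[int]]) -> List[List[int]]:
    """Apply the 3x3 Laplacian to interior pixels; border outputs are left at 0."""
    h, w = len(codes), len(codes[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(
                LAPLACIAN[dy + 1][dx + 1] * codes[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
    return out


if __name__ == "__main__":
    # A dark-to-bright vertical step: the edge shows up as a +/- pair of responses.
    scene = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    for row in edge_map(read_out(scene)):
        print(row)
```

Every pixel passes through the ADC before any processing happens, which is exactly the conversion and data-movement cost discussed earlier; in the computing-inside architecture of Fig. 1(a), a comparable filter would instead act on analog values within each PE.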

Current vision chips have a neuron scale of only $10^{2}$–$10^{3}$, far smaller than that of the retina and cortex ($10^{10}$). Therefore, larger-scale integration technology is needed to achieve a greater neuron scale for in-sensor computing. One approach is to use convolutional neural networks (CNNs) and spiking neural networks (SNNs) to significantly improve processing efficiency. The other is to adopt three-dimensional (3D) integration technology, which vertically stacks the functional layers (sensor, memory, computing, communication, etc.) using through-silicon vias (TSVs) [11]. In 2017, Sony proposed a 3D integrated vision chip with a pixel resolution of 1296 × 976 and a processing speed of 1000 frames per second (fps) [12]. Some researchers believe that 3D integrated chips have become an inevitable trend. However, 3D integration technology still requires further development in areas such as architecture design and interconnections. It has been demonstrated that, although short interconnects can lower power consumption and latency, they can introduce thermal problems because of the short distance between layers [13,14]. Thus, it is crucial to solve the reliability issues of 3D integration and to improve its performance.

Driven by the need for AI development, technologies involving novel material systems and advanced devices have recently been emerging.

(1) Detect-and-memorize (DAM) materials. Photonic synaptic devices [15-20] have been proposed as a means of constructing in-sensor computing systems and are expected to facilitate the evolution of retina-mimicking technologies. Some metal oxides (oxide semiconductors, binary oxides, etc.), oxide heterojunctions, and two-dimensional (2D) materials [15] have been found to hold great potential as DAM materials for photonic synaptic devices. Photonic synapses possess temporary memory and synaptic plasticity, such as short-term plasticity (STP) and long-term plasticity (LTP), which can be modulated by light signals to implement real-time image processing. These devices offer ultrahigh propagation speed and high bandwidth, and they also provide a noncontact writing method. However, some issues remain to be addressed, including nonlinear writing and high energy consumption due to the relatively large illumination intensity required. During writing, potentiation is achieved under optical stimuli, while electrical stimuli are used for habituation [21]. Specifically, the device conductance increases gradually under a series of photonic pulses owing to photogenerated electrons and holes, and decreases gradually under negative electrical pulses, similar to potentiation and depression in a biological synapse. Hence, a negative photoresponse is desired so that habituation can also be achieved under optical stimulation [15,22]. Most studies focus on mimicking synaptic behaviors (excitatory postsynaptic current (EPSC), paired-pulse facilitation (PPF), STP, LTP, etc.) in devices, as imitating the retinal neurons of the human eye remains a major challenge. To imitate the retina, the scaling-up of photonic synaptic devices requires further study. Among DAM materials, devices based on binary oxides (e.g., ZnO, HfO₂, and AlOₓ) have the advantages of a simple device structure and CMOS compatibility, which are decisive factors for scaling-up. In contrast, materials that are incompatible with integrated circuit (IC) infrastructure can be used by adopting technologies such as heterogeneous integration [23], heteroepitaxy [24], bonding [25], and 3D heterogeneous integration [14].

(2) Device structures that combine sensor and memory. Researchers have proposed replacing PEs with advanced devices such as storage elements (e.g., resistive random-access memory (RRAM) and other memristors) [26-28]. For example, combining the device-intrinsic features of a sensor element and a memory element connected in series [26] makes the sensor array programmable and converts the light image into information that can be easily recognized. This structure reduces the footprint of a single pixel to the theoretical limit of $4F^{2}$ (where $F$ is the feature size of the process), allowing integration with a high fill factor. Unlike a CCD, however, this array does not show destructive read-out and does not exhibit integrating behavior. In such an array, multiply-and-accumulate (MAC) operations can be implemented directly in the analog domain through Ohm's law and Kirchhoff's current law [2,29]; however, crosstalk caused by large-scale integration is an urgent problem that remains to be solved. Researchers have also proposed a system composed of single-photon avalanche diodes (SPADs) and memristors [30,31] that processes information in the form of spike events, which could enable real-time image recognition.
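The sketch below illustrates two points from the list above under deliberately simplified assumptions: (i) a phenomenological photonic-synapse update in which each optical pulse raises conductance by a fixed fraction of the remaining headroom and each negative electrical pulse lowers it, reproducing the gradual, nonlinear potentiation and depression described for DAM devices; and (ii) an ideal crossbar MAC, where each cell contributes a current $V_i G_{ij}$ (Ohm's law) and each column wire sums those currents (Kirchhoff's current law), giving $I_j = \sum_i V_i G_{ij}$. The update rule, constants, and function names are hypothetical and are not a fitted model of any cited device; crosstalk and wire resistance are ignored.

```python
"""Phenomenological photonic-synapse updates feeding an ideal crossbar MAC.

Illustrative assumptions only (not a fitted device model): an optical pulse
raises conductance by ALPHA times the remaining headroom, and a negative
electrical pulse lowers it by BETA times its distance from G_MIN. Units:
microsiemens (uS) and volts, so column currents come out in microamperes.
"""

from typing import List

G_MIN, G_MAX = 1.0, 10.0  # hypothetical conductance window (uS)
ALPHA, BETA = 0.2, 0.2    # hypothetical per-pulse update fractions


def optical_pulse(g: float) -> float:
    """Potentiation: the step shrinks as g approaches G_MAX (nonlinear writing)."""
    return g + ALPHA * (G_MAX - g)


def electrical_pulse(g: float) -> float:
    """Depression under a negative electrical pulse: g relaxes toward G_MIN."""
    return g - BETA * (g - G_MIN)


def pulse_train(g: float, n: int, optical: bool) -> List[float]:
    """Apply n identical pulses and return the conductance after each one."""
    trace = []
    for _ in range(n):
        g = optical_pulse(g) if optical else electrical_pulse(g)
        trace.append(g)
    return trace


def crossbar_mac(voltages: List[float], g: List[List[float]]) -> List[float]:
    """Ideal column currents I_j = sum_i V_i * G_ij.

    Ohm's law gives each cell current V_i * G_ij; Kirchhoff's current law sums
    the cell currents on each column wire.
    """
    if len(voltages) != len(g):
        raise ValueError("one read voltage per crossbar row is required")
    n_cols = len(g[0])
    return [sum(v * row[j] for v, row in zip(voltages, g)) for j in range(n_cols)]


if __name__ == "__main__":
    up = pulse_train(G_MIN, 5, optical=True)
    down = pulse_train(up[-1], 5, optical=False)
    print("potentiation:", [round(x, 3) for x in up])
    print("depression:  ", [round(x, 3) for x in down])
    weights = [[up[0], up[2]], [up[4], down[4]]]
    print("column currents (uA):", crossbar_mac([0.1, 0.2], weights))
```

With these constants the potentiation steps shrink from 1.8 µS to about 0.74 µS over five pulses, which is the kind of nonlinear writing that complicates mapping trained weights onto such devices.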

New architectures, or even new algorithms, must be introduced to accommodate the emerging materials and device technologies. For example, applying deep learning algorithms (deep neural networks (DNNs), CNNs, SNNs, etc.) to in-sensor computing is an urgent issue. SNNs offer a promising way to enhance efficiency by encoding information in spike timing and processing these time-encoded neural signals in parallel [2].
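As an illustration of time encoding, the following sketch simulates a discrete-time leaky integrate-and-fire (LIF) neuron driven by a current proportional to pixel intensity: brighter inputs cross threshold sooner and fire more often, so intensity is carried by spike timing. The LIF model is a common SNN building block chosen here for illustration; the constants, the forward-Euler update, and the proportional intensity-to-current mapping are assumptions.

```python
"""Leaky integrate-and-fire (LIF) neurons as a time encoder for pixel intensity.

Hypothetical assumptions: one discrete-time LIF neuron per pixel, input current
proportional to intensity (GAIN), forward-Euler integration, and reset to 0.
"""

from typing import List

TAU = 20.0  # membrane time constant (ms)
V_TH = 1.0  # firing threshold (arbitrary units)
DT = 1.0    # simulation time step (ms)
GAIN = 0.2  # input current per unit of normalized intensity


def lif_spike_times(current: float, steps: int = 100) -> List[float]:
    """Simulate one LIF neuron under constant input; return spike times (ms)."""
    v, spikes = 0.0, []
    for k in range(steps):
        v += DT * (-v / TAU + current)  # leak toward 0 while integrating input
        if v >= V_TH:
            spikes.append((k + 1) * DT)  # time at the end of this step
            v = 0.0                      # reset the membrane after a spike
    return spikes


def encode_pixels(intensities: List[float]) -> List[List[float]]:
    """Convert normalized pixel intensities (0..1) into spike trains."""
    return [lif_spike_times(GAIN * p) for p in intensities]


if __name__ == "__main__":
    pixels = [1.0, 0.5, 0.2]
    for p, train in zip(pixels, encode_pixels(pixels)):
        first = train[0] if train else None
        print(f"intensity {p}: first spike {first} ms, {len(train)} spikes")
```

In this sketch the dimmest pixel never fires, because its steady-state membrane value GAIN × 0.2 × TAU = 0.8 stays below V_TH; weak inputs therefore produce no events at all, loosely echoing how the retina suppresses trivial signals.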

This paper has summarized the two kinds of architecture (i.e., with computing inside or near the sensing units) used in in-sensor computing and discussed future development directions, including matching architectures with algorithms, 3D integration technology, novel material systems, and advanced devices. In sum, the ultimate goal of in-sensor computing is to achieve efficient, programmable AI hardware with low power consumption, high speed, high resolution, high recognition accuracy, and large-scale integration. To commercialize in-sensor computing technology, further research is needed in physics, materials, computer science, electronics, and biology.

Acknowledgments

The authors sincerely thank Professor Supratik Guha of the University of Chicago for useful discussions that helped improve the paper. This work is funded by the National Key Research and Development Program of China (2021YFA0716400), the National Natural Science Foundation of China (61904093, 61975093, 61991443, 61974080, 61927811, 61822404, 62175126, and 61875104), the Key Lab Program of BNRist (BNR2019ZS01005), the China Postdoctoral Science Foundation (2018M640129 and 2019T120090), the Collaborative Innovation Center of Solid-State Lighting and Energy-Saving Electronics, and the Ministry of Science and Technology of China (2021ZD0109900 and 2021ZD0109903).
