A snapshot into the future of image-guided surgery for renal cancer

Robot-assisted partial nephrectomy (RAPN) is certainly one of the most fascinating and complex urological procedures. This is attributable to its vast heterogeneity from one case to another, related to patients' anatomical variability and tumour characteristics.

Over recent years, with the aim of assisting surgeons in handling ever more difficult lesions (totally endophytic or large volume [1,2]) suitable for RAPN, several technologies have been proposed and tested, from preoperative planning to intraoperative assistance or navigation [3].

As already published, the recent advent of high-definition three-dimensional (3D) models represents the major innovation in the field of image-guided robotic surgery, potentially changing the surgeon's approach to every single renal mass [4]. In fact, notwithstanding the heterogeneity of the steps of 3D model production and the lack of standardization of the reconstruction process that leads to a faithful reproduction of the anatomy [5], 3D spatial visualisation of the patient's anatomy can improve the surgeon's perception of the lesion's complexity [6], with a general simplification of the procedure and a consequently wider attempt to perform nephron-sparing surgery [7], without compromising oncological and functional outcomes [8]. Furthermore, the possibility of intraoperatively overlapping 3D virtual images on the real anatomy allows augmented reality (AR)-guided surgery, the safety, feasibility, and accuracy of which have already been demonstrated [9].

However, despite the lack of high-level clinical validation of these new tools, technological and engineering research keeps moving forward, and a new generation of 3D kidney models is now available in our clinical practice. These models do not just represent the anatomy of the patient as a very detailed static photograph; they are enhanced with perfusion area information. Today, with the application of mathematical models, it is possible to predict the area of parenchyma supplied by every arterial branch. To accomplish this, a Voronoi diagram is used to calculate vascular dominant regions: to account for the capillaries along the arteries, each branch of the renal artery is treated as a set of seed points of the Voronoi diagram, instead of using only the end points of the arteries [10]. The 3D models can then be divided and visualized in different colors according to the different perfusion areas. These enhanced 3D models allow more precise selective clamping, no longer based empirically on the arteries presumed to supply the tumor, but guided by a mathematical demonstration of the perfusion areas. This represents a new change of perspective: to obtain proper selective clamping, we must consider not the direction of the artery towards the tumor, but the area in which the tumor grows and the arteries that supply it. The preliminary experiences presented by our group during the last edition of the Techno-Urology Meeting (http://www.technourologymeeting.com) showed how these theoretical speculations found perfect correspondence during the intervention, with effective selective clamping and a subsequent bloodless resection bed (Fig. 1).
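The Voronoi idea described above can be illustrated with a minimal sketch. It is a toy example under stated assumptions, not the method of reference [10]: the branches are straight segments, the parenchyma is a handful of points, and the names `sample_branch` and `assign_perfusion_regions` are hypothetical. Each point is labelled with the branch that owns its nearest seed, which is a discrete Voronoi partition.

```python
import math


def sample_branch(start, end, n):
    """Return n (>= 2) evenly spaced seed points along a straight toy centreline."""
    return [
        tuple(a + (b - a) * i / (n - 1) for a, b in zip(start, end))
        for i in range(n)
    ]


def assign_perfusion_regions(voxels, branch_seeds):
    """Label each voxel with the branch owning its nearest seed point.

    branch_seeds maps a branch name to a list of 3D seed points; the result
    is a discrete Voronoi partition of the voxels by arterial branch.
    """
    labels = []
    for voxel in voxels:
        best_branch, best_dist = None, math.inf
        for branch, seeds in branch_seeds.items():
            for seed in seeds:
                d = math.dist(voxel, seed)
                if d < best_dist:
                    best_branch, best_dist = branch, d
        labels.append(best_branch)
    return labels


# Hypothetical geometry (arbitrary units): branch A runs along the x-axis,
# branch B is a shorter branch running parallel to it at y = 4.
branch_a = sample_branch((0, 0, 0), (10, 0, 0), 11)
branch_b = sample_branch((0, 4, 0), (4, 4, 0), 5)
voxels = [(2, 1, 0), (3, 5, 0)]

# Seeds along the whole branch versus seeds at the branch end points only.
along_branch = assign_perfusion_regions(voxels, {"A": branch_a, "B": branch_b})
end_points_only = assign_perfusion_regions(
    voxels, {"A": branch_a[-1:], "B": branch_b[-1:]}
)
print(along_branch, end_points_only)
```

In this toy geometry the first point lies beside the course of branch A and is assigned to A when seeds are placed along the branches, whereas seeding only the end points assigns it to B. This is the kind of difference that treating the whole branch as a seed set is meant to capture.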

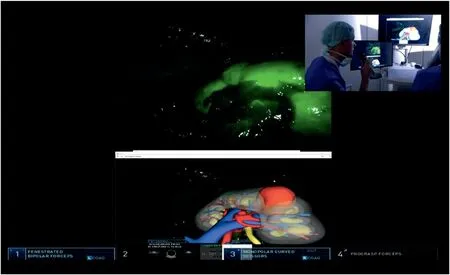

Furthermore, moving to intraoperative surgical navigation, despite the promising experiences with AR guidance, the need for a dedicated operator constantly handling a 3D mouse in order to guarantee optimal overlapping still represents the main limitation of this technology. Aiming to overcome this limitation, we explored an innovative way to achieve fully automated model overlapping using computer vision strategies, based on the identification of landmarks that can be linked to the virtual model. In particular, after the injection of indocyanine green, a specifically developed software named “IGNITE” (Indocyanine GreeN automatIc augmenTed rEality) allows the automatic anchorage of the 3D model to the real organ, leveraging the enhanced view offered by indocyanine green vision [11]. After 7 s of registration time by the software, the model is properly anchored to the real anatomy and the AR-guided procedure can be started (Fig. 2).
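The text does not describe how IGNITE computes the anchorage, so the following is only a generic sketch of landmark-based alignment, not the IGNITE implementation. It assumes that matched landmark pairs are already available in 2D (model landmarks projected into the image plane and their detected counterparts) and fits a least-squares rotation and translation in closed form. The function names `fit_rigid_2d`, `apply_rigid_2d`, and `rms_error` are hypothetical.

```python
import math


def fit_rigid_2d(model_pts, image_pts):
    """Least-squares rotation angle and translation mapping model_pts onto image_pts."""
    n = len(model_pts)
    mcx = sum(x for x, _ in model_pts) / n
    mcy = sum(y for _, y in model_pts) / n
    icx = sum(x for x, _ in image_pts) / n
    icy = sum(y for _, y in image_pts) / n
    s_dot = s_cross = 0.0
    for (mx, my), (ix, iy) in zip(model_pts, image_pts):
        ax, ay = mx - mcx, my - mcy  # centred model landmark
        bx, by = ix - icx, iy - icy  # centred image landmark
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated model centroid onto the image centroid.
    tx = icx - (c * mcx - s * mcy)
    ty = icy - (s * mcx + c * mcy)
    return theta, (tx, ty)


def apply_rigid_2d(pts, theta, t):
    """Rotate pts by theta (radians) about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in pts]


def rms_error(pts_a, pts_b):
    """Root-mean-square distance between corresponding points."""
    return math.sqrt(
        sum(math.dist(a, b) ** 2 for a, b in zip(pts_a, pts_b)) / len(pts_a)
    )


# Hypothetical landmarks: the "image" landmarks are the model landmarks
# rotated by 30 degrees and shifted, so the fit should recover that pose.
model = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
image = apply_rigid_2d(model, math.radians(30), (5.0, -2.0))

theta, t = fit_rigid_2d(model, image)
residual = rms_error(apply_rigid_2d(model, theta, t), image)
print(math.degrees(theta), t, residual)
```

A real intraoperative system would additionally have to handle 3D pose, organ deformation, and landmark detection itself. The sketch covers only the final alignment step once correspondences are known.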

In the near future, the advent of artificial intelligence, with different deep learning techniques such as the application of neural networks, will allow further improvement in the precision of automatic overlapping, with real-time navigation during the different dynamic phases of the procedure [12].

Figure 1 Three-dimensional models with different coloured perfusion areas.

Figure 2 Augmented reality robot-assisted partial nephrectomy with IGNITE software.

Finally, the integration of different technologies, such as target molecules or monoclonal antibodies, will allow the design of novel near-infrared fluorescence imaging probes able to identify residual cancer cells in the resection bed, and the advent of new artificial biomaterials enhancing the performance of the robotic camera will allow integrated platforms that improve the results of AR surgery.

Author contributions

Manuscript writing: Enrico Checcucci, Gabriele Volpi.

Study concept and design: Enrico Checcucci, Daniele Amparore.

Supervision: Francesco Porpiglia.

Conflicts of interest

The authors declare no conflict of interest.

Enrico Checcucci*

Department of Surgery, Candiolo Cancer Institute, FPO-IRCCS, Candiolo, Turin, Italy

Department of Oncology, Division of Urology, University of Turin, Turin, Italy

Uro-technology and SoMe Working Group of the Young Academic Urologists (YAU) Working Party of the European Association of Urology (EAU), Arnhem, the Netherlands

Daniele Amparore

Asian Journal of Urology, 2022, Issue 3
