Artificial intelligence can assist with diagnosing retinal vein occlusion

Qiong Chen, Wei-Hong Yu, Song Lin, Bo-Shi Liu, Yong Wang, Qi-Jie Wei, Xi-Xi He, Fei Ding, Gang Yang, You-Xin Chen, Xiao-Rong Li, Bo-Jie Hu

1Tianjin Key Laboratory of Retinal Functions and Diseases, Tianjin Branch of National Clinical Research Center for Ocular Disease, Eye Institute and School of Optometry, Tianjin Medical University Eye Hospital, Tianjin 300384, China

2Key Lab of Ocular Fundus Diseases, Chinese Academy of Medical Sciences, Department of Ophthalmology, Peking Union Medical College Hospital, Chinese Academy of Medical Sciences & Peking Union Medical College, Beijing 100032, China

3Vistel AI Lab, Visionary Intelligence Ltd, Beijing 100081, China

4School of Information, Renmin University of China, Beijing 100081, China

Abstract

● KEYWORDS: artificial intelligence; disease recognition; lesion segmentation; retinal vein occlusion

INTRODUCTION

Retinal vein occlusion (RVO) is the second most common retinal vascular disease after diabetic retinopathy (DR)[1]. If RVO is not treated in a timely manner, it can lead to serious complications that cause severe visual impairment[2-3]. According to the meta-analysis performed by Song et al[1], the overall prevalence rates of RVO, branch retinal vein occlusion (BRVO), and central retinal vein occlusion (CRVO) were 0.77%, 0.64%, and 0.13%, respectively, for individuals 30 to 89 years of age in 2015. Furthermore, the incidence of BRVO was approximately five times higher than that of CRVO. Moreover, studies have shown that the incidence of RVO increases with age[1,4].

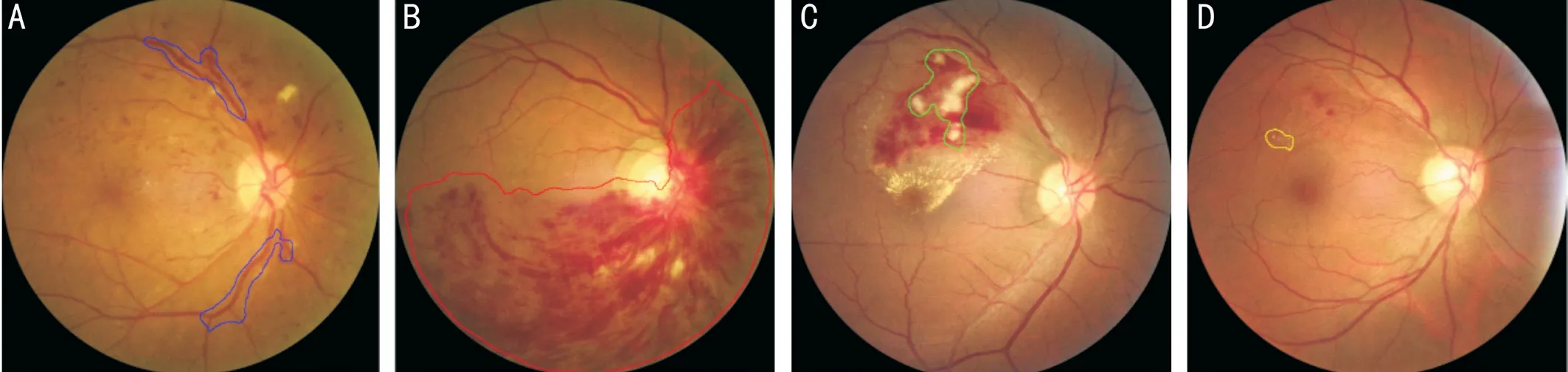

Figure 1 Schematic diagram of the recognized lesions A: Abnormally dilated and tortuous blood vessel; B: Flame-shaped hemorrhage; C: Cotton-wool spots; D: Hard exudate.

Patients with RVO may exhibit superficial retinal hemorrhage, abnormally dilated and tortuous blood vessels, cotton-wool spots, and optic nerve edema caused by increased venous pressure. The interruption of blood flow can cause a variety of complications and lead to blindness. The most serious complications include macular edema, macular ischemia, optic neuropathy, vitreous hemorrhage, neovascular glaucoma, and traction retinal detachment[5]. Given the severity of RVO, the lack of awareness of the disease is notable. Most RVO patients do not present to a physician until they have severe ocular symptoms.

Because of advances in deep learning (DL) methods, software, hardware, and large-scale datasets, the field of artificial intelligence (AI) has experienced tremendous progress since the 2010s[6]. AI techniques have been applied in various fields and have improved on traditional methods. Moreover, large quantities of medical data have been collected and converted to electronic formats. With the improvements in AI techniques and the collection of medical data, AI has also progressed in the medical field. The application of AI to ophthalmic diseases has focused mainly on fundus diseases, and most studies have focused on DR[7-8], age-related macular degeneration[9], retinopathy of prematurity, changes in the fundus with glaucoma, and other similar topics. However, there have been few reports of the diagnosis of RVO with AI assistance[10].

Our study used the lesions in color fundus photographs (CFPs) to identify RVO. Previous studies either identified BRVO alone without lesion segmentation or assessed the treatment response and prognosis of RVO using optical coherence tomography (OCT) after RVO had already been clearly diagnosed. In contrast, this study provides evidence-based research results regarding RVO evaluated using AI.

This study aimed to establish an RVO identification model based on lesion segmentation. Applied remotely, such an AI model could allow for early diagnosis and early treatment of RVO in areas with unevenly distributed and insufficient medical resources, improve the prognosis for RVO patients, and reduce the consumption of medical resources.

SUBJECTS AND METHODS

Ethical Approval This study was approved by the Ethics Committee of the Tianjin Medical University Eye Hospital and followed the tenets of the Declaration of Helsinki. Patients were informed of the advantages and disadvantages of the study and agreed to allow the use of their data for research purposes.

Acquisition and Pretreatment of Color Fundus Photographs All CFPs were of the posterior pole and obtained at 45 degrees using a Canon CR2 nonmydriatic fundus camera (Canon, Tokyo, Japan). All collected CFPs were deidentified (ImageMagick; https://imagemagick.org/) before further processing.

Selection and Training of Ophthalmologists Three senior ophthalmologists were involved in the photograph annotation process. The selection criteria for these ophthalmologists were as follows: performed clinical work involving fundus ophthalmopathy for at least 10y; had sufficient time to complete the study; and had a technical title equivalent to attending physician or higher rank. The three senior ophthalmologists included two attending physicians and one chief physician. The ophthalmologists were trained together.

To differentiate CFP quality, photographs with clear boundaries of the optic disc, cup-to-disc ratio, macular morphology, vascular morphology, retinal color, and lesion morphology were assigned to the good quality group, photographs with vague boundaries but clear lesion shapes were assigned to the acceptable group, and all other photographs were assigned to the unacceptable group. Ophthalmologists were required to diagnose each CFP as CRVO, BRVO, non-RVO abnormalities, or normal. Four types of lesions (abnormally dilated and tortuous blood vessels, cotton-wool spots, flame-shaped hemorrhages, and hard exudates) were marked by ellipses or polygons using online labeling software (Figure 1).

For each CFP, the ophthalmologists assessed the photograph quality, diagnosed the disease exhibited by the CFP, and marked the lesions. CFPs with good quality were considered eligible for this study. All photographs were reviewed by the two attending physicians, and disagreements were resolved by the chief physician. If a photograph was not reviewed by the chief physician, then the final lesion annotations were the union of the annotations of the two attending physicians. Otherwise, the final lesion annotations were those of the chief physician.
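The rule for deriving the final lesion annotations of a photograph can be sketched in Python as follows. This is a minimal illustration rather than the labeling software used in the study: the function name `final_annotations` and the representation of each marked lesion as a string identifier are assumptions made for the example (the actual annotations were ellipses or polygons).

```python
from typing import FrozenSet, Optional


def final_annotations(
    attending_a: FrozenSet[str],
    attending_b: FrozenSet[str],
    chief: Optional[FrozenSet[str]] = None,
) -> FrozenSet[str]:
    """Merge the lesion annotations of one photograph.

    Each lesion is represented by a hypothetical string identifier.
    `chief` is None when the chief physician did not review the photograph.
    """
    if chief is not None:
        # The chief physician reviewed the photograph: those annotations are final.
        return chief
    # No chief review: use the union of the two attending physicians' annotations.
    return attending_a | attending_b
```

For example, `final_annotations(frozenset({"hemorrhage_1"}), frozenset({"exudate_1"}))` keeps both lesions, whereas passing a third argument returns only the chief physician's annotations.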

Dataset Creation We obtained 600 eligible CFPs from 481 patients. Additionally, 8000 normal CFPs were randomly selected from the Kaggle Diabetic Retinopathy Detection Competition (Kaggle: Diabetic retinopathy detection. https://www.kaggle.com/c/diabetic-retinopathy-detection; 2015). All 8600 images were randomly divided into the training set, validation set, and test set in a 2:1:1 ratio. Images from the same patient were placed in the same subset (Table 1). Images containing different lesions in the three subsets are described in Table 2.
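A patient-level split of this kind can be sketched as follows. This is a hedged illustration, not the authors' procedure: the function name `split_by_patient`, the `(image_id, patient_id)` input format, and the choice to apply the 2:1:1 ratio to patients rather than to images are all assumptions made for the example.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def split_by_patient(
    images: Sequence[Tuple[str, str]],  # hypothetical (image_id, patient_id) pairs
    ratios: Tuple[int, int, int] = (2, 1, 1),
    seed: int = 0,
) -> Dict[str, List[str]]:
    """Randomly split images so that each patient appears in one subset only."""
    by_patient = defaultdict(list)
    for image_id, patient_id in images:
        by_patient[patient_id].append(image_id)

    # Shuffle patients (not images) so that a patient is never split across subsets.
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)

    total = sum(ratios)
    n_train = len(patients) * ratios[0] // total
    n_val = len(patients) * ratios[1] // total
    groups = {
        "train": patients[:n_train],
        "validation": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],
    }
    return {
        name: [img for p in members for img in by_patient[p]]
        for name, members in groups.items()
    }
```

Because the ratio is applied to patients, the image counts follow 2:1:1 only approximately when patients contribute different numbers of photographs.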

Neural Networks for Retinal Vein Occlusion Recognition We compared four popular convolutional neural networks: ResNet-50[11], Inception-v3[12], DenseNet-121[13], and SE-ResNeXt-50[14]. All of these networks have shown strong performance.

As convolutional neural networks go deeper, they can extract more complex features; however, training becomes more difficult. Simply stacking convolutional layers to create deeper networks does not necessarily improve performance. ResNet-50 is designed to resolve this problem. It adopts residual blocks in which a shortcut connects the input of a block directly to its output, which decreases the training difficulty.

Inception-v3 adopts manually designed branches with different convolution kernel sizes. This design allows for the extraction of features with diverse sizes. During our study, it was better able to extract features of RVO-related lesions of different sizes.

DenseNet-121 was designed based on ResNet. Instead of making a connection between the first and last layers, DenseNet-121 connects every pair of layers within a block.

Convolutional neural networks often output hundreds of features, but not all features have the same importance. To account for this, SE-ResNeXt-50 has a squeeze-and-excitation block with an individual branch that learns the weights of different features.

Neural Networks for Lesion Segmentation Four convolutional neural networks were used to perform segmentation and were compared: FCN-32s[15], DeepLab-v3[16], DANet[17], and Lesion-Net-8s[18].

FCN-32s introduces deconvolution layers for feature maps and is considered a milestone in the field of image segmentation. DeepLab-v3 adopts an encoder-decoder structure using atrous convolution; therefore, its architecture is useful for segmenting objects with diverse sizes. In other networks, individual pixels and features are only weakly related to one another. In contrast, DANet uses spatial attention and channel-wise attention to connect every pixel and feature. All these networks were originally designed for natural image segmentation. Because retinal lesions are different from natural objects, Lesion-Net-16s was specially designed to segment retinal lesions, and its adjustable and expansive path is better suited for the unclear boundaries of retinal lesions.
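To make the idea of atrous (dilated) convolution concrete, the following one-dimensional sketch shows how the dilation rate widens the receptive field of a kernel without adding weights. It illustrates the operation only and is not part of DeepLab-v3 or of the models trained in this study; the function name `atrous_conv1d` and the pure-Python formulation are assumptions made for the example.

```python
from typing import List, Sequence


def atrous_conv1d(
    signal: Sequence[float], kernel: Sequence[float], rate: int = 1
) -> List[float]:
    """1-D atrous convolution without padding (cross-correlation form).

    With rate=1 this is an ordinary convolution; with rate=r the kernel
    taps are spaced r samples apart.
    """
    # Number of input samples covered by one output value.
    span = (len(kernel) - 1) * rate + 1
    return [
        sum(w * signal[start + i * rate] for i, w in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]
```

With a three-tap kernel, `rate=1` covers 3 input samples per output value and `rate=2` covers 5, which is why stacking different rates helps capture objects of different sizes.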

Network Training We first used the training set to train the model and selected the best network structure and configuration based on the results of the validation set. Here, we report the performance on the test set. This approach, which is standard in the field of AI, helps the developed network generalize to unseen data as much as possible. All the experiments, including model training, validation, and testing, were performed using Python 3.7.5 and PyTorch 1.0.0 on an Ubuntu 18.04 system with two NVIDIA GTX 1080Ti graphics processing units.

Table 1 Profile of the dataset n

Table 2 Images with different lesions n

RESULTS

Retinal Vein Occlusion Recognition Results The sensitivity, specificity, harmonic mean of sensitivity and specificity, which is referred to as F1 [F1=2×sensitivity×specificity/(sensitivity+specificity)], and area under the curve (AUC) for RVO recognition were evaluated.
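The metrics defined above can be computed as in the following sketch. The function names are hypothetical and chosen for this example. Note that F1 as defined here combines sensitivity and specificity, which differs from the more common F1 score that combines precision and recall.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Fraction of positive images that were recognized: TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Fraction of negative images that were rejected: TN / (TN + FP)."""
    return tn / (tn + fp)


def f1_from_sens_spec(sens: float, spec: float) -> float:
    """Harmonic mean of sensitivity and specificity, as defined in the text."""
    if sens + spec == 0:
        return 0.0  # avoid division by zero when both values are 0
    return 2 * sens * spec / (sens + spec)
```

For example, a sensitivity of 1.00 and a specificity of 0.97 give `f1_from_sens_spec(1.00, 0.97)`, which rounds to 0.98.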

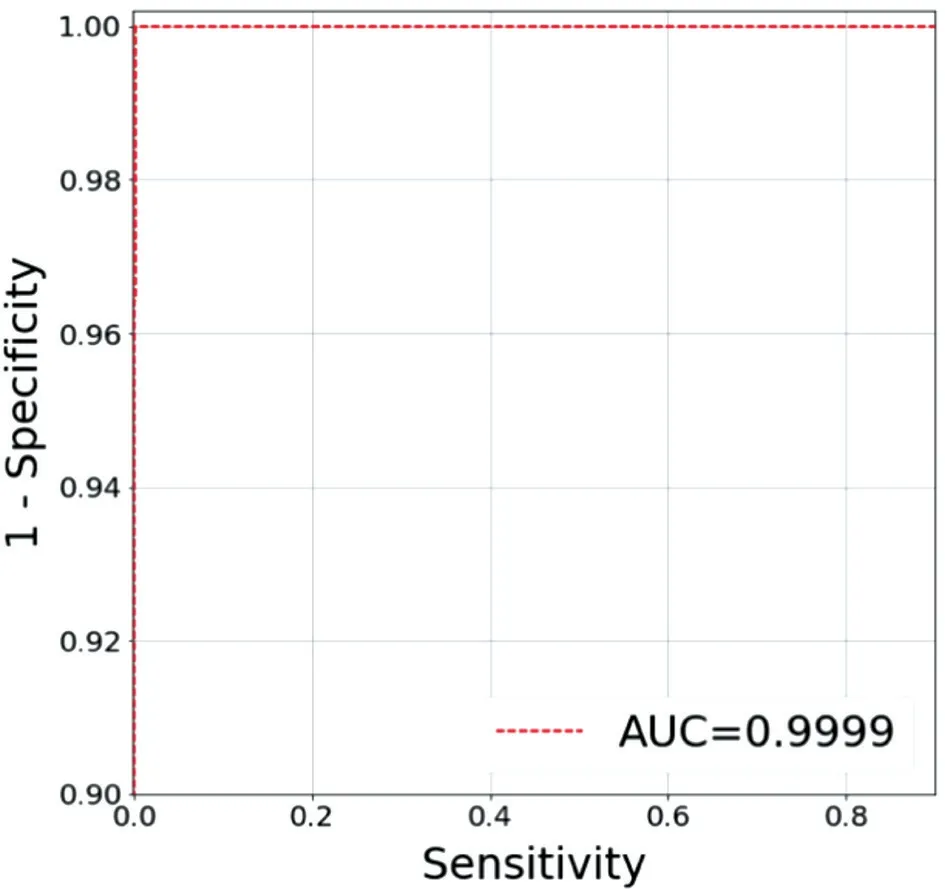

We compared the results of the four models and found that Inception-v3 had higher sensitivity, specificity, F1, and AUC values. The Inception-v3 model (sensitivity, 1.00; specificity, 0.97; F1, 0.98; AUC, 0.99) had a better profile for normal retina identification than the other models. Performance improved further when BRVO was the target of this model, with higher sensitivity (1.00), specificity (1.00), F1 (1.00), and AUC (1.00). When the Inception-v3 model was used for CRVO and other diseases, the sensitivity, specificity, F1, and AUC values were 0.94 and 0.76, 1.00 and 1.00, 0.97 and 0.86, and 1.00 and 0.97, respectively. We calculated the mean results for all types of CFPs using the Inception-v3 model (sensitivity, 0.93; specificity, 0.99; F1, 0.95; AUC, 0.99) and the ResNet-50 model (sensitivity, 0.85; specificity, 0.97; F1, 0.89; AUC, 1.00). The mean values of the DenseNet-121 and SE-ResNeXt-50 models were also calculated; however, none of the three other models performed as well as the Inception-v3 model. Therefore, we used the Inception-v3 model for disease identification (Table 3). The AUC results are shown in Figures 2 and 3.

Figure 2 Receiver-operating characteristic curve for branch retinal vein occlusion using the Inception-v3 model The area under the curve (AUC) is 0.9999.

Figure 3 Receiver-operating characteristic curve for central retinal vein occlusion using the Inception-v3 model The area under the curve (AUC) is 0.9983.

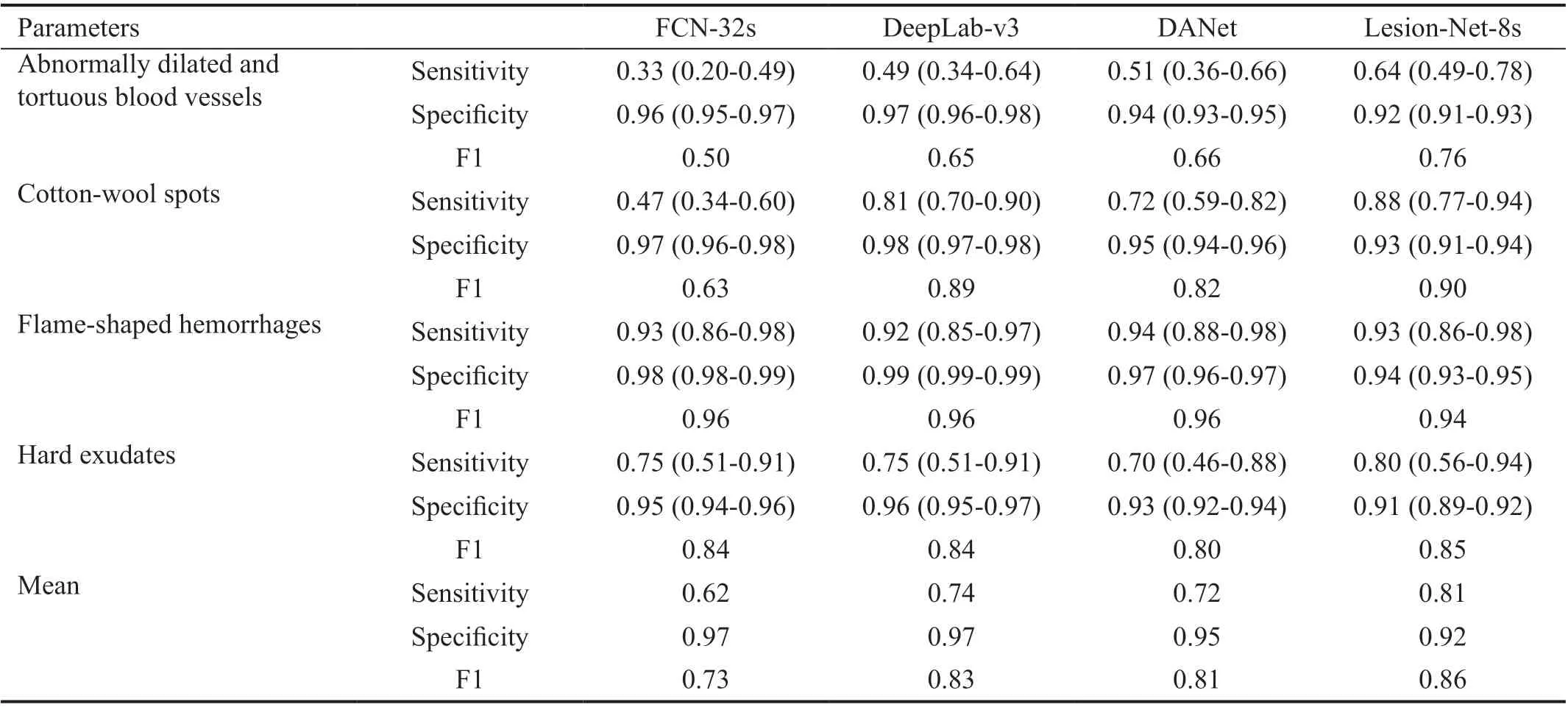

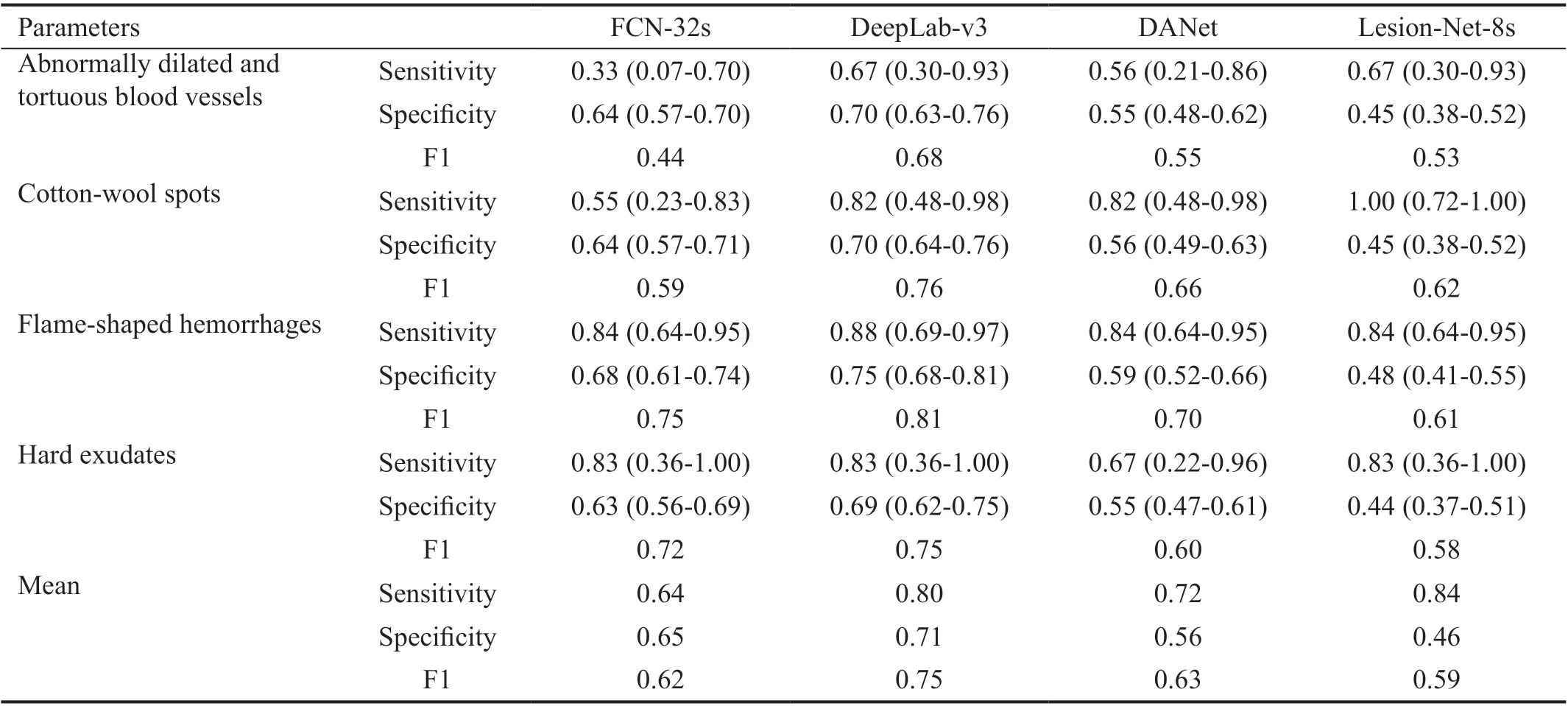

Lesion Segmentation Results We evaluated the image-level sensitivity, specificity, and F1 of the lesions. For a specific type of lesion, the image result was considered true positive (TP) if there were both physician annotations and model predictions and their intersection over union (IoU) was more than 0.2. The image result was considered false negative (FN) if there were physician annotations and the IoU between the annotations and predictions was less than 0.2. The image result was considered false positive (FP) if there were predictions but no annotations. The image result was considered true negative (TN) if there were neither predictions nor annotations. We calculated the values for each lesion type and reported the mean value. Because lesions on CFPs may not have clear boundaries against the background or against other lesions of the same type, we did not calculate pixel-level values.
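The image-level rules above can be sketched as follows for one lesion type in one image. This is an illustration under stated assumptions, not the evaluation code used in the study: masks are represented as sets of pixel coordinates, the names `iou` and `image_level_outcome` are hypothetical, and an IoU of exactly 0.2 (which the rules leave unspecified) is treated here as FN.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]  # (row, column) of a pixel inside a lesion mask


def iou(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Intersection over union of two pixel sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def image_level_outcome(
    annotated: Set[Pixel], predicted: Set[Pixel], threshold: float = 0.2
) -> str:
    """Classify one image for one lesion type as TP, FN, FP, or TN."""
    if annotated:
        # Annotations exist: the prediction must overlap them sufficiently.
        # Assumption: IoU exactly equal to the threshold counts as FN.
        if predicted and iou(annotated, predicted) > threshold:
            return "TP"
        return "FN"
    # No annotations: any prediction is a false alarm.
    return "FP" if predicted else "TN"
```

Counting these outcomes over all test images yields the TP, FN, FP, and TN totals from which image-level sensitivity and specificity are derived for each lesion type.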

Table 4 Lesion segmentation results 95%CI

Table 5 Profile of the external test set

Similarly, we compared the lesion segmentation results of the four models and found that the DeepLab-v3 model was better able to identify abnormally dilated and tortuous blood vessels (sensitivity, 0.49; specificity, 0.97; F1, 0.65) and cotton-wool spots (sensitivity, 0.81; specificity, 0.98; F1, 0.89). Furthermore, its sensitivity, specificity, and F1 values were 0.92, 0.99, and 0.96, respectively, for flame-shaped hemorrhage, and 0.75, 0.96, and 0.84, respectively, for hard exudates. The mean values (sensitivity, 0.74; specificity, 0.97; F1, 0.83) obtained by the DeepLab-v3 model using all types of CFPs, compared with those of the other three models, indicated that it was the superior model (Table 4).

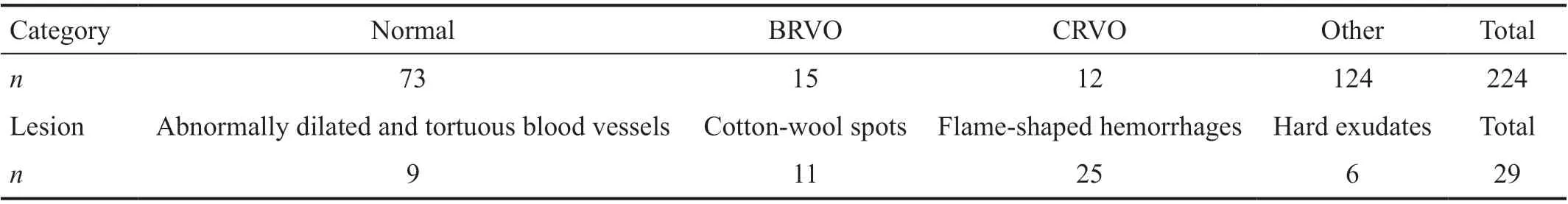

External Test Set At the beginning of the study, we could not obtain the patients’ baseline information because the number of CFPs was too large. However, to assess the stability and applicability of the model, we gathered additional CFPs as an external test set. The external test set consisted of 224 CFPs from 130 patients (44.3% males and 55.7% females) with an average age of 56.96±12.70y. Table 5 shows detailed information regarding the 224 CFPs and different lesions.

We validated the effectiveness of each RVO recognition model using the external test set. The Inception-v3 model exhibited good performance when normal CFPs were used (sensitivity, 0.75; specificity, 0.85; F1, 0.80; AUC, 0.87). For BRVO and CRVO, the sensitivity, specificity, F1, and AUC values were 0.80 and 0.92, 0.98 and 0.98, 0.88 and 0.95, and 0.95 and 0.99, respectively. We also evaluated the recognition ability of the Inception-v3 model for all CFPs (sensitivity, 0.81; specificity, 0.90; F1, 0.85; AUC, 0.91) and determined that, compared with the other models, its performance using the external test set was good and it had good generalizability (Table 6).

Using the external test set, the DeepLab-v3 model also exhibited good values for identifying abnormally dilated and tortuous blood vessels (sensitivity, 0.67; specificity, 0.70; F1, 0.68) and cotton-wool spots (sensitivity, 0.82; specificity, 0.70; F1, 0.76). For the other two lesion types, flame-shaped hemorrhages and hard exudates, the sensitivity, specificity, and F1 values were 0.88 and 0.83, 0.75 and 0.69, and 0.81 and 0.75, respectively. Notably, the total mean sensitivity, specificity, and F1 values were 0.80, 0.71, and 0.75, respectively, thereby further demonstrating that the DeepLab-v3 model had better performance and generalizability (Table 7).

DISCUSSION

Most studies of AI and RVO also involved OCT, and most of them focused on the quantitative analysis of RVO nonperfusion areas and retinal edema fluids; therefore, these studies required a clear diagnosis of RVO. Nagasato et al[19] compared the abilities of a deep convolutional neural network, a support vector machine, and seven ophthalmologists to detect retinal nonperfusion areas using OCT-angiography images. Rashno et al[20] presented a fully automated method that used AI to segment and detect subretinal fluid, intraretinal fluid, and pigment epithelium detachment using OCT B-scans of subjects with age-related macular degeneration and RVO or DR. However, there have been relatively few studies of the AI-assisted diagnosis of RVO, and even fewer studies of the applications of CFPs and AI for diagnosing RVO. Anitha et al[21] developed an artificial neural network-based pathology detection system using retinal images to distinguish non-proliferative DR, CRVO, central serous retinopathy, and choroidal neovascularization membranes. The system was highly sensitive and specific, but their study was restricted to four fixed diseases, and the sensitivity and specificity of the system when applied to an unrestricted set of photographs were unknown. In contrast, the method proposed by our study was based on an open photograph set, and the sensitivity and specificity were stable.

Table 6 RVO recognition results using the external test set 95%CI

Table 7 Lesion segmentation results using the external test set 95%CI

This study indicated that our AI algorithm exhibited good performance when detecting RVO and corresponding lesions. Furthermore, our study differs from others because it not only identified RVO but also detected lesions. The results can help ophthalmologists and patients understand the decisions of the AI model. Notably, they can help patients understand the severity of RVO and related eye problems so they can obtain improved counseling, management, and follow-up[6]. Furthermore, the models described could be used to guide the diagnosis.

This study had some limitations. We collected baseline information from patients at the start of the study, including sex, age, and history of systemic diseases (e.g., high blood pressure, diabetes, hyperlipidemia, and atherosclerosis). Unfortunately, most patients were from outpatient services, and their baseline information was not always sufficient. The inclusion of baseline information may allow for more consistent results regarding RVO epidemiological characteristics. Although it was difficult to collect baseline information, we tried to control the image consistency by removing the identification information from CFPs.

In the future, AI methods could be used to diagnose RVO and identify and treat RVO early during the disease process to improve the prognosis and reduce the use of medical resources. During this study, the proposed method was able to detect RVO and recognize related lesions, thus providing evidence of the ability to diagnose RVO with the assistance of AI. This approach provides a new concept for future applications of AI in medicine, especially in regions with limited access to retinal specialists for various reasons such as economic issues or medical resource allocation.

ACKNOWLEDGEMENTS

Foundations: Supported by Tianjin Science and Technology Project (No.BHXQKJXM-SF-2018-05); Tianjin Clinical Key Discipline (Specialty) Construction Project (No.TJLCZDXKM008).

Conflicts of Interest: Chen Q, None; Yu WH, None; Lin S, None; Liu BS, None; Wang Y, None; Wei QJ, None; He XX, None; Ding F, None; Yang G, None; Chen YX, None; Li XR, None; Hu BJ, None.

International Journal of Ophthalmology, 2021, Issue 12
