Perceptions of emergency medicine residents on the quality of residency training in the United States and Saudi Arabia

Ahmad Aalam, Mark Zocchi, Khalid Alyami, Abdullah Shalabi, Abdullah Bakhsh, Asaad Alsufyani, Abdulrahman Sabbagh, Mohammed Alshahrani, Jesse M. Pines

1 Department of Emergency Medicine, King Abdulaziz University, Jeddah, Saudi Arabia

2 Department of Emergency Medicine, The George Washington University, Washington, DC, USA

3 Center for Healthcare Innovation and Policy Research, The George Washington University, Washington, DC, USA

4 Department of Pediatrics, University of Dammam, Dammam, Saudi Arabia

5 Department of Emergency Medicine, King Abdulaziz Medical City, National Guard Hospital, Jeddah, Saudi Arabia

6 Department of Emergency Medicine, University of Toledo, Toledo, Ohio, USA

7 Department of Emergency Medicine, King Fahad Medical City, Riyadh, Saudi Arabia

INTRODUCTION

A central question for applicants to emergency medicine residency training programs is the quality of their educational experience and environment. Emergency medicine residency programs differ in educational quality, patient care experiences, faculty, ancillary support (e.g., nursing staff), workplace environment, how evaluations are conducted, and personal support.[1] The educational environment includes the physical environment (comforts, food, accommodations), the emotional environment (constructive feedback, security, support, and a bullying-free environment), and the intellectual environment (learning through patient care, evidence-based teaching, motivation, active participation by learners, and planned education).[2]

Many physicians who are citizens of Saudi Arabia (SA) have the option to be supported by the King Abdullah scholarship program, which funds United States (USA) and Canadian university and hospital programs to train SA physicians. As of 2016, USA emergency medicine residencies have 1,896 training slots in 174 programs compared with 133 training slots in 3 programs in SA.[3,4] Many physicians around the world and in SA seek training in the USA for its well-developed EM residency programs. In 2014, the Saudi Arabian Cultural Mission (SACM) matched 88 Saudi physicians in USA residency programs, with 11 physicians entering emergency medicine.[5] Therefore, comparing the quality of EM training in USA and SA residency training programs is important to help physicians who are choosing whether to train in the USA or SA. It is also helpful in identifying differences in educational environments to allow emergency physician educators to identify targeted areas for improvement in both countries. To our knowledge, no studies have directly compared the quality of the educational environment of emergency medicine training programs in the USA and SA.

In this study, we compare educational environments in emergency medicine training programs between a select group of residency programs in the USA that have a history of training Saudi Arabian physicians and all three emergency medicine residency programs in SA using a modified version of a validated instrument, the Postgraduate Hospital Educational Environment Measure (PHEEM) survey. In the paper, we first compare differences in the overall PHEEM score across the two countries, then directly compare subscales and discuss the implications of the findings.

METHODS

Study design and survey development

A cross-sectional survey study was conducted using an adapted version of the PHEEM survey from April 2015 through June 2016 to compare the educational environment in emergency medicine residency programs in SA and select programs in the USA. The study population was emergency medicine residents in 6 residency programs (all 3 programs in SA and 3 in the USA) who had finished at least 6 months of their residency training at the time of survey completion. The study was approved by the Institutional Review Board at George Washington University.

The original PHEEM survey was developed in the United Kingdom (UK) to evaluate the clinical learning environment for junior doctors.[6] The survey has subsequently been validated in countries including Brazil, Greece, Singapore, Australia, Chile and Iran, and has also been validated in SA in several studies.[7-15]

Specifically, Al-Shiekh et al[15] validated the PHEEM tool in a Saudi Arabian teaching hospital, with a sample of 193 interns and residents. The results showed high internal consistency with a Cronbach's alpha score of 0.936. Binsaleh et al[12] used the PHEEM in multiple Saudi centers and also found high internal consistency with a Cronbach's alpha of 0.892. BuAli et al[11] used the PHEEM tool to assess the pediatric educational environment in six teaching hospitals in Saudi Arabia and showed overall positive ratings with room for improvement. Specifically, racism and sexual discrimination were issues that required targeted improvement.

The PHEEM assesses three main domains of the learning environment: perceptions of teaching, perceptions of autonomy, and perceptions of social support.[6,16] The PHEEM survey contains 40 statements that each resident answers about the program on a 5-point Likert scale. Responses of strongly agree, agree, uncertain, disagree, and strongly disagree were scored as 4, 3, 2, 1, and 0 points, respectively, except for 4 negative statements (7, 8, 11, and 13), for which scoring was reversed. The maximum score from the 40-statement survey is 160. To interpret the results of PHEEM, the following qualitative categories are used: 0–40 is very poor, 41–80 is plenty of problems, 81–120 is more positive than negative but there is room for improvement, and 121–160 is excellent.[6]
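The scoring rules described above can be illustrated with a minimal sketch. This is not the study's own code; the function and constant names (`score_item`, `total_score`, `interpret`, `REVERSED_ITEMS`, `POINTS`) are hypothetical and chosen only for illustration, and the sketch assumes each respondent answered all 40 statements.

```python
from typing import Sequence

# Negatively worded statements that are reverse-scored (1-based numbering),
# as listed in the text. The constant name is a hypothetical choice.
REVERSED_ITEMS = {7, 8, 11, 13}

# Points for each Likert response, as described in the text.
POINTS = {
    "strongly agree": 4,
    "agree": 3,
    "uncertain": 2,
    "disagree": 1,
    "strongly disagree": 0,
}


def score_item(item_number: int, response: str) -> int:
    """Return 0-4 points for one statement, reversing the negative items."""
    points = POINTS[response.lower()]
    return 4 - points if item_number in REVERSED_ITEMS else points


def total_score(responses: Sequence[str]) -> int:
    """Sum the 40 item scores into an overall score between 0 and 160."""
    if len(responses) != 40:
        raise ValueError("expected responses to all 40 statements")
    return sum(score_item(i, r) for i, r in enumerate(responses, start=1))


def interpret(total: int) -> str:
    """Map an overall integer score to its qualitative category."""
    if not 0 <= total <= 160:
        raise ValueError("overall score must be between 0 and 160")
    if total <= 40:
        return "very poor"
    if total <= 80:
        return "plenty of problems"
    if total <= 120:
        return "more positive than negative but there is room for improvement"
    return "excellent"
```

For example, a respondent who answers "agree" to every statement earns 3 points on each of the 36 regular items and 1 point on each of the 4 reversed items, for an overall score of 112.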

Because the PHEEM was developed in the UK, some of the language does not translate directly to USA or SA training settings. We therefore modified the original PHEEM to include more familiar language, and in some cases abbreviations were expanded into their full form. For example, in one statement the abbreviation (post) was replaced with its full form (position). In another, the original statement (I had an informative induction programme) was replaced with a simpler version (I had an informative orientation program). The modified survey items were reviewed by a research group of 15 SA and USA physicians, who filled out the updated instrument during a dedicated session and then provided additional feedback and suggested improvements to the wording of the statements. The study team integrated these suggestions and finalized the survey instrument.

Data collection

Data were collected from 6 EM training programs. All emergency medicine training programs in SA at the time of the study were included (3 programs: Central, West, and East). The three programs in the USA included George Washington University, Emory University, and University of Toledo. The USA training programs were chosen because they have a history of training SA residents. In each training program, a local contact administered the survey. Permission to conduct the study was received from every program director. The local contact distributed hard copies of the survey face-to-face during a teaching conference, contacted those who did not attend, and emailed an electronic version to those residents who were not available in person. During this process, we explicitly excluded other providers who were attending the same conferences, including emergency medicine residents from other programs and medical students. In one USA program, gift cards of $5 were given to help improve the response rate. No personal identifiers were collected to maintain respondent anonymity. Survey responses were not shared with program directors or any other personnel in the residency programs. All physical and electronic survey data were compiled in a Microsoft Excel (Microsoft Corp, Redmond, WA) database.

Data analysis

Mean scores were calculated overall and by subscale (autonomy, teaching, and social support). Mean scores by program, country, and post-graduate year (PGY) were also calculated. Chi-squared tests compared frequencies in the interpretation of PHEEM scores for the overall score and subscales between the two countries. Independent-sample t-tests compared mean scores for each statement, each subscale, and the overall PHEEM score between the two countries. One-way analysis of variance (ANOVA) was used to determine whether mean scores differed across programs and PGY. A P-value of <0.05 was considered to be statistically significant. A small number of questions were skipped by respondents (25 of 8,760). For missing data, the respondent's mean overall score, rounded to the nearest integer, was used for imputation. All analyses were conducted using Stata version 13 (College Station, Texas, USA).
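The imputation rule and the two-sample comparison described above can be sketched as follows. This is an illustrative reconstruction, not the authors' Stata code. It assumes that imputation was applied to already-scored items, that ties at .5 round up, and that the t-test used the pooled-variance (equal variance) form; the paper does not state any of these details. The function names are hypothetical.

```python
import math
from statistics import mean, variance
from typing import List, Optional, Sequence


def round_half_up(x: float) -> int:
    """Round to the nearest integer, with .5 rounding up (an assumption)."""
    return math.floor(x + 0.5)


def impute_missing(items: Sequence[Optional[int]]) -> List[int]:
    """Fill skipped items (None) with the respondent's own rounded mean."""
    answered = [v for v in items if v is not None]
    if not answered:
        raise ValueError("respondent answered no items")
    fill = round_half_up(mean(answered))
    return [fill if v is None else v for v in items]


def pooled_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Student's t statistic for two independent samples, pooled variance."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
```

The statistic would be compared against a t distribution with `na + nb - 2` degrees of freedom to obtain a P-value; that step is omitted here to keep the sketch free of external dependencies. As a consistency check on the reported denominator, 219 returned surveys of 40 statements each give 8,760 item responses.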

RESULTS

A total of 219 surveys were returned of 260 residents across the six programs for a response rate of 84%. The response rate for SA programs was 83% compared to 86% for USA programs. Response rates for individual programs ranged from 79% to 100%.

Table 1 displays the frequencies of scores on each subscale and the overall score, categorized based on the qualitative PHEEM interpretations. Very few respondents from either country had negative perceptions of their program. However, differences were found in perceptions of role autonomy (P<0.001) and perceptions of teaching (P=0.005), with more USA respondents rating their programs as excellent (45% and 55%, respectively) compared to SA respondents (19% and 31%). Perceptions of social support did not differ between the two countries (P=0.243).

Table 1. Frequencies of scores in each subscale and the overall scores in Saudi Arabia and the United States of America categorized based on their PHEEM interpretation

Figure 1 displays the mean score for each statement. The mean score of several statements was statistically different between the two countries. In most cases, the USA programs had higher scores. The one exception was the statement “There is an informative junior doctors’ handbook”, where residents in SA programs indicated higher agreement than those in the USA programs.

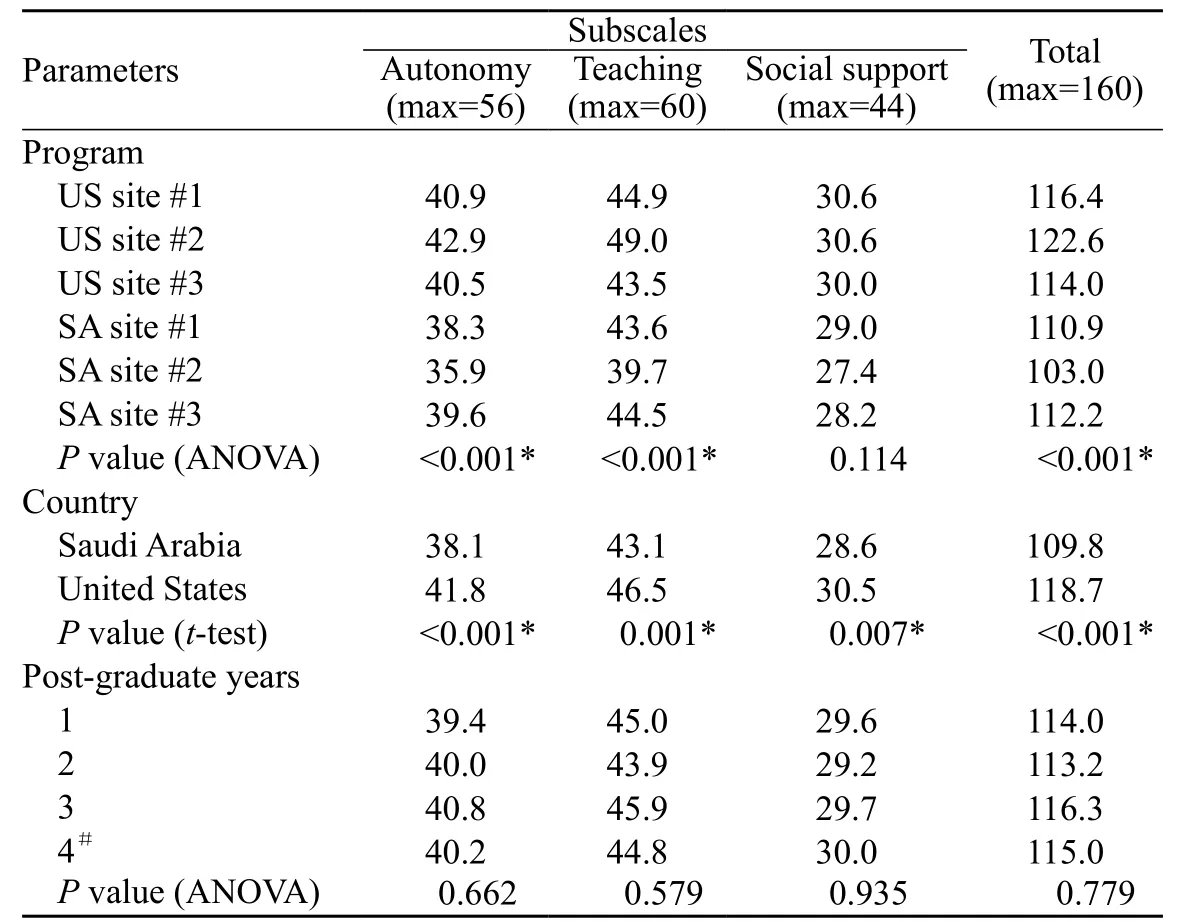

Table 2 displays mean scores for each subscale and overall by program, country, and PGY. The mean overall score for the USA programs was higher: 118.7 compared to 109.9 for SA (P<0.001). These overall scores place both countries in the category “more positive than negative but room for improvement.” Mean scores by individual program differed significantly for the autonomy (P≤0.001) and teaching (P<0.001) subscales but not for the social support subscale (P=0.114). Only one program had an overall mean score of “excellent”. No program had a mean score of “very poor” or “plenty of problems”. Mean scores did not differ significantly by post-graduate year (PGY).

DISCUSSION

This study is the first of its kind to compare educational environments in emergency medicine residency programs between two countries using the PHEEM tool. We found differences in perceptions of the educational environments of emergency medicine training programs in SA and USA. In both countries, trainees scored the educational environment as more positive than negative, with some areas for improvement. However, overall USA training programs scored slightly better, particularly on teaching and role autonomy.

Table 2. Mean scores for each PHEEM subscale and overall by program, country, and PGY in Saudi Arabia and the United States of America

There are several potential reasons for differences in program quality in the two countries. One is program age: USA programs are older, starting on average 22 years prior to the surveys (35, 25, and 7 years) compared to the relatively younger SA programs, the oldest of which is 16 years old. Longer experience with training residents may contribute to better quality training. This also may explain why USA residents described more experienced model teachers than their SA peers.

The didactic portion of emergency medicine training in the USA and SA is largely similar. Both countries have similar approaches to weekly conferences (5 hours or more per week), and to participation in emergency medicine practice and other specialty practice. However, there are some notable differences. Until recently, SA emergency medicine residents have not had regular access to simulation labs or simulation practice, especially in the western part of the country. By contrast, USA residency programs have a longer history of using simulation, which is a longstanding, regular part of the curriculum in all three training sites.[17] While most USA residents have online remote access to weekly conferences, this is not available to most SA residents. USA residents also have access to older and more developed emergency medicine societies and resources, such as the Society for Academic Emergency Medicine (SAEM) (founded 1989), the American College of Emergency Physicians (ACEP) (founded 1968), and others. By comparison, the Saudi Society of Emergency Medicine (SASEM) was founded in 2006 and has held only 2 national meetings.[18]

Figure 1. Comparison of the mean agreement score for each statement as scored by residents in Saudi Arabia and the United States of America, indicating which differences between the two are statistically significant. Each statement is scored on a 5-point Likert scale, where 4 is the maximum, implying strong agreement, and 0 is the minimum, implying strong disagreement. † = Questions 7, 8, 11, and 13 are reverse coded, so higher scores indicate more disagreement with the statement. * = statistically significant difference between Saudi Arabia and the United States of America at P<0.05.

Another reason for differences could be better oversight of USA programs. The Accreditation Council for Graduate Medical Education (ACGME) has strict requirements for elements of emergency medicine training. In comparison, SA has the Saudi Commission for Health Specialties (SHCS), which uses the Canadian module (CanMEDS), a framework developed by the Royal College of Physicians and Surgeons of Canada in the 1990s for improving patient care by enhancing physician training.[19] Although the ACGME and CanMEDS requirements are largely similar, there may be less enforcement of program requirements by SHCS than by its USA-based counterpart. Every USA program has a local (within the institution) Graduate Medical Education (GME) office that provides support, direction, and oversight to continuously ensure each residency program meets its requirements. By contrast, SA programs lack such a local authority. This is reflected in how program rules are implemented. For example, in USA programs, survey respondents reported that work hours were clearly defined and well explained. By contrast, SA residents were less certain with regard to work hours, even though specific work hours are clearly defined on the SHCS website.[4]

Residents in both countries felt confident about their autonomy and that they have an appropriate level of responsibility. However, practice differences were also found between the two countries. For example, USA residents are required to write detailed documentation for most cases because of regulatory and billing requirements, a task that often requires time after the shift finishes. By comparison, residents in SA do not have the same requirements for documentation because most patients are not billed and documentation standards vary by hospital. There was also considerable variation within each country, demonstrating that variation in training quality and environments exists despite similar regulations on residency training. This may be due to differences in local ways of administering programs or perceptions of the faculty; however, there were not great differences in scores between the programs, which may reflect similarities across programs, or at least the perception of it.

On average, social support was not found to be significantly different between the two countries. USA programs scored higher on a few questions. For example, differences may be due to the “no blame” culture for medical errors, which is a more prominent feature of USA training programs (Question 25 of the survey). Other differences could also be a result of sub-optimal or unpleasant personal interaction with staff physicians, where USA teaching physicians may be perceived as more collegial. Sexual discrimination was also reported more among the SA residents, which requires further exploration.

Residents in both USA and SA emergency medicine training programs ranked their experiences as positive on the PHEEM, with scores of 119 and 110, respectively, considerably higher than evaluations of nine critical care training programs in England and Scotland, which had an average score of 103.[20] Another study of surgery, anesthesiology, and internal medicine residency programs in the Hospital das Clínicas, University of São Paulo Medical School, demonstrated mean PHEEM scores of 88, 101, and 97, respectively. The PHEEM survey was also used to evaluate the educational environment in emergency medicine in 3 training programs in Iran, with mean scores ranging from 88–93.[13] There may be more favorable perceptions of training in the USA and SA because of better-structured programs. In addition, SA programs have been primarily built by Saudi North American graduates, with similarities in the SA programs to the Canadian system.

From this work, there are several ways that both SA and the USA can create targeted improvements in emergency medicine residency training. Specifically, SA should increase the availability of simulation, improve access to weekly conferences and allow online access, increase oversight from SHCS, focus on adopting a “no blame” culture, and closely examine concerns over sexual discrimination. The USA programs surveyed should also consider improving hospital accommodations for residents and their “handbook” materials, and ensuring that residents receive regular, actionable feedback to improve their practice.

Limitations

There are several limitations to this study. First, PHEEM has never been used to compare training in two different nations. Cultural differences between the USA and SA may be a factor in how residents perceive their training, which also may explain some differences. In addition, although the PHEEM has been used in SA and other countries, it has not been tested in the USA. It was not specifically designed for emergency medicine training. Some of the questions may not have been clearly understandable to some residents in both countries (example: Question 13). This may be more prominent in SA because English is not the native language, which may result in less accurate assessments; however, SA medical schools are administered in English, so all residents are reasonably proficient by the time they are in residency. Residents also may not have felt comfortable providing negative feedback about their training environment, even though the survey was anonymous.

Because we only sampled three USA programs, the findings may not translate to the educational environment of all residency programs. In particular, programs in community-based hospitals may be different. In addition, the three SA residency programs are four-year programs, while the included USA programs were two three-year programs and one four-year program. This may account for some differences in study results, particularly in resident perceptions of role autonomy. The data collection process was not uniform across all the residency programs. In one program, gift cards were used to increase the response rate, and the USA data collection was done in a time frame of 1–2 months while in SA it took around 5–6 months. This could impact the results, as residents surveyed later may be more comfortable with their education and autonomy than their peers who answered the survey 4–5 months earlier.

Other limitations in comparing the two environments include, but are not limited to, differences in size between the residencies in each country and unique features of each community, such as Hajj season rotations in the SA programs, where all residents have the opportunity to perform a high volume of procedures in a short time period. In addition, residency programs are constantly changing, so study results may have been different if the surveys were administered at different times. For example, SA programs have recently added a new mandatory research rotation.

CONCLUSION

We found that emergency medicine residency training programs in SA are all of reasonably high quality as measured by the PHEEM instrument, as were the three USA programs we studied. Programs in both countries compare favorably to those in other countries where PHEEM scores have been assessed. The USA programs score higher overall, which is driven by more favorable perceptions of role autonomy and teaching. Understanding these differences may provide targeted areas for improvement for program directors to improve the learning environment.

ACKNOWLEDGEMENT

The authors would like to thank the program directors Colleen Roche, Edward Kakish, Majid Alsalamah, Azzah Aljabarti, Philip Shayne and Mohammed Alshahrani for their support; the group of physicians Abdullah Sharani, Waleed Hussein, Ahmed Alkhathlan, Hani Alsaedi, Yasser Ajabnoor, Ahmed Alrajjal, Weaam Alshenawy, and Hussain Al Robeh for their help in the process of modifying the survey; and Layal Suruji for helping with data entry.

Funding: None.

Ethical approval: The study was approved by the Institutional Review Board at George Washington University.

Conflicts of interest: None to declare.

Contributors: AA proposed the study and wrote the first draft. All authors read and approved the final version of the paper.

1 Asch DA. Evaluating residency programs by whether they produce good doctors. LDI Issue Brief. 2009;15(1):1-4.

2 Mohanna K, Wall D, Chambers R, editors. Teaching made easy: a manual for health professionals. Abingdon, UK: Radcliffe Medical Press Limited; 2004;41-57.

3 National Resident Matching Program, data reports, 2016 Match Results by State, Specialty, and Applicant Type report. http://www.nrmp.org/match-data/main-residency-match-data . Accessed August 3, 2017.

4 Saudi Commission for Health Specialties, Postgraduate Training Programs, Emergency Medicine Program Booklet. http://www.scfhs.org.sa/en/MESPS/TrainingProgs/TrainingProgsStatement/Emergency/Pages/ProgBook.aspx . Accessed August 3, 2017.

5 Ministry of Education, Saudi Arabian Cultural Mission to the United States, the Department of the Medical and Health Science Programs, 2014-2015 Acceptance Report, http://www.sacm.org/MedicalUnit/MedicalUnit.aspx . Accessed August 3, 2017.

6 Roff S, McAleer S, Skinner A. Development and validation of an instrument to measure the postgraduate clinical learning and teaching educational environment for hospital-based junior doctors in the UK. Med Teach. 2005;27(4):326-31.

7 Auret KA, Skinner L, Sinclair C, Evans SF. Formal assessment of the educational environment experienced by interns placed in rural hospitals in Western Australia. Rural Remote Health. 2013;13(4):2549. Epub 2013 Oct 20.

8 Riquelme A, Herrera C, Aranis C, Oporto J, Padilla O. Psychometric analyses and internal consistency of the PHEEM questionnaire to measure the clinical learning environment in the clerkship of a Medical School in Chile. Med Teach. 2009;31(6):e221-5.

9 Vieira JE. The postgraduate hospital educational environment measure (PHEEM) questionnaire identifies quality of instruction as a key factor predicting academic achievement. Clinics (Sao Paulo). 2008;63(6):741-6.

10 Koutsogiannou P, Dimoliatis ID, Mavridis D, Bellos S, Karathanos V, Jelastopulu E. Validation of the Postgraduate Hospital Educational Environment Measure (PHEEM) in a sample of 731 Greek residents. BMC Res Notes. 2015;8:734.

11 Gilbert SK, Wen LS, Pines JM. A comparison of perspectives on costs in emergency care among emergency department patients and residents. World J Emerg Med. 2017;8(1):39-42.

12 Binsaleh S, Babaeer A, Alkhayal A, Madbouly K. Evaluation of the learning environment of urology residency training using the postgraduate hospital educational environment measure inventory. Adv Med Educ Pract. 2015;6:271-7.

13 Jalili M, Mortaz Hejri S, Ghalandari M, Moradi-Lakeh M, Mirzazadeh A, Roff S. Validating modified PHEEM questionnaire for measuring educational environment in academic emergency departments. Arch Iran Med. 2014;17(5):372-7.

14 Khoja AT. Evaluation of the educational environment of the Saudi family medicine residency training program. J Family Community Med. 2015;22(1):49-56.

15 Al-Shiekh MH, Ismail MH, Al-Khater SA. Validation of the postgraduate hospital educational environment measure at a Saudi university medical school. Saudi Med J. 2014;35(7):734-8.

16 Wall D, Clapham M, Riquelme A, Vieira J, Cartmill R, Aspegren K, et al. Is PHEEM a multi-dimensional instrument? An international perspective. Med Teach. 2009;31(11):e521-7.

17 Khaled A, Alburaih A, Wagner MJ. A comparison between emergency medicine residency training programs in the United States and Saudi Arabia from the residents’ perception. Emergency Medicine International. 2014:362624.

18 Saudi Society of Emergency Medicine, About SASEM, http://sasem.org/about-sasem/ . Accessed February 5, 2017.

19 Royal College of Physicians and Surgeons of Canada, CanMEDS, About, http://www.royalcollege.ca/rcsite/canmeds/about-canmeds-e . Accessed August 3, 2017.

20 Clapham M, Wall D, Batchelor A. Educational environment in intensive care medicine--use of Postgraduate Hospital Educational Environment Measure (PHEEM). Med Teach. 2007;29(6):e184-91.

World Journal of Emergency Medicine, 2018, Issue 1
