Energy Efficient On-Chip Communications Implementation Based on Power Slacks

Xiao-Yu Xia, Wen-Ming Pan, and Jia-Chong Kan

Energy Efficient On-Chip Communications Implementation Based on Power Slacks

Xiao-Yu Xia, Wen-Ming Pan, and Jia-Chong Kan

——The quest for energy efficiency has growing importance in high performance many-core systems. However, in current practices, the power slacks, which are the differences observed between the input power budget and the actual power consumed in the many-core systems, are typically ignored, thus leading to poor energy efficiency. In this paper, we propose a scheme to effectively power the on-chip communications by exploiting the available power slack that is totally wasted in current many-core systems. As so, the demand for extra energy from external power sources (e.g., batteries) is minimized, which helps improve the overall energy efficiency. In essence, the power slack is stored at each node and the proposed routing algorithm uses a dynamic programming network to find the globally optimal path, along which the total energy stored on the nodes is the maximum. Experimental results have confirmed that the proposed scheme, with low hardware overhead, can reduce latency and extra energy consumption by 44% and 48%, respectively, compared with the two competing routing methods.

Index Terms——Adaptive routing, dynamic programming network, networks-on-chip, power slack.

1. Introduction

Energy efficiency has become a major consideration in high-performance many-core systems. A large number of many-core systems, whether they are used in datacenters[1]or in embedded systems[2], can be powered by energy harvested from the environment. To maximize the energy efficiency, numerous dynamic power management approaches[3]have been proposed to allocate the powerbudget adaptively to the cores, by means of voltage/frequency scaling or power gating. It has been observed that these management-enabled systems tend to create many power slacks which are defined as the difference between the input power budget (either scavenged from the environment or from the power supply) and the actual power consumption of cores. Fig. 1 shows the power budget and actual consumption of a solar-powered many-core system that employs the dynamic power management methods: dynamic power partitioning and control (DPPC) used in [3]. The solid line is the power budget scavenged from the solar energy and the dashed line is the actual power consumption of the system. In this system, as shown in Fig. 1, the power slacks are totally discarded leading to lower energy efficiency.

Actually, the wasted energy from the unused power slacks can be recycled to power the on-chip communications. Power slacks can be stored into miniaturized on-chip energy storage devices, like supercapacitors or micro-batteries[4],[5]. This stored energy can be used to power the communications; by doing so, the energy drain from external power sources like batteries can be minimized, which brings the benefits of energy saving and battery longevity. One possible approach to store and exploit the power slack will be illustrated in the following example.

Energy from power slacks will be distributed and stored at the tiles beforehand, and data packets/flits can be routed following a power slack aware routing scheme. In the example shown in Fig. 2, energy recovered from power slacks are stored in the supercapacitors at each node. The color map represents the relative quantity of the stored energy. When a data transmission session is initiated between a source and a destination nodes, the path along which the nodes have the highest accumulated energy recovered from the power slacks and less congestion will be selected for routing the data packets. In this way, it can be seen that little, at the best case, even zero energy will be drawn from the power rail. In a simple term, energy spent on communications can be substantially reduced by exploiting the power slacks.

This novel technique of exploiting power slacks to power on-chip communications is referred as exploiting power slack (EPS) in this paper. EPS is a vast departure from the existing popular low-power design techniques,especially in the category of dynamic power management, where power saving is achieved by squeezing out unnecessary consumption. In other words, EPS recycles the wasted energy in current many-core systems to improve the energy efficiency, and as a result, it helps reduce the energy needed from the external power sources. EPS is complementary to modern power management approaches.

Fig. 1. Power slacks of a solar-powered many-core system.

Fig. 2. Routing path based on power slacks, with the highest accumulated energy recovered from the power slacks to minimize the need of extra energy from external power rail.

EPS consists of two major aspects. First, the power slack needs to get distributed among all the tiles and be stored locally. Another aspect concerns the actual data communications. In an EPS-enabled many-core system, the optimal routing path is determined as the one where the total energy stored in all the nodes on the path is the highest and this optimal path is selected using a dynamic programming network (DPN)[6]. The main contributions of this paper thus can be summarized as follows:

1) EPS is the first work known to recycle energy wasted in the existing many-core systems. Since the actual energy needed to route the packets has been minimized, the energy efficiency can be improved.

2) EPS takes advantage of DPN to find the global optimal path with the highest stored energy recovered from the power slacks. The overhead and energy consumption of DPN is considerably less than the energy saved by exploring power slacks.

3) Experimental results show that EPS can effectively reduce transmission latency and energy demands from the external power source.

The rest of this paper is organized as follows. Section 2 reviews the related work. Section 3 presents the EPS approach, and Section 4 provides the experimental results and analysis. Finally, conclusions are drawn in Section 5.

2. Related work

In this section, various works concerning 1) on-chip low power techniques, 2) on-chip energy storage techniques, and 3) the adaptive routing algorithms are reviewed.

2.1 Low Power Techniques

There is a growing attention on how to improve the energy efficiency under a given power budget in a many-core system. Traditional run time power management approaches using frequency/voltage scaling[3], power gating[7], and so on, are still applicable at core and/or system levels. These techniques are particularly appreciated when they are applied to certain on-chip modules/resources that consume disproportionally large amount of chip power. One good example is the on-chip networked interconnect, often referred as network-on-chip (NoC), whose power consumption alone contributes a large portion of the total system power consumption. Many efforts have been dedicated to reduce the power consumption of NoC, by shutting down the under-utilized sub-networks[8], or by passing routing pipeline stages[9], etc. All these low-power techniques, however, do not directly deal with power slacks found in many-core systems, so our proposed method is complementary to these approaches.

2.2 On-Chip Energy Storage

Numerous on-chip micro-supercapacitors have been proposed[5]and even commercialized. Microsupercapacitors can store energy based upon either the electrochemical double layer (EDL) effect, or the fast surface redox reactions (pseudo capacitance) that take place at the interface of electrodes and electrolytes. These microsupercapacitors exhibit fast charge and discharge rates (compared with rechargeable batteries) and long cycle lives (millions of cycles), and their energy density is getting close to that of batteries with the advance of using advanced nanostructured materials. In this paper, we assume that the supercapacitors are used to store energy from power slacks, and the energy stored in supercapacitors is sufficient to power the on-chip communications.

2.3 On-Chip Routing Algorithms

Routing algorithms can be categorized from different angles. The first angle is from the cost functions which can be the congestion level[6], temperature[10],[11], and fault information[12],[13], etc. A different kind of classification could be based on adaptiveness. And the routing algorithms are divided to be either adaptive or deterministic. In adaptive routing algorithms[6], the output channels with the max/min cost are selected at each router. DPN[11]can be used to find globally optimal paths with respect to the cost functions. Following the same philosophy, the local stored energy recovered from power slacks can also be used as the cost function in finding the paths with the maximum stored energy.

3. EPS Approach

3.1 Overview

Fig. 3 shows the overview of the EPS approach whereas each tile has an energy storage element. The EPS framework consists of two major aspects: 1) power slack distribution and storage, and 2) power slack aware routing. Excessive energy from the power slack needs to be stored at the supercapacitor attached to each tile. During the data transmission phase, each participating router will choose the output to ensure that the path has the maximum energy stored in supercapacitors.

Fig. 3. Overview of the EPS approach. Each tile is augmented with a supercapacitor-based on-chip energy storage element[5].

3.2 Power Slack Distribution and Storage

Energy that is collected from power slacks is distributed, through a power grid, in each tile’s supercapacitor and stored there. In the following, we will first introduce the power grid and the power/energy models, then we will present the supercapacitor model.

A.Power Grid and Power/Energy Models

Each tile or router is equipped with an energy storage unit and realized by using an on-chip supercapacitor[4]. Fig. 4 shows the equivalent circuit of one tile in the power delivery network[14]together with an energy storage unit, whereCSC,Ci,j,Li,j, andRi,jare the lumped equivalent capacitor, inductor, and resistor between nodeiand its adjacent nodej.

Fig. 4. Equivalent circuit of one node in the power delivery network[14]together with the energy storage element.

The current demand of loadiin Fig. 4 at each node can be modeled as

whereIch(k,t) andIr(k,t) are the equivalent current demands of the link and router at cyclek[14].

Let SW be a vector of sizen(the number of link wires) with each element switaking values of 0 and 1,i=1, 2, ···,n. The current demand of a link can be expressed as[14]

whereIi,ch,kis the current of wirei∈ {1,2,… ,n} for the link at cyclek, M is the decoupling transformation matrix[14], and,ch,swikis the switching direction of wireiat cyclek.

To determine the current need of a router, LetΨ={RECEIVE, ROUTE, FORWARD, STANDBY} be the set of micro-architectural-level processes that can be executed by a router. These processes deal with receiving a flit, computing a route, forwarding a flit, and idling (leakage power), and their energy consumption is determined as follows, respectively.

The energy of the RECEIVE process is the energy required for storing the flits to the input buffer.

During a ROUTE process, energy is consumed for route computation, which is only performed for header flits (in the wormhole switching).

The total energy consumption of a FORWARD process is the energy spent on data retrieval from the input buffer, switch allocation, switch traversal, and link traversal.

The total energy consumed by routerrat cyclek,Er(k), can be expressed as

whereEψis the energy required by the router circuit to execute processψ, andαψ(r,k) is the number of instantiations of processψin routerrat cyclek. The termaccounts for energy contributed by leakage power, whereis the leakage power and Δtis the cycle time.

Thus, the amount of current needed by the routerris

The current demand of loadican be derived by tools in [14] which combines the router micro-architectural level power model and an NoC event driven simulator.

B.Energy Storage Device Model

The energy storage unit in Fig. 4 could be implemented by on-chip supercapacitors[5]which can be essentially modeled as a capacitorCSC[4],

whereε0is the permittivity of the vacuum,εris the relative permittivity of the electrolyte,dis the double-layerthickness and it is an electrolyte-dependent parameter, andAis the overall surface area of the electrodes.

The remaining energy stored in the supercapacitor at time pointtis

whereQ(t) is the charge remaining in the supercapacitor.

The power delivered to each node can be derived by (1) to (5). When the stored energy is insufficient to forward a flit, the router might either hold the flit in its buffer, or still forward it, but with the energy drain from an external power source (e.g., external batteries), depending on the task criticality. Thus, to reduce the leakage power when holding a flit in a buffer, two voltage levels,VDDHandVDDL, are assumed here: the former with a higher voltage level is for normal operations and the latter for the low-power mode to reduce the leakage. The low-power model can be used to hold flits in buffers when the stored energy is not sufficient to forward a flit. Different voltage islands can still talk to each other using circuits like level shifters[15]; their energy consumption and delay are denoted asELSandtLS.

C. Power Slack Aware Routing

Once the energy recovered from the power slack is stored at each tile, the routing algorithm can exploit the stored energy to forward packets to minimize extra energy consumption. To find a globally optimal path in that sense, DPN[6]is used.

D. DPN

DPN is a parallel implementation of Bellman’s equation with optimization. It has a graph of statesG(V,E), where each vertexv∈Vis a state and each edgee=(u,v) is the transition between the states, whereuis a vertex in the graph, too. Each transition is associated with a cost-to-go functionCu,vand each vertex has a expected rewardCMAX(v,D) to reach a destinationD. The optimization or decision-making problem can be expressed in a recursive form at each state as follows:

whereP(S,D) is the set of all paths from the source nodeSto the destination nodeD.

E. Finding Maximum Reward Paths Using DPN

To employ DPN to find the paths with the maximum stored energy, the NoC can be denoted as a connected graphG(V,E) where each vertexv∈Vis a tile and each edgee=(u,v) is a link between the two tiles, whereudenotes a tile in the graph, too. Each edge is associated with a cost-to-go functionCu,v, which can be defined as

To find a path with the maximum stored energy and less congestion (i.e., the maximum cost), the dynamic programming equation (7) can be calculated withinNiterations[6]. For each vertexv, calculate theCMAX(v,D) value in (7) with theCu,vdefined in (9).

The calculation of (7) at each vertex leads to finding the maximum cost path (optimal solution) under the power constraint inNsteps, given as (8). So, the optimal decision at each vertexvalong the maximum cost path to reach the destinationDcan be obtained as

Fig. 5 presents the algorithm operations required for updating the routing directions at each tile. Each vertexvreceives the different maximum costs to reach the destinationDfrom the adjacent vertices. In the main loop, the optimal cost will be computed for reach destinationD. The corresponding optimal output direction is selected to update the routing table. When the computation ofCMAX(v,D) at vertexvis done, it will be sent to all the adjacent vertices. The two for-loops are implemented in hardware with a parallel architecture and the computational delay complexity can be reduced to be linear.

Once an output direction with the maximum cost is found at each router, the flit can be forwarded to that output channel. If the stored energy is not sufficient to forward a packet, the flit is held in the buffer operating at the low-power mode. Each buffer is associated with a timerT, which could be set to different values, e.g., 10, 50, and 100 cycles. Once the timer is overflow, the flits will be forwarded using extra energy from the power supply system (e.g., external battery). Proper setting ofTstrikes a balance between performance and extra energy consumption needed, i.e., largerTindicates longer latency but a lower extra energy requirement.

The dynamic programming unit at each router is the same as that detailed in [6] and [11] with the total area and power overheads less than 2%[11]to a router. To avoid deadlocks, the physical network is separated into two virtual networks, VN0and VN1, and each of these virtualnetworks does not include any cyclic path by restricting some turns. VN0does not allow packets to turn to the north, while VN1does not allow packets to turn to the south. In addition, any packet only can traverse one virtual network. These routing policies will ensure that no cycle will be formed at the network level.

In this routing scheme, packets need to be distinguished by the virtual network that they shall pass through. One bit is thus added in the packet header to identify the virtual network designated for a packet. At the source router, this bit is set for the packets. If the destination is in the north to the source node, the packet follows VN0, while the packet follows VN1if the destinations are in the south to the source node. For the destinations that have the sameX-coordinate as the source, the random virtual networks can be chosen. All the intermediate nodes sitting in a routing path should never flip the virtual network identification bit; they just simply forward the packet to the next node on the same virtual network.

Fig. 5. Algorithm for updating the routing directions at each tile.

4. Experiemental Results

4.1 Experimental Setup

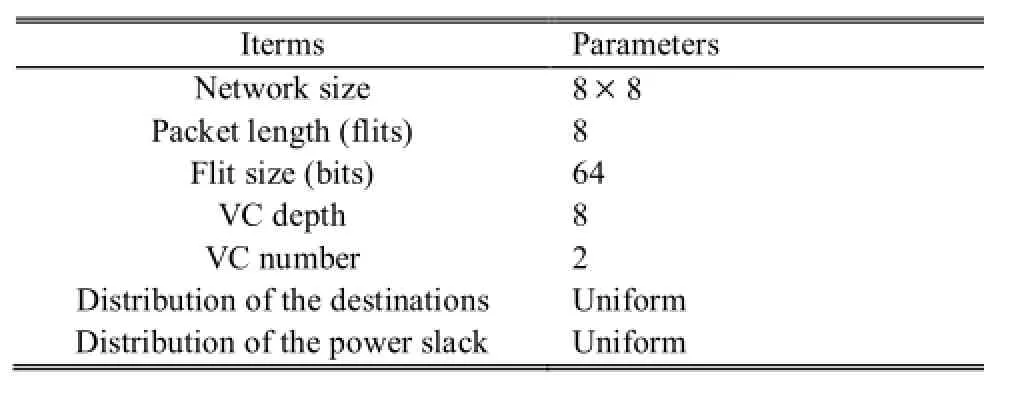

Table 1 tabulates the simulation configuration. Noxim[16], a cycle accurate NoC simulator, is extended to simulate an 8×8 NoC system. The power grid model[14]is integrated with the NoC simulator. We measure the average packet latency and extra energy consumption in the experiments. The dynamic and static power of the 5×5 router is estimated using Synopsis Design Vision 2009.06 with Taiwan Semiconductor Manufacturing Company (TSMC) 65 nm CMOS technology library.

Table 1: Simulation configuration

There are various on-chip micro supercapacitors made of different materials. The capacitance of the micro supercapacitors ranges from 80 mF/cm2to 90 mF/cm2[5]up to a few hundreds of microfarads per square centimetre[4]. Since the energy density is directly proportional to the capacitance, we select the capacitance value to be 90 mF/cm2in the experiments[5]. The area of the supercapacitors is assumed to be 1×1 mm2. The power slacks are represented as time series traces, generated from a uniform distributed random process with different expectations.

4.2 Performance Evaluation

In the experiments, we will first study the impact of the weight in the cost function (see (9)) and timerT(Sub-Section 3.2 E). Then two competing routing algorithms, XY routing and odd-even (OE) routing will be compared against EPS.

A.Impact of Weight Coefficient in Cost Function

Fig. 6 (a) and Fig. 6 (b) show the latency and extra energy consumption (from the external power rail) of EPS with different weight values included in the cost function (theγparameter in (9)). The timerTis set to be 0 and the injection rate is 1 flit/cycle. As mentioned in Sub-Section 3.2 E, the weight coefficientγis a parameter that helps balance between latency and extra energy consumption. In general, a larger value ofγprovides lower latency and a smallerγimplies lower energy consumption. This has been confirmed by the results shown in Fig. 6 (a) and Fig. 6 (b). In following experiments, we will use a weightγ=5.

Fig. 6. Effect of buffer level weight coefficientγon (a) packet latency and (b) extra energy consumption from external power sources.

B.Impact of Timer

The timerTalso balances between the latency and energy consumption: a largerTput an emphasis on low energy consumption while a smaller value ofTbrings shorter latency. Fig. 7 shows the latency and energy consumption under differentTvalues, where EPS-Tindicates the timer with a valueT. As shown in Fig. 7 (a), whenTincreases, the latency increases accordingly, i.e., the latency of EPS-100 (holding flits for at most 100 cycles incase of insufficient stored energy) is about 2 times over that of EPS-0 (forwarding the flits immediately with extra energy) at the packet injection rate of 1 flit/cycle. On the other hand, Fig. 7 (b) confirms that largerTwill tend to help reduce the energy consumption. For example, the extra energy request of EPS-0 is about 1.7 times over that of EPS-100 at the injection rate 1.5 flit/cycle.

Fig. 7. Latency and energy consumption under differentT’ values: (a) latency and (b) extra energy consumption with different timers.

C. Performance Comparison of EPS against Other Routing Algorithms

In this subsection, the performance and extra energy consumption of EPS are compared against two competing routing algorithms, XY routing and OE routing algorithms in terms of latency and extra energy consumption. The values of weight coefficientγand timer of EPS are set to be 5 and 0, respectively. The average power slack is set to be 10 W and 40 W, respectively, in Fig. 8. From Fig. 8, it can be seen that the EPS algorithm has the lowest latency among the three routing algorithms. For example, in Fig. 8 (a), the latency to XY and OE routing algorithms are 1.8 times and 1.5 times over that of EPS. EPS outperforms OE because EPS uses DPN which can find the globally optimal (less congested) path, while OE selects output channels only with the local knowledge of congestion from adjacent routers.

Fig. 8. Packet latency to different average power slacks: (a) 10 W and (b) 40 W.

Fig. 9 shows the extra energy requests of the three routing algorithms when the average power slack is set to be 10 W and 40 W, respectively. Fig. 9 indicates that with a higher average power slack, EPS requests less extra energy compared with the other two competing routing algorithms. For example, in Fig. 10 (b), EPS reduces the extra energy request by up to 48% and 34% over XY and OE routing alogrithms, respectively, when the injection rate is 1.5 flit/cycle.

Fig. 10 further shows the latency and extra energy request with the traces of four benchmarks from PARSEC. Fig. 10 (a) shows the latency reduction of EPS over OE and XY. EPS reduces the latency by as much as 22% and 30% over that of OE and XY. Fig. 10 (b) shows EPS can reduce about half of the extra energy request compared with that of OE and XY.

Fig. 9. Extra energy consumption when the power slack is (a) 10 W, and (b) 40 W.

Fig. 10. Latency and extra energy request with the traces of four benchmarks (a) latency reduction of EPS over OE and XY, and (b) extra energy of EPS, OE and XY with traces from three benchmarks.

D. Summary

From the above experiments, some conclusions can be drawn.

1) The buffer level weight coefficientγ(refer to (9)) and timerTrelate to a balance between the latency and energy consumption, i.e., a larger weight or smallerTcorreponding to lower latency, while a smaller weight or largerTindicating lower energy consumption.

2) Comparing with XY and OE routing, the proposed algoritm EPS has the lowest latency due to its capability of finding globally optimal paths. When the power slack is high, the proposed algoritm EPS can significantly reduceextra energy requests over the other two compared routing algorithms.

5. Conclusions

The power slack has been consistently ignored in most modern many-core systems. Exploiting or recycling the wasted energy available from the power slack can certainly improve the energy efficiency and reduce the extra energy request from the external power rail. In this paper, we have proposed an approach, named EPS, to convert and store the energy recycled from the power slack to power the on-chip communications. Essentially, EPS uses on-chip energy storage devices to store the power slacks at each node. Paths with the highest stored energy can be found by a DPN based routing algorithm. Experiments have confirmed that EPS can reduce latency and extra energy consumption over two existing routing algorithms, XY routing and OE routing algorithms, by up to 48% and 44%, respectively.

[1] C. Li, W. Zhang, C. B. Cho, and T. Li, “Solarcore: solar energy driven multi-core architecture power management,”inProc. of IEEE Int. Symposium on High Performance Computer Architecture, San Antonio, 2011, pp. 205-216.

[2] Q. Liu, T. Mak, J. Luo, W. Luk, and A. Yakovlev, “Power adaptive computing system design in energy harvesting environment,” inProc. of Int. Conf. on Embedded Computer Systems, Sammos, 2011, pp. 33-40.

[3] K. Ma, X. Wang, and Y. Wang, “DPPC: dynamic power partitioning and control for improved chip multiprocessor performance,”IEEE Trans. on Computers, vol. 63, no. 7, pp. 1736-1750, 2014.

[4] Y. Jiang, Q. Zhou, and L. Lin, “Planar MEMS supercapacitor using carbon nanotube forests,” inProc. of IEEE Int Conf. on Micro Electro Mechanical Systems, Sorrento, 2009, pp. 587-590.

[5] C. Shen, X. Wang, W. Zhang, and F. Kang, “A high-performance three-dimensional micro supercapacitor based on self-supporting composite materials,”Journal of Power Sources, vol. 196, no. 23, pp. 10465-10471, 2011.

[6] T. Mak, P. Y. K. Cheung, K. P. Lam, and W. Luk,“Adaptive routing in network-on-chips using a dynamic-programming network,”IEEE Trans. on Industrial Electronics, vol. 58, no. 8, pp. 3701-3716, 2011.

[7] S. Reda, R. Cochran, and A. Coskun, “Adaptive power capping for servers with multi-threaded workloads,”IEEE Micro Magazine, vol. 32, no. 5, pp. 64-75, 2012.

[8] R. Das, S. Narayanasamy, S. K. Satpathy, and R. G. Dreslinski, “Catnap: energy proportional multiple network-on-chip,” inProc. of ACM/IEEE Int. Symposiums on Computer Architecture, Shenzhen, 2013, pp. 320-331.

[9] Y. Jin, E. J. Kim, and T. M. Pinkston, “Communicationaware globally-coordinated on-chip networks,”IEEE Trans. on Parallel and Distributed Systems, vol. 23, no. 2, pp. 242-254, 2012.

[10] K.-C. Chen, C.-H. Chao, S.-Y. Lin, H.-S. Hung, and A.-Y. Wu, “Transport-layer assisted vertical traffic balanced routing for thermal-aware three-dimensional network-onchip systems,” inProc. of Int Symposiums on VLSI Design, Automation, and Test, Hsinchu, 2012, pp. 23-25.

[11] T. Mak, K.-P. Lam, F. Xia, A. Yakovlev, and C.-S. Poon,“Dynamic on-chip thermal optimization for three-dimensional networks-on-chip,”The Computer Journal, vol. 56, no. 6, pp. 756-770, 2013.

[12] A. Vitkovskiy, V. Soteriou, and C. Nicopoulos, “Dynamic fault-tolerant routing algorithm for networks-on-chip based on localised detouring paths,”IET Computers & Digital Techniques,vol. 7, no. 2, pp. 93-103, 2013.

[13] M. Radetzki, C. Feng, X. Zhao, and A. Jantsch, “Methods for fault tolerance in networks on chip,”ACM ComputingSurveys, vol. 44, no. 1, pp. 1-35, 2012.

[14] N. Dahir, T. Mak, F. Xia, and A. Yakovlev, “Modelling and tools for power supply variations analysis in networks-on-chip,”IEEE Trans. on Computers, vol. 63, no. 3, pp. 679-690, 2014.

[15] T. Nakamura, H. Matsutani, M. Koibuchi, K. Usami, and H. Amano, “Fine-grained power control using a multi-voltage variable pipeline router,” inProc. of IEEE Int Symposium on Embedded Multicore SoCs, Aizu, 2012, pp. 59-66.

[16] F. Fazzino, M. Palesi, and D. Patti. Noxim. [Online]. Available: http://www.sourceforge.net/Noxim

Xiao-Yu Xia was born in Hunan, China in 1987. He received the B.S. and M.S. degree from Wuhan University, Wuhan in 2010 and 2012, respectively, both in electrical engineering. He is currently the research assistant at Guangzhou Institute of Advanced Technology, Chinese Academy of Science. His research interests include networks-on-chip and parallel computing.

Wen-Ming Pan was born in Guangdong, China in 1982. He received the B.S. and M.S. degrees in electronic engineering from Jinan University, Guangzhou in 2004 and 2007, respectively. He works as a research assistant with Guangzhou Institute of Advanced Technology, Chinese Academy of Sciences. He has been engaged in the FPGA design research for years. His research interests include networks-on-chip and parallel computing.

Jia-Chong Kan was born in Liaoning, China in 1990. He received his M.S. degree in circuit and system from South China Normal University, Guangzhou in 2014. He works as an intern with the Guangzhou Institute of Advanced Technology, Chinese Academy of Sciences. His research interests include image processing and parallel computing.

Manuscript received August 11, 2014; revised November 23, 2014. This work was supported by the National Natural Science Foundation of China under Grant No. 61376024 and No. 61306024, Natural Science Foundation of Guangdong Province under Grant No. S2013040014366, and Basic Research Program of Shenzhen under Grant No. JCYJ20140417113430642 and No. JCYJ 20140901003939020.

X.-Y. Xia is with Guangzhou Institute of Advanced Technology, Chinese Academy of Sciences, Guangzhou 511400, China (Corresponding author e-mail: xiawork2013@163.com).

W.-M. Pan and J.-C. Kan are with Guangzhou Institute of Advanced Technology, Chinese Academy of Sciences, Guangzhou 511400, China (e-mail: wm.pan@giat.ac.cn; jc.kan@giat.ac.cn).

Digital Object Identifier: 10.3969/j.issn.1674-862X.2014.04.003

Journal of Electronic Science and Technology2014年4期

Journal of Electronic Science and Technology2014年4期

- Journal of Electronic Science and Technology的其它文章

- Study on Temperature Distribution of Specimens Tested on the Gleeble 3800 at Hot Forming Conditions

- Automatic Vessel Segmentation on Retinal Images

- Family Competition Pheromone Genetic Algorithm for Comparative Genome Assembly

- Quantification of Cranial Asymmetry in Infants by Facial Feature Extraction

- Intrinsic Limits of Electron Mobility inModulation-Doped AlGaN/GaN 2D Electron Gas by Phonon Scattering

- Real-Time Hand Motion Parameter Estimation with Feature Point Detection Using Kinect