D-ZENIC: A Scalable Distributed SDN Controller Architecture

Yongsheng Hu, Tian Tian, and Jun Wang

(Central R&D Institute of ZTE Corporation, Nanjing 210012, China)

In a software-defined network (SDN), a powerful central controller provides a flexible platform for defining network traffic through software. When SDN is used in a large-scale network, the logically central controller comprises multiple physical servers, and these controllers must act as one to provide transparent control logic to network applications and devices. The challenge is to minimize the cost of distributing network state among them. To this end, we propose the Distributed ZTE Elastic Network Intelligent Controller (D-ZENIC), a network-control platform that supports distributed deployment and linear scale-out. A dedicated component in the D-ZENIC controller provides a global view of the network topology as well as the distribution of host information. The evaluation shows that, to balance complexity with scalability, network state must be strictly classified before it is distributed.

Keywords: software-defined network; OpenFlow; distributed system; scalability; ZENIC

1 Introduction

OpenFlow [1] was first proposed at Stanford University in 2008. Its architecture separates the data plane from the control plane: an external control-plane entity uses the OpenFlow protocol to manage forwarding devices, so that arbitrary forwarding logic can be realized. Devices forward traffic according to the flow tables issued by the OpenFlow controller. The centralized control plane provides a software platform for defining flexible network applications, while functions in the data plane are kept as simple as possible.
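To make the match-then-forward behavior concrete, the following Python sketch models one flow table: the highest-priority matching entry decides the actions, and a priority-0 table-miss entry hands unmatched packets to the controller. The classes and field names are hypothetical simplifications, not the OpenFlow wire format; the drop-by-default behavior when no table-miss entry exists follows OpenFlow 1.3 [12].

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class FlowEntry:
    priority: int
    match: dict    # header field -> required value; omitted fields are wildcards
    actions: list  # e.g. ["output:2"] or ["output:CONTROLLER"]

@dataclass
class FlowTable:
    entries: list = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        # Models a flow-mod "add" issued by the controller.
        self.entries.append(entry)

    def lookup(self, packet: dict) -> Optional[FlowEntry]:
        # Highest-priority entry whose every match field equals the packet's value.
        hits = [e for e in self.entries
                if all(packet.get(k) == v for k, v in e.match.items())]
        return max(hits, key=lambda e: e.priority, default=None)

def process(table: FlowTable, packet: dict) -> list:
    entry = table.lookup(packet)
    if entry is None:
        # OpenFlow 1.3 default when not even a table-miss entry exists: drop.
        return ["drop"]
    return entry.actions

# A table-miss entry (priority 0, empty match) sends unmatched packets to the
# controller as packet-in messages; the controller then installs specific entries.
table = FlowTable()
table.install(FlowEntry(0, {}, ["output:CONTROLLER"]))
table.install(FlowEntry(100, {"eth_dst": "00:00:00:00:00:02"}, ["output:2"]))
print(process(table, {"eth_dst": "00:00:00:00:00:02"}))  # ['output:2']
print(process(table, {"eth_dst": "00:00:00:00:00:09"}))  # ['output:CONTROLLER']
```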

This kind of network platform is built on a general-purpose operating system and physical servers. General software programming tools or scripting languages such as Python can be used to develop applications, so new network protocols are well supported and the time needed to deploy new technologies is reduced. The great interest in this concept was the impetus behind the founding of the Open Networking Foundation (ONF) [2], which promotes OpenFlow.

The main elements in a basic OpenFlow network are the network controller and the switches. Generally, the network has a single centralized controller that controls all OpenFlow switches and establishes every flow in the network domain. However, as the network grows, a single centralized OpenFlow controller becomes a bottleneck that may increase the flow setup time for switches farther away from the controller. The throughput of the controller may also be limited, which restricts its ability to handle data-path requests and may weaken its control over end-to-end path capacity. Improving the performance of the OpenFlow controller is therefore particularly important in large-scale data center networks, where demand keeps rising. To this end, we propose the Distributed ZTE Elastic Network Intelligent Controller (D-ZENIC), a distributed control platform that provides a consistent view of network state and friendly programmable interfaces for deployed applications.

The rest of this paper is organized as follows: in section 2, we survey distributed controller solutions; in section 3, we discuss the design and implementation of ZENIC and D-ZENIC; in section 4, we evaluate the scalability of D-ZENIC; and section 5 concludes the paper.

2 Related Work

Ethane [3] and OpenFlow [1] provide a fully fledged, programmable platform for Internet network innovation. In SDN, the logically centralized controller simplifies modification of network control logic and enables the data and control planes to evolve and scale independently. However, in the data center scenario studied in [4], control-plane scalability was a problem.

Ethane and HyperFlow [5] are based on a fully replicated model and are designed for network scalability. HyperFlow uses WheelFS [6] to synchronize network-wide state across distributed controller nodes, so that each flow request can be processed locally by an individual controller node.

The earliest public SDN controller to use a distributed hash table (DHT) algorithm was ONIX [7]. Like D-ZENIC, ONIX is a commercial SDN implementation; it provides flexible distribution primitives built on DHT storage and ZooKeeper [8] coordination. This enables application designers to implement control applications without reinventing distribution mechanisms.

OpenDaylight [9] is a Java-based open-source controller that uses Infinispan [10] as its underlying distributed database system, which allows OpenDaylight controllers to be deployed in a distributed manner.

In all these solutions, each controller node has a global view of the network state and makes independent decisions about flow requests. This is achieved by synchronizing event messages or by sharing storage. Events that affect the state of the controller system, such as switches or hosts joining or leaving and link-state updates, are typically synchronized. However, a practical large-scale network, e.g., an Internet data center comprising one hundred thousand physical servers each running 20 VMs, needs about 700 controllers [4]. The number of network events that must be synchronized across these OpenFlow controllers every second might reach tens of thousands, a demand that current global event synchronization mechanisms cannot satisfy.

3 Architecture and Design

3.1 ZENIC

ZENIC [11] is a logically centralized SDN controller system that mediates between the SDN forwarding plane and SDN-enabled applications. The core of ZENIC encapsulates complex atomic operations to simplify scheduling and resource allocation. This allows networks to run with complex, precise policies, with greater network resource utilization, and with guaranteed QoS.

ZENIC Release 1, whose core and applications were developed by ZTE, mainly supports multiple versions of the OpenFlow protocol. ZENIC Release 2 introduces a distributed architecture, D-ZENIC, which provides a general northbound interface that supports third-party applications.

ZENIC comprises a protocol stack layer, a driver layer, a forwarding abstraction layer, a controller core layer, and an application layer that includes internal and external applications (Fig. 1). The controller core and self-developed applications are implemented in the C/C++ domain (Fig. 1, right). Operations and maintenance, as well as compatibility between the northbound interface and third-party controller programming interfaces, are implemented in the Java domain (Fig. 1, left). This facilitates the migration of applications from other existing controllers.

The forwarding abstraction layer (FAL) defines a unified forwarding-control interface for reading and operating on the state, capacity, hardware resources, forwarding tables, and statistics of FAL devices. This layer also manages the driver instances of FAL devices and loads the appropriate driver instance according to each device's description. This also makes it convenient to add southbound protocols such as NETCONF, SNMP, and I2RS.

▲Figure 1. ZENIC architecture.

Network virtualization is a built-in feature of ZENIC that supports network partitioning based on MAC address, port, IP address, or a combination of these. ZENIC Core uses a 32-bit virtual network identifier, so in theory up to $2^{32}$ virtual networks can be supported. All packets and network state belonging to a given virtual network are identified, labeled, and sent to the assigned controller.
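Partitioning can be sketched in Python as an ordered list of rules that map a packet's MAC address, ingress port, IP address, or a combination of these to a 32-bit virtual network identifier (VNI). The `VnRule` type, its field names, and the first-match semantics are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional

MAX_VNI = 2**32 - 1  # largest value of a 32-bit virtual network identifier

@dataclass(frozen=True)
class VnRule:
    """Hypothetical partitioning rule; a field left as None matches anything."""
    vni: int
    mac: Optional[str] = None
    port: Optional[int] = None
    ip: Optional[str] = None

    def __post_init__(self):
        if not 0 <= self.vni <= MAX_VNI:
            raise ValueError("VNI must fit in 32 bits")

    def matches(self, pkt: dict) -> bool:
        # Every field the rule specifies must equal the packet's value.
        wanted = {"mac": self.mac, "port": self.port, "ip": self.ip}
        return all(v is None or pkt.get(k) == v for k, v in wanted.items())

def classify(rules: list, pkt: dict) -> Optional[int]:
    """Return the VNI of the first matching rule, or None if no rule applies."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.vni
    return None

rules = [
    VnRule(vni=100, mac="00:00:00:00:00:01", port=1),  # MAC + port combination
    VnRule(vni=200, ip="10.0.0.5"),                    # IP address only
]
```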

Each virtual network is isolated from the others by default, and bandwidth may be shared or reserved. For communication between virtual networks, the network administrator must configure interworking rules through the standard northbound interfaces. To simplify configuration, ZENIC provides a default virtual network that enables intra- and inter-virtual network communication.
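The isolation rule reduces to a reachability check, sketched here in Python: traffic inside one virtual network is allowed, and traffic between two networks is allowed only when an administrator-configured interworking rule exists. The class and method names are hypothetical, and treating the default network as able to reach every other network is an assumed reading of its role.

```python
class InterworkingPolicy:
    """Sketch of default isolation between virtual networks (hypothetical API)."""

    def __init__(self, default_vni: int = 0):
        self.default_vni = default_vni  # hypothetical ID of the default virtual network
        self._allowed = set()           # unordered VNI pairs configured by the administrator

    def add_rule(self, vni_a: int, vni_b: int) -> None:
        # Interworking rule, e.g. pushed through the northbound interface.
        self._allowed.add(frozenset((vni_a, vni_b)))

    def may_communicate(self, src_vni: int, dst_vni: int) -> bool:
        if src_vni == dst_vni:
            return True                  # intra-network traffic
        if self.default_vni in (src_vni, dst_vni):
            return True                  # assumption: default network reaches all others
        return frozenset((src_vni, dst_vni)) in self._allowed
```

Storing rules as unordered pairs makes a single administrator rule cover both directions; a directional policy would store ordered tuples instead.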

The controller core functions are responsible for managing network and system resources. This includes topology management, host management, interface resource management, flow table management, and management of the network information created by the physical or virtual topology and flow tables. The core functions include not only maintaining the state of network nodes and topology but also acquiring the location and state of hosts. In this way, a complete network view is available for further forwarding and service decisions. Fabric network management is a core function that decouples the access network from the interconnection network. It covers managing packet encapsulation formats, calculating the complete end-to-end path, and mapping forwarding policies to the corresponding encapsulation labels. Upper-layer applications only need to decide on the location and policies of access interfaces inside the SDN control domain. ZENIC supports encapsulation formats such as MPLS and VLAN; VXLAN and GRE will be supported in the next stage.
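Mapping forwarding policies to encapsulation labels can be sketched in Python as a per-format label allocator. The class, its methods, and the one-label-per-policy scheme are hypothetical; only the label ranges come from the respective standards (802.1Q VLAN IDs 1-4094, and 20-bit MPLS labels with 0-15 reserved).

```python
# Usable label ranges: VLAN IDs 0 and 4095 are reserved; MPLS labels 0-15 are reserved.
LABEL_RANGES = {"vlan": (1, 4094), "mpls": (16, 2**20 - 1)}

class LabelAllocator:
    """Hypothetical allocator binding forwarding policies to encapsulation labels."""

    def __init__(self, encap: str):
        self.encap = encap
        self.low, self.high = LABEL_RANGES[encap]
        self._next = self.low
        self._free = []          # labels returned by release()
        self._by_policy = {}     # policy id -> label

    def label_for(self, policy_id: str) -> int:
        # Reuse the label already bound to this policy, otherwise allocate one.
        if policy_id in self._by_policy:
            return self._by_policy[policy_id]
        if self._free:
            label = self._free.pop()
        elif self._next <= self.high:
            label = self._next
            self._next += 1
        else:
            raise RuntimeError(f"{self.encap} label space exhausted")
        self._by_policy[policy_id] = label
        return label

    def release(self, policy_id: str) -> None:
        # Return the policy's label to the pool when the policy is removed.
        self._free.append(self._by_policy.pop(policy_id))
```

The much smaller VLAN range is one practical reason to move to MPLS or, later, VXLAN and GRE when many policies share the fabric.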

3.2 D-ZENIC

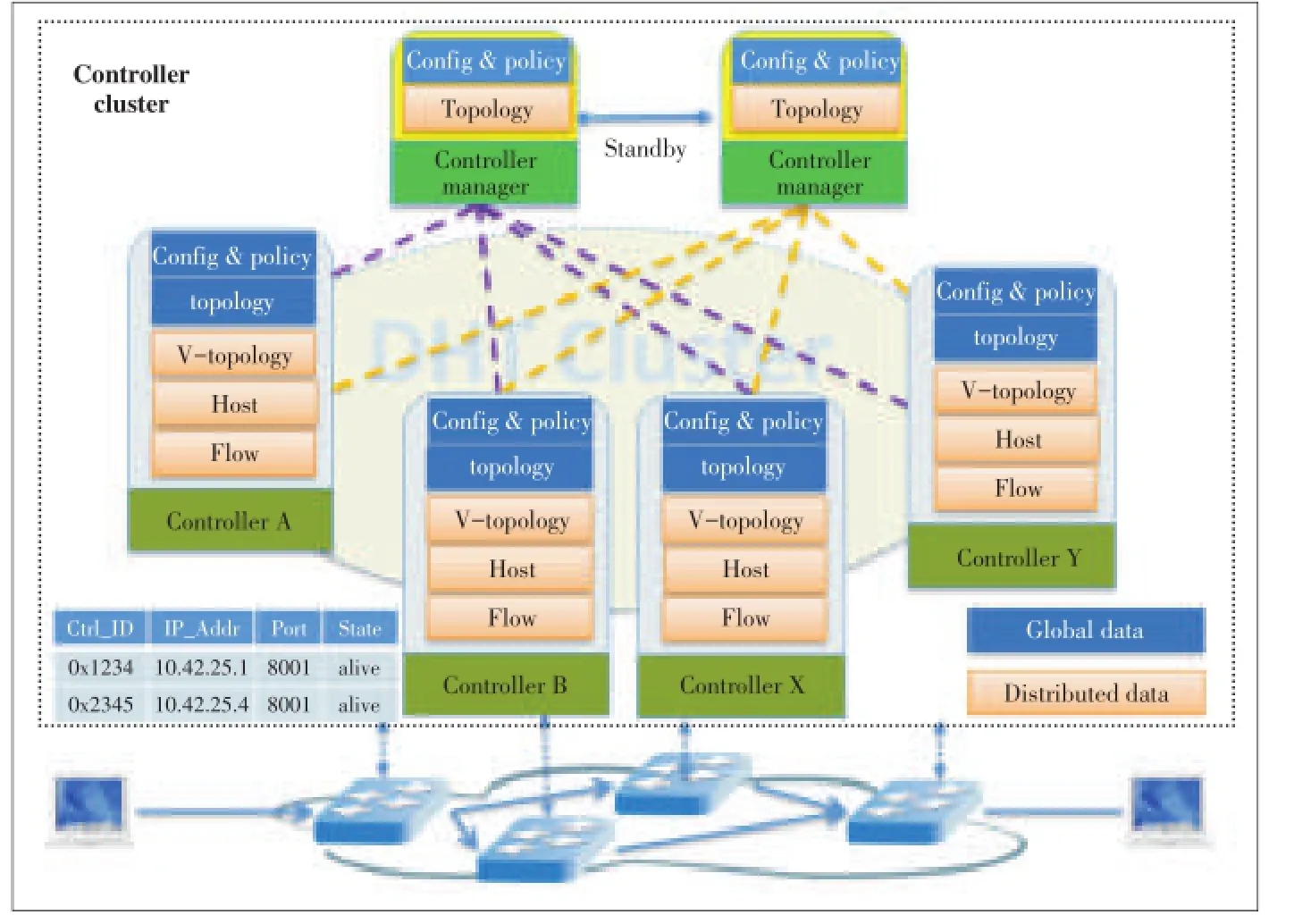

To support large-scale networks and guarantee performance, ZENIC Release 2 adopts a distributed controller architecture and is renamed D-ZENIC (Fig. 2). The architecture takes two critical approaches to reducing the overhead of state synchronization among distributed controllers. First, the distributed controller replicates as few messages as possible. Second, controller state is synchronized according to the user's demands, so that only the necessary and sufficient state is replicated.

The controller cluster includes a controller manager and one or more controller nodes. The controller manager is a logical entity that can be implemented on any controller node.

3.3 Controller Cluster Management

In a D-ZENIC system, an improved one-hop DHT algorithm is used to manage the distributed controller cluster. Each controller manages a network partition that contains one or more switches. All OpenFlow messages and events in the network partition, including switches joining and leaving, detection of the link state between switches, and flow requests forwarded by these switches, are handled independently by the connected controller.
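In a one-hop DHT every node holds the full membership list, so the owner of a key is computed locally with no multi-hop routing. The Python sketch below uses consistent hashing with virtual nodes to map a key (for example a switch datapath ID or a host address) to its owner and replicas. The paper does not describe its "improved" variant; the SHA-1 hash, the 64 virtual nodes per controller, and the clockwise replica choice are assumptions.

```python
import bisect
import hashlib

def _h(value: str) -> int:
    # Stable 64-bit hash (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha1(value.encode()).digest()[:8], "big")

class OneHopRing:
    """Consistent-hash ring whose full membership is known to every node."""

    def __init__(self, nodes=(), vnodes: int = 64):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (token, node)
        for n in nodes:
            self.add(n)

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_h(f"{node}#{i}"), node))

    def remove(self, node: str) -> None:
        self._ring = [(t, n) for t, n in self._ring if n != node]

    def owners(self, key: str, m: int = 1) -> list:
        """Return up to m distinct nodes clockwise from the key (owner first)."""
        m = min(m, len({n for _, n in self._ring}))
        idx = bisect.bisect(self._ring, (_h(key), ""))
        found = []
        while len(found) < m:
            _, node = self._ring[idx % len(self._ring)]
            if node not in found:
                found.append(node)
            idx += 1
        return found
```

Because a key's owner is the first token clockwise from it, removing a controller only moves the keys that controller owned; all other assignments stay put.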

The controller synchronizes messages about switch topology changes across all controllers in the network, so that every controller has a consistent global view of the switch topology. Controllers learn host addresses from the packets forwarded by the switches they control and store this information in the distributed controller network. When a controller receives a flow request, it queries the distributed network for the source and destination addresses of the flow, chooses a path for the flow based on the locally stored global switch topology, and issues the corresponding flow tables to the switches along the path. The controller also provides communication across nodes, including data operations and message routing for applications.
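The flow-request handling described in this paragraph (look up both host locations in the distributed store, compute a path on the locally held switch topology, then emit entries for the switches on that path) can be sketched in Python. The plain dict standing in for the distributed host store, the BFS shortest-path choice, and the `(switch, match, next_hop)` entry format are assumptions.

```python
from collections import deque

def shortest_path(topology: dict, src: str, dst: str):
    """BFS over the locally stored global switch topology (adjacency lists)."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in topology.get(node, ()):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None

def handle_flow_request(host_store: dict, topology: dict, src_mac: str, dst_mac: str):
    """Return (switch, match, next_hop) entries to install, or None if unroutable."""
    # 1) Query the (here: local stand-in for the) distributed store for both hosts.
    src_loc, dst_loc = host_store.get(src_mac), host_store.get(dst_mac)
    if src_loc is None or dst_loc is None:
        return None  # unknown host; a real controller might flood or wait for learning
    # 2) Choose a path on the global switch topology held locally.
    path = shortest_path(topology, src_loc["switch"], dst_loc["switch"])
    if path is None:
        return None
    # 3) One entry per switch: toward the next switch, or out the host's port at the end.
    entries = []
    for i, sw in enumerate(path):
        out = path[i + 1] if i + 1 < len(path) else f"port:{dst_loc['port']}"
        entries.append((sw, {"eth_src": src_mac, "eth_dst": dst_mac}, out))
    return entries
```

Only step 1 touches remote state; the path computation uses the fully replicated topology, which is why the paper keeps topology global and host data distributed.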

The controller manager, which manages the controller cluster, is responsible for configuring clusters, bootstrapping controller nodes, and providing a unified northbound interface (NBI) and consistent global data. The controller manager also controls the admission of controller nodes. When a controller node joins or leaves the cluster, the controller manager initiates cluster self-healing by adjusting the relationship between the affected switches and controller nodes. The controller manager node has a hot-standby mechanism to ensure high availability.
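One way the self-healing step could work is sketched in Python below, assuming each switch has one master and at most two slave controllers: when a controller leaves, a surviving slave of each affected switch is promoted, and each switch's slave list is topped up from the least-loaded remaining controllers. The data model, the promotion order, and the least-loaded heuristic are assumptions, not D-ZENIC's documented algorithm.

```python
from collections import Counter

MAX_SLAVES = 2

def node_load(assignment: dict, nodes: set) -> Counter:
    """Number of switches each live controller serves in any role."""
    c = Counter({n: 0 for n in nodes})
    for roles in assignment.values():
        for n in [roles["master"], *roles["slaves"]]:
            if n in c:
                c[n] += 1
    return c

def heal_after_leave(assignment: dict, nodes: set, gone: str) -> dict:
    """Rebuild switch -> {"master", "slaves"} after controller `gone` leaves."""
    nodes = nodes - {gone}
    healed = {}
    for sw, roles in assignment.items():
        slaves = [s for s in roles["slaves"] if s != gone]
        master = roles["master"]
        if master == gone:
            if not slaves:
                raise RuntimeError(f"{sw} has no surviving controller")
            master = slaves.pop(0)  # promote the first surviving slave
        healed[sw] = {"master": master, "slaves": slaves}
    # Top up each switch's slave list from the least-loaded controllers it lacks.
    for roles in healed.values():
        while len(roles["slaves"]) < MAX_SLAVES:
            used = {roles["master"], *roles["slaves"]}
            spare = [n for n in nodes if n not in used]
            if not spare:
                break
            counts = node_load(healed, nodes)
            roles["slaves"].append(min(spare, key=lambda n: (counts[n], n)))
    return healed
```

The input assignment is not mutated, so the manager could compare old and new mappings and notify only the switches whose controllers changed.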

In D-ZENIC, switches must conform to OpenFlow Switch Specification 1.3 [12]. Each switch establishes communication with one master controller node and at most two slave controller nodes, which are set by configuring the OpenFlow Configuration Point (OCP) on the controller manager. If the master controller fails, a slave requests to become the master. Upon notification from the controller manager, the switch then additionally connects to another available controller.
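The master/slave failover relies on OpenFlow 1.3 controller roles. The Python sketch below models the switch-side rules from the specification [12]: a controller that becomes master demotes any previous master to slave, and a master or slave request whose generation_id is older than the largest one seen (compared as a signed 64-bit difference) is rejected as stale. The role names follow the specification's OFPCR_ROLE_* constants; the class and method names are hypothetical.

```python
EQUAL, MASTER, SLAVE = "OFPCR_ROLE_EQUAL", "OFPCR_ROLE_MASTER", "OFPCR_ROLE_SLAVE"

def _signed64(x: int) -> int:
    # Interpret x modulo 2**64 as a signed 64-bit integer.
    x &= (1 << 64) - 1
    return x - (1 << 64) if x >= (1 << 63) else x

class SwitchRoleTable:
    """Switch-side view of controller roles (sketch of OpenFlow 1.3 semantics)."""

    def __init__(self):
        self.roles = {}            # controller id -> role
        self.generation_id = None  # largest generation_id accepted so far

    def connect(self, ctrl: str) -> None:
        self.roles[ctrl] = EQUAL   # new connections start in the EQUAL role

    def role_request(self, ctrl: str, role: str, generation_id: int) -> bool:
        """Apply a role request; return False if rejected as stale."""
        if role in (MASTER, SLAVE):
            if (self.generation_id is not None
                    and _signed64(generation_id - self.generation_id) < 0):
                return False       # the switch would reply with OFPRRFC_STALE
            self.generation_id = generation_id
        if role == MASTER:
            for other, r in self.roles.items():
                if r == MASTER and other != ctrl:
                    self.roles[other] = SLAVE  # at most one master at a time
        self.roles[ctrl] = role
        return True
```

For example, if controller c1 is master with generation 1 and slave c2 takes over with generation 2, a late request from the failed c1 still carrying generation 1 is refused, which prevents a split-brain master.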

In D-ZENIC, underlying communication between controller nodes is handled by the message middleware ZeroMQ [13]. ZeroMQ is a widely used open-source platform whose reliability has been proven in many commercial products.

3.4 DB Subsystem Based on DHT

▲Figure 2. D-ZENIC deployment.

In an SDN, a switch sends a packet-in message to the controller when a received packet does not match any forwarding flow entry. The controller then decides whether to install a flow entry on specified switches. This decision is made according to the current network state, which includes the network topology and the source/destination host locations. In D-ZENIC, network state is divided into global data and distributed data according to requirements for scalability, update frequency, and durability.

Global data, such as switches, ports, links, and network policies, changes slowly and has stringent durability requirements. Other data, such as installed flow entries and host locations, changes faster and has scalability requirements. However, requirements vary between applications and situations; e.g., link load changes frequently, yet it is important to the traffic engineering application.

The DHT-based DB subsystem is custom-built for D-ZENIC. It provides fully replicated storage for global data and distributed storage for dynamic data with local characteristics. For higher efficiency and lower latency, the basic put/get operation is synchronous for global data and asynchronous for distributed data. The subsystem provides the following basic primitives: 1) Put, for adding, modifying, or deleting an entry in the distributed store; 2) Get, to find an entry matching specified keys; 3) Subscribe, for applications to subscribe to change events about topology, hosts, flow entries, etc.; 4) Notify, to notify subscribers of any change to an entry; and 5) Publish, to push an entry to all controller nodes.
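The five primitives can be sketched as a single-node Python class. Writes to global data are applied synchronously and published to every peer, while writes to distributed data are queued and applied later by `flush()`, standing in for an asynchronous worker. The class, the key-prefix convention for telling global from distributed data, and the in-process peer list are assumptions; placement of distributed data on other nodes is omitted.

```python
from collections import defaultdict
from typing import Callable

GLOBAL_PREFIXES = ("switch/", "port/", "link/", "policy/")  # hypothetical convention

class DhtDbNode:
    """One controller node's view of the DB subsystem (illustrative sketch)."""

    def __init__(self, name: str):
        self.name = name
        self.store = {}
        self.peers = []                       # other DhtDbNode objects
        self.subscribers = defaultdict(list)  # key prefix -> callbacks
        self.pending = []                     # queued asynchronous writes

    @staticmethod
    def is_global(key: str) -> bool:
        return key.startswith(GLOBAL_PREFIXES)

    def put(self, key: str, value) -> None:
        """Add or modify an entry; a value of None deletes it."""
        if self.is_global(key):
            self._apply(key, value)            # synchronous: visible on return
            self.publish(key, value)
        else:
            self.pending.append((key, value))  # asynchronous: applied by flush()

    def flush(self) -> None:
        # Stand-in for a background worker draining asynchronous writes.
        while self.pending:
            self._apply(*self.pending.pop(0))

    def get(self, key: str):
        return self.store.get(key)

    def subscribe(self, prefix: str, callback: Callable) -> None:
        self.subscribers[prefix].append(callback)

    def notify(self, key: str, value) -> None:
        for prefix, callbacks in self.subscribers.items():
            if key.startswith(prefix):
                for cb in callbacks:
                    cb(key, value)

    def publish(self, key: str, value) -> None:
        # Push the entry to all other controller nodes.
        for peer in self.peers:
            peer._apply(key, value)

    def _apply(self, key: str, value) -> None:
        if value is None:
            self.store.pop(key, None)
        else:
            self.store[key] = value
        self.notify(key, value)
```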

3.5 Application Service Implementation

Applications deployed on top of D-ZENIC see a consistent network state, so their core code does not differ from that of applications on a centralized controller. For example, a joining switch and its port list are automatically inserted by a Put operation and then published to all controller nodes. A host entry is inserted by a Put operation and stored on three controller nodes selected by the DHT algorithm. Information about switches, links, and hosts is thus equally available to application services on every controller node.
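The two placement rules in this paragraph, switch entries published to every node and host entries stored on three DHT-selected nodes, are sketched below in Python. Rendezvous (highest-random-weight) hashing picks the three nodes here because it is compact; it is a stand-in for, not a description of, D-ZENIC's improved one-hop DHT. The per-node dict stores and the `kind` argument are hypothetical.

```python
import hashlib

REPLICAS = 3  # matches m = 3 used in the performance evaluation

def _weight(node: str, key: str) -> int:
    return int.from_bytes(hashlib.sha256(f"{node}|{key}".encode()).digest()[:8], "big")

def replica_nodes(nodes: list, key: str, m: int = REPLICAS) -> list:
    """Rendezvous hashing: the m nodes with the highest weight for this key."""
    return sorted(nodes, key=lambda n: _weight(n, key), reverse=True)[:m]

def place(stores: dict, kind: str, key: str, value) -> list:
    """Write an entry into per-node stores and return the nodes that hold it."""
    if kind == "switch":
        targets = list(stores)                      # Put, then Publish to all nodes
    else:
        targets = replica_nodes(list(stores), key)  # host entry: m selected nodes
    for node in targets:
        stores[node][key] = value
    return targets
```

Any node can recompute `replica_nodes` for a host key without contacting others, which is the property a one-hop scheme needs.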

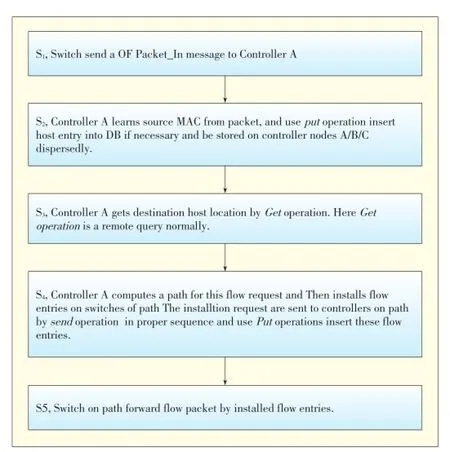

The steps for processing a basic L2 flow are shown in Fig. 3; note in particular the use of the DHT-based DB subsystem.

4 Performance Evaluation

In D-ZENIC, each switch connects to one master controller and two slave controllers simultaneously. According to the OpenFlow specification [12], the master controller has full control of the switch, while slave controllers receive only a subset of asynchronous messages. In D-ZENIC, however, a slave controller need not process asynchronous events from its connected switches, because it can obtain all switch states from the DHT-based DB. A flow request from a switch is handled locally by its connected master controller, so controller performance is affected by the cost of data synchronization across controller nodes. D-ZENIC therefore scales near-linearly as long as the distributed database on the controller nodes can keep up with this synchronization load.

▲Figure 3. Basic L2 flow implementation in D-ZENIC.

Consider a typical Internet data center [4] comprising ten thousand physical servers, with 20 VMs running on each server. Each top-of-rack (TOR) switch connects 20 servers, and each VM has 10 concurrent flows [14]. This leads to 200 concurrent flows per server, i.e., 2 million new flow installs per second across the data center. With per-source/destination flow entries, this amounts to about 200 flow entries on each software virtual switch and 4000 on each TOR switch. In practice, the number of flow entries will be about ten times higher because flow entries expire later than the flows they serve.
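The arithmetic in this paragraph can be checked with a few lines of Python; every input is a figure quoted above, and the factor of ten is the paper's estimate for flow entries outliving their flows.

```python
servers = 10_000
vms_per_server = 20
servers_per_tor = 20
flows_per_vm = 10
expiry_factor = 10  # entries outlive flows, per the estimate above

flows_per_server = vms_per_server * flows_per_vm    # entries on each virtual switch
flows_per_tor = flows_per_server * servers_per_tor  # per-source/destination entries on a TOR
total_flows = flows_per_server * servers            # concurrent flows in the data center

print(f"per virtual switch: {flows_per_server} (about {flows_per_server * expiry_factor} with expiry)")
print(f"per TOR switch:     {flows_per_tor} (about {flows_per_tor * expiry_factor} with expiry)")
print(f"data center total:  {total_flows:,}")
```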

Theoretically, the total memory consumed by a controller is bounded by $O(m(Hn + F) + TN)$, where $H$ is the number of host attributes, which include at least ARP and IP; $F$ is the number of flow entries on all switches connected to the controller; $T$ is the number of topology entries, which include the links and ports of all switches; $N$ is the number of controller nodes; $m$ is the number of data replicas in the DHT; and $n$ is the number of index tables for host attributes. Here, $m = 3$ and $n = 2$. The CPU processing consumed by a controller is bounded by $O(m(pHn + qF) + rTN)$, where $p$, $q$, and $r$ denote the update frequencies of host attributes, flow entries, and topology entries, respectively. Fig. 4 shows the estimated capacity of an individual controller node as a function of the controller cluster size. Assuming that an individual controller can handle 30,000 flow requests per second, the data center described above requires 67 controller nodes. If D-ZENIC is deployed, about 2350 extra operation requests per second are imposed on each controller node, i.e., the processing capacity of each controller node falls by 7.83%, and another four controller nodes are required. Performance could be improved by optimizing the distributed database model.
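A short Python check of the sizing figures, using the quoted inputs (2 million flow requests per second, 30,000 requests per second per controller, about 2350 extra operations per second per node). The `state_per_node` helper only evaluates the expression inside the memory bound for caller-supplied values, treating every term as one unit of state; that unit-cost reading is an assumption, since a big-O bound hides constant factors.

```python
import math

flow_requests_per_s = 2_000_000
capacity_per_node = 30_000  # flow requests/s one controller can handle
extra_ops_per_node = 2_350  # DB synchronization overhead per node, per second

nodes_needed = math.ceil(flow_requests_per_s / capacity_per_node)
capacity_drop = extra_ops_per_node / capacity_per_node

def state_per_node(m: int, H: int, n: int, F: int, T: int, N: int) -> int:
    """Evaluate m(Hn + F) + TN: replicated host/flow state plus fully replicated topology."""
    return m * (H * n + F) + T * N

print(nodes_needed)            # 67
print(f"{capacity_drop:.2%}")  # 7.83%
```

The $TN$ term is the cost of full replication: topology state grows with cluster size on every node, whereas host and flow state grows only with the replica count $m$.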

▲Figure 4. Capability of a single controller node according to the scale of the controller cluster.

5 Conclusion

Central control and flexibility make OpenFlow a popular choice for many networking scenarios today. However, coordination within the controller cluster can be a challenge in a large-scale network. D-ZENIC is a commercial controller system with near-linear performance scalability and distributed deployment. With D-ZENIC, a programmer does not need to worry about the embedded distribution mechanism, which is an advantage in many deployments. We plan to build a large integrated D-ZENIC testbed with an IaaS platform such as OpenStack, which will allow us to thoroughly evaluate the scalability, reliability, and performance of D-ZENIC.

[1] N. McKeown et al., “OpenFlow: enabling innovation in campus networks,” ACM SIGCOMM Computer Communication Review, vol. 38, no. 2, pp. 69-74, 2008. doi: 10.1145/1355734.1355746.

[2] Open Networking Foundation [Online]. Available: https://www.opennetworking.org/

[3] M. Casado, M. J. Freedman, J. Pettit, et al., “Ethane: taking control of the enterprise,” ACM SIGCOMM Computer Communication Review, vol. 37, no. 4, pp. 1-12, 2007. doi: 10.1145/1282427.1282382.

[4] A. Tavakoli, M. Casado, T. Koponen, et al., Applying NOX to the Datacenter [Online]. Available: http://www.cs.duke.edu/courses/current/compsci590.4/838-CloudPapers/hotnets2009-final103.pdf

[5] A. Tootoonchian and Y. Ganjali, HyperFlow: a distributed control plane for OpenFlow [Online]. Available: https://www.usenix.org/legacy/event/inmwren10/tech/full_papers/Tootoonchian.pdf

[6] J. Stribling et al., “Flexible, wide-area storage for distributed systems with WheelFS,” NSDI, vol. 9, pp. 43-58, 2009.

[7] T. Koponen et al., “Onix: a distributed control platform for large-scale production networks,” OSDI, vol. 10, pp. 1-6, 2010.

[8] P. Hunt, M. Konar, F. P. Junqueira, et al., “ZooKeeper: wait-free coordination for internet-scale systems,” in Proc. 2010 USENIX Annual Technical Conference, Boston, MA, USA, 2010.

[9] OpenDaylight Project [Online]. Available: http://www.opendaylight.org/

[10] F. Marchioni and M. Surtani, Infinispan Data Grid Platform [Online]. Available: http://www.packtpub.com/infinispan-data-grid-platform/book

[11] J. Wang, “Software-defined networks: implementation and key technology,” ZTE Technology Journal, vol. 19, no. 5, pp. 38-41, Oct. 2013.

[12] OpenFlow Switch Specification (Version 1.3.0) [Online]. Available: https://www.opennetworking.org/images/stories/downloads/sdn-resources/onf-specifications/openflow/openflow-spec-v1.3.0.pdf

[13] P. Hintjens, ZeroMQ: Messaging for Many Applications [Online]. Available: http://www.pdfbooksplanet.org/development-and-programming/587-zeromq-messaging-for-many-applications.html

[14] A. Greenberg, J. R. Hamilton, N. Jain, et al., “VL2: a scalable and flexible data center network,” ACM SIGCOMM Computer Communication Review (SIGCOMM '09), vol. 39, no. 4, pp. 51-62, 2009. doi: 10.1145/1594977.1592576.

Manuscript received: 2014-03-10

Biographies

Yongsheng Hu (hu.yongsheng@zte.com.cn) received his PhD degree from Nanjing University of Science and Technology in 2008. He is a senior engineer in the Central R&D Institute, ZTE Corporation. His current research interests include distributed systems, SDN, and cloud computing.

Tian Tian (tian.tian1@zte.com.cn) received her Dipl.-Ing. degree in electronic and information engineering from Technical University Dortmund, Germany, in 2008. She is a senior standardization and pre-research engineer in the Central R&D Institute, ZTE Corporation. Her current research interests include core network evolution and network function virtualization.

Jun Wang (wang.jun17@zte.com.cn) graduated from Nanjing University of Aeronautics and Astronautics, China, with an MS degree in 2006. He is an architect in the System Architecture Department of the Central R&D Institute, ZTE Corporation. His research interests include core network evolution, distributed systems, and data center networking.
