Physics informed memory networks for solving PDEs: implementation and applications

Jiuyun Sun, Huanhe Dong and Yong Fang

College of Mathematics and Systems Science, Shandong University of Science and Technology, Qingdao 266590, China

Abstract With the advent of physics informed neural networks (PINNs), deep learning has gained interest for solving nonlinear partial differential equations (PDEs) in recent years. In this paper, physics informed memory networks (PIMNs) are proposed as a new approach to solving PDEs by using the physical laws and dynamic behavior of PDEs. Unlike the fully connected structure of the PINNs, the PIMNs construct the long-term dependence of the dynamic behavior with the help of the long short-term memory network. Meanwhile, the PDEs residuals are approximated using difference schemes in the form of convolution filters, which avoids information loss in the neighborhood of the sampling points. Finally, the performance of the PIMNs is assessed by solving the KdV equation and the nonlinear Schrödinger equation, and the effects of difference schemes, boundary conditions, network structure and mesh size on the solutions are discussed. Experiments show that the PIMNs are insensitive to boundary conditions and have excellent solution accuracy even with only the initial conditions.

Keywords: nonlinear partial differential equations, physics informed memory networks, physics informed neural networks, numerical solution

1. Introduction

Partial differential equations (PDEs) are widely used to describe nonlinear phenomena in nature [1–3]. Solving PDEs helps in understanding the physical laws behind these nonlinear phenomena [4–7]. However, analytical solutions of PDEs are often very difficult to obtain [8]. Accordingly, numerical methods have been proposed and have promoted the study of PDEs [9, 10]. Due to the large computational demands of these methods, accuracy and efficiency in solving PDEs are difficult to achieve simultaneously.

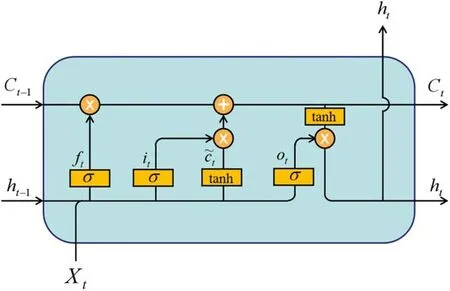

Figure 1. The general structure of LSTM.

In recent years, deep learning methods have been extended from natural language recognition and machine translation to scientific computing, and have provided new ideas for solving PDEs [11–13]. According to the universal approximation theorem, a multilayered feed forward network containing a sufficient number of hidden neurons can approximate any continuous function with arbitrary accuracy [14, 15], which provides the theoretical support for deep learning to solve PDEs. At this stage, there are two types of deep learning methods for solving PDEs [16]. The first type keeps the same learning approach as the original deep learning methods. The basic idea is to construct neural operators by learning mappings from function parameter dependence to solutions, such as DeepONet, the Fourier neural operator and so on [17, 18]. This type needs to be trained only once to handle different initial value problems, but requires a large amount of high-fidelity data. The second type combines deep learning with physical laws. In the second type, the physical laws and a small amount of initial and boundary data of the PDEs are used to constrain the network training instead of large amounts of labeled data. The representative is physics informed neural networks (PINNs), which can solve both nonlinear PDEs and the corresponding inverse problems [19]. Based on the original PINNs, many improved versions were proposed [20–26]. Ameya et al set a scalable hyper-parameter in the activation function and proposed an adaptive activation function with better learning capabilities and convergence speed [22]. Lin and Chen devised a two-stage PINNs method based on conserved quantities, which better exploits the properties of PDEs [23]. In addition to the physical laws, the variational residual of PDEs is also considered as a loss term, such as in the deep Ritz method, the deep Galerkin method and so on [27–29]. With the intensive study of the PINNs and their variants, these algorithms have been applied to many fields, such as biomedical problems [30], continuum micromechanics [31] and stiff chemical kinetics [32]. Inspired by the PINNs, some deep learning solvers with non-fully connected structures have been proposed. Zhu et al constructed a physics-constrained convolutional encoding-decoding structure for stochastic PDEs [33]. Based on the work of Zhu et al, physics-informed convolutional-recurrent networks combined with the long short-term memory network (LSTM) were proposed, in which the initial and boundary conditions were hard-encoded into the network [34]. Mohan et al used an extended convolutional LSTM to model turbulence [35]. Based on this, Stevens et al exploited the temporal structure [36]. In general, existing deep learning solvers with LSTM structures can approximate the dynamic behavior of the solution without any labeled data, but their implementations rely on feature extraction by convolutional structures and are complex.

The aim of this paper is to build a flexible deep learning solver based on physical laws and temporal structures. Therefore, physics informed memory networks (PIMNs) based on the LSTM framework are proposed as a new method for solving PDEs. In the PIMNs, the differential operator is approximated using difference schemes rather than automatic differentiation (AD). AD is flexible and ingenious, but loses information about the neighbors of the sampling points [37]. The difference schemes are implemented as convolution filters, and the convolution filters are only used to calculate the physical residuals and do not change with network training. This is different from existing solvers with convolutional structures. Numerical experiments on the KdV equation and the nonlinear Schrödinger equation show that the PIMNs can achieve excellent accuracy and are insensitive to the boundary conditions.

The rest of the paper is organized as follows. In section 2, the general principle and network architectures of the PIMNs are elaborated. In section 3, two sets of numerical experiments are given and the effects of various influencing factors on the learned solution are discussed. The conclusion is given in the last section.

2. Physics informed memory networks

2.1. Problem setup

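In general, the form of PDEs that can be solved by physics informed deep learning is as follows:

$$
\begin{aligned}
& u_t + \mathcal{N}[u] = 0, \quad x \in [x_0, x_1],\ t \in [0, T], \\
& u(x, 0) = u_0(x), \\
& u(x_0, t) = u_1(t), \quad u(x_1, t) = u_2(t),
\end{aligned} \tag{1}
$$

where $u(x,t)$ is the solution of the PDEs and $\mathcal{N}[u]$ is a nonlinear differential operator. $u_0(x)$ and $u_1(t)$, $u_2(t)$ are the initial and boundary functions, respectively. The basic idea of physics informed deep learning is to approximate the solution $u(x,t)$ through the constraints of the physical laws [15]. Therefore, the PDEs residual $f(x,t)$ is defined as

$$
f(x,t) := u_t + \mathcal{N}[u]. \tag{2}
$$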

The keys of the PIMNs are the calculation of physical residuals f(x,t) using the difference schemes and the establishment of the corresponding long-term dependence.

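2.2. Physics informed memory networks

In this part, the framework and principles of the PIMNs are given. As shown in figure 2, the basic unit of the PIMNs is the LSTM. The LSTM inherits the capability of the recurrent neural network for long sequence data and can avoid the problem of vanishing gradients [38].

The structure of the LSTM unit is shown in figure 1. $X_t$, $h_t$, $c_t$, $\tilde{c}_t$, $f_t$, $i_t$, $o_t$ are the input, the hidden state, the cell state, the internal cell state, the forget gate, the input gate and the output gate, respectively. $f_t$ and $i_t$ control the information forgotten from and added to $c_t$. $\tilde{c}_t$ is the information added to $c_t$. The output is determined jointly by $o_t$ and $c_t$. The mathematical expression of the LSTM is as follows:

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, X_t] + b_f\right), \\
i_t &= \sigma\left(W_i [h_{t-1}, X_t] + b_i\right), \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, X_t] + b_c\right), \\
c_t &= f_t \circ c_{t-1} + i_t \circ \tilde{c}_t, \\
o_t &= \sigma\left(W_o [h_{t-1}, X_t] + b_o\right), \\
h_t &= o_t \circ \tanh(c_t).
\end{aligned}
$$

Here, $W$ and $b$ are the network parameters, $\sigma$ is the sigmoid function, and $\circ$ represents the Hadamard product.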
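As an illustration of the gate equations, the following is a minimal NumPy sketch of a single LSTM step. The function name `lstm_step`, the stacking of the four gate weight matrices into one array `W`, and the random initialization are assumptions made for this sketch; the paper's own implementation is based on TensorFlow and may organize the parameters differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step; W has shape (4*H, D+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f_t = sigmoid(z[0:H])                 # forget gate
    i_t = sigmoid(z[H:2 * H])             # input gate
    c_tilde = np.tanh(z[2 * H:3 * H])     # internal cell state
    o_t = sigmoid(z[3 * H:4 * H])         # output gate
    c_t = f_t * c_prev + i_t * c_tilde    # element-wise (Hadamard) products
    h_t = o_t * np.tanh(c_t)              # output from o_t and c_t
    return h_t, c_t

# Hypothetical sizes: the input is a coordinate pair (x, t), 30 hidden nodes.
rng = np.random.default_rng(0)
D, H = 2, 30
W = rng.normal(scale=0.1, size=(4 * H, D + H))
b = np.zeros(4 * H)

# Feed the time series of one spatial point x = 0.5 through the cell.
h, c = np.zeros(H), np.zeros(H)
for t in np.linspace(0.0, 1.0, 5):
    h, c = lstm_step(np.array([0.5, t]), h, c, W, b)
```

The hidden state `h` is carried from one time level to the next, which is the mechanism that links all moments of the same spatial point.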

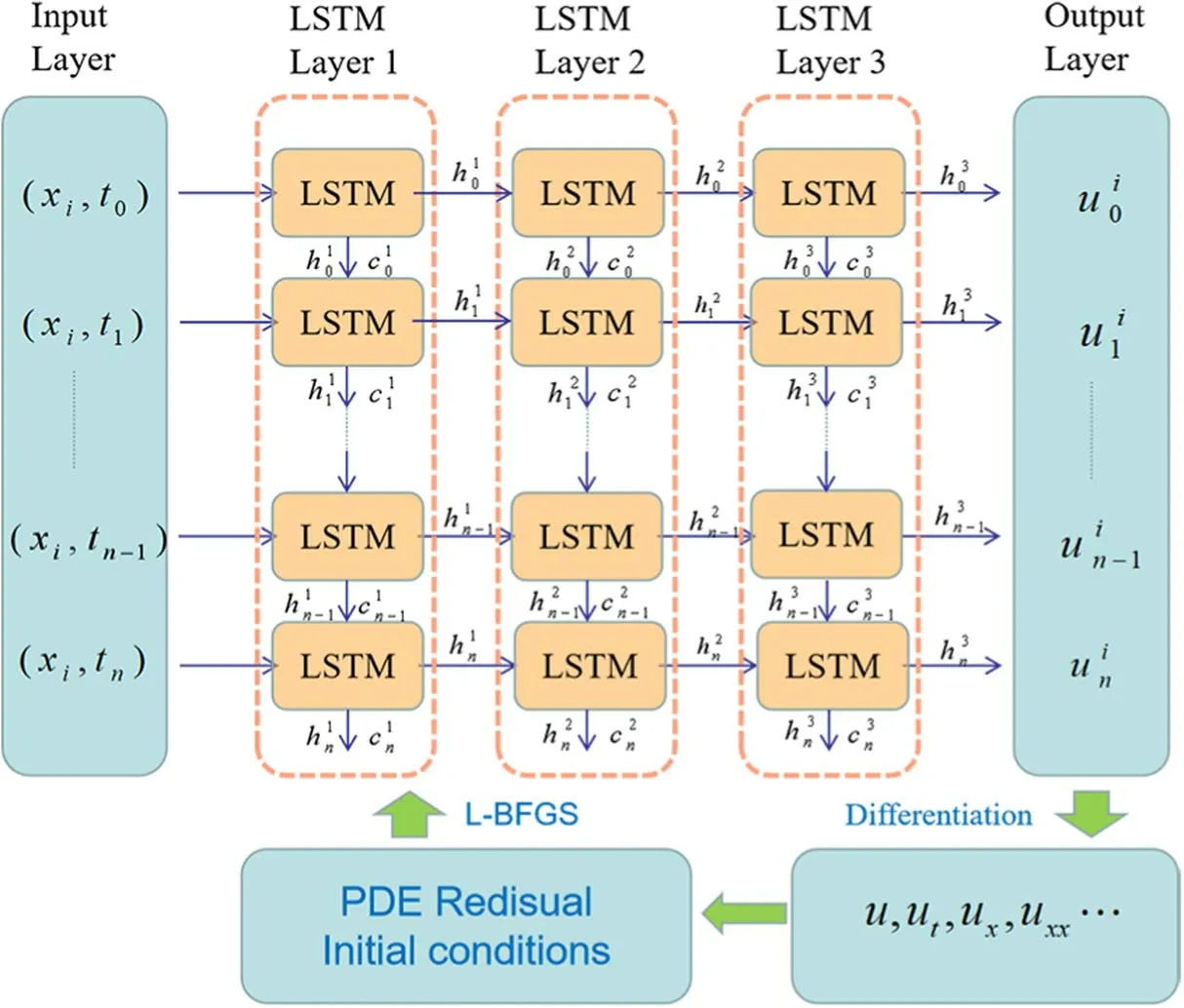

Figure 2. The general structure of the PIMNs.

In the PIMNs, the subscripts and superscripts of $h_t^l$ and $c_t^l$ refer to the position in the time series and the layer, respectively. The LSTM unit passes the output $h_t^l$ of the current moment $t$ to the next moment $t+1$, which links all moments of the same spatial point. In addition, $h_t^l$ is also used as the input at moment $t$ for the next LSTM layer, which strengthens the connection between different moments. It should be noted that the number of hidden nodes of the last LSTM layer is fixed. In fact, we use the last LSTM layer to control the dimensionality of the output.

As shown in figure 2, the inputs to the PIMNs are the coordinates of the grid points in the region $[x_0,x_1]\times[0,T]$. The region $[x_0,x_1]\times[0,T]$ is meshed into $(m+1)\times(n+1)$ grid points. Each set of inputs to the PIMNs consists of the coordinate values $(x_i,t_0),(x_i,t_1),\ldots,(x_i,t_n)$ at the same location. The outputs are the corresponding predicted values of the solution at these points (corresponding to each row of the left panel of figure 3). Based on the output of the PIMNs, the loss function can be constructed. The loss function includes three components: the initial loss, the boundary loss and the PDEs residual loss.

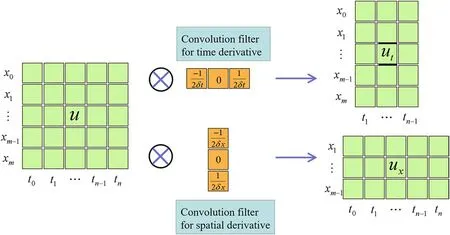

Here, δx and δt represent the spatial and temporal intervals, respectively. To accelerate the training, the difference schemes are implemented by convolution operations. Taking second-order central difference schemes as an example, the convolution filters are:
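A minimal sketch of such filters is given here, assuming the standard second-order central stencil $[-1, 0, 1]/(2\delta)$ and a grid layout in which rows index space and columns index time. The helper `correlate_valid`, the filter names `K_x` and `K_t`, and the test field are hypothetical and serve only to show the mechanism; the filter layout used in the paper may differ.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate_valid(U, K):
    """Cross-correlate U with filter K without padding ('valid' mode)."""
    windows = sliding_window_view(U, K.shape)
    return np.einsum("ijkl,kl->ij", windows, K)

# Grid with U[i, j] = u(x_i, t_j); the test field is u = sin(x - t).
x = np.linspace(-10.0, 10.0, 201)
t = np.linspace(0.0, 1.0, 51)
dx, dt = x[1] - x[0], t[1] - t[0]
X, T = np.meshgrid(x, t, indexing="ij")
U = np.sin(X - T)

# Fixed (non-trainable) central difference filters.
K_x = np.array([[-1.0], [0.0], [1.0]]) / (2.0 * dx)   # acts along space
K_t = np.array([[-1.0, 0.0, 1.0]]) / (2.0 * dt)       # acts along time

U_x = correlate_valid(U, K_x)   # defined for x_1 .. x_{m-1}
U_t = correlate_valid(U, K_t)   # defined for t_1 .. t_{n-1}
```

Because no padding is used, each output loses one point at both ends of the differenced axis. The operation is a cross-correlation (no filter flip), which is also what deep learning frameworks usually call a convolution.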

Figure 3 illustrates the computation of $u_x$ and $u_t$. Higher order derivatives can be obtained by performing a difference operation on $u_x$ and $u_t$, such as $u_{xx}$, which is obtained by applying the spatial difference to $u_x$.
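The repeated differencing can be sketched as follows. This is an illustration under the assumption that the same second-order central difference is simply applied again to the previous result; the helper `d_dx` and the test field are hypothetical.

```python
import numpy as np

def d_dx(U, dx):
    """Second-order central difference along the spatial axis, no padding."""
    return (U[2:, :] - U[:-2, :]) / (2.0 * dx)

x = np.linspace(-10.0, 10.0, 401)
t = np.linspace(0.0, 1.0, 11)
dx = x[1] - x[0]
X, T = np.meshgrid(x, t, indexing="ij")
U = np.sin(X - T)            # test field with known derivatives

U_x = d_dx(U, dx)            # loses 1 point at each spatial end
U_xx = d_dx(U_x, dx)         # loses 2 points at each spatial end
U_xxx = d_dx(U_xx, dx)       # loses 3 points at each spatial end
```

Each additional difference removes one more layer of points next to the spatial boundaries, so the region where a residual containing $u_{xxx}$ can be evaluated is narrower than the region for $u_x$.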

Figure 3. The convolution process of difference schemes.

As shown in figure 3, no padding is applied, in order to avoid unnecessary errors. As a result, $u_t$ at $t=t_0$, $t=t_n$ and $u_x$ at $x=x_0$, $x=x_m$ cannot be computed (in terms of the second-order central difference). In other words, $f_j^i$ cannot be computed at $t=t_0$ (i.e. the initial condition) because the solution values at the preceding time level are not available. A similar problem arises with the boundary conditions. Therefore, $f_j^i$ at $t=t_0$, $x=x_0$ and $x=x_m$ will not be used to compute the loss. When higher order derivative terms are included in $f_j^i$, the region in which losses are not computed is correspondingly enlarged. In fact, since the spatial and temporal intervals we use are usually small and the boundaries of the domain can be constrained by the initial and boundary conditions, the numerical solutions of the PDEs can be approximated even without computing $f_j^i$ near the boundaries of the domain. Moreover, the boundary conditions are not necessary, which will be tested in the numerical experiments. Correspondingly, the total loss excludes the boundary loss when the boundary condition is missing. In addition, the spatial derivatives at $t_0$ are constructed from the known initial conditions. Therefore, besides being the target of the initial loss, the initial conditions are also used indirectly in the PDEs residuals.
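The way the loss terms fit together can be sketched as below. This is only an illustration: the function `pimn_style_loss`, the simple model equation $u_t + u_x = 0$ and the equal weighting of the terms are assumptions of this sketch, not the loss used in the paper. It shows the residual being evaluated on interior points only and the boundary term being dropped when no boundary data are given.

```python
import numpy as np

def pimn_style_loss(U, u0, dx, dt, left=None, right=None):
    """Initial loss + residual loss, plus boundary loss only if data are given.

    U[i, j] approximates u(x_i, t_j); the illustrative PDE is u_t + u_x = 0.
    """
    mse_i = np.mean((U[:, 0] - u0) ** 2)
    # Central differences without padding: interior points only.
    u_x = (U[2:, 1:-1] - U[:-2, 1:-1]) / (2.0 * dx)
    u_t = (U[1:-1, 2:] - U[1:-1, :-2]) / (2.0 * dt)
    mse_f = np.mean((u_t + u_x) ** 2)
    total = mse_i + mse_f
    if left is not None and right is not None:
        mse_b = np.mean((U[0, :] - left) ** 2) + np.mean((U[-1, :] - right) ** 2)
        total += mse_b
    return total

x = np.linspace(-10.0, 10.0, 201)
t = np.linspace(0.0, 1.0, 51)
dx, dt = x[1] - x[0], t[1] - t[0]
X, T = np.meshgrid(x, t, indexing="ij")
U = np.sin(X - T)   # exact solution of the illustrative PDE

loss_ic_only = pimn_style_loss(U, np.sin(x), dx, dt)
loss_with_bc = pimn_style_loss(U, np.sin(x), dx, dt, left=U[0], right=U[-1])
```

For an exact solution both losses are close to zero and differ only by the (vanishing) boundary term, which mirrors the observation that the boundary loss is optional.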

3. Numerical experiments

In this section, the PIMNs are applied to solve the KdV equation and the nonlinear Schrödinger equation. Specifically, the effects of different difference schemes on the solving ability of the PIMNs are discussed, and the network structure and mesh size that minimize the error are investigated. Moreover, the performance of the PIMNs and the PINNs is compared.

In the numerical experiments, the implementation of the PIMNs is based on Python 3.7 and TensorFlow 1.15. The loss function is chosen as the mean squared loss function, and L-BFGS is used to optimize the loss function. All numerical examples reported here are run on a Lenovo Y7000P 2020H computer with a 2.60 GHz 6-core Intel(R) Core(TM) i7-10750H CPU and 16 GB memory. In addition, the relative $L_2$ error is used to measure the difference between the predicted values $\hat{u}$ and the true values $u$ and is calculated as follows:

$$
\text{error} = \frac{\sqrt{\sum_{i}\sum_{j} \left|\hat{u}(x_i,t_j) - u(x_i,t_j)\right|^2}}{\sqrt{\sum_{i}\sum_{j} \left|u(x_i,t_j)\right|^2}}.
$$
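The relative $L_2$ error can be computed with a few lines of NumPy. The function name `relative_l2_error` and the sample values are hypothetical and only illustrate the metric.

```python
import numpy as np

def relative_l2_error(u_pred, u_true):
    """Relative L2 error between predicted and true values on the grid."""
    diff = np.ravel(u_pred) - np.ravel(u_true)
    return np.linalg.norm(diff) / np.linalg.norm(np.ravel(u_true))

# A prediction that is uniformly 1% too large has a relative error of 0.01.
u_true = np.array([3.0, 4.0])
u_pred = 1.01 * u_true
err = relative_l2_error(u_pred, u_true)
```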

3.1. Case 1: The KdV equation

The KdV equation is a classical governing model for the propagation of shallow water waves and has important applications in many areas of physics, such as fluid dynamics and plasma physics [39–41]. In general, the KdV equation is given by

where q0(x) is an initial function, and q1(t) and q2(t) are boundary functions. x0 and x1 are arbitrary real constants. The PDEs residual f(x,t) corresponding to the KdV equation is:

The existence theory of equation (14) can be found in [42]. Here, the traveling wave solution is simulated:

Taking [x0, x1] and [t0, t1] as [−10, 10] and [0, 1], the corresponding initial and boundary functions are obtained.
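For orientation, the sketch below checks a traveling wave against a KdV residual built from central differences on the region [−10, 10] × [0, 1]. It assumes the common normalization $q_t + 6qq_x + q_{xxx} = 0$ with the one-soliton solution $q = \frac{c}{2}\,\mathrm{sech}^2\!\left(\frac{\sqrt{c}}{2}(x - ct)\right)$ and the illustrative wave speed $c = 1$. Both the normalization and the value of $c$ are assumptions of this sketch and may differ from the form used in the paper.

```python
import numpy as np

def kdv_soliton(x, t, c=1.0):
    """One-soliton solution of q_t + 6 q q_x + q_xxx = 0 (assumed form)."""
    return 0.5 * c / np.cosh(0.5 * np.sqrt(c) * (x - c * t)) ** 2

x = np.linspace(-10.0, 10.0, 1001)
t = np.linspace(0.0, 1.0, 101)
dx, dt = x[1] - x[0], t[1] - t[0]
X, T = np.meshgrid(x, t, indexing="ij")
Q = kdv_soliton(X, T)        # Q[i, j] = q(x_i, t_j)

def d_dx(A):
    """Second-order central difference along space, no padding."""
    return (A[2:, :] - A[:-2, :]) / (2.0 * dx)

Q_t = (Q[:, 2:] - Q[:, :-2]) / (2.0 * dt)   # time indices 1 .. n-1
Q_x = d_dx(Q)                                # space indices 1 .. m-1
Q_xxx = d_dx(d_dx(Q_x))                      # space indices 3 .. m-3

# Residual on the common interior region (space 3 .. m-3, time 1 .. n-1).
f = Q_t[3:-3, :] + 6.0 * Q[3:-3, 1:-1] * Q_x[2:-2, 1:-1] + Q_xxx[:, 1:-1]
max_residual = np.max(np.abs(f))
```

The residual is limited only by the truncation error of the difference schemes, and it can only be evaluated three points away from each spatial boundary because of the third derivative.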

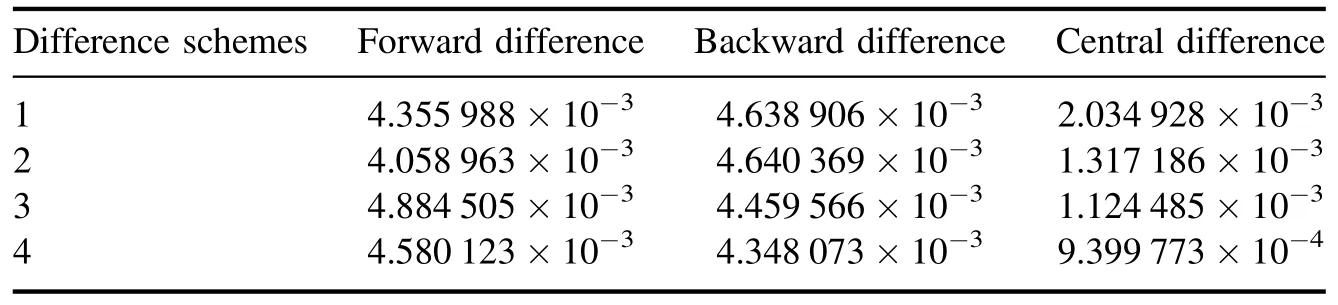

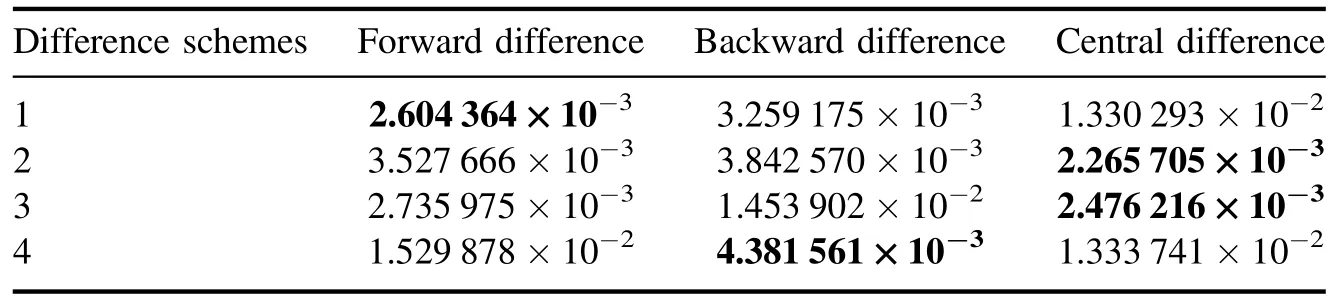

3.1.1. Comparison of different difference schemes for solving the KdV equation. In section 2.2, we constructed the PDEs residuals by the second-order central difference. However, it is important to discuss which difference scheme is suitable for constructing the time derivatives that establish the long-term dependence of PDEs. Here, the forward difference, backward difference and central difference are used to compute the temporal derivatives, respectively. The space [−10, 10] is divided into 1000 points and the time [0, 1] is divided into 100 points. Two LSTM layers are used, with 30 and 1 nodes, respectively. Since the initialization of the network parameters is based on random number seeds, 4 sets of experiments based on different random number seeds (i.e. 1, 2, 3, 4 in table 1) were set up in order to avoid the influence of chance. The relative errors for the four sets of numerical experiments are given in table 1.

From the data in table 1, the PIMNs can solve the KdV equation with very high accuracy for all three difference methods. The bolded data are the lowest relative L2 errors produced with the same random seed. It can be clearly seen that the relative L2 errors produced by the central difference are significantly lower than those of the forward and backward differences when using the same network architecture and training data. This indicates that the temporal structure constructed by the central difference is most suitable for solving the KdV equation.
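One contributing factor may be the truncation order of the schemes: the forward and backward differences are first-order accurate in δt, while the central difference is second-order accurate. The sketch below compares the three schemes on a known function. The test function is an assumption chosen for illustration, and the numbers say nothing about the trained networks in table 1.

```python
import numpy as np

t = np.linspace(0.0, 1.0, 101)
dt = t[1] - t[0]
u = np.sin(2.0 * np.pi * t)
du_exact = 2.0 * np.pi * np.cos(2.0 * np.pi * t)

quotient = (u[1:] - u[:-1]) / dt
forward = quotient                        # approximates u'(t_j), j = 0 .. n-1
backward = quotient                       # approximates u'(t_j), j = 1 .. n
central = (u[2:] - u[:-2]) / (2.0 * dt)   # approximates u'(t_j), j = 1 .. n-1

err_forward = np.max(np.abs(forward - du_exact[:-1]))
err_backward = np.max(np.abs(backward - du_exact[1:]))
err_central = np.max(np.abs(central - du_exact[1:-1]))
```

On this grid the central difference error is more than an order of magnitude below the one-sided errors.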

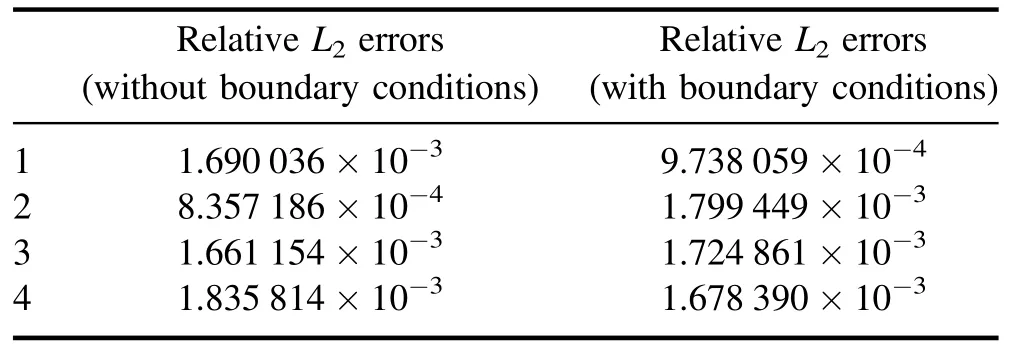

3.1.2. The effect of boundary conditions on solving the KdV equation. In the PIMNs, the boundary conditions are not necessary due to the establishment of long-term dependencies. Next, we analyze the influence of boundary conditions on the training and results. The experimental setup is consistent with the previous subsection, and four sets of experiments with different random number seeds were again set up.

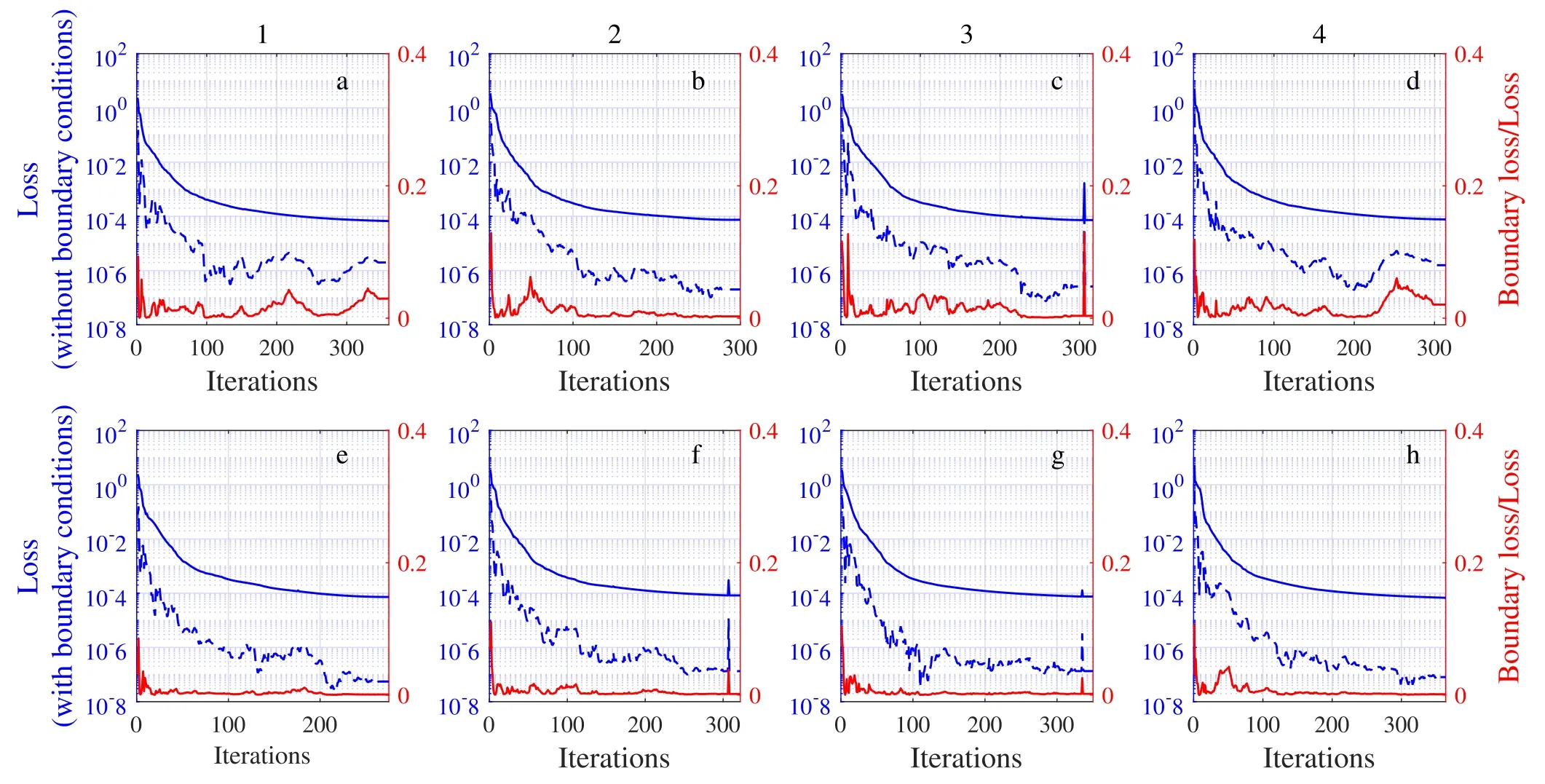

Figure 4 shows the loss curves, where subplots a–d and e–h correspond to the cases without boundary conditions and with boundary conditions, respectively. From figure 4, it can be seen that the total loss with and without boundary conditions converges to the same level. Although the boundary loss without boundary conditions is more curved than the boundary loss with boundary conditions, the difference is not significant. Meanwhile, the red line shows that the influence of the boundary loss is limited. In terms of the number of iterations, the boundary conditions do not accelerate the convergence of the network, but rather lead to a certain increase in iterations.

Figure 4. The loss curve: a–d are the loss curves without boundary conditions and e–h are the loss curves with boundary conditions. The blue solid line and the blue dashed line are MSE and MSE_B (the left y-axis), respectively, and the red line is the ratio of MSE_B to MSE (the right y-axis).

Table 2 gives the relative errors for the four sets of numerical experiments with and without boundary condition losses corresponding to figure 4. These two cases have very similar errors, so the accuracy of the solution is not noticeably affected by the boundary conditions. In general, since the influence of boundary conditions on both the training process and the relative L2 errors is limited, the PIMNs are insensitive to the boundary conditions. This does not mean that the boundary conditions are unimportant for solving PDEs; it only shows that the PIMNs can solve initial value problems for PDEs.

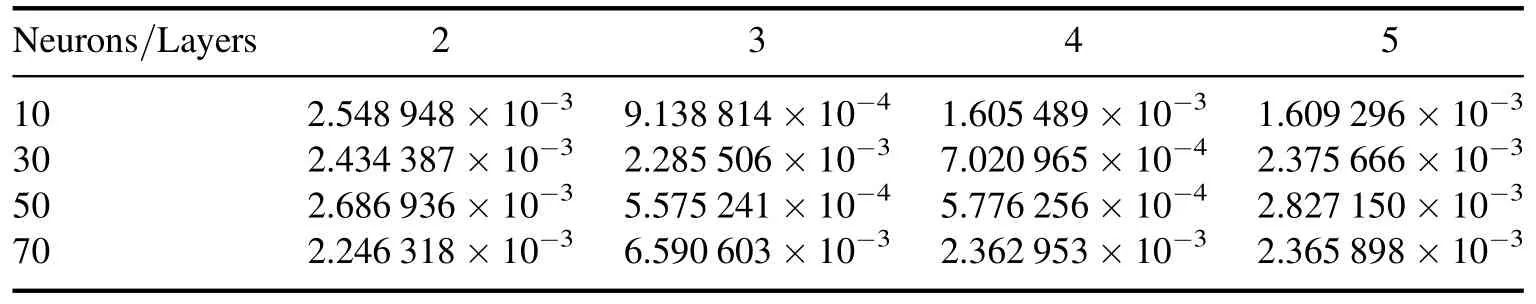

3.1.3. The effect of network structure on solving the KdV equation. In this part, based on the same training data, we investigate the effect of network structure on solving the KdV equation by setting different numbers of LSTM layers and hidden nodes. Complex networks tend to be more expressive, but also more difficult to train. The relative L2 errors for different network structures are given in table 3. The number of hidden nodes listed there does not include the last LSTM layer (the last LSTM layer has 1 hidden node).

Table 3 shows the experimental results for different network structures. When the number of LSTM layers and hidden nodes increases, the error tends to decrease. Although not all data fit this trend, it can still be argued that a more complex network structure helps to increase accuracy.

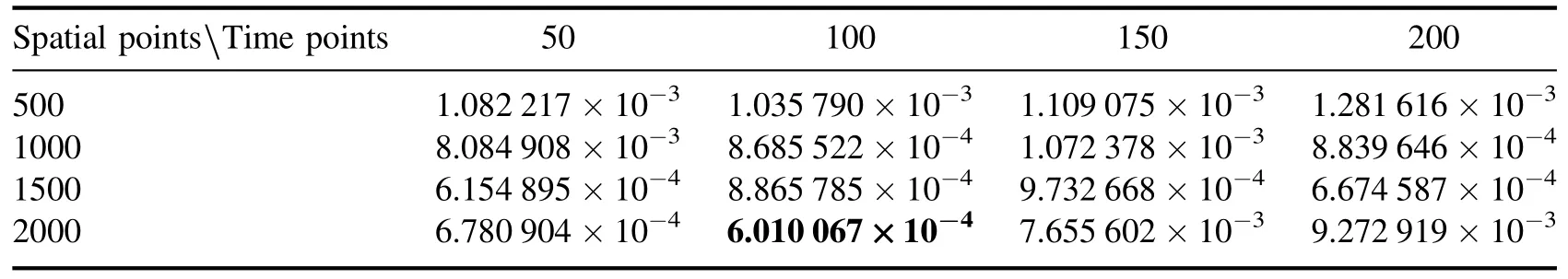

3.1.4. The effect of mesh size on solving the KdV equation. In this part, the impact of mesh size on the errors is studied when the region is fixed as [−10, 10] × [0, 1]. More temporal and spatial points mean smaller temporal and spatial steps and a finer grid. In general, a finer grid produces a smaller truncation error in the difference schemes, which should give numerical solutions with smaller errors. However, a fine grid also means a large amount of training data, which makes the model more demanding to train. The number of spatial points is set to 500, 1000, 1500 and 2000. The number of time points is set to 50, 100, 150 and 200. The network structure is chosen with 3 LSTM layers, and the first 2 layers have 50 hidden nodes.

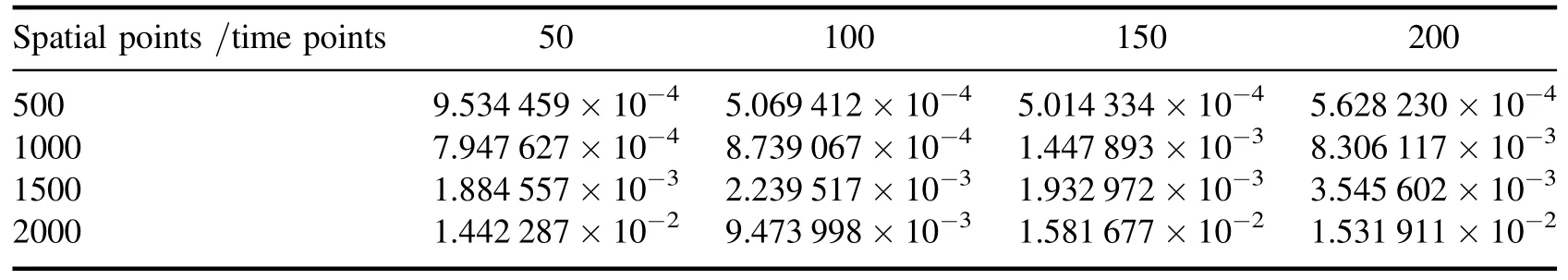

The errors at different mesh sizes are given in table 4. It can be observed that, for every number of time points, the error is smallest when the number of spatial points is 500. However, changing the number of time points does not have a regular effect on the error. To sum up, excessively increasing the number of grid points and decreasing the grid size does not improve the accuracy of the solution for a fixed region.

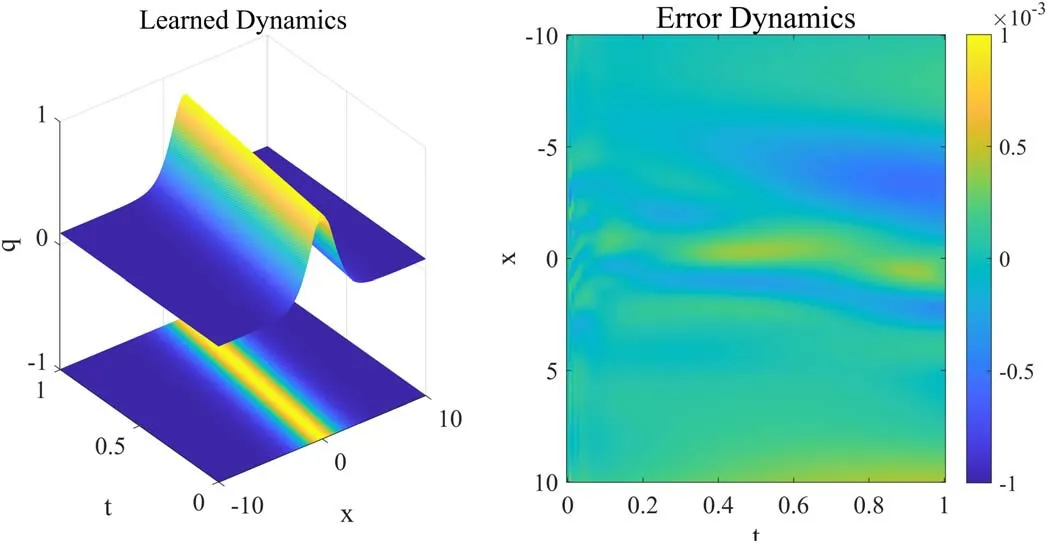

Figure 5 shows the dynamic behavior of the learned solution and the error density diagram when there are 150 time points and 500 spatial points. The number of iterations is 622 and the training time is 91 s. The error density diagram shows that the error remains very low and does not change significantly over time. Therefore, the PIMNs can still solve the KdV equation with high accuracy when only the initial conditions are available.

Figure 5. The traveling wave solution of the KdV equation: the dynamic behavior of the learned solution and the error density diagram.

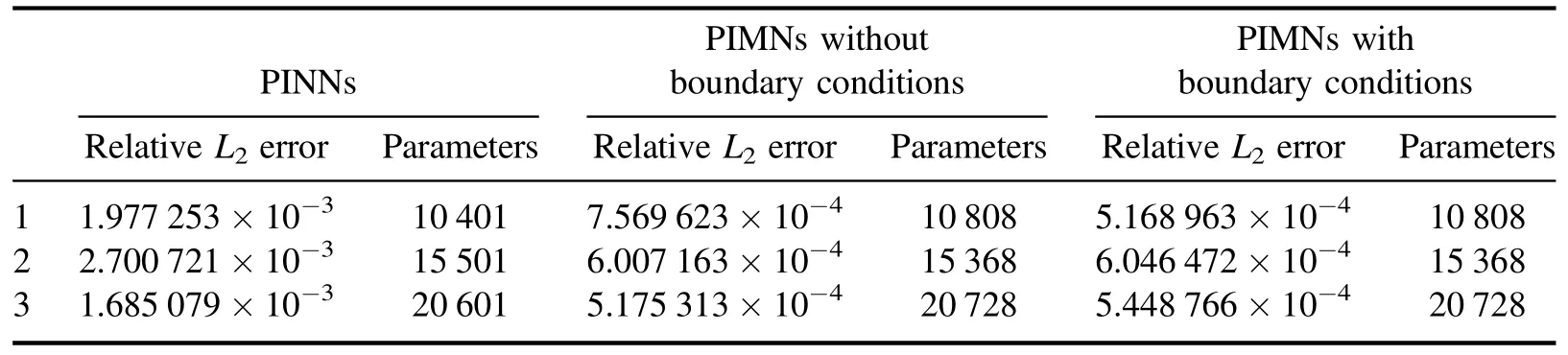

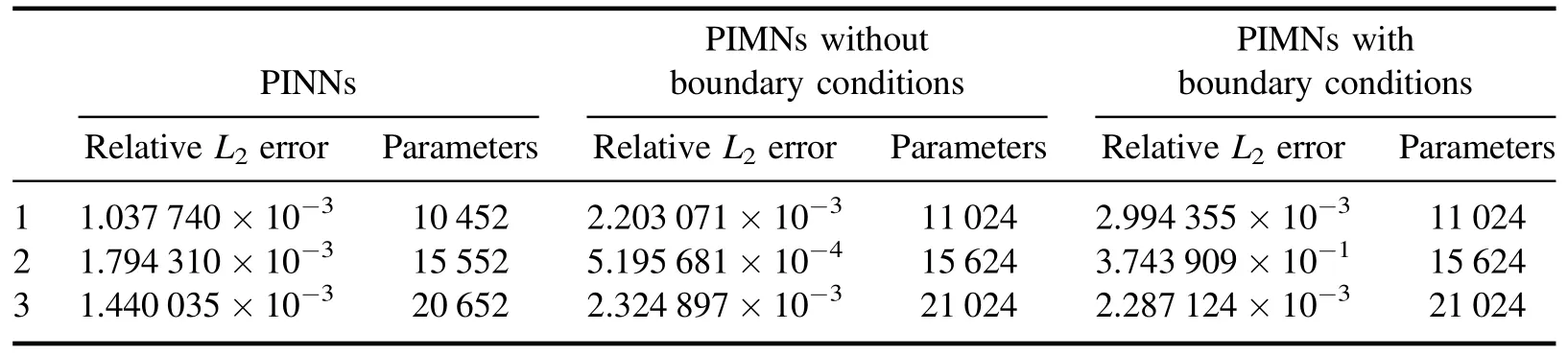

3.1.5. Comparison of the PINNs and the PIMNs for the KdV equation. In this part, the KdV equation is solved by the PINNs and by the PIMNs with and without boundary conditions. To compare these methods effectively, three sets of comparison experiments were set up, and the numbers of parameters of the three cases are set close to each other. The PINNs have 5, 7 and 9 hidden layers with 50 neurons in each layer, giving 10 401, 15 501 and 20 601 parameters, respectively. The number of initial and boundary points is 100 and the number of collocation points is 10 000. The PIMNs have two LSTM layers, and the first layer has 50, 60 and 70 nodes, giving 10 808, 15 368 and 20 728 parameters, respectively. Table 5 shows the relative errors and numbers of parameters for the PINNs with boundary condition losses and the PIMNs with and without boundary condition losses.
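The quoted parameter counts can be reproduced with a short calculation, assuming a two-dimensional input (x, t), a scalar output, fully connected layers with biases for the PINNs, and standard LSTM layers with four gates and one bias vector per gate for the PIMNs. The helper names are hypothetical.

```python
def mlp_params(n_in, hidden, n_hidden_layers, n_out):
    """Weights and biases of a fully connected network."""
    sizes = [n_in] + [hidden] * n_hidden_layers + [n_out]
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))

def lstm_params(n_in, hidden):
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (hidden * (n_in + hidden) + hidden)

def stacked_lstm_params(n_in, hidden_sizes):
    """Total parameters of LSTM layers stacked one after another."""
    total, prev = 0, n_in
    for h in hidden_sizes:
        total += lstm_params(prev, h)
        prev = h
    return total

pinn_counts = [mlp_params(2, 50, k, 1) for k in (5, 7, 9)]
pimn_counts = [stacked_lstm_params(2, [h, 1]) for h in (50, 60, 70)]
```

Under these assumptions the counts agree with the values stated in the text: 10 401, 15 501, 20 601 for the PINNs and 10 808, 15 368, 20 728 for the PIMNs.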

In terms of the relative errors, all three cases are able to solve the KdV equation with high accuracy. The errors of the PIMNs both with and without boundary conditions are lower than those of the PINNs when the numbers of parameters are close. This indicates that the structure of the PIMNs is more advantageous when reconstructing the solutions of the KdV equation. Also, consistent with subsection 3.1.2, the PIMNs with boundary conditions do not show a significant advantage over the PIMNs without boundary conditions. In summary, the PIMNs can simulate the solution of the KdV equation with only initial conditions, and even have higher accuracy than the PINNs.

3.2. Case 2: Nonlinear Schrödinger equation

To test the ability of the PIMNs to handle complex PDEs, the nonlinear Schrödinger equation is solved. The nonlinear Schrödinger equation is often used to describe quantum behavior in quantum mechanics and plays an important role in physical fields such as plasma physics, fluid mechanics and Bose–Einstein condensates [43, 44]. The nonlinear Schrödinger equation is given by

where q is the complex-valued solution, q0(x) is an initial function, and q1(t) and q2(t) are boundary functions. The existence theory of equation (18) can be found in [45]. The complex-valued solution q is written as q = u + iv, where u(x,t) and v(x,t) are real-valued functions of x and t. Equation (18) can be converted into

The residuals of equation (18) can be defined as

Here, the traveling wave solution is simulated by the PIMNs and takes the form

where $c=-\omega-v^2/4>0$. Taking c = 0.8, v = 1.5, the traveling wave solution (21) is reduced to

Taking [x0, x1], [t0, t1] as [−10, 10], [0, 1], the corresponding initial and boundary functions are obtained.
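As a consistency check, the sketch below evaluates a finite-difference residual for one traveling wave that is compatible with the relation $c=-\omega-v^2/4$ and the values c = 0.8, v = 1.5. It assumes the focusing form $\mathrm{i}q_t + q_{xx} + 2|q|^2 q = 0$ and the wave $q = \sqrt{c}\,\mathrm{sech}\!\left(\sqrt{c}\,\xi\right)\exp\!\left(\mathrm{i}\left(v\xi/2 - \omega t\right)\right)$ with $\xi = x - vt$. Both the coefficients of the equation and the exact phase convention are assumptions of this sketch and may differ from the paper.

```python
import numpy as np

c, v = 0.8, 1.5
omega = -c - v ** 2 / 4.0    # from c = -omega - v^2/4

def nls_wave(x, t):
    """Traveling wave of i q_t + q_xx + 2|q|^2 q = 0 (assumed form)."""
    xi = x - v * t
    envelope = np.sqrt(c) / np.cosh(np.sqrt(c) * xi)
    return envelope * np.exp(1j * (0.5 * v * xi - omega * t))

x = np.linspace(-10.0, 10.0, 1001)
t = np.linspace(0.0, 1.0, 201)
dx, dt = x[1] - x[0], t[1] - t[0]
X, T = np.meshgrid(x, t, indexing="ij")
Q = nls_wave(X, T)           # Q[i, j] = q(x_i, t_j), complex-valued

# Central differences on interior points, no padding.
Q_in = Q[1:-1, 1:-1]
Q_t = (Q[1:-1, 2:] - Q[1:-1, :-2]) / (2.0 * dt)
Q_xx = (Q[2:, 1:-1] - 2.0 * Q_in + Q[:-2, 1:-1]) / dx ** 2

f = 1j * Q_t + Q_xx + 2.0 * np.abs(Q_in) ** 2 * Q_in
f_real, f_imag = f.real, f.imag   # two real-valued residuals
max_residual = np.max(np.abs(f))
```

Splitting `f` into its real and imaginary parts gives two real-valued residuals, in line with writing q = u + iv and working with the pair of real functions u and v.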

3.2.1. Comparison of different difference schemes for solving the nonlinear Schrödinger equation. Similar to the KdV equation, we first discuss which difference scheme should be used to calculate the temporal derivatives of the solution q. The space [−10, 10] is divided into 1000 points and the time [0, 1] is divided into 100 points. Two LSTM layers are used, with 30 and 2 nodes, respectively. Table 6 shows the results generated with the four random seeds.

In table 6, the bolded data are the lowest relative L2 errors produced with the same random seed. It can be seen that the temporal derivatives constructed by all three difference schemes can successfully solve the nonlinear Schrödinger equation with very small relative L2 errors. The results generated by the central difference are better than those of the other two schemes. Therefore, the central difference is used to calculate the time derivative in the subsequent subsections.

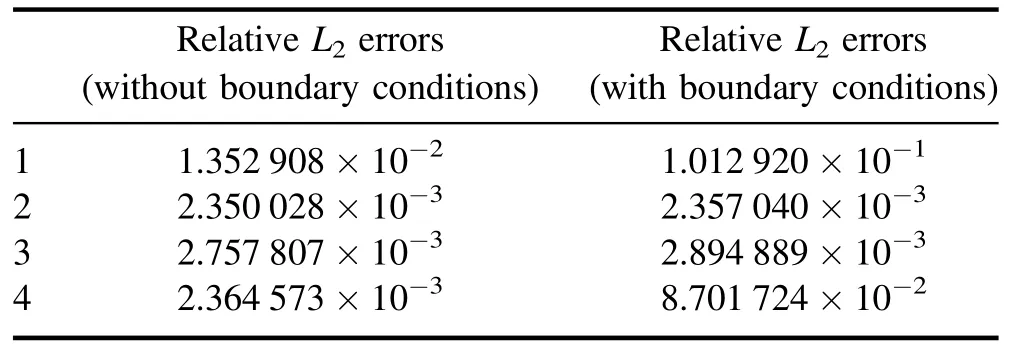

3.2.2. The effect of boundary conditions on solving the nonlinear Schrödinger equation. To investigate the effect of boundary conditions on the solution of the nonlinear Schrödinger equation, the training process and experimental results with and without boundary conditions are compared. The network structure and training data follow the previous setup. Four sets of experiments were again set up.

Figure 6 shows the loss curves of the training process. Subplots a–d show the loss curves without boundary conditions, and subplots e–h show the loss curves with boundary conditions. It is clear that the total loss converges to similar levels in the top and bottom rows. Although e–h require more iterations than a–d under the influence of the boundary conditions, all ratios are very low, less than 0.01. Therefore, the boundary conditions do not positively influence the training process.

Figure 6. The loss curve: a–d are the loss curves without boundary conditions and e–h are the loss curves with boundary conditions. The blue solid line and the blue dashed line are MSE and MSE_B (the left y-axis), respectively, and the red line is the ratio of MSE_B to MSE (the right y-axis).

Table 7 gives the relative L2 errors with and without boundary conditions corresponding to figure 6. After adding the boundary loss to the total loss, the error stays at the original level. The influence of the boundary conditions on the errors remains limited. Since the boundary conditions do not positively affect either the training process or the results, they are not necessary for the PIMNs.

3.2.3. The effect of network structure on solving the nonlinear Schrödinger equation. In this part, we set different numbers of network layers and neurons to study how the network structure affects the errors. The data are the same as those used before. Table 8 shows the results of numerical experiments with different network structures. Note that the structures in table 8 do not include the final LSTM layer.

From table 8, the error usually decreases as the number of neuron nodes and LSTM layers increases. Due to chance factors, not all errors follow this pattern. In summary, a more complex structure of the PIMNs is more advantageous for solving differential equations.

3.2.4. Effect of mesh size on solving the nonlinear Schrödinger equation. In this part, we set different numbers of spatial and temporal points to study how the mesh size affects the errors. The region remains [−10, 10] × [0, 1]. The number of spatial points is set to 500, 1000, 1500 and 2000, and the number of time points is set to 50, 100, 150 and 200. The network structure is 3 LSTM layers, and the first two layers have 50 nodes each. The relative L2 errors for different mesh sizes are given in table 9.

As can be seen from table 9, the relative error decreases as the grid size decreases. However, the error is not minimal for the 2000 × 200 grid. This indicates that the grid determines the relative L2 error to some extent, and moderate adjustment of the mesh size can improve the accuracy of the solution.

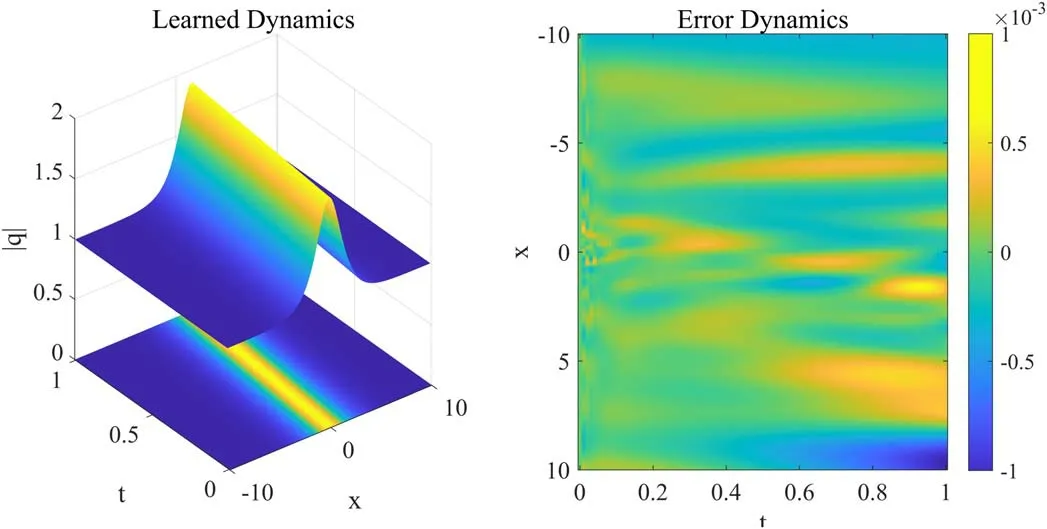

Figure 7 shows the dynamic behavior of the learned solution and the error density diagram when there are 100 time points and 2000 spatial points. The number of iterations is 2013 and the training time is 225 s. From the error density, although the overall level of error is low, it shows an increasing trend over time. In general, the PIMNs can solve the nonlinear Schrödinger equation with high speed and quality.

Figure 7. The traveling wave solution of the nonlinear Schrödinger equation: the dynamic behavior of the learned solution and the error density diagram.

3.2.5. Comparison of the PINNs and the PIMNs for the nonlinear Schrödinger equation. In this part, the nonlinear Schrödinger equation is solved using the PINNs and the PIMNs with and without boundary conditions. The differences between the two models are discussed by comparing the relative errors. Similarly to 3.1.5, the numbers of parameters of the two models are set close to each other. The PINNs have 5, 7 and 9 layers with 50 nodes in each layer. The numbers of initial and boundary points and of collocation points are 100 and 10 000, respectively. The PIMNs have two LSTM layers, and the first layer has 50, 60 and 70 nodes. Table 10 gives all the relative errors and the numbers of parameters.

Table 1.The KdV equation: relative L2 errors for different difference schemes.

Table 2.The KdV equation: relative L2 errors with and without boundary conditions.

Table 4.The KdV equation: relative L2 errors for different mesh sizes.

Table 5.The KdV equation: relative L2 errors for the PINNs and the PIMNs.

Table 6.The nonlinear Schrödinger equation: relative L2 errors for different difference schemes.

Table 7.The nonlinear Schrödinger equation: relative L2 errors with and without boundary conditions.

Table 8.The nonlinear Schrödinger equation: relative L2 errors for different network structures.

Table 9.The nonlinear Schrödinger equation: relative L2 errors for different mesh sizes.

Table 10.The nonlinear Schrödinger equation: relative L2 errors for the PINNs and the PIMNs.

From the data of table 10, both the PINNs and the PIMNs can solve the nonlinear Schrödinger equation with very low error. All errors are very close except for individual experiments. The PINNs are more advantageous when the number of parameters is around 10 000 and 20 000, and the PIMNs without boundary conditions have lower errors when the number of parameters is around 15 000. In addition, the PIMNs without boundary conditions obtain results similar to those of the PIMNs with boundary conditions. This demonstrates the strong generalization ability of the PIMNs when there is no boundary condition.

4. Conclusion

In this paper, the PIMNs are proposed to solve PDEs by physical laws and temporal structures. Different from the PINNs, the framework of the PIMNs is based on LSTM, which can establish the long-term dependence of the PDEs' dynamic behavior. Moreover, the physical residuals are constructed using difference schemes, which are similar to the finite difference method and bring better physical interpretation. To accelerate the network training, the difference schemes are implemented using convolution filters. The convolution filters are not involved in the model training and are only used to calculate the physical residuals. The performance and effectiveness of the PIMNs are demonstrated by two sets of numerical experiments. Numerical experiments show that the PIMNs have excellent prediction accuracy even when only the initial conditions are available.

However, the PIMNs use only second-order central differences and do not use higher-order difference schemes. Solving higher-order PDEs is also worth investigating. In addition, most physics informed deep learning methods construct numerical solutions of PDEs. In [46], a neural network model based on generalized bilinear differential operators is proposed to solve PDEs. The method obtains new exact network model solutions of PDEs by setting the network neurons as different functions. This shows that it is feasible to construct new exact analytical solutions of PDEs using neural networks. How to construct new analytical solutions based on the PIMNs is a very worthwhile research problem, although, compared with the fully connected structure, it is difficult to set the LSTM units in the same layer as different functions. These are the main research directions for the future.

Communications in Theoretical Physics, 2024, Issue 2
