An Intelligent HealthCare Monitoring Framework for Daily Assistant Living

Yazeed Yasin Ghadi, Nida Khalid, Suliman A. Alsuhibany, Tamara al Shloul, Ahmad Jalal and Jeongmin Park

1 Department of Computer Science and Software Engineering, Al Ain University, Al Ain, 15551, UAE

2 Department of Computer Science, Air University, Islamabad, 44000, Pakistan

3 Department of Computer Science, College of Computer, Qassim University, Buraydah, 51452, Saudi Arabia

4 Department of Humanities and Social Science, Al Ain University, Al Ain, 15551, UAE

5 Department of Computer Engineering, Korea Polytechnic University, Siheung-si, Gyeonggi-do, 237, Korea

Abstract: Human Activity Recognition (HAR) plays an important role in life care and health monitoring since it involves examining various activities of patients at homes, hospitals, or offices. Hence, the proposed system integrates Human-Human Interaction (HHI) and Human-Object Interaction (HOI) recognition to provide in-depth monitoring of the daily routine of patients. We propose a robust system that uses both RGB (red, green, blue) and depth information. In particular, humans in HHI datasets are segmented via connected components analysis and skin detection, while the human and object in HOI datasets are segmented via a saliency map. To track the movement of humans, we propose orientation and thermal features. A codebook is generated using the Linde-Buzo-Gray (LBG) algorithm for vector quantization. Then, the quantized vectors generated from image sequences of HOI are given to an Artificial Neural Network (ANN), while the quantized vectors generated from image sequences of HHI are given to K-ary tree hashing for classification. Two publicly available datasets are used for experimentation on HHI recognition: the Stony Brook University (SBU) Kinect interaction dataset and the University of Lincoln’s (UoL) 3D social activity dataset. Furthermore, two publicly available datasets are used for experimentation on HOI recognition: the Nanyang Technological University (NTU) RGB+D and Sun Yat-Sen University (SYSU) 3D HOI datasets. The experimental results demonstrate the validity of the proposed system.

Keywords: Artificial neural network; human-human interaction; human-object interaction; K-ary tree hashing; machine learning

1 Introduction

Recent years have seen increasing use of multi-vision sensors to attain robustness and high performance while tackling many of the existing challenges in visual recognition systems [1]. Moreover, low-cost depth sensors such as Microsoft Kinect [2] have been used extensively since their introduction. In contrast to conventional visual systems, depth maps are unaffected by varying brightness and lighting conditions [3], which motivates their use in a wide variety of Human Activity Recognition (HAR) applications. These applications include assisted living, behaviour understanding, security systems, human-robot interactions, e-health care, smart homes, and others [4].

To monitor the daily life-care routine of humans thoroughly, this paper proposes a system that integrates the recognition of Human-Human Interaction (HHI) and Human-Object Interaction (HOI). In the proposed system, the silhouette segmentation of red, green, blue (RGB) and depth images from HHI and HOI datasets is carried out separately. Silhouette segmentation is followed by a feature extraction phase that mines two unique features, namely thermal and orientation features. Both HHI and HOI descriptors are combined and processed via the Linde-Buzo-Gray (LBG) algorithm for compact vector representation. Finally, K-ary tree hashing is used for the classification of HHI classes, while an Artificial Neural Network (ANN) is applied for the classification of HOI classes.

We have used two publicly available datasets for experimentation on HHI recognition: the Stony Brook University (SBU) Kinect interaction and the University of Lincoln’s (UoL) 3D social activity datasets. Furthermore, we have used two different publicly available datasets for experimentation on HOI recognition: the Nanyang Technological University (NTU) RGB+D and Sun Yat-Sen University (SYSU) 3D HOI datasets.

The main contributions of this paper are:

• Developing an efficient way of segmenting human silhouettes from both RGB and depth images via connected components, skin detection, morphological operations, and saliency maps.

• Designing a high-performance recognition system based on the extraction of unique orientation and thermal features.

• Accurate classification of HHI classes via K-ary tree hashing and HOI classes via ANN.

The rest of this paper is organized as follows: Section 2 reviews the research work relevant to the proposed system. Section 3 describes the proposed methodology, which involves an extensive pre-classification process. Section 4 describes the datasets used in the proposed work and evaluates the robustness of the system through different experiments. Section 5 discusses the results, and Section 6 concludes the paper and notes some future work.

2 Related Work

The related work is divided into two subsections that cover recently developed recognition systems for HHI and HOI, respectively.

2.1 HHI Recognition Systems

In recent years, many RGB-D (red, green, blue, and depth) human-human interaction recognition systems have been proposed [5]. Prati et al. [6] proposed a system in which multiple camera views were used to extract features from depth maps using regression learning. However, despite the use of multiple cameras, their system had restricted applicability to large areas and was not robust against occlusion. Q. Ye et al. [7] proposed a system comprising Gaussian time-phase features using a Residual Network (ResNet). A high performance rate was achieved, but their system also had high complexity. In [8], Ouyed et al. extracted motion features from the joints of two persons involved in an interaction. They used multinomial kernel logistic regression to evaluate human interaction recognition (HIR), but the system lacked spatiotemporal context for interaction recognition. Moreover, Ince et al. [9] proposed a system based on skeletal joint movement using Haar wavelets. In this system, some confusion was observed due to the similarities in angles and positions of various actions. Furthermore, Bibi et al. [10] proposed an HIR system with local binary patterns using multi-view cameras. A high confusion rate was observed between similar interactions.

Yanli et al. [11] proposed an HIR system that benefits from contrastive feature distribution. The authors extracted skeleton-based features and calculated the probability distribution. In [12], Subetha et al. extracted Histogram of Oriented Gradient (HOG) and pyramidal features. In addition, a study in [13] presented an action recognition system based on way-point trajectories, geodesic distance, joint motion and 3D Cartesian-plane features. This system achieved better performance, but there was a slight decrease in accuracy due to silhouette overlapping. Furthermore, an action representation was built from shape, spatio-temporal angular-geometric and energy-based features [14]. A high recognition rate was achieved, but the performance of the system was reduced in environments where human posture changes rapidly. Also, human postures were extracted in [15] using an unsupervised dynamic X-means clustering algorithm. Features were extracted from skeleton joints obtained via depth sensors. The system was weak at identifying static actions. Waheed et al. [16] generated 3D human postures and obtained their heat kernels to identify key body points. They then extracted topological and geometric features using these key points. The authors also extracted full-body features using a CNN [17]. In [18], the time interval during which a social interaction is performed was detected, and spatio-temporal and social features were extracted to track human actions. Moreover, a study in [19] proposed a fusion of multiple sensors from which HOG and statistical features were extracted. However, this system only worked on pre-segmented activities.

2.2 HOI Recognition Systems

Various methodologies have been adopted by researchers for identifying human activities in the past few years [20]. For example, Meng et al. [21] proposed an HOI recognition system based on inter-joint and joint-object distances. However, they did not identify the object individually but treated it as one of the human body joints. In [22], joint distances that were invariant to the human pose were measured for feature extraction. This system was tested with only one dataset that consists of six simple interactions. Jalal et al. [23] proposed a HAR system based on the frame differentiation technique. In this system, human joints were extracted to record the spatio-temporal movements of humans. Moreover, Yu et al. [24] proposed a discriminative orderlet, i.e., a middle-level feature that was used for the visual representation of actions. A cascaded HOI recognition system was proposed by Zhou et al. [25]. It was a multi-stage architecture in which each stage of HOI was refined and then fed to the next network. In [26], zero-shot learning was used to identify the relationship between a verb and an object. Their system lacked spatial context and was tested on simple verb-object pairs. All the methodologies mentioned above are either tested on RGB data only or represented by a very complex set of features, which increases the time complexity of the system.

The proposed system has been designed with these approaches and their limitations in mind. Because of the high accuracies achieved by systems that used depth sensors or RGB-D data, our system also takes RGB-D input. Different researchers have extracted different types of features from human silhouettes. To distinguish our model, we have chosen orientation and thermal features. These features are robust against occlusion and rapid posture changes, the two major issues faced by most researchers. Furthermore, approaches that use multiple features have increased time complexity. To address this issue, we use vector quantization. It was also noted that systems tested on only one dataset or a limited number of classes fail to demonstrate their general applicability. Therefore, the proposed system has been validated on four large datasets: two HHI and two HOI datasets.

3 Material and Methods

This section describes the proposed framework for active monitoring of the daily life of humans. Fig. 1 shows the general overview of the proposed system architecture. This architecture is explained in the following sections.

3.1 Image Normalization and Silhouette Segmentation

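The images in HHI and HOI datasets are first filtered to enhance the image features. Then, a median filter is applied to both RGB and depth image sequences to remove noise [27] using the formula in Eq. (1):

$$I_{\mathrm{med}}[m,n]=\operatorname{median}\{\,I[i,j] : (i,j)\in w\,\}\tag{1}$$

where $I$ is the input image, $I_{\mathrm{med}}$ is the filtered image, and i and j belong to a window w having a specified neighborhood centered around the pixel [m, n] in an image.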
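The median filtering step can be illustrated with a minimal sketch in plain Python. The function name `median_filter`, the `radius` parameter and the border handling (using only the neighbours that fall inside the image) are assumptions made for illustration; the paper does not state its window size or border policy.

```python
from statistics import median

def median_filter(image, radius=1):
    """Apply a (2*radius+1) x (2*radius+1) median filter to a 2D list.

    Border pixels use only the neighbours that fall inside the image
    (an assumption; the paper does not state its border handling).
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for m in range(rows):
        for n in range(cols):
            # Collect the window w centred on pixel [m, n]
            window = [
                image[i][j]
                for i in range(max(0, m - radius), min(rows, m + radius + 1))
                for j in range(max(0, n - radius), min(cols, n + radius + 1))
            ]
            out[m][n] = median(window)
    return out

# A single noisy pixel in a flat region is replaced by the local median
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
filtered = median_filter(noisy)
```

In this toy frame the isolated value 255 is an impulse, so the filter replaces it with the value of its neighbours while leaving the flat region unchanged.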

3.1.1 Silhouette Segmentation of Human-Human Interactions

The silhouette segmentation of RGB and depth frames of HHIs is performed separately. At first, connected components are located in an image via 4-connected pixel analysis as given through Eq. (2):

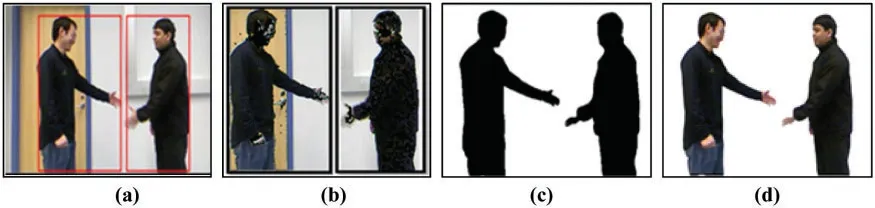

where x and y are the coordinates of pixel p, so that its 4-connected neighbors are the pixels directly above, below, left and right of it. After labeling the connected components, a threshold limit that determines the area (height and width) of the human body is specified. Then, a bounding box is drawn only around those labeled components that are within the specified limit. As a result, all the humans in a frame are identified and enclosed in bounding boxes. After identification, human skin is detected inside each bounding box via the HSV (hue, saturation, value) color model [28]. To improve the thresholding process, all the light color intensities (white, skin, and yellow) are converted to black, i.e., an intensity value of 0. Then threshold-based segmentation is applied to generate binary silhouettes. Silhouette segmentation of RGB images is shown in Fig. 2.
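The labeling and size-filtering steps can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: the names `label_components` and `human_sized` and the size limits are hypothetical, and the sketch keeps components whose height and width reach a minimum, whereas the paper only says that the component must lie within a specified limit.

```python
from collections import deque

def label_components(binary):
    """Find 4-connected foreground regions in a 2D list of 0/1 values.

    Returns one (top, left, bottom, right, area) tuple per region.
    """
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or seen[r][c]:
                continue
            queue = deque([(r, c)])
            seen[r][c] = True
            top, left, bottom, right, area = r, c, r, c, 0
            while queue:
                y, x = queue.popleft()
                area += 1
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                # 4-neighbourhood of pixel p = (x, y)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and binary[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right, area))
    return boxes

def human_sized(boxes, min_height, min_width):
    """Keep only boxes whose height and width reach the given limits."""
    return [b for b in boxes
            if b[2] - b[0] + 1 >= min_height and b[3] - b[1] + 1 >= min_width]

frame = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 1, 0],
]
boxes = label_components(frame)
people = human_sized(boxes, min_height=3, min_width=2)
```

Here the two isolated pixels are labeled as separate components because they touch only diagonally, and the size filter discards them so that only the tall region is kept as a candidate human.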

Figure 2: Silhouette segmentation of an HHI image. (a) Detected humans via connected components, (b) skin color detection of right and left human, (c) binary silhouette, and (d) RGB silhouette

The segmentation of depth images of HHI is performed via morphological operations [29]. The first step is to convert a depth image into a binary image via Otsu’s thresholding method. Then the morphological operations of dilation and erosion are applied, which retain the contours of key objects in an image. The Canny edge detection technique is then applied to detect the edges of the two people involved in the HHI. Finally, the smaller detected objects are removed from the image.
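Only the first step of this pipeline, Otsu's thresholding, is sketched below. The function name `otsu_threshold`, the flat list of 8-bit depth values and the convention that pixels above the threshold become foreground are assumptions for illustration; the morphological and edge detection steps are not shown.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level that maximises the between-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    count_bg, sum_bg = 0, 0
    for t in range(levels):
        # Pixels with value <= t form the background class
        count_bg += hist[t]
        sum_bg += t * hist[t]
        count_fg = total - count_bg
        if count_bg == 0 or count_fg == 0:
            continue
        mean_bg = sum_bg / count_bg
        mean_fg = (sum_all - sum_bg) / count_fg
        between = count_bg * count_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t

# Toy depth values: a near cluster and a far cluster
depth = [12, 10, 11, 13, 200, 198, 205, 202]
t = otsu_threshold(depth)
binary = [1 if p > t else 0 for p in depth]
```

Because the two clusters are well separated, any threshold between them gives the same split; the sketch returns the lowest such level.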

3.1.2 Silhouette Segmentation of HOI

A spectral residual saliency map-based segmentation technique [30] is used to segment humans and objects from RGB and depth images. The saliency map is generated by evaluating the log spectrum of images, and the Fourier transform is used to obtain the frequency f of the grayscale images G(x). The amplitude A(f) = abs(f) and phase spectrum P(f) = angle(f) are computed from the frequency image. Then, the spectral residual regions of RGB and depth images are computed from Eq. (3) as follows:

$$R(f)=L(f)-h(f)*L(f)\tag{3}$$

where L(f) is the log of A(f), h(f) is an averaging filter and $*$ denotes convolution. After the residual regions are computed, the saliency map S is generated from Eq. (4) as follows:

$$S(x)=\left|F^{-1}\left[\exp\big(R(f)+iP(f)\big)\right]\right|^{2}\tag{4}$$

where $F^{-1}$ is the inverse Fourier transform. Finally, the saliency map is converted into a binary image via the binary thresholding method. After segmenting salient regions from the background, humans and objects are detected separately in an image using the K-means algorithm. This process of silhouette segmentation on a depth image is depicted in Fig. 3.
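The spectral residual computation can be sketched with NumPy as follows. This is an illustration under stated assumptions: the 3×3 averaging filter with wrap-around borders, the normalisation to [0, 1] and the binarisation rule (three times the mean saliency) are choices made here, not values given in the paper, and the K-means separation step is omitted.

```python
import numpy as np

def spectral_residual_saliency(gray, filter_size=3):
    """Saliency map of a 2D grayscale array via the spectral residual."""
    f = np.fft.fft2(gray.astype(float))
    log_amplitude = np.log(np.abs(f) + 1e-8)   # L(f), offset avoids log(0)
    phase = np.angle(f)                        # P(f)
    # h(f) * L(f): local average of the log spectrum (wrap-around borders)
    pad = filter_size // 2
    padded = np.pad(log_amplitude, pad, mode="wrap")
    averaged = np.zeros_like(log_amplitude)
    for dy in range(filter_size):
        for dx in range(filter_size):
            averaged += padded[dy:dy + gray.shape[0], dx:dx + gray.shape[1]]
    averaged /= filter_size ** 2
    residual = log_amplitude - averaged        # R(f)
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    return saliency / saliency.max()           # normalise to [0, 1]

# Toy image: a small bright block on a dark background
image = np.zeros((32, 32))
image[12:18, 20:26] = 1.0
saliency = spectral_residual_saliency(image)
mask = saliency > 3 * saliency.mean()          # assumed binarisation rule
```

In practice the saliency map is usually smoothed before thresholding; that step is left out here to keep the sketch close to the two equations.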

Figure 3: Silhouette segmentation of an HOI image. (a) Original depth image, (b) saliency map, (c) binary silhouette, and (d) segmented depth human and object silhouette

3.2 Feature Extraction

The proposed system exploits two types of features: orientation features and thermal features. The details and results of these features are described in the following subsections.

3.2.1 Orientation Features

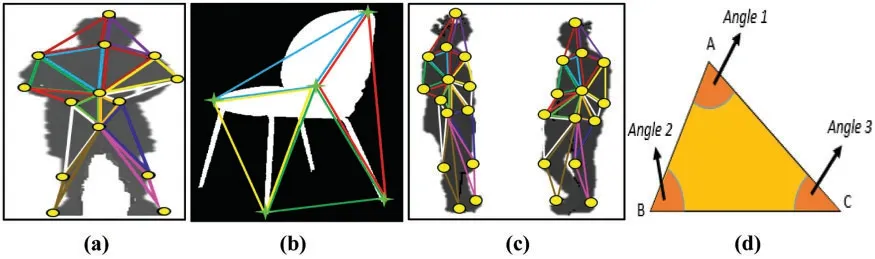

After obtaining human silhouettes, fourteen human joints (head, neck, right shoulder, left shoulder, right elbow, left elbow, right hand, left hand, torso, torso base, right knee, left knee, right foot, and left foot) are identified. Eight human joints are identified by Algorithm 1 (detection of key body points in human silhouette) proposed in [14]. In this algorithm, eight human joints are detected by finding the topmost, bottommost, rightmost and leftmost pixels on the boundary of a human silhouette. The remaining six human joints are identified by taking the average of the pixel locations of already identified joints. For example, the location of the neck joint is identified by taking the mean of the locations of the head and torso joints. After locating the joint points, combinations of three joints are taken to form triangular shapes and, as a result, fourteen triangles are formed in HOI images (see Fig. 4a). In HOI silhouettes, the orientation features of objects are also extracted. Four triangles (twelve angles) are formed from the centroid to all four extreme points (see Fig. 4b). In HHI images, two people are involved, so the number of triangles is twenty-eight (fourteen for each person), as shown in Fig. 4c. The angle between two sides of each triangle is measured from Eq. (5) as follows:

$$\theta=\cos^{-1}\left(\frac{u\cdot v}{\lVert u\rVert\,\lVert v\rVert}\right)\tag{5}$$

where $u\cdot v$ is the dot product of two vectors u and v, which are any two sides of a triangle. A total of three angles are calculated for each triangle. The first angle is calculated by taking AB as u and AC as v. The second angle is calculated by taking AB as u and BC as v. The third angle is calculated by taking BC as u and AC as v, as shown in Fig. 4d.
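As an illustration of the three-angle computation, the following sketch takes three joint positions A, B and C and applies the side pairings described above. The function names and the use of the arccosine of the normalised dot product are assumptions; the joint coordinates are made up for the example.

```python
import math

def angle_between(u, v):
    """Angle in degrees between 2D vectors u and v, from their dot product."""
    dot = u[0] * v[0] + u[1] * v[1]
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to guard against rounding slightly outside [-1, 1]
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def triangle_angles(a, b, c):
    """Three orientation angles of triangle ABC using the pairings
    (AB, AC), (AB, BC) and (BC, AC)."""
    ab = (b[0] - a[0], b[1] - a[1])
    ac = (c[0] - a[0], c[1] - a[1])
    bc = (c[0] - b[0], c[1] - b[1])
    return (angle_between(ab, ac), angle_between(ab, bc), angle_between(bc, ac))

# Hypothetical joint positions forming a 3-4-5 right triangle
angles = triangle_angles((0, 0), (4, 0), (0, 3))
```

Note that with AB and BC taken head to tail, the second value is the supplement of the interior angle at B (about 143.13 degrees here rather than 36.87), which is a direct consequence of the pairing described in the text.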

Figure 4: Triangular shapes formed by combining human joints. (a) Single person frame of playing phone HOI in SYSU dataset, (b) triangle formation on object, (c) two-person frame of conversation HHI in UoL dataset, and (d) three angles of a triangle

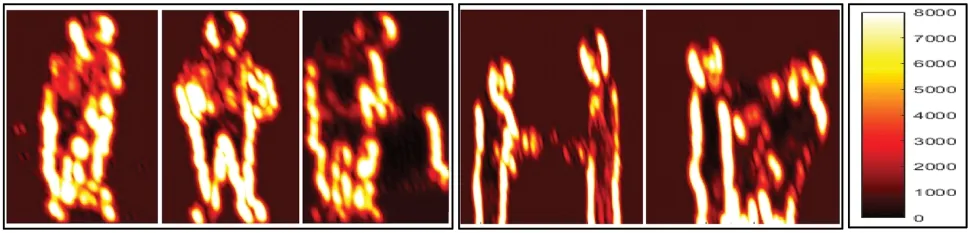

3.2.2 Thermal Features

The movements of different body parts as the human silhouettes move from one frame to the next are captured in the form of thermal maps. The parts with greater movement during an interaction have higher heat values (yellowish colors) on these maps. On the other hand, parts of a human silhouette that show less movement, i.e., that are less involved in performing an interaction, are displayed in reddish or blackish colors. A matrix of index values ranging from 0 to 8000 holds the heat values in thermal maps. These index values are used to extract the heat of only those parts that are involved in an HOI and are represented by Eq. (6) as follows:

where v is a 1D vector in which the extracted values are stored, K represents the index values and ln R refers to the RGB values that are extracted from K. The thermal maps of different HOI are shown in Fig. 5.

Figure 5: Thermal maps of HOI and HHI along with the scale of thermal values

After extracting the two types of features from both HHI and HOI datasets,they are concatenated,resulting in a matrix.

3.3 Vector Quantization

After extracting the two types of features from all the images of the HHI and HOI datasets, the features are added as descriptors of each interaction class separately. However, this results in a very large feature dimension [31]. Therefore, we generate a compact feature vector by applying LBG vector quantization with a codebook of size 512.
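The codebook generation can be sketched with a small LBG implementation. This is a minimal version under stated assumptions: the codebook size is a power of two (as with 512), codewords are split by a small additive perturbation `eps`, and a fixed number of refinement iterations replaces a distortion-based stopping rule. The names `nearest` and `lbg_codebook` are hypothetical.

```python
def nearest(v, codebook):
    """Index of the codeword closest to v (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(v, codebook[k])))

def lbg_codebook(vectors, size, eps=0.01, iters=20):
    """Grow a codebook by repeated splitting and centroid refinement (LBG).

    `size` is assumed to be a power of two.
    """
    dim = len(vectors[0])
    mean = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    codebook = [mean]
    while len(codebook) < size:
        # Split every codeword into a perturbed pair
        codebook = [[x + s for x in c] for c in codebook for s in (eps, -eps)]
        for _ in range(iters):
            cells = [[] for _ in codebook]
            for v in vectors:
                cells[nearest(v, codebook)].append(v)
            # Move each codeword to the centroid of its cell (keep it if empty)
            codebook = [
                [sum(v[d] for v in cell) / len(cell) for d in range(dim)]
                if cell else c
                for c, cell in zip(codebook, cells)
            ]
    return codebook

# Toy descriptors forming two clusters
features = [[0, 0], [0, 1], [10, 10], [10, 11]]
codebook = lbg_codebook(features, size=2)
indices = [nearest(v, codebook) for v in features]
```

Each descriptor is then represented by the index of its nearest codeword, which is what reduces the feature dimension before classification.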

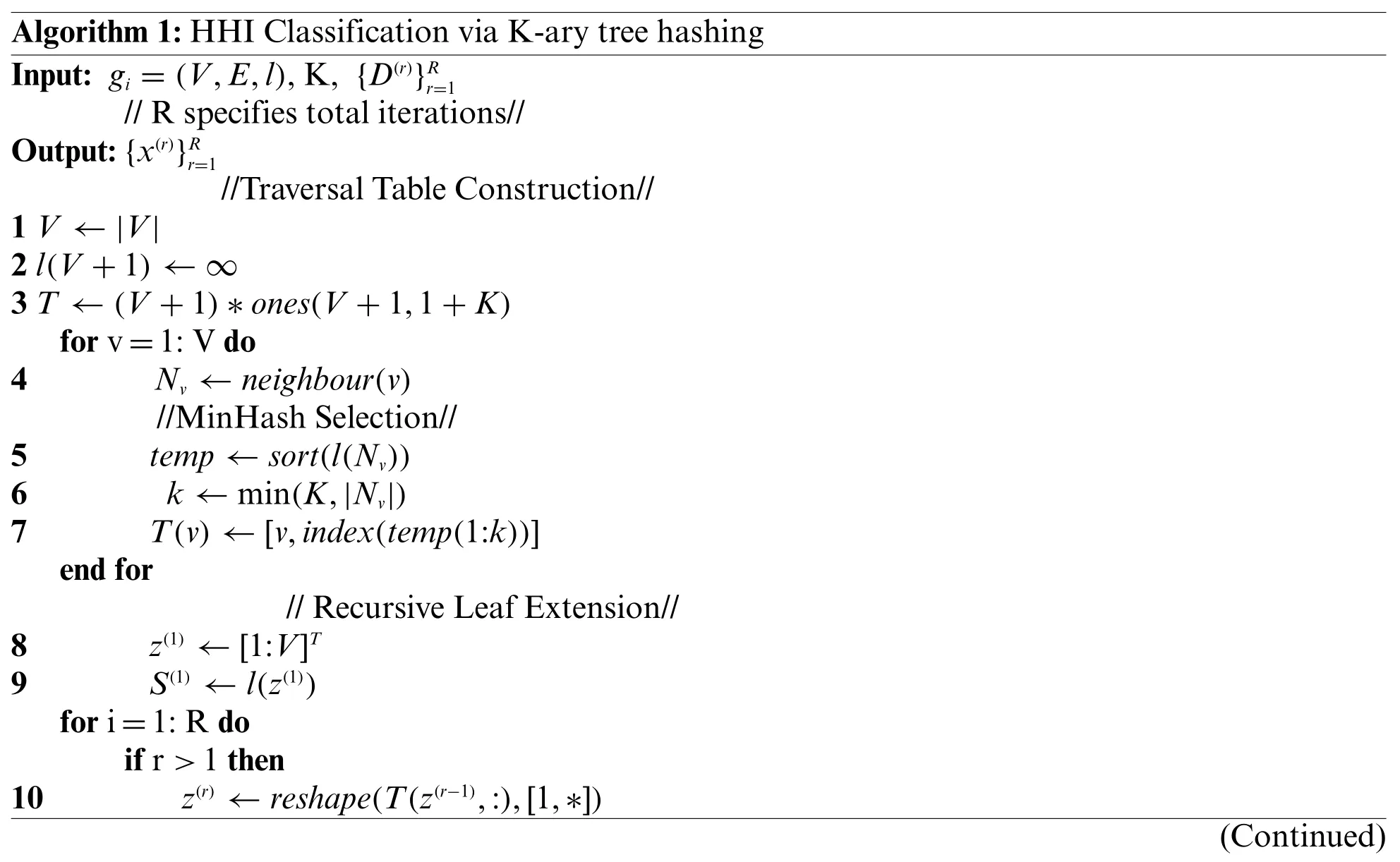

3.4 HHI Classification via K-ary Tree Hashing

The quantized feature vectors of HHI classes are fed to the K-ary tree hashing classifier. The optimized features are represented in the form of a graph $G=\{g_i\}$, where $i=1,\dots,N$ and N represents the number of objects in the graph [32]. Each graph comprises vertices V and undirected edges E. Moreover, there is a label function $l:V\rightarrow L$ that assigns labels to the nodes in $g_i$, where $g_i$ represents the whole graph with V, E, and l. A class label $y_i$ is set for each $g_i$ based on the graph’s structure, i.e., the values in the nodes of the graph, which are based on the values of the feature vectors. Each feature vector of the testing class is represented in the form of a graph and then used for predicting the correct label for each class. In addition, the sizes of the MinHashes $\{D^{(r)}\}$ for R iterations and the traversal table parameter K (the maximum number of children kept per node) are defined. For the MinHashes, random permutations $\{\pi_d^{(r)}\}$ are also generated. The process of classifying various HHIs is given in Algorithm 2, which takes the graph, the traversal table parameter, and the sizes of the MinHashes as input. It is divided into four sections: traversal table construction, MinHash selection, recursive leaf extension, and leaf sequence. The traversal table is constructed to find the subtree patterns in the graph using k-ary trees. Like a binary tree in which each node has two children, each node in a k-ary tree has k children. The leaf node labels of the k-ary trees can identify the patterns hidden in the data. Then the MinHash scheme is used to classify the interactions based on the identified patterns.

Algorithm 2: HHI Classification via K-ary tree hashing

Input: g_i = (V, E, l), K, {D^(r)} for r = 1, ..., R    // R specifies total iterations

Output: {x^(r)} for r = 1, ..., R

// Traversal Table Construction
V ← |V|
l(V+1) ← ∞
T ← (V+1) * ones(V+1, 1+K)
for v = 1:V do
    N_v ← neighbour(v)
    // MinHash Selection
    temp ← sort(l(N_v))
    k ← min(K, |N_v|)
    T(v) ← [v, index(temp(1:k))]
end for
// Recursive Leaf Extension
z^(1) ← [1:V]^T
S^(1) ← l(z^(1))
for r = 1:R do
    if r > 1 then
        z^(r) ← reshape(T(z^(r-1), :), [1, *])
        S^(r) ← reshape(l(z^(r)), [V, *])
    end if
    // Leaf Sequence
    f^(r) ← [h(S^(r)(1, :)), ..., h(S^(r)(V, :))]^T
    x^(r) ← [min(π_1^(r)(f^(r))), ..., min(π_{D^(r)}^(r)(f^(r)))]^T
end for
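The steps of the algorithm can be sketched in Python as follows. This is an illustrative reading of the pseudocode, not the authors' implementation: nodes are 0-indexed, the hash h is taken to be a CRC32 of the leaf label sequence, the random permutations are approximated by random linear maps modulo a prime, and the names `kath_fingerprints` and `similarity` are hypothetical. How fingerprints are turned into class labels is not specified in the text, so only a fingerprint comparison is shown.

```python
import math
import random
import zlib

def kath_fingerprints(labels, adjacency, K, D, seed=0):
    """Return one MinHash fingerprint (of length D[r]) per iteration r.

    labels[v]    : integer label of node v
    adjacency[v] : list of neighbours of node v
    K            : maximum number of children kept per node
    D            : list of fingerprint sizes, one per iteration
    """
    V = len(labels)
    lab = list(labels) + [math.inf]        # dummy node V has label infinity
    # Traversal table: row v = [v, up to K neighbours sorted by label],
    # padded with the dummy node
    T = [[V] * (1 + K) for _ in range(V + 1)]
    for v in range(V):
        nbrs = sorted(adjacency[v], key=lambda u: lab[u])[:K]
        T[v][:1 + len(nbrs)] = [v] + nbrs
    rng = random.Random(seed)              # same seed gives the same permutations
    prime = 2_147_483_647
    z = list(range(V))
    fingerprints = []
    for r in range(len(D)):
        if r > 0:
            # Recursive leaf extension: replace every leaf by its table row
            z = [child for node in z for child in T[node]]
        width = len(z) // V
        # Leaf sequence: one row of leaf labels per root node, then hash it
        rows = [tuple(lab[n] for n in z[i * width:(i + 1) * width])
                for i in range(V)]
        f = [zlib.crc32(repr(row).encode()) for row in rows]
        perms = [(rng.randrange(1, prime), rng.randrange(prime))
                 for _ in range(D[r])]
        fingerprints.append([min((a * x + b) % prime for x in f)
                             for a, b in perms])
    return fingerprints

def similarity(fp_a, fp_b):
    """Fraction of matching MinHash values across all iterations."""
    pairs = [(x, y) for a, b in zip(fp_a, fp_b) for x, y in zip(a, b)]
    return sum(x == y for x, y in pairs) / len(pairs)

# The same labelled path graph written in two node orders
fp_a = kath_fingerprints([1, 2, 3], [[1], [0, 2], [1]], K=2, D=[4, 4])
fp_b = kath_fingerprints([3, 2, 1], [[1], [0, 2], [1]], K=2, D=[4, 4])
```

Because the fingerprint is a minimum over the set of hashed leaf sequences, reordering the nodes of the same labelled graph leaves it unchanged, which is the property a graph classifier needs.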

3.5 HOI Classification via Artificial Neural Networks

The quantized vectors of HOI are then fed to an ANN for training and prediction. The final vector dimension is 6054×480 for the SYSU 3D HOI dataset and 6054×530 for the NTU RGB+D dataset. The 6054 rows hold the feature values for both thermal and orientation features, while the 480 and 530 columns represent the number of images in the SYSU 3D HOI and NTU RGB+D datasets, respectively. In the Leave One Subject Out (LOSO) validation technique, one subset is used for testing and all the remaining subsets are used to train the system. The system is then validated by taking another subset for testing and the remaining subsets for training. In this way, the system is trained and tested with all the subjects in both datasets, which avoids sampling bias. The ANN has three layers: an input layer, a hidden layer, and an output layer [33]. These layers are interconnected, and a weight is associated with each connection. The net input at neuron j of each layer is computed using a transfer function $T_j$ given in Eq. (7) as follows:

$$T_j=\sum_{i} w_{i,j}\,x_i+b_j\tag{7}$$

where $w_{i,j}$ are the weights, $x_i$ represents the inputs and $b_j$ is the added bias term. The input layer is fed with feature descriptors. After the weights are adjusted, the bias is added, and the result is processed through the hidden layer, the network predicts the HOI classes of both datasets.
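The net input of one layer can be illustrated with a short sketch. The weights, inputs and biases below are made-up numbers, and the sigmoid activation is an assumption, since the text does not name the activation function.

```python
import math

def net_input(inputs, weights, biases):
    """T_j = sum_i w[i][j] * x[i] + b[j] for every neuron j of a layer."""
    return [
        sum(weights[i][j] * inputs[i] for i in range(len(inputs))) + biases[j]
        for j in range(len(biases))
    ]

def sigmoid(values):
    """Element-wise logistic activation (assumed, not stated in the paper)."""
    return [1.0 / (1.0 + math.exp(-t)) for t in values]

x = [1.0, 2.0]                      # two input features
w = [[0.5, -1.0],                   # w[i][j]: input i to neuron j
     [0.25, 1.0]]
b = [0.0, 0.5]
net = net_input(x, w, b)
hidden = sigmoid(net)
```

Stacking two such layers (input to hidden, hidden to output) gives the three-layer arrangement described above.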

4 Performance Evaluation

This section gives a brief description of the four datasets used for HHI and HOI, the results of the experiments conducted to evaluate the proposed HAR system, and its comparison with other systems.

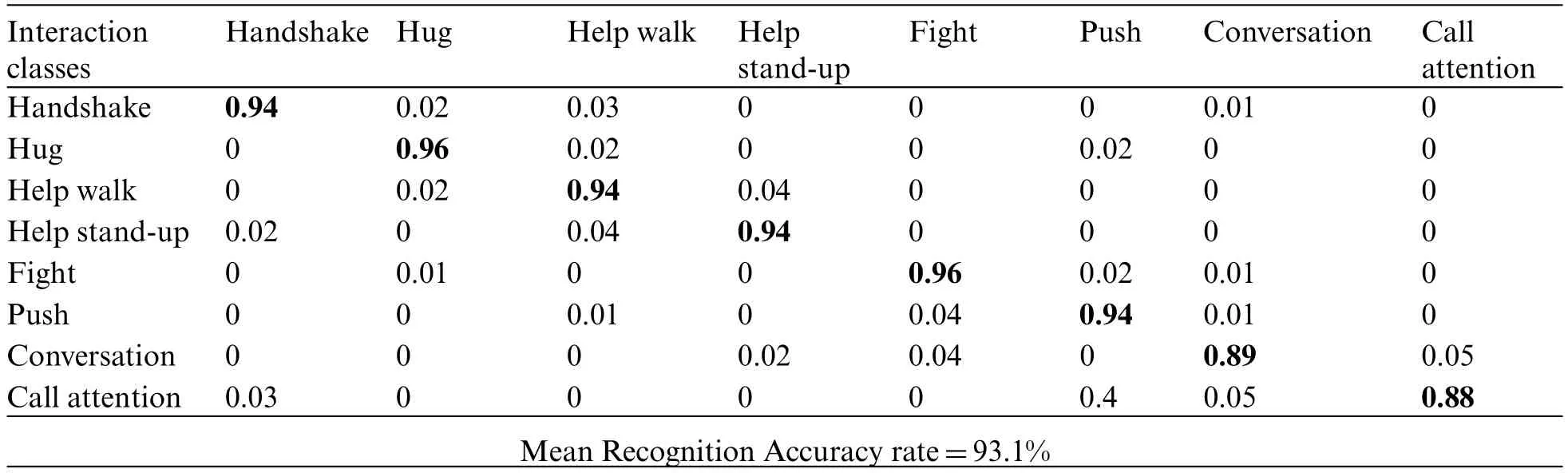

4.1 Datasets Description

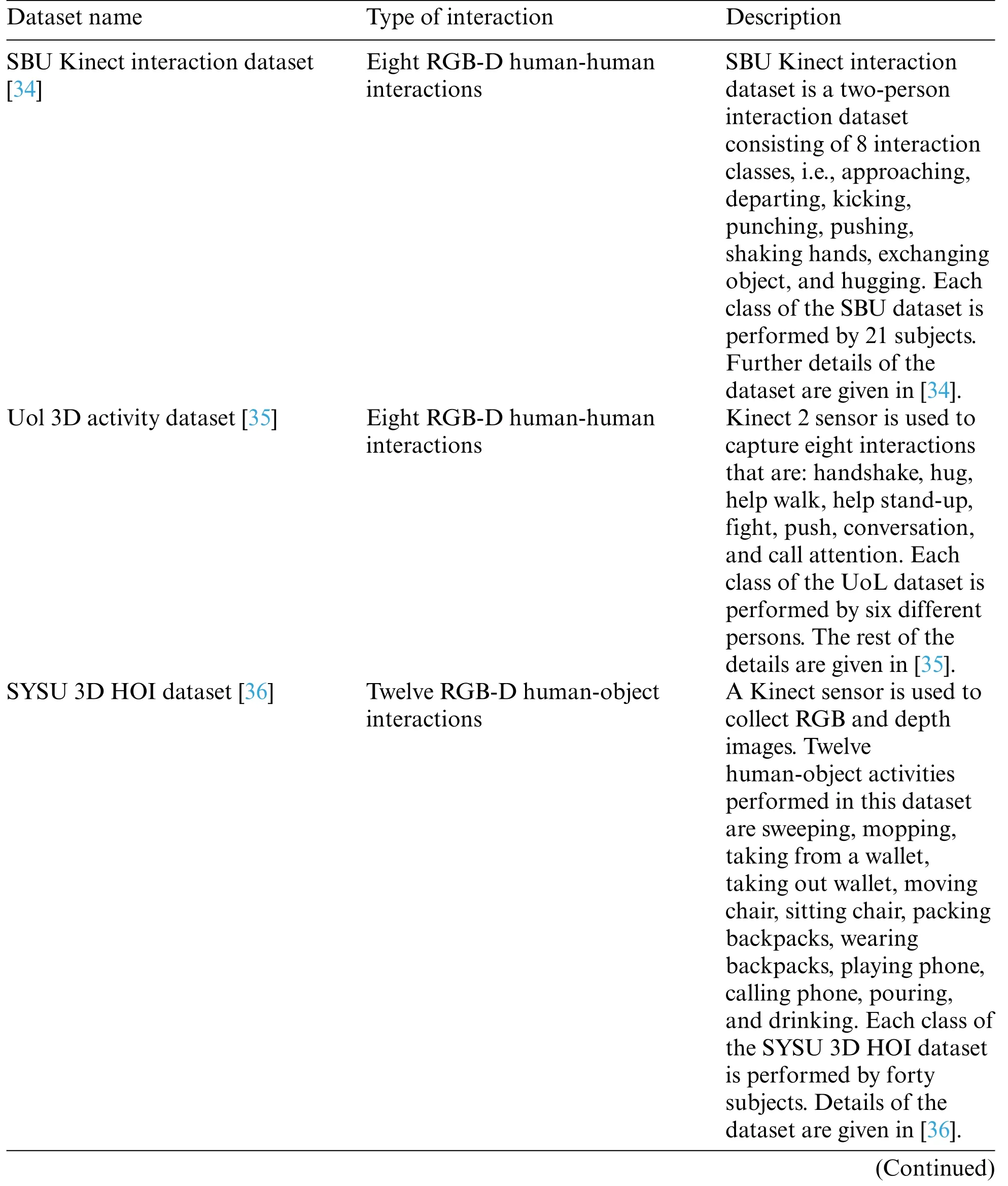

The description of each dataset used for HHI recognition and HOI recognition is given in Tab. 1. Each class of the HHI and HOI datasets is performed by a different number of subjects, as described in the dataset description table. The proposed system is therefore trained with subjects of varying appearances, which results in a high performance rate in the testing phase. Each subject is used for both training and testing of the system via the LOSO validation technique. Cross-validation avoids sampling bias by testing the system on samples other than those used for training.

Table 1: Datasets description for HHI and HOI recognition


4.2 Experimental Settings and Results

All the processing and experimentation are performed in MATLAB (R2018a). The hardware is an Intel Core i5 machine running 64-bit Windows 10 with 8 GB of RAM and a 5 GHz CPU. To evaluate the performance of the proposed system, we used the Leave One Subject Out (LOSO) cross-validation method. The results are divided into two parts: experimental results on HHI datasets and experimental results on HOI datasets.
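The LOSO protocol can be illustrated with a short sketch that builds the train and test index sets for each held-out subject. The function name `loso_splits` and the subject identifiers are hypothetical.

```python
def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject."""
    for held_out in sorted(set(subject_ids)):
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train, test

# One subject label per sample
subjects = ["s1", "s1", "s2", "s3", "s3", "s3"]
folds = list(loso_splits(subjects))
```

Every sample appears in exactly one test fold, and no subject contributes to both the training and the test set of the same fold.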

4.2.1 Experimental Results on HHI Datasets

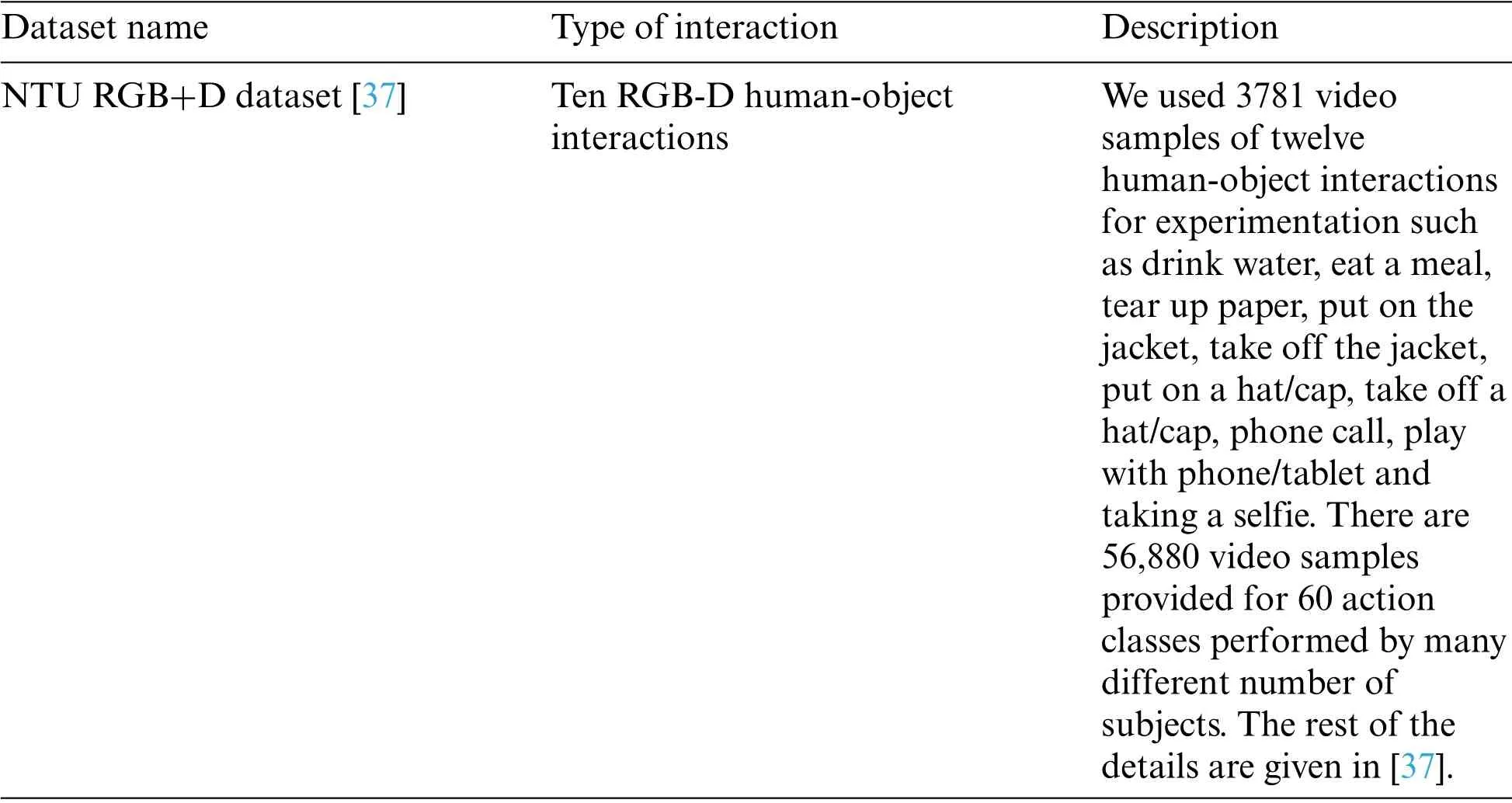

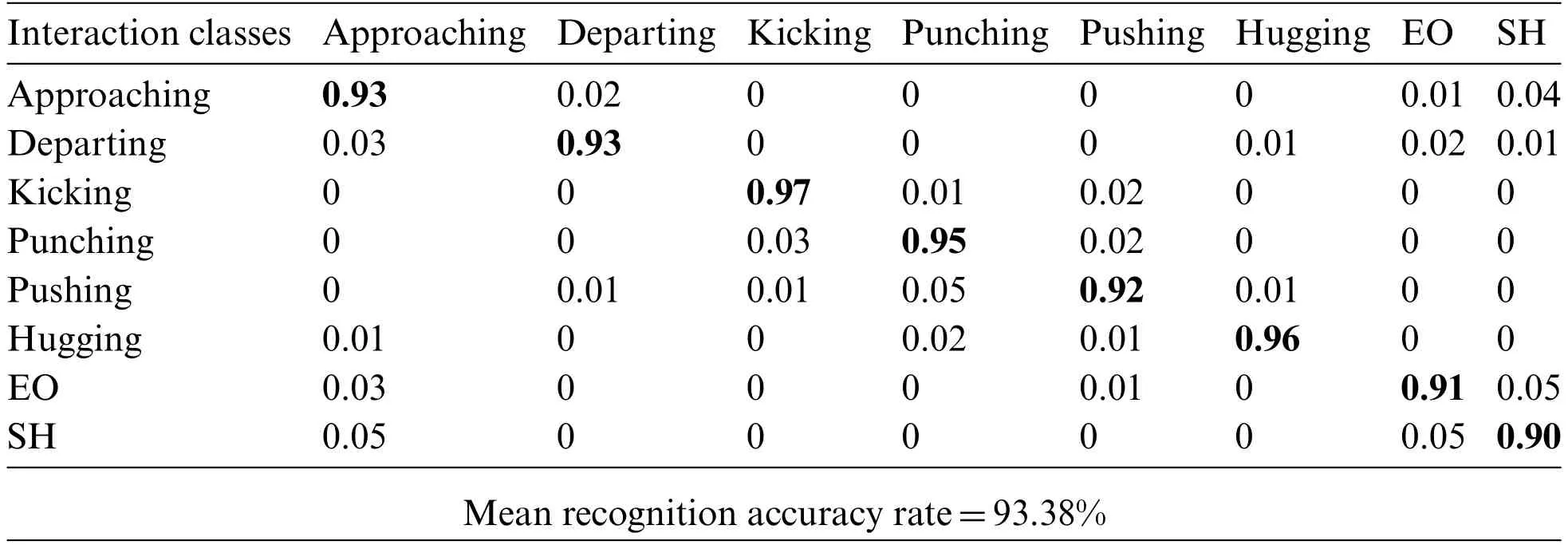

Experiment I: Recognition Accuracies

At first, the classes of the SBU and UoL datasets are given to the K-ary tree hashing classifier separately. The classification results for the classes of the SBU and UoL datasets are shown in the form of confusion matrices in Tabs. 2 and 3, respectively.

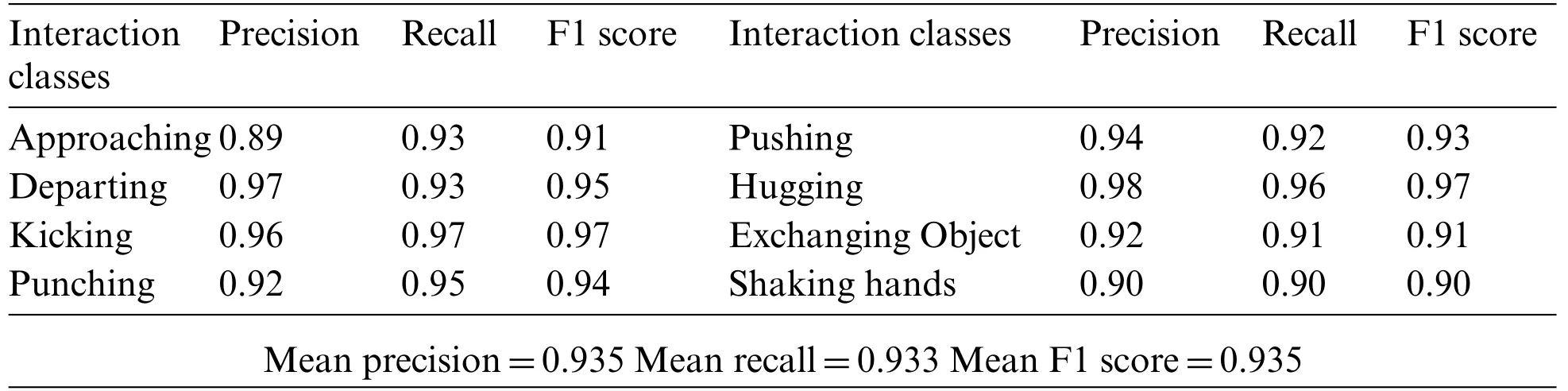

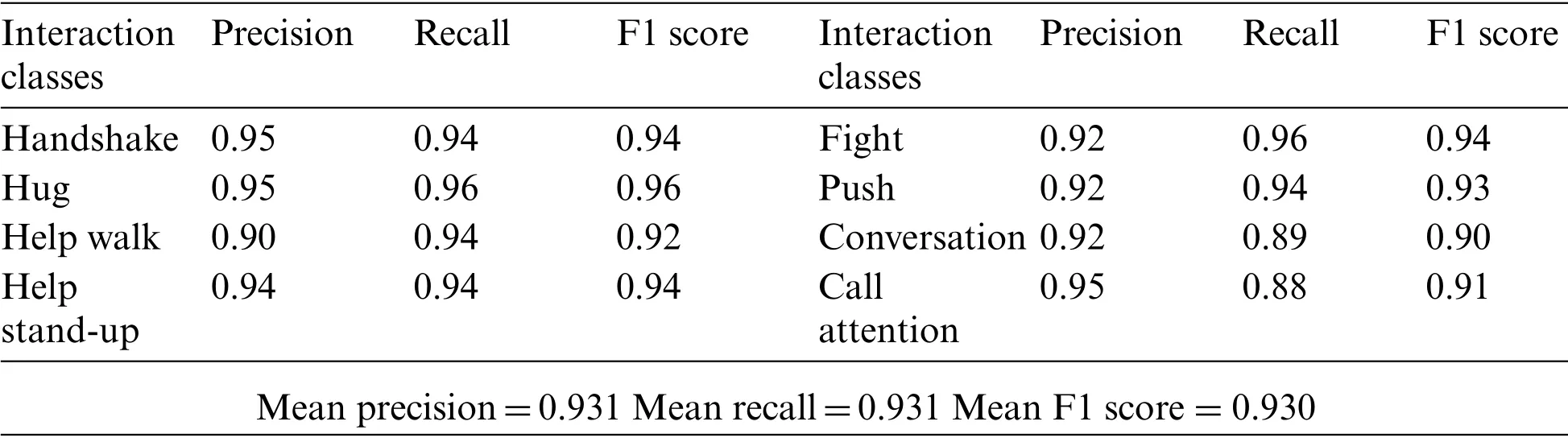

Experiment II: Precision, Recall and F1 Measures

Precision is the ratio of correctly predicted positives to the total predicted positives, while recall, also called the true positive rate, is the ratio of correctly predicted positives to the total actual positives. The F1 score is the harmonic mean of precision and recall. The precision, recall and F1 score for the classes of the SBU and UoL datasets are given in Tabs. 4 and 5, respectively.
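Per-class precision, recall and F1 can be computed directly from a confusion matrix, as in the following sketch. The function name `per_class_metrics` and the 2×2 matrix are illustrative and do not reproduce any result from the paper.

```python
def per_class_metrics(confusion):
    """Return (precision, recall, f1) per class.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        predicted = sum(confusion[i][k] for i in range(n))   # TP + FP
        actual = sum(confusion[k])                           # TP + FN
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        metrics.append((precision, recall, f1))
    return metrics

# Made-up two-class confusion matrix
confusion = [
    [8, 2],
    [1, 9],
]
metrics = per_class_metrics(confusion)
```

For the first class this gives a precision of 8/9, a recall of 8/10 and an F1 score of 16/19.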

Table 2: Confusion matrix showing recognition accuracies over classes of SBU dataset

Table 3: Confusion matrix showing recognition accuracies over classes of UoL dataset

Table 4: Precision, recall and F1 score over classes of SBU dataset

Table 5: Precision, recall and F1 score over classes of UoL dataset

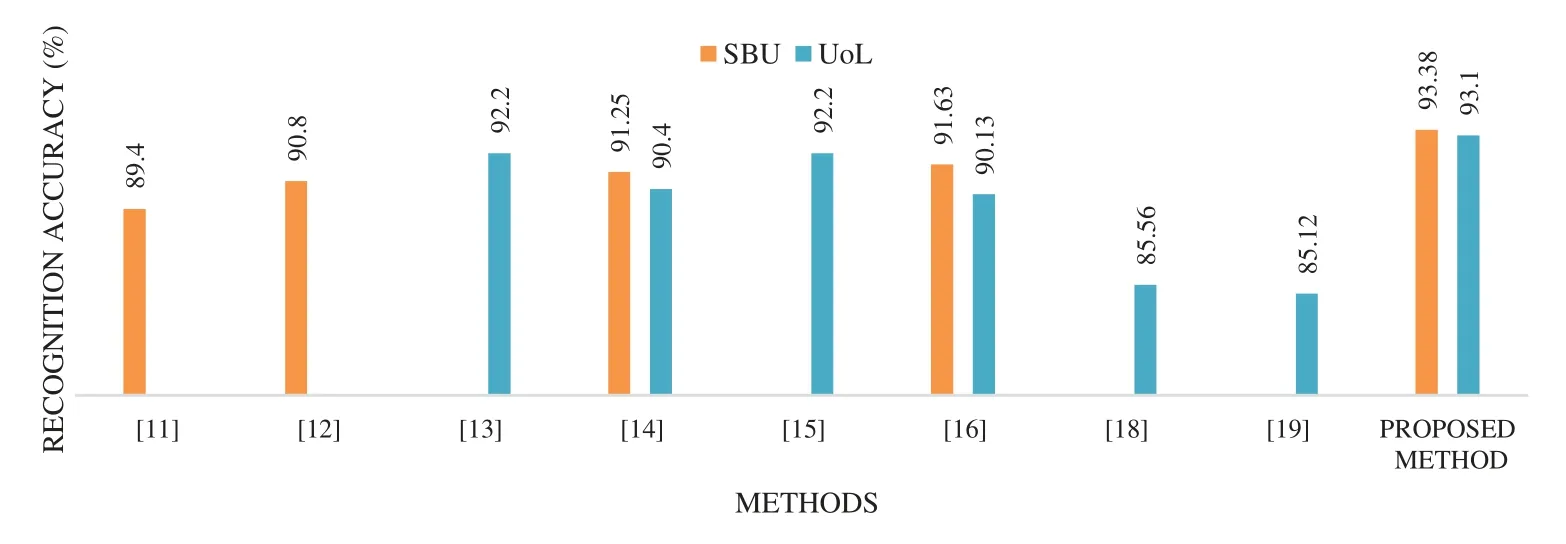

Experiment III: Comparison with Other Systems

This section compares the proposed methodology with other recent methods, as shown in Fig. 6. These methods have been discussed in Section 2.

Figure 6: Comparison of mean recognition accuracy of the proposed method with other recent methods over HHI datasets

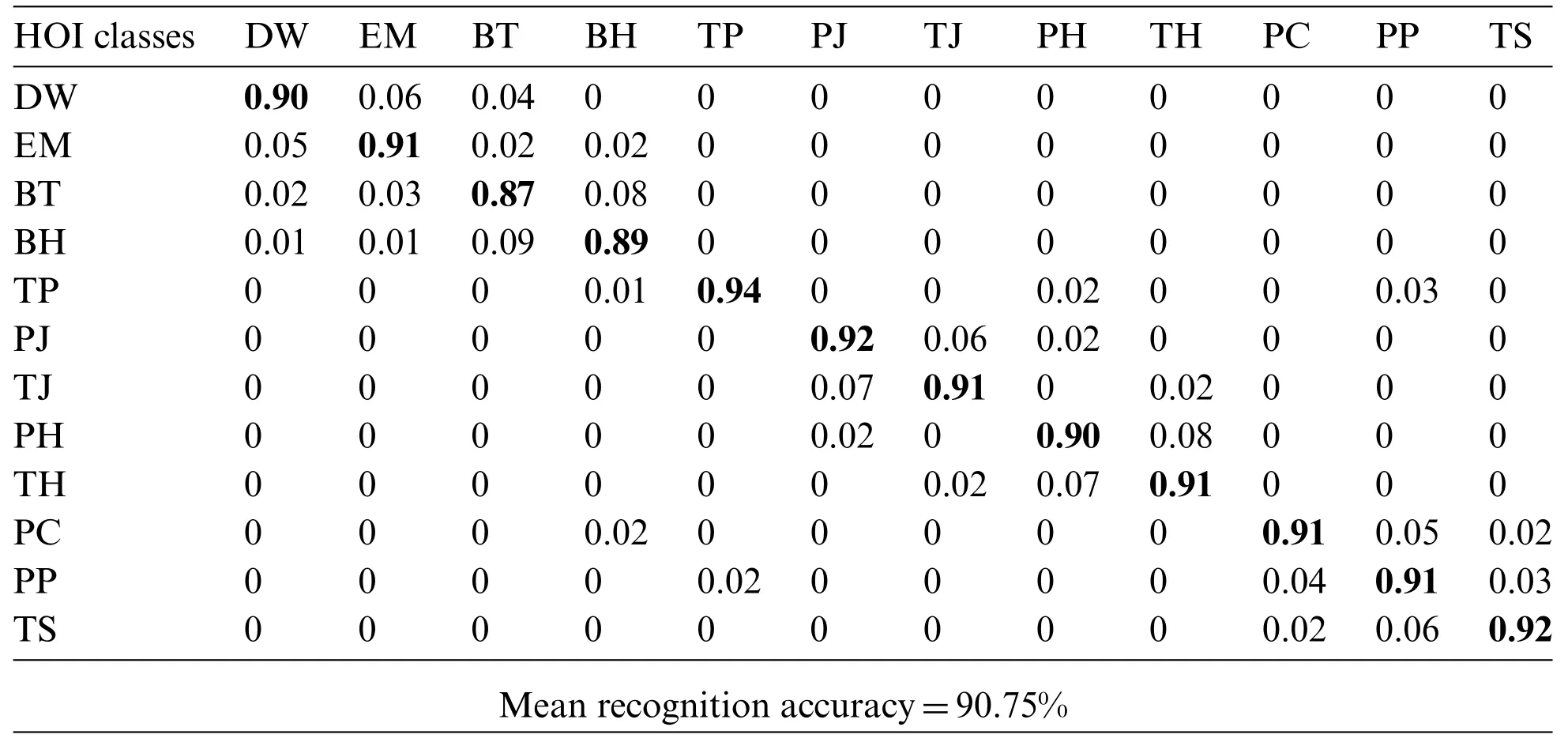

4.2.2 Experimental Results on HOI Datasets

Experiment I: Recognition Accuracies

The classification results for the classes of the SYSU and NTU datasets are shown in the form of confusion matrices in Tabs. 6 and 7, respectively. It was observed during experimentation that interactions involving similar objects, such as packing backpacks and wearing backpacks, are confused with each other.

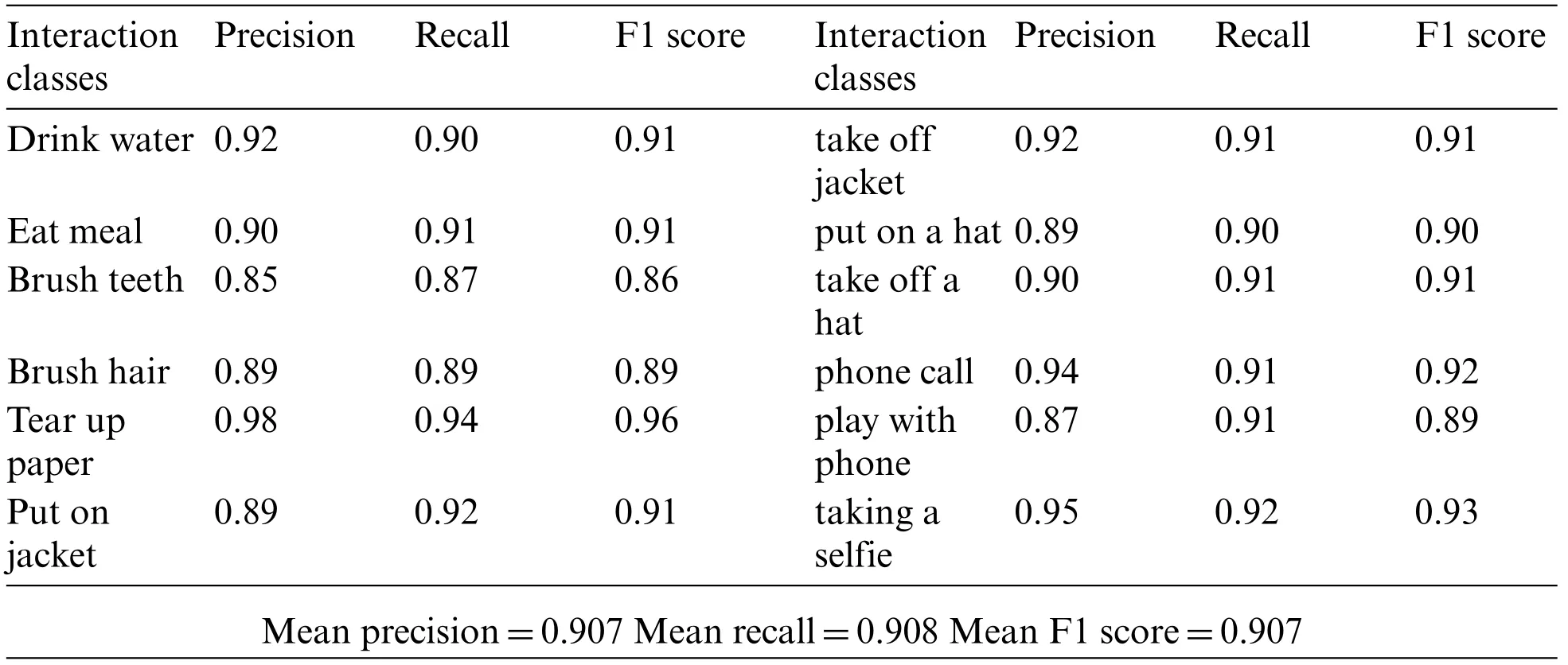

Experiment II: Precision, Recall and F1 Measures

The precision, recall and F1 scores for the classes of the SYSU and NTU datasets are given in Tabs. 8 and 9, respectively. These scores indicate that the system recognizes each HOI with high precision.

Table 6: Confusion matrix showing recognition accuracies over classes of SYSU 3D HOI dataset

Table 7: Confusion matrix showing recognition accuracies over classes of NTU RGB+D dataset

Table 8: Precision, recall and F1 score over classes of SYSU dataset

Table 9: Precision, recall and F1 score over classes of NTU dataset

Experiment III: Comparison with Other Systems

This section compares the proposed methodology over HOI datasets with other recent methods, as shown in Fig. 7. In [36], an RGB-D HOI system based on joint heterogeneous feature learning was proposed. An RGB-D HOI system based on SIFT regression was proposed in [38], in which a feature map was constructed from the Local Accumulative Frame Feature (LAFF). Furthermore, a study in [39] explained graph regression, whereas a multi-modality learning convolutional network was proposed in [40]. In [41], the skeletal joints extracted via depth sensors were represented in the form of key poses and temporal pyramids. A mobile robot platform-based HIR was performed in [42] using skeleton-based features. Moreover, the overall human interactions were divided into interactions of different body parts in [43]. In that work, pairwise features were extracted to track human actions. In [44], the authors introduced a semi-automatic rapid upper limb assessment (RULA) technique using Kinect v2 to evaluate upper limb motion.

Figure 7: Comparison of mean recognition accuracy of different recent methods over HOI datasets

5 Discussion

A comparison of the proposed system with other systems showed that it performed better than many other systems proposed in recent years. Moreover, the high accuracy scores justify the use of additional depth information along with RGB information. Similar findings were also presented in [16] and [17]. However, the system has some limitations. For example, during skeletal joint extraction, it was challenging to locate the joints of occluded body parts. To overcome this limitation, we divided the silhouette into four parts and then located the top, bottom, left and right pixels to identify the joints in each part. Moreover, most of the interactions in the datasets used here are performed in standing positions. For this reason, there is less occlusion of human body parts by objects or other body parts, and the performance rate is not greatly affected.

6 Conclusion and Future Works

This paper proposes a real-time human activity monitoring system that recognizes the daily activities of humans using multi-vision sensors. The system integrates two types of HAR systems: HHI recognition systems and HOI recognition systems. After silhouette segmentation, two unique features are extracted: thermal and orientation features. To validate the proposed system’s performance, three types of experiments are performed. A comparison with other state-of-the-art systems is also provided, which shows the better performance of the proposed system. In real life, the proposed system should be applicable to many areas such as assisted living, behavior understanding, security systems, human-robot interactions, e-health care and smart homes.

We are working on integrating more types of human activity recognition and developing a system that monitors human behavior in both indoor and outdoor environments as part of our future works.

Funding Statement: This research was supported by a grant (2021R1F1A1063634) of the Basic Science Research Program through the National Research Foundation (NRF) funded by the Ministry of Education, Republic of Korea.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

Computers, Materials & Continua, 2022, Issue 8
