Evaluation of artificial intelligence models for osteoarthritis of the knee using deep learning algorithms for orthopedic radiographs

INTRODUCTION

Knee osteoarthritis (KOA) is a debilitating joint disorder that degrades the knee articular cartilage and is characterized by joint pain, stiffness, and deformity, and pathologically by cartilage wear and osteophyte formation. KOA has a high incidence among the elderly, obese, and sedentary. Early diagnosis is essential for clinical treatments and pathology[1,2]. The severity of the disease and the nature of bone deformation and intra-articular pathology impact decision-making. Radiographs form an essential element of preoperative planning and surgical execution.

Artificial intelligence (AI) is impacting almost every sphere of our lives today. We are pushing the boundaries of human capabilities with unmatched computing and analytical power. Newer technological advancements and recent progress in medical image analysis using deep learning (DL), a form of AI, have shown promising ways to improve the interpretation of orthopedic radiographs. Traditional machine learning (ML) has often put much effort into extracting features before training the algorithms. In contrast, DL algorithms learn the features from the data itself, leading to improvement in practically every subsequent step. This has turned out to be a hugely successful approach and opens up new ways for experts in ML to implement their research and applications, such as medical image analysis, by shifting feature engineering from humans to computers.

DL can help radiologists and orthopedic surgeons with the automatic interpretation of medical images, potentially improving diagnostic accuracy and speed. Human error due to fatigue and inexperience, which causes strain on medical professionals, can be reduced by DL, which lowers their workload and, most importantly, provides objectivity to clinical assessment and decisions on the need for surgical intervention. Moreover, DL methods trained on the expertise of senior radiologists and orthopedic surgeons in major tertiary care centers could transfer that experience to smaller institutions and create more space in emergency care, where expert medical professionals might not be readily available. This could dramatically enhance access to care. DL has been successfully used in different orthopedic applications, such as fracture detection[3-6], bone tumor diagnosis[7], detection of hip implant mechanical loosening[8], and grading of OA[9,10]. However, the implementation of DL in orthopedics is not without challenges and limitations.

While most of our orthopedic technological research remains focused on core areas like implant longevity and biomechanics, it is essential that orthopedic teams also invest their effort in an exciting area of research that is likely to have a long-lasting impact on the field of orthopedics. With this aim, we set up a project to determine the feasibility and efficacy of the DL algorithms for orthopedic radiographs (DLAOR). To the best of our knowledge, only a few published studies have applied DL to KOA classification, and many of these involved application to preprocessed, highly optimized images[11]. In this study, radiographs from the Osteoarthritis Initiative staged by a team of radiologists using the Kellgren-Lawrence (KL) system were used. They were standardized and augmented automatically before being used as input to a convolutional neural network model.

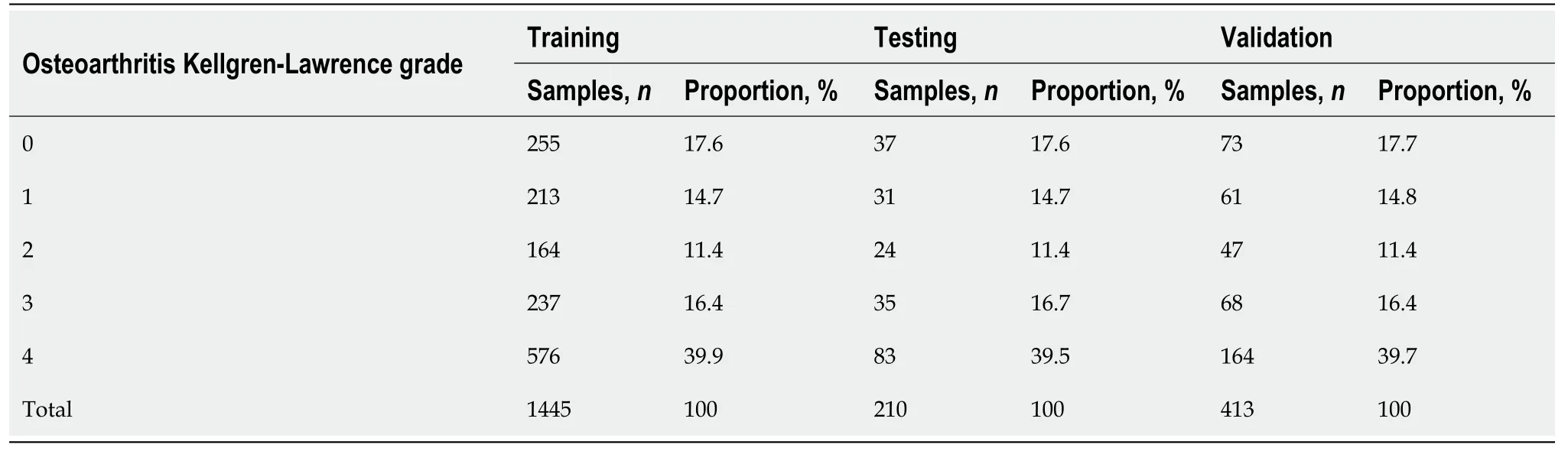

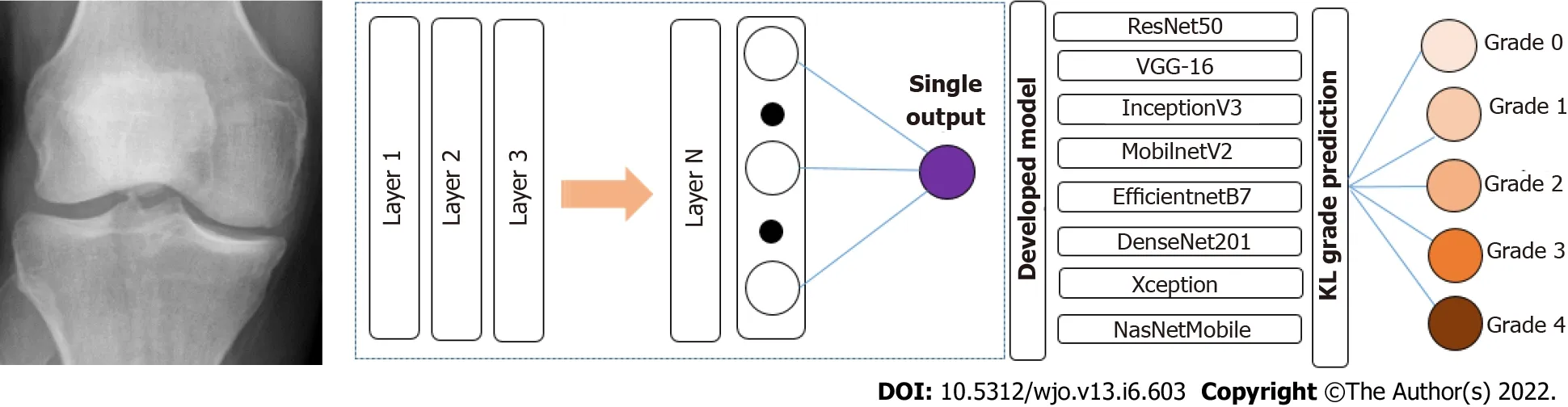

As per the Kellgren-Lawrence scale, three orthopedic surgeons reviewed these independent cases, graded their severity for OA, and settled disagreements through consensus. To assess the efficacy of ML in accurately classifying radiographs for KOA, eight models were used: ResNet50, VGG-16, InceptionV3, MobileNetV2, EfficientNetB7, DenseNet201, Xception, and NASNetMobile. A total of 2068 images were used, of which 70% were used initially to train the model, 10% were then used to test the model, and 20% were used for accuracy testing and validation of each model.

MATERIALS AND METHODS

The study was approved by an institutional review committee as well as the institutional ethics committee (HNH/IEC/2021/OCS/ORTH 56). It involved a diagnostic method based on retrospectively collected radiographic examinations. The examinations were analyzed by a neural network for assessment of both presence and severity of KOA using the KL system[12]. AI identifies patterns in images based on input data and associated recurring learning. It is fed with both the input (the radiographic images) and the expected output label (classification of OA grade) to establish a connection between the features of the different stages of KOA (possible osteophytes, joint space narrowing, sclerosis of subchondral bone) and the corresponding grade[13]. Before being fed to the classification engine, images were manually annotated by a team of radiologists according to the KL grading.

Data group

All the medical images were de-identified, without personal details of patients. The inclusion and exclusion criteria were applied after de-identification, complying with international standards like the Health Insurance Portability and Accountability Act.

Inclusion criteria

The following inclusion criteria were used: age above 18 years; recorded patient complaint of chronic knee pain (pain most of the time for more than 3 mo) and presentation to the orthopedic department or following radiologic investigation; and recorded patient unilateral knee complaints and knee grading according to KL system classification.

Exclusion criteria

The algorithm processed the images collected for each class. Each DICOM format radiograph was automatically cropped to the active image area: any black border was removed, and the image was reduced to a maximum of 224 pixels. The aspect ratio was retained by padding the rectangular image to a square format of 224 × 224 pixels. Training images were augmented by cropping and rotating. Figure 2 shows the labelling of the KOA images in different classes as 0, 1, 2, 3, and 4, as per the KL grading scale and the knee severity from the X-ray images. The data were split into five different classes as per the KL grade shown in Figure 2. Table 1 shows the data splits in the training, testing, and validation subsets, according to KL grades.
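The crop, resize, and pad steps described above can be sketched as follows. This is a minimal illustration and not the study's pipeline: it assumes the radiograph is already loaded as a 2D grayscale NumPy array, treats pixels at or below a hypothetical `threshold` as black border, and uses nearest-neighbour resizing for simplicity. All function names are illustrative.

```python
import numpy as np

TARGET = 224  # side length in pixels, as stated in the text


def crop_black_border(img: np.ndarray, threshold: int = 0) -> np.ndarray:
    """Crop outer rows and columns whose pixels are all <= threshold (assumed black)."""
    mask = img > threshold
    if not mask.any():
        return img  # fully black image: nothing sensible to crop
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def resize_longest_side(img: np.ndarray, target: int = TARGET) -> np.ndarray:
    """Nearest-neighbour resize so that the longer side equals `target` pixels."""
    h, w = img.shape
    scale = target / max(h, w)
    new_h = max(1, round(h * scale))
    new_w = max(1, round(w * scale))
    # Map each output row/column back to its source index.
    row_idx = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    col_idx = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return img[row_idx][:, col_idx]


def pad_to_square(img: np.ndarray, target: int = TARGET) -> np.ndarray:
    """Centre the image on a black target x target canvas, keeping its aspect ratio."""
    h, w = img.shape
    canvas = np.zeros((target, target), dtype=img.dtype)
    top = (target - h) // 2
    left = (target - w) // 2
    canvas[top:top + h, left:left + w] = img
    return canvas


def preprocess(img: np.ndarray) -> np.ndarray:
    """Crop the border, shrink to at most 224 pixels per side, pad to 224 x 224."""
    return pad_to_square(resize_longest_side(crop_black_border(img)))
```

Padding rather than stretching keeps the joint geometry undistorted, which matters when the grade depends on joint space width.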

For the anteroposterior view of the knee, the leg had been extended and centered to the central ray. The leg had then been rotated slightly inward, in order to place the knee and the lower leg in a true anterior-posterior position. The image receptor had then been centered on the central ray. For a lateral view, the patient had been positioned on the affected side, with the knee flexed 20-30 degrees.

Three orthopedic surgeons reviewed these independently, graded them for the severity of KOA as per the KL scale, and settled exams with disagreement through a consensus session. Finally, to benchmark the efficacy, the results of the models were also compared with those of a first-year orthopedic trainee who independently classified these images according to the KL scale.

Pre-processing

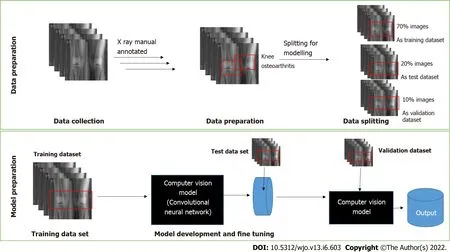

The models were trained with 2068 X-ray images. The dataset was split into three subsets used for training, testing, and validation, with a split ratio of 70-10-20. Initially, 70% of the images were used to train the model. Subsequently, 10% were used to test, and finally 20% were used for validation of the models. An end-to-end interpretable model took full knee radiographs as input and assessed KOA severity according to different grades (Figure 1).
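As an illustration of the 70-10-20 split, the sketch below partitions 2068 image identifiers into training, testing, and validation subsets. It assumes a simple shuffled split with a fixed seed. The study does not state whether the split was stratified by KL grade, so the resulting counts need not match those reported in Table 1. The function name and seed are hypothetical.

```python
import random


def split_dataset(items, train=0.70, test=0.10, seed=42):
    """Shuffle items and split them into train/test/validation subsets.

    The validation subset receives whatever remains after the train and
    test fractions are taken (20% with the default arguments).
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed for reproducibility
    n_train = round(len(items) * train)
    n_test = round(len(items) * test)
    train_set = items[:n_train]
    test_set = items[n_train:n_train + n_test]
    val_set = items[n_train + n_test:]
    return train_set, test_set, val_set


# Hypothetical identifiers standing in for the 2068 radiographs.
image_ids = range(2068)
train_set, test_set, val_set = split_dataset(image_ids)
print(len(train_set), len(test_set), len(val_set))
```

With imbalanced KL grades, a stratified split per grade would keep class proportions similar across the three subsets.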

AI can only operate within the areas in which it has been trained, whereas human intelligence and its interpretation are independent of the area in which it has been trained. One of the key differences between humans and machines is that humans are able to solve problems related to unforeseen domains, while machines do not have that capability. Model fit can be improved by increasing the size or number of parameters in the ML model, examining the complexity or type of the model, increasing the time spent training, and increasing the number of iterations until the loss function is minimized.

Input images

The following exclusion criteria were used: patients who had undergone operation for total knee arthroplasty in either of the knees; patients who had undergone operation for unicompartmental knee arthroplasty; or patients who had recorded bilateral knee complaints.

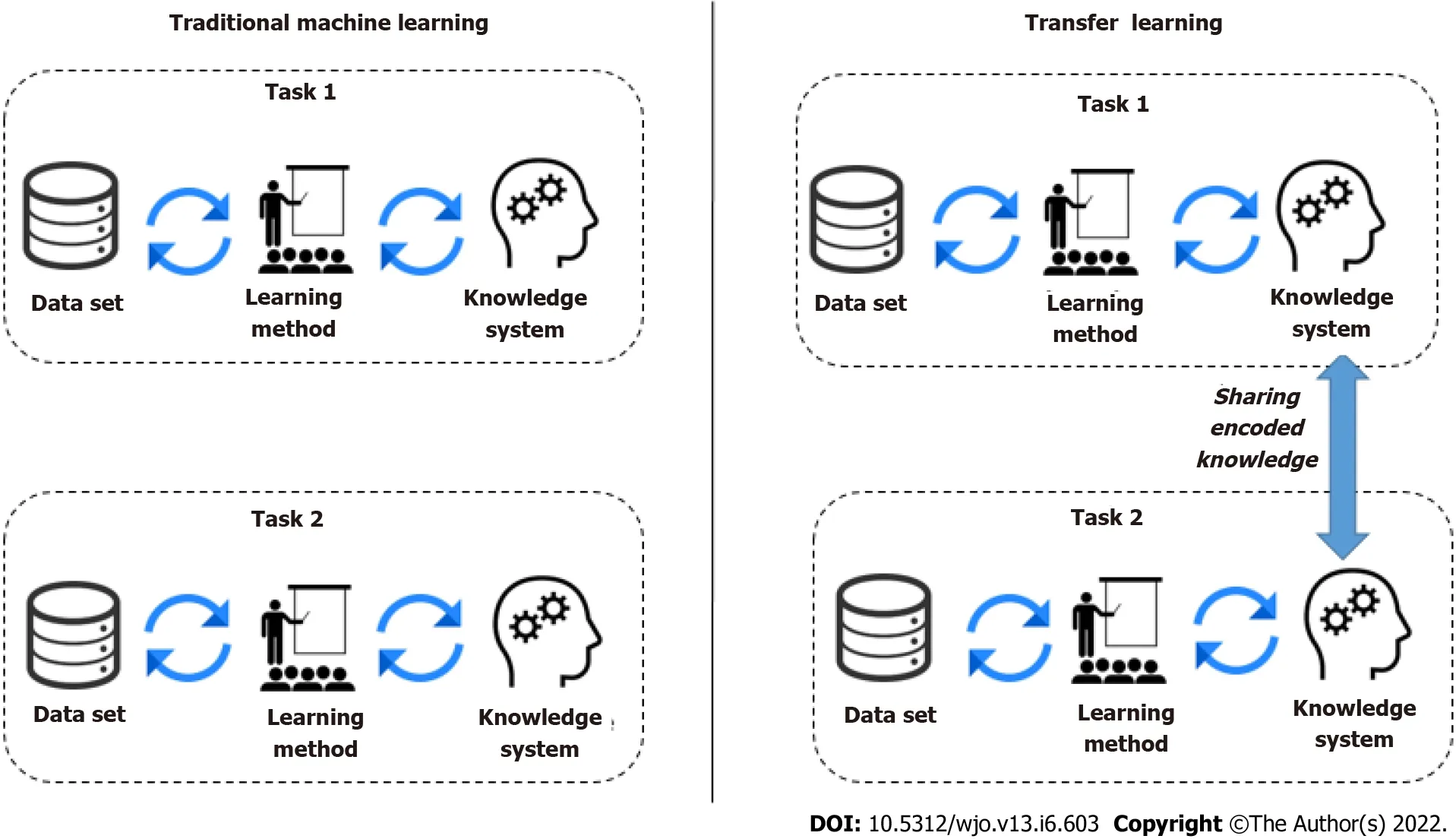

Need of transfer learning

A DL model is a program that has been trained on a set of data (called the training set) to recognize certain types of patterns. AI models use different algorithms to understand the information in a dataset and then learn from these data, with the definite goal of solving business challenges and overarching problems. Traditional ML techniques used in image classification focus on acquiring knowledge from existing data and classifying. Most commonly, this means synthesizing practical concepts from historical data. Transfer learning, however, is a technique that encapsulates knowledge learned across many tasks and transfers it to new, unseen ones. Knowledge transfer can help speed up training, prevent overfitting, and improve the obtainable final performance. In transfer learning, knowledge is transferred from a trained model (or a set thereof) to a new model by encouraging the new model to have similar parameters. The trained model from which knowledge is transferred is not trained with this transfer in mind, and hence the task it was trained on must be very general for it to encode useful knowledge concerning other tasks. Transfer learning is helpful when only a small training dataset with similar feature images is available. Thus, we opted for a transfer learning approach to develop the DLAOR system. Figure 3 compares traditional ML with transfer learning.

DLAOR system

DLAOR is an image classification system where images are fed into the model to classify the KOA grade in medical images. It is built using a data ingestion pipeline, modelling engine, and classification system. The present study utilized a transfer learning technique for the effective classification of KOA grades using medical images. Since there are multiple well-established transfer learning models, we decided to use eight classification models. The selected models were reported to be the most efficient for image classification. All the selected models were compared to identify the best technique for the DLAOR system. The same set of images had been given to two OA surgeons to classify KL grades based on expert human interpretation and compared with models as part of the DLAOR system (Figure 4).

By using DL methods trained by senior radiologists and orthopedic surgeons in larger hospitals, smaller institutions could gain the expertise they need and create more space in emergency care, where medical professionals may not be readily available. Providing care in this manner would improve access dramatically.

Model development

In this study, transfer learning models were developed in Python; the Google Colab platform was used to run the code with a GPU configuration to process models faster. Model development had the following steps: (1) Data augmentation and generators. Augmentation functions and data generator functions were developed for the training, testing, and validation datasets in this stage; (2) Import the base transfer learning model. The next step was to import the base model from the TensorFlow/Keras library; (3) Build and compile the model. The base model was used as a layer in a sequential model. After that, a single fully connected layer was added on top. Then, the model was compiled with Adam as the optimizer and categorical cross-entropy as the loss function; (4) Fit the model. In this step, the final model was fitted over the validation dataset. Then, with the help of 10 epochs, the model was finalized. This model was then evaluated over the test data; and (5) Fine-tune the model. We fine-tuned the model to improve its performance, using a learning rate of 0.001 and 20 additional epochs.
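Steps (2) and (3) above might look roughly like the following in TensorFlow/Keras. This is a sketch under stated assumptions, not the study's code: DenseNet201 is used only as an example base model, ImageNet weights and global average pooling are assumed, the base is assumed to be frozen for the initial fit, and the grayscale radiograph is assumed to be replicated to three channels. The function and constant names are hypothetical.

```python
NUM_CLASSES = 5              # KL grades 0-4
INPUT_SHAPE = (224, 224, 3)  # assumption: grayscale image replicated to 3 channels


def build_transfer_model(learning_rate: float = 1e-3):
    """Build a pretrained base plus one dense softmax layer, compiled with Adam."""
    # Imported lazily so this module can be loaded without TensorFlow installed.
    import tensorflow as tf

    base = tf.keras.applications.DenseNet201(
        include_top=False,      # drop the ImageNet classification head
        weights="imagenet",     # assumption: ImageNet-pretrained weights
        input_shape=INPUT_SHAPE,
        pooling="avg",          # assumption: global average pooling
    )
    base.trainable = False      # assumption: freeze the base for the initial fit

    model = tf.keras.Sequential([
        base,                   # base model used as a single layer
        tf.keras.layers.Dense(NUM_CLASSES, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=[
            "accuracy",
            tf.keras.metrics.Precision(),
            tf.keras.metrics.Recall(),
        ],
    )
    return model
```

A typical use would call `model.fit(...)` on a data generator for 10 epochs, then set `base.trainable = True`, recompile with a learning rate of 0.001, and fit for 20 further epochs. The generator objects themselves are not shown here and would need to yield one-hot labels to match the categorical cross-entropy loss.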

RESULTS

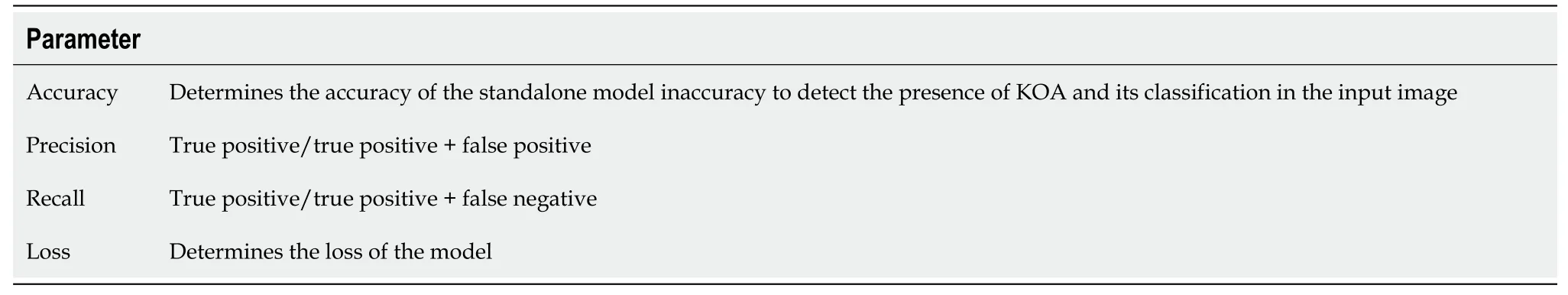

In the present study, the transfer learning models and expert human interpretation were compared on the following parameters to determine which performed better at image classification of KOA grades: (1) Accuracy, which is the fraction of images labeled correctly; (2) Precision, which is the number of true positives (TPs) over the sum of TPs and false positives. It shows what fraction of positive predictions are correct; (3) Recall, which is the number of TPs over the sum of TPs and false negatives. It shows what fraction of actual positives are correctly identified; and (4) Loss, which indicates the prediction error of the model.
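To make the definitions above concrete, the sketch below computes accuracy, precision, and recall for a multi-class problem such as KL grading. Precision and recall are computed per class and then macro-averaged. The averaging scheme is an assumption, since the text does not say how per-class values were combined, and the example labels are invented for illustration.

```python
from collections import Counter


def classification_metrics(y_true, y_pred, labels):
    """Return (accuracy, macro precision, macro recall) for multi-class labels."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1   # correct prediction for this class
        else:
            fp[pred] += 1    # predicted class received a false positive
            fn[truth] += 1   # true class received a false negative

    def ratio(num, den):
        return num / den if den else 0.0

    accuracy = ratio(sum(tp.values()), len(y_true))
    precision = sum(ratio(tp[c], tp[c] + fp[c]) for c in labels) / len(labels)
    recall = sum(ratio(tp[c], tp[c] + fn[c]) for c in labels) / len(labels)
    return accuracy, precision, recall


# Invented example with three grades.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
accuracy, precision, recall = classification_metrics(y_true, y_pred, labels=[0, 1, 2])
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
```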

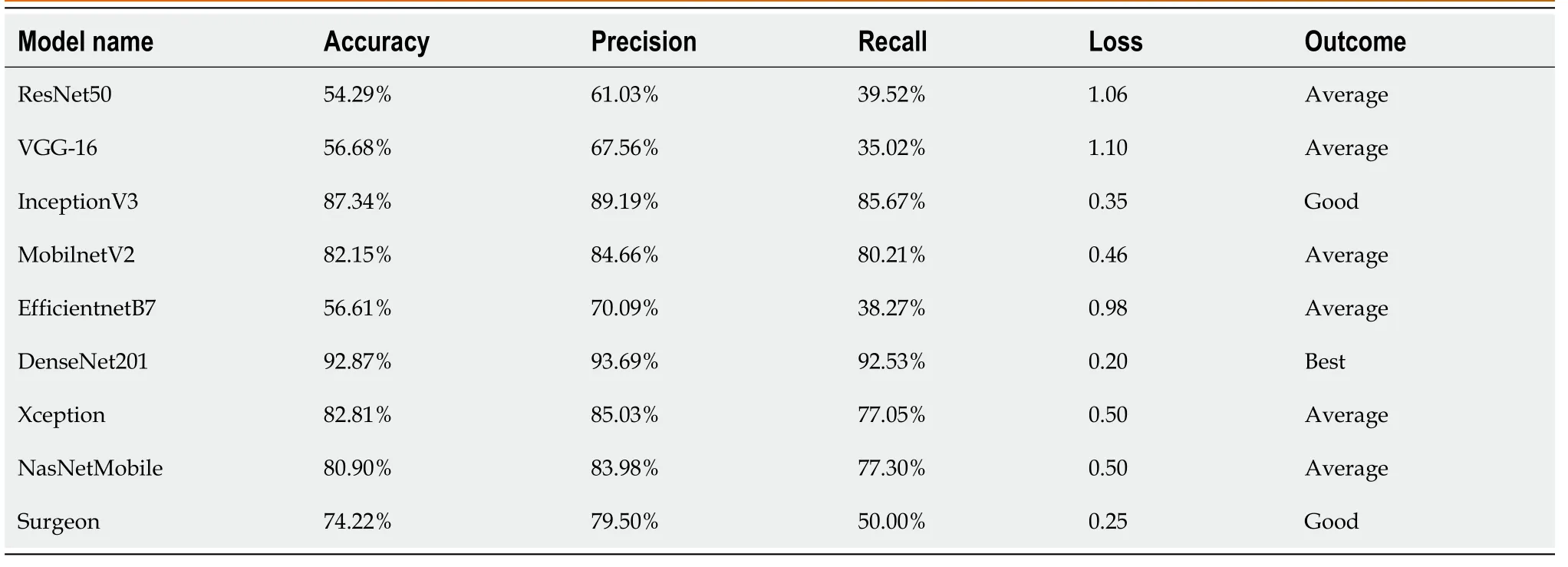

Table 2 compares the accuracy, precision, recall, and loss of the various transfer learning models used for KOA. The highest accuracy was 92.87%, achieved using DenseNet201, which also had higher precision and recall values than all the other models.

This study used multi-class cross-entropy loss. It is a measure of the divergence of the predicted class from the actual class. It is calculated as a separate loss for each class label per observation, and the results are summed (Table 3).
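A small worked version of the multi-class cross-entropy described above is sketched here. For each observation the per-class terms are summed, and the per-observation losses are then averaged over the batch. The averaging step is an assumption that follows common practice. The `eps` clipping value is likewise an illustrative choice to avoid taking the logarithm of zero.

```python
import math


def categorical_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean cross-entropy over observations for one-hot labels.

    y_true: list of one-hot rows, e.g. [0, 0, 1, 0, 0] for KL grade 2.
    y_prob: list of predicted probability rows of the same shape.
    """
    total = 0.0
    for true_row, prob_row in zip(y_true, y_prob):
        # Sum the per-class terms; only the true class contributes for one-hot labels.
        total += -sum(t * math.log(max(p, eps)) for t, p in zip(true_row, prob_row))
    return total / len(y_true)


# A maximally uncertain prediction over five grades gives a loss of ln(5).
uniform_loss = categorical_cross_entropy([[0, 0, 1, 0, 0]], [[0.2] * 5])
# A confident, correct prediction gives a loss close to zero.
confident_loss = categorical_cross_entropy(
    [[0, 0, 1, 0, 0]], [[0.01, 0.01, 0.96, 0.01, 0.01]]
)
```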

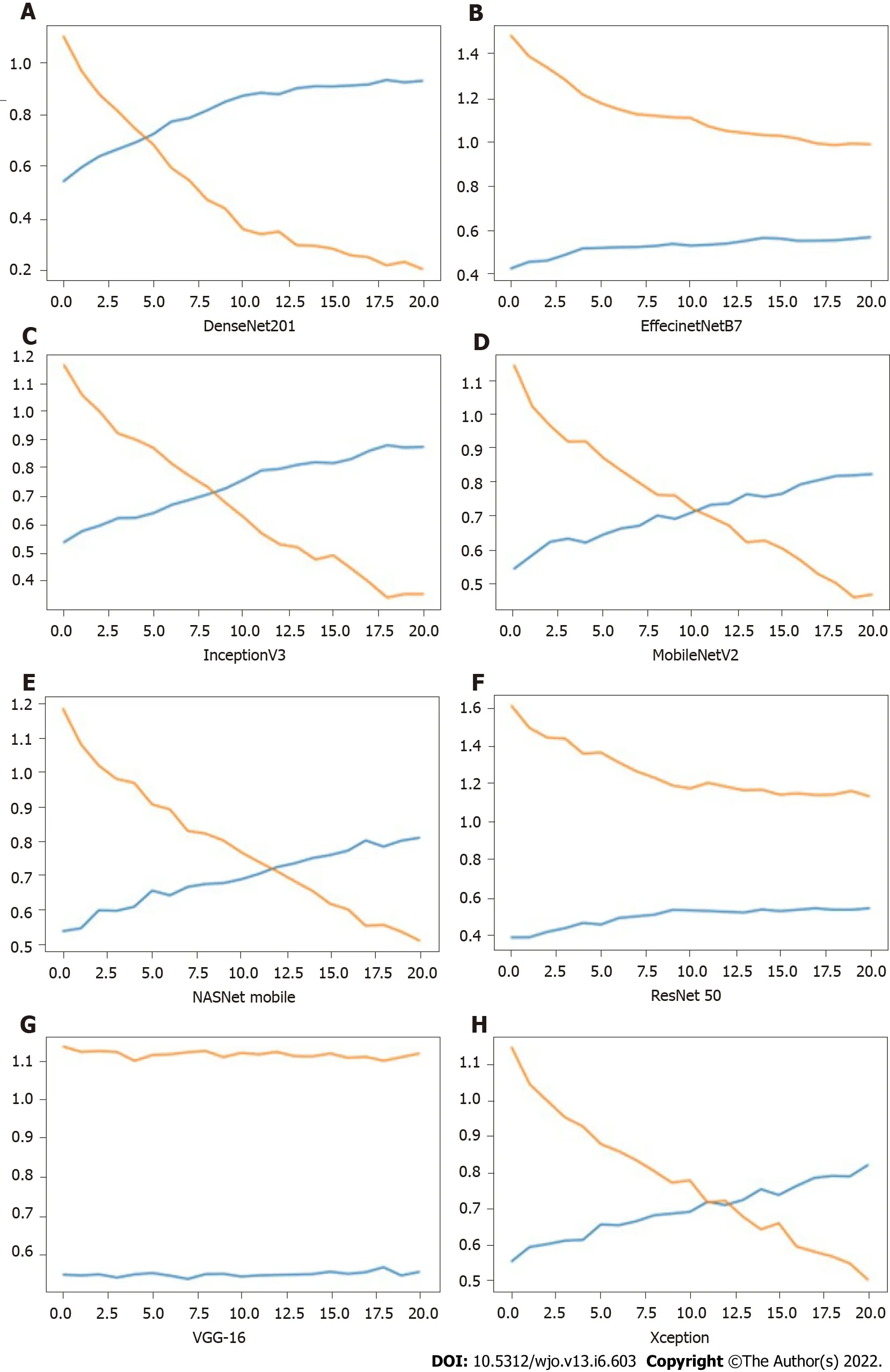

Figure 5 shows the impact of iterations on the loss and accuracy of the eight different models. All the image classification models had accuracy over 54%, while five models achieved accuracy over 80%, and one model achieved accuracy above 90%. The highest precision, 93.69%, was achieved by the DenseNet model. The same model had the highest accuracy, 92.87%, and a recall of 92.53%. Maximum loss was observed for the VGG-16 model. The minimum values for accuracy and precision were observed in the ResNet50 model. The results showed that DenseNet was the best model with which to develop a DLAOR image classification system. The expert human interpretation for image classification was also captured for comparison. This was performed by two OA surgeons, and the observed accuracy was 74.22%, with the second-lowest loss value in comparison to all models.

DISCUSSION

Making the DL algorithm interpretable can benefit both the fields of computer science (to enable the development of improved AI algorithms) and medicine (to enable new medical discoveries). Interpreting and understanding cases in which a machine fails or underperforms relative to the human expert can improve the DL algorithm itself. Understanding the AI decision-making process when the machine outperforms the human expert can lead to new medical discoveries by extracting the deep knowledge and insights that the AI uses to make more optimal decisions than humans. DL in healthcare has already achieved notable results and improved the quality of treatment. Multiple organizations, such as Google and IBM, have spent a significant amount of time developing DL models that make predictions about hospitalized patients and support the management of patient data and results. The future of healthcare has never been more intriguing, and both AI and ML have opened opportunities to craft solutions for particular needs within healthcare.

The present study found that a neural network can be trained to classify and detect KOA severity and laterality as per the KL grading scale. The study reported excellent accuracy with the DenseNet model for the detection of the severity of KOA as per KL grading. Previously, it was challenging for healthcare professionals to collect and analyze large datasets for classification, analysis, and prediction of treatments, since such tools and techniques were not available. With the advent of ML, this has become more feasible. ML may also help provide doctors with vital statistics, real-time data, and advanced analytics on the patient’s disease, lab test results, blood pressure, family history, and clinical trial data. The future applications of the technology in orthopedics and of this study’s findings are manifold. We can also borrow from the experience of other subspecialties.

DL algorithms may help distinguish symptomatic OA needing treatment from radiological OA with no symptoms needing only observation. Both humans and machines make mistakes in applying their intelligence to solving problems. In ML, an overfitted model memorizes its training examples, lacks generalization, and fails to work on never-before-seen examples. In ML, transfer learning is a method that reuses a model that has been developed by ML specialists and has already been trained on a large dataset[18,19].

Transfer learning takes what has been learned from one set of distributions and uses it to predict or classify others. In humans, the transfer of expertise to students is usually performed by teachers and training providers. This does not necessarily make the students as capable as their teachers. Transfer learning, however, makes the knowledge-gathering party as wise as the knowledge provider. In the case of humans, transferring expertise relies upon the inherent intelligence of the expert to improve classification and prediction skills.

Future prospects

With the identification of top models for the classification of OA using a medical image, these models can be deployed to automate preliminary X-ray processing and determination of the next course of action. Any image classification project starts with a substantial number of images classified by an expert. These provide the inputs for training the model. Multiple challenges can lead to misclassification of data during validation of the model by a machine, such as intra-class variation, image scale variation, viewpoint variation, orientation of the image, occlusion, multiple objects in the image, illumination, and background clutter. While humans can overcome some of these challenges with the naked eye, it is difficult for a machine to reduce the impact of such factors while classifying the images. This results in increased loss and affects the outcome of the prediction.

Expert human interpretation accuracy comes with experience, as was observed in this study. Both humans and machines are prone to overfitting, in which preconceived notions bias decision-making. AI is limited to the areas in which it is trained. Human intelligence and its interpretation, however, are independent of this domain of training. The key differentiator between human beings and machines is that humans are able to solve problems related to unforeseen domains, while machines do not have that capability. The common solutions suggested by experts to overcome overfitting or underfitting are cross-validation and regularization. Model fit can also be improved by increasing the size or number of parameters in the ML model, exploring the complexity or type of the model, increasing the training time, and increasing the number of iterations until the loss function is minimized. Another major drawback of utilizing transfer learning models for image classification is negative transfer, where transfer learning ends up decreasing the accuracy or performance of the model. Thus, there is a need to customize the models and their parameters to see the impact of tuning and epochs on the performance or accuracy of the model.

This study is a preliminary step to the implementation of an end-to-end machine recommendation engine for the orthopedic department, which can provide sufficient inputs for OA surgeons to determine the requirement of OA surgery, patient treatment, and prioritization of patients based on engine output and related patient demographics.

CONCLUSION

Standard radiographs of hand, wrist, and ankle fractures were automatically diagnosed as a first step while identifying the examined view and body part[5]. A continuous, reliable, fast, effective, and most importantly accurate machine would be an asset for preliminary diagnosis where no radiology expert is available on the premises. AI solutions may incorporate both image data and the radiology text report for best judgment of an image or further learning of the network[14]. A recent study[15] revealed that isolating the subtle changes in cartilage texture in a large number of magnetic resonance imaging T2-mapped images of the knee could effectively predict the onset of early symptomatic (WOMAC score) KOA, which may occur 3 years later. We are in a new era of orthopedic diagnostics where computers and not the human eye will comprehend the meaning of image data[16,17], creating a paradigm shift for orthopedic surgeons and even more so for radiologists. The application of AI in orthopedics has mainly focused on the implementation of DL on clinical images.

Transfer learning techniques will not work when the image features learned by the classification layer are neither sufficient nor appropriate to identify the classes for KL grades. When the medical images are not similar, the KOA features are transferred poorly to the model. In conventional model building, layers are adjusted as convenient to improve accuracy. If the same is done in transfer learning, it reduces the number of trainable parameters and results in overfitting. Thus, it is important that a balance between overfitting and learning features is achieved.

Future work will aim to improve the accuracy, which can be achieved with more images and refinement of the features of the algorithm. The implication of this study goes beyond the simple classification of KOA. The study paves the way for extrapolating the learning from KOA classification using ML to develop an automated KOA classification tool and support healthcare professionals in better decision-making. This study helps in exploring trends and innovations and improving the efficiency of existing research and clinical trials associated with AI-based medical image classification. The healthcare sector, including orthopedics, generates a large amount of real-time and non-real-time data. However, the challenge is to develop a pipeline that can collect these data effectively and use them for classification, analysis, prediction, and treatment in the most optimal manner. ML allows building models to quickly analyze data and deliver results, leveraging historical and real-time data. Healthcare personnel can make better decisions on patients’ diagnoses and treatment options using AI solutions, which leads to enhanced and effective healthcare services.

ARTICLE HIGHLIGHTS

Research background

Artificial intelligence (AI) based on deep learning (DL) has demonstrated promising results for the interpretation of radiographs. To develop a machine learning (ML) program capable of interpreting orthopedic radiographs with accuracy, a project called DL algorithm for orthopedic radiographs was initiated. In the first phase, it was used to diagnose knee osteoarthritis (KOA) using the Kellgren-Lawrence scale.

Research motivation


Research objectives

This study aimed to explore the use of transfer learning convolutional neural networks for medical image classification applications using KOA as a clinical scenario, to compare eight different transfer learning DL models for detecting the grade of KOA from a radiograph, to compare the accuracy between results from AI models and expert human interpretation, and to identify the most appropriate model for detecting the grade of KOA.

Research methods

The purpose of this study was to compare eight different transfer learning DL models for KOA grade detection from a radiograph and to identify the most appropriate model for detecting KOA grade.

Research results

Overall, the networks showed accuracy for detecting KOA ranging from 54% to 93%. All networks had an accuracy of more than 50%, and some were highly accurate. The DenseNet model was the most accurate, at 93%, while expert human interpretation had an accuracy of 74%.

Research conclusions

The study compared the accuracy of expert human interpretation and AI models. It showed that an AI model can successfully classify knee X-ray images by KOA grade, and it compared various transfer learning convolutional neural network models against human experts performing the same classification. The purpose of the study was to pave the way for the development of more accurate models and tools, which can improve the classification of medical images by ML and provide insight into orthopedic disease pathology.

Research perspectives

Tiwari A analyzed and evaluated the feasibility and accuracy of the artificial intelligence models with the interpretation of data; Poduval M was involved in critically revising the draft; Bagaria V designed and conceptualized the study and revised the manuscript for important intellectual content; all authors read and approved the final manuscript.

The study was reviewed and approved by the Scientific Advisory Committee and Institutional Ethics Committee of Sir H.N. Reliance Foundation Hospital and Research Centre, Mumbai, India (Approval No. HNH/IEC/2021/OCS/ORTH/56).

Informed consent was waived since the data were retrospectively collated anonymously from routine clinical practice.

The authors of this manuscript have no conflicts of interest to disclose.

No additional data are available.

This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/

India

Anjali Tiwari 0000-0002-8683-6102; Murali Poduval 0000-0002-6821-0640; Vaibhav Bagaria 0000-0002-3009-3485.

Wang JL

A

Wang JL

World Journal of Orthopedics, 2022, Issue 6
