Combination of classifiers with incomplete frames of discernment

Zhung LIU, Jingfei DUAN, Linqing HUANG, Jen DEZERT,Yongqing ZHAO

a School of Automation, Northwestern Polytechnical University, Xi’an 710072, China

b ONERA - The French Aerospace Lab, Palaiseau 91761, France

KEYWORDS Abnormal object;Belief functions;Classifier fusion;Evidence theory;Detection

Abstract The methods for combining multiple classifiers based on belief functions require to work with a common and complete (closed) Frame of Discernment (FoD) on which the belief functions are defined before making their combination. This theoretical requirement is however difficult to satisfy in practice because some abnormal (or unknown) objects that do not belong to any predefined class of the FoD can appear in real classification applications. The classifiers learnt using different attributes information can provide complementary knowledge which is very useful for making the classification but they are usually based on different FoDs. In order to clearly identify the specific class of the abnormal objects, we propose a new method for combination of classifiers working with incomplete frames of discernment,named CCIF for short.This is a progressive detection method that select and add the detected abnormal objects to the training data set.Because one pattern can be considered as an abnormal object by one classifier and be committed to a specific class by another one,a weighted evidence combination method is proposed to fuse the classification results of multiple classifiers.This new method offers the advantage to make a refined classification of abnormal objects, and to improve the classification accuracy thanks to the complementarity of the classifiers. Some experimental results are given to validate the effectiveness of the proposed method using real data sets.

1. Introduction

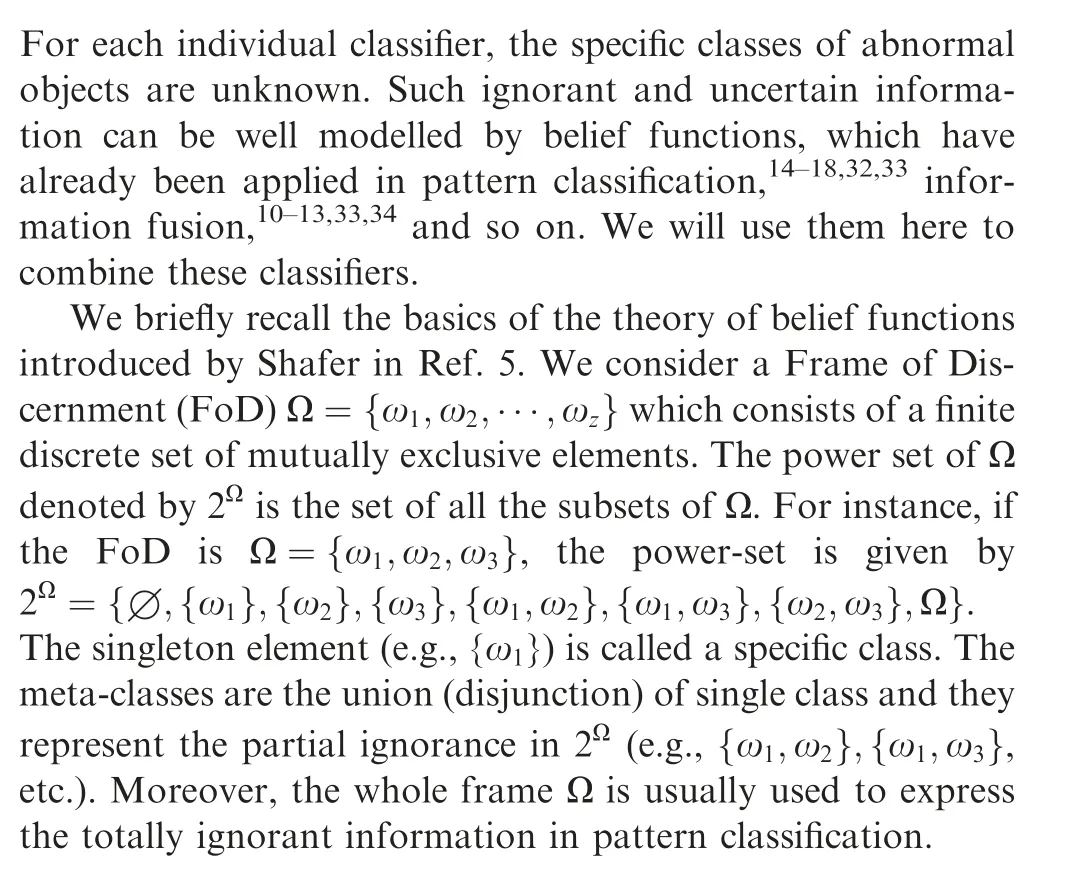

In object recognition, the classifiers learnt with different attributes usually provide diverse information for query objects.The combination of classifiers must take advantage of the complementary information of different classifiers to improve the classification accuracy. According to the type of the individual classifier output, the fusion methods can be generally divided into three groups:class rankings,crisp labels,and soft outputs. The class set reduction or reordering methods are usually applied to merge the class rankings. The crisp labels are often combined by the voting methods. The soft output(e.g.,fuzzy membership,probability,and belief functions)provides much useful classification information,and the Bayesian rule,fuzzy integrals,or Belief Functions (BFs)can be employed to deal with such soft output. Particularly, belief functions theory also called evidence theory or Dempster-Shafer Theory(DST)provides an appealing theoretical framework to represent and combine uncertain information,and it can also well characterize the (partial) ignorance thanks to the use of set of classes.The belief functions have been successfully applied in many fields, e.g., information fusion,pattern classification,pattern clustering,parameter estimationand so on.

Some classification fusion methods based on BF have been developedto deal with the uncertainty in pattern recognition. In Ref. 24, three fusion techniques including Sugeno’s fuzzy integral, the possibility theory, and BF are used to extract the information for the Automatic Target Recognition(ATR),and the experiments show that the best performance is usually achieved by the BF-based approach. Because multiple classifiers can have different reliabilities on the given data set,a new weighted classifier combination method based on BF is proposed in Ref. 12 to improve the classification accuracy.To deal with complex pattern recognition and to improve the classification performance, a cautious discounting rule based on the reliability evaluation is also developed with BF in Ref. 11.

The traditional classifier fusion methods for combining multiple classifiers based on belief functions require to work with a common and complete (closed) Frame of Discernment(FoD)on which the belief functions are defined before making their combination.This theoretical requirement is however difficult to satisfy in practice because some abnormal (or unknown) objects that do not belong to any pre-defined class of the training data set can appear in real classification applications.

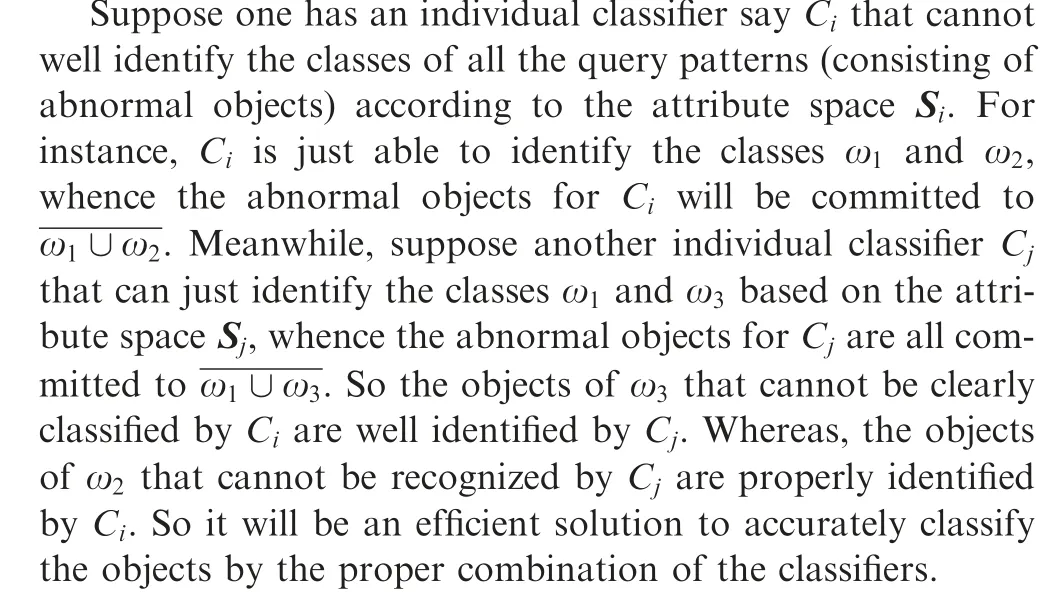

In object recognition, it is a challenging problem to detect and identify abnormal objects,since the class of abnormal objects is out of the FoD given a priori, and the classes of abnormal objects are usually unavailable in training process.There exists some methodsto solve such issue.An interesting‘‘1-vs-set machine”presented in Ref.25 browses the decision space from the marginal distances of a 1-class or binary Support Vector Machine (SVM) with a linear kernel. In Ref.29, a novel method models the decision space of a trained SVM classifier for decreasing false matches from unknown classes, and it takes advantage of a few known cameras to adjust the decision boundaries. Nevertheless, the aforementioned methods only detect abnormal objects without separating them into the corresponding classes. Compared with any individual classifier, the fusion of multiple classifiers should not only improve the classification accuracy,but it should also provide more specific classification results. Multiple classifiers generally provide some complementary information for classification, and a judicious combination of these classifiers with different FoDs is able to clearly recognize the specific class of the abnormal object (with respect to the individual classifier).

In this paper,we present a new method to combine multiple classifiers with incomplete FoDs based on belief functions.Our contributions are summarized as follows:

(1) For the individual classifier, the significantly abnormal object is detected at first based on local K nearest neighbors. Because the different classes may have different dispersion degrees, we seek the KNNs of object in each class instead of the whole training data set.After that,if the mean value of the distances between object and its KNNs is too big with respect to the given threshold,this object will be considered as the significantly abnormal object.

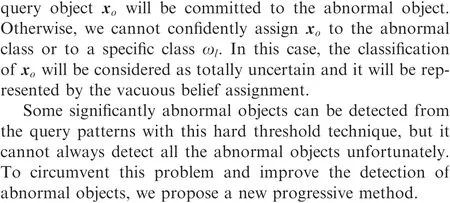

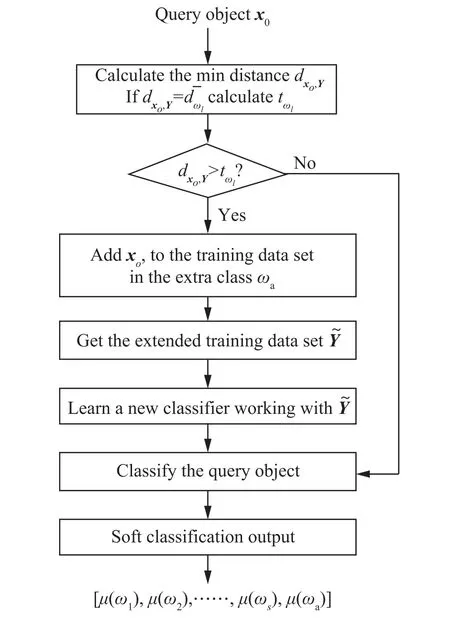

(2) There may still exist some abnormal objects that cannot be well detected. Then a progressive classification method is developed to improve the accuracy of each individual classifier. The significantly abnormal objects are added into the training data set as the abnormal class to train a new classifier. The other query patterns will be classified by this new classifier. By doing this,we can efficiently improve the detection accuracy of the abnormal object.

(3) Once the abnormal object detection is done, the classification results from different classifiers are combined by Dempster-Shafer (DS) rule to further refine the class of the abnormal objects. Because the quality(reliability) of each classifier is different, the classification results are discounted with different weights before combination. This new method can be used to efficiently identify the specific class of the abnormal object, and improve the classification accuracy as well.

This paper is organized as follows. In Section 2 we present in details the Combination of Classifiers with Incomplete Frames of discernment (CCIF) method. The experimental results of CCIF and its performance are given and discussed in Section 3, with concluding remarks and perspectives in Section 4.

2. A new method for combination of classifiers with incomplete frames of discernment

In the traditional classifier fusion problem based on belief functions, one needs to work with common FoD. Moreover,the FoD is often considered as complete (i.e., a set of exhaustive and mutually exclusive classes).It means that the potential class label of the object (query pattern) must be included in FoD of each classifier. So it requires that there must exist sufficient prior labeled (training) patterns for each class to learn the classifier. Such FoD can be regarded as a closed set. Most pattern classification techniques focus on solving closed-set problems. However, some classes of query patterns (objects)may be not included in the training data set in many applications. In such case, the given FoD determined by the labeled training data set is considered as incomplete. For one object,if its true class is not included in FoD of a classifier, it will be considered as an abnormal object, and it is impossible to clearly identify the specific class of the abnormal object using this individual classifier. If we have multiple classifiers learnt in different attribute spaces, these classifiers generally provide useful complementary knowledge.For instance,the samples of class ω(or ω) could be unavailable in the attribute space S(or S) corresponding to classifier C(or C), but we may have some labeled samples of these classes ω(or ω)in the attribute space S(or S)with classifier C(or C).So,one wants to identify both classes of ωand ωby combining the classifiers Cand Cin an appropriate way.A new method for Combination of Classifiers with Incomplete Frames of discernment (CCIF)is presented in this section to achieve this goal. It includes two main steps: (A) the progressive detection of abnormal objects with each individual classifier,and(B)the refined classification of abnormal objects by the combination of the classifiers.

2.1. Progressive detection of abnormal object

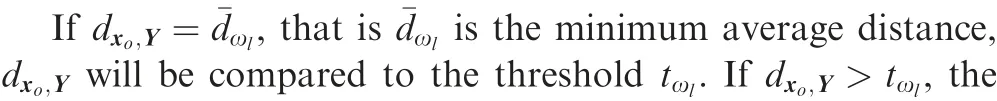

The basic principle of the progressive detection method for abnormal object is introduced here. If an object, say x,belongs to a class of the training data set, xis usually close to the training patterns of the same class. However, if xis an abnormal object, it will far away from all the training patterns. Thus, we can detect the abnormal objects thanks to an unsupervised method based on the distance from the object to the training samples. When the distance measure is beyond a given threshold, the object is likely committed to the abnormal class. Such method based on a hard thresholding technique cannot always give a sufficient good detection performance, and that is why we propose a new progressive detection method for achieving good performance.Once some significantly abnormal objects are detected (those quite far from the training samples), one can add them to the training data set labeled as the abnormal class. Then we can learn a new classifier using the extended training data set, and the abnormal class will be included in the FoD of this new classifier. The significant features of abnormal objects can be captured by this new classifier. The other query patterns will be classified by this new classifier. By combining the preliminary detection of unsupervised significant abnormal objects with the refined method of supervised object classification,the accuracy of object classification can be significantly improved.

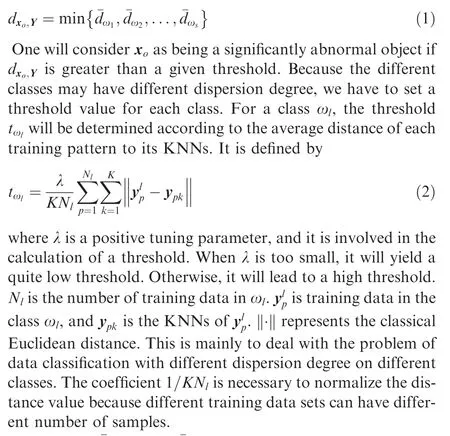

When the object is closest to one class of training data, it means the object most likely belongs to this class compared with the other classes. If the distance between the object and this closest class is big, it indicates that the object probably belongs to the abnormal class. Hence, the minimum value of these average distances is used as the distance measure of this object to the whole training data set, that is

The general principle of abnormal object detection method(i.e. the Step 1 of CCIF) for a classifier is illustrated by the flowchart of Fig. 1 for convenience.

Fig. 1 Flow chart of Step 1 of CCIF for a classifier.

In real applications, these abnormal objects detected by an individual classifier may truly belong to several classes rather than a single class. However, it is quite difficult to accurately identify the specific class of each abnormal object. Because the classifiers learnt using different attributes,they can provide important complementary knowledge. The refined classification of these abnormal objects will be achieved thanks to the combination of the multiple classifiers.

2.2.Weighted combination of classifiers based on belief functions

DS rule provides an efficient tool to characterize and combine the (partial) ignorant information using belief functions.Because the individual classifiers are learnt by different attribute spaces, they can provide some complementary information for classification. DS rule can be used for the combination of multiple classifiers to improve classification accuracy, and can also identify the specific class of abnormal objects with the conjunctive combining operation. If one object is confidently classified into a particular class by a classifier, but it is assigned to the abnormal class by other classifiers, then the specific class of this object can be identified after the combination.

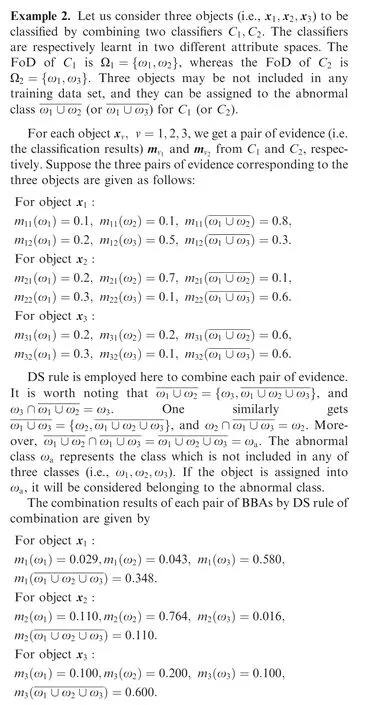

The following example shows how to combine multiple classifiers based on belief functions in the the Step 2 of CCIF method.

In Example 2, the object xis considered very likely as being an abnormal object by C, whereas it is classified to ωby Cwith the higher mass of belief. According to the combination results,we can see that xwill be most likely assigned to ω. The object xclassified to ωby Cis considered as an abnormal object by C, and it will be committed to ωby the DS combination result. This simple example shows that the specific classes of abnormal objects with respect to individual classifiers can be clearly identified by the belief-based combination strategy. For the object x, it is considered as most likely being abnormal object by both classifiers Cand C.Because we cannot obtain the knowledge about the specific class of the object using any classifier, xhas to be put in the abnormal class ω=ω∪ω∪ωby the fusion of the results obtained by Cand C. This means xdoes not belong to any pre-defined class.It shows that our method is able to deal with such abnormal objects in this framework.

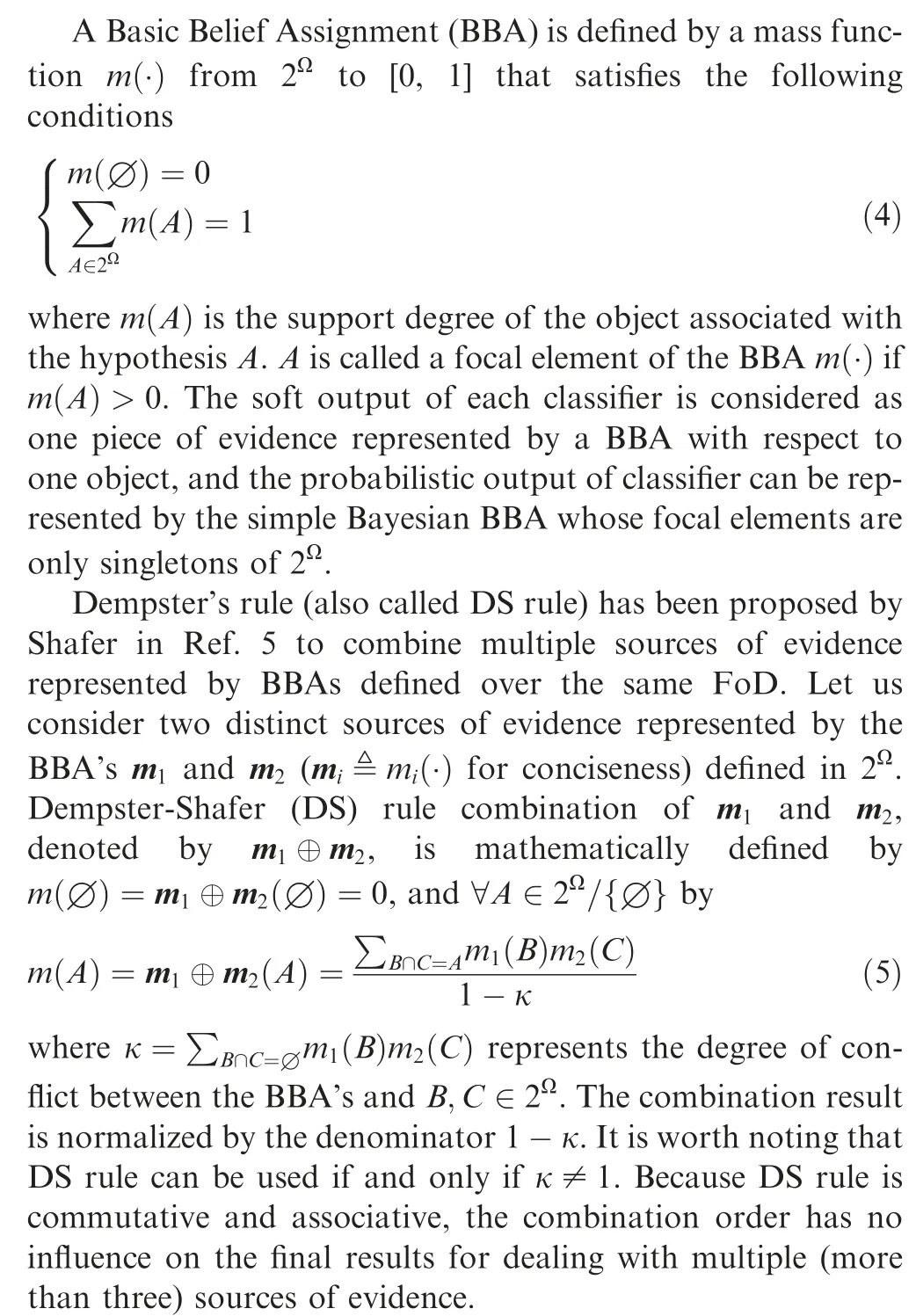

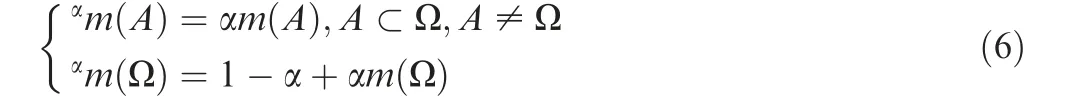

The individual classifiers are learnt with different attributes,and they usually have different reliabilities/weights for classification. If the reliability of classifier is high, the weight (discount factor) is usually big and vice versa. The classical discounting operationis employed here to discount each BBA (i.e. classifier output) with the corresponding weight.Once done,the discounted BBAs can be combined by DS rule for making final class decision.The beliefs of each class will be discounted to the total ignorance depending on the weight,and this aims to control the influence of each classifier in the combination. The discounted BBA with weight α ∈[0,1 ] is obtained as follows.

If the source of evidence is completely reliable, one has α=1 andm(A )=m(A ). If the source of evidence is not reliable at all, all the beliefs will be discounted to the total ignorance asm(Ω )=1 with α=0.m(Ω )=1 is called vacuous BBA denoted by mand it plays a neutral role for DS rule of combination because m⊕m=m.

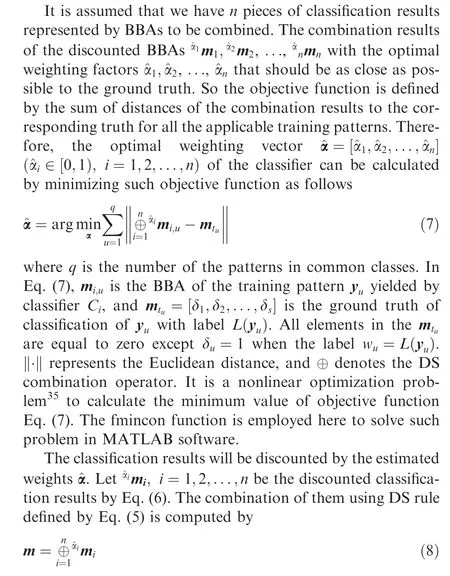

It is important to properly determine the weighting (i.e.,discounting) factors of classifiers for achieving as high as possible the classification accuracy. We will attempt to find the suitable weights using the training data with true labels.There usually exist several common classes among the FoDs of different classifiers to be combined.The labeled training patterns of these common classes will be employed to estimate the optimal weighting factors.

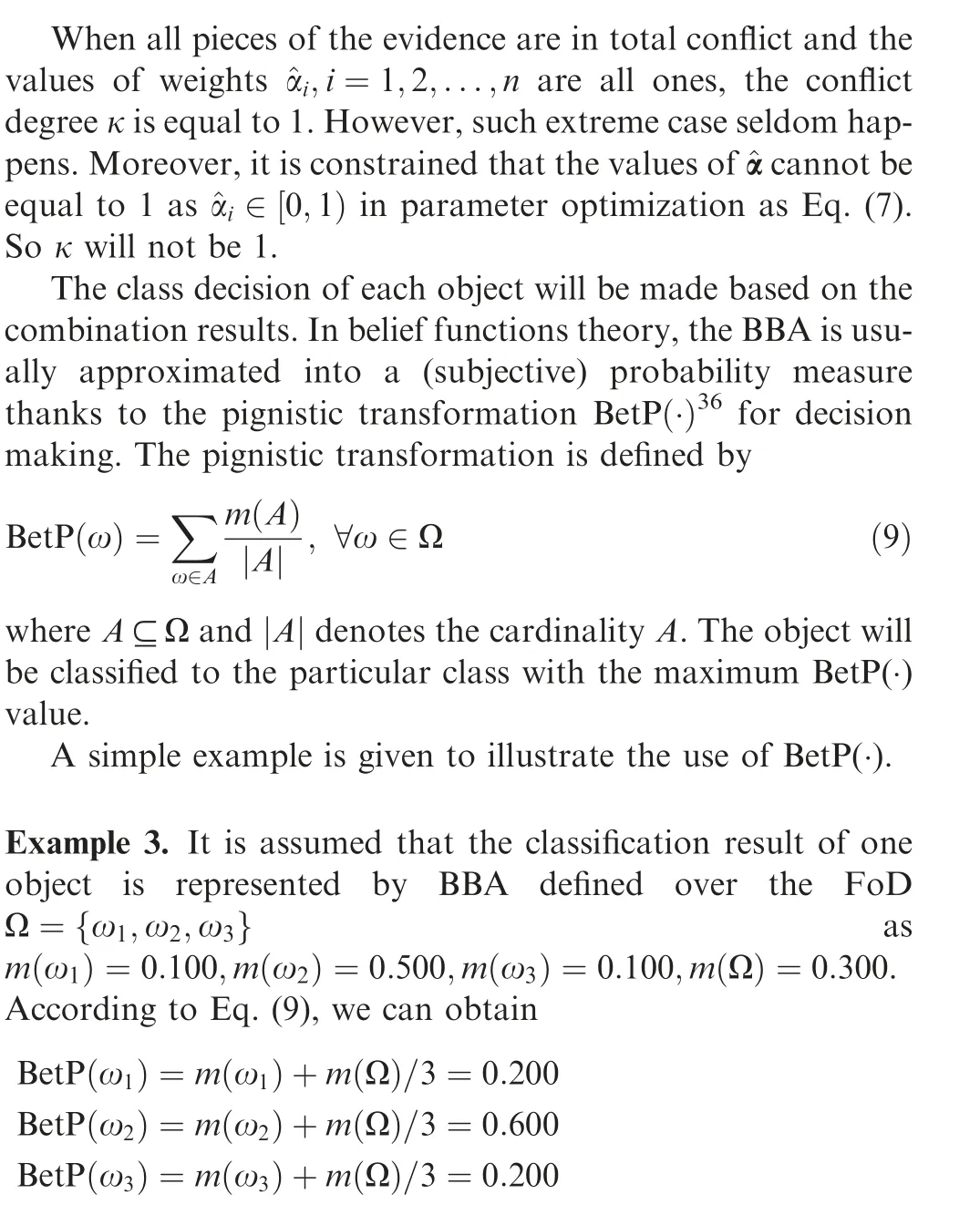

Thus,the object is generally considered most likely belongs to ωwith the maximum BetP(·) value.

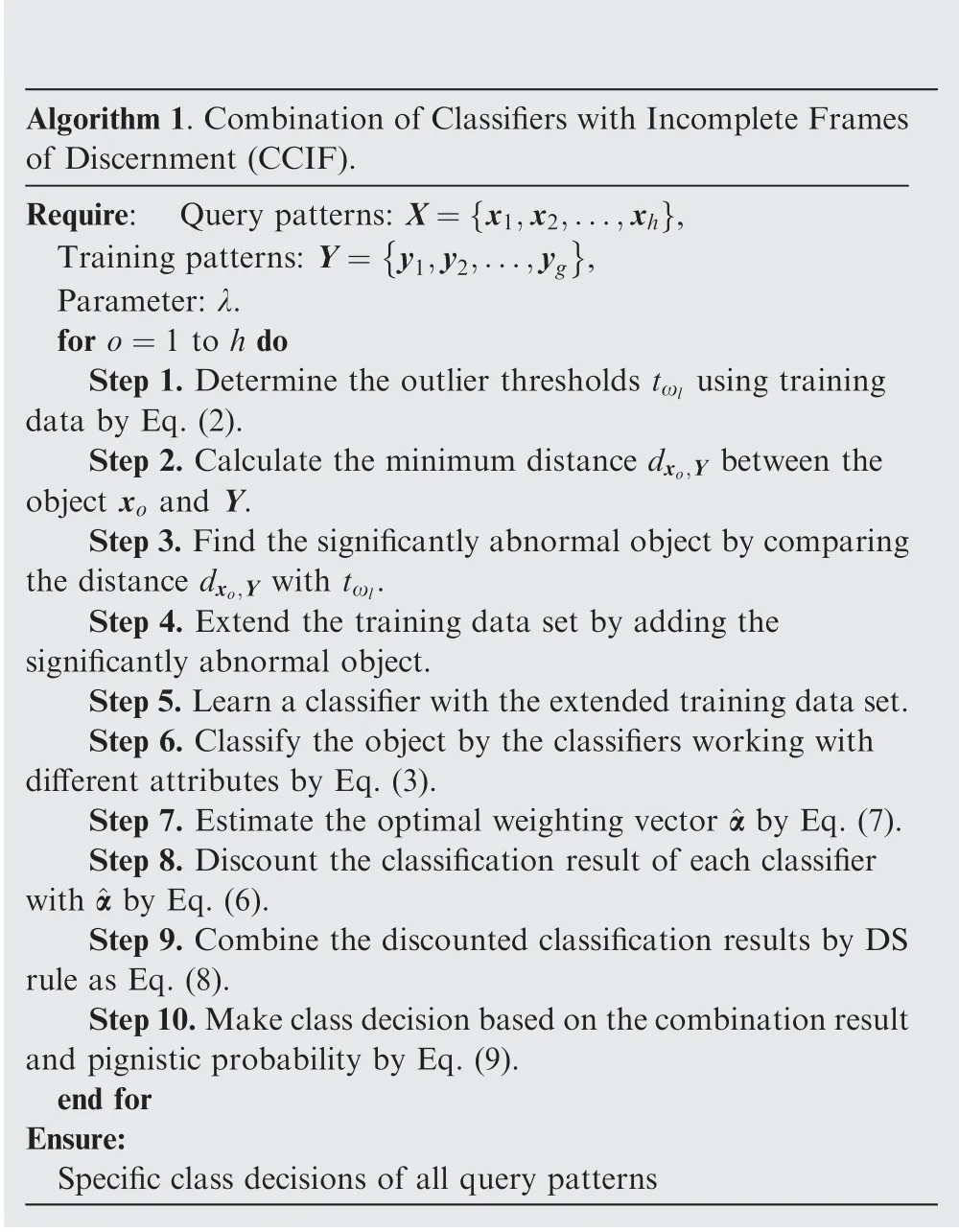

The pseudo code of CCIF is outlined in Algorithm 1 for convenience.

Algorithm 1.Combination of Classifiers with Incomplete Frames of Discernment (CCIF).Require: Query patterns: X= x1,x2,...,xh },Training patterns: Y= y1,y2,...,yg■{■,Parameter: λ.for o=1 to h do Step 1. Determine the outlier thresholds tωl using training data by Eq. (2).Step 2. Calculate the minimum distance dxo,Y between the object xo and Y.Step 3.Find the significantly abnormal object by comparing the distance dxo,Y with tωl.Step 4. Extend the training data set by adding the significantly abnormal object.Step 5.Learn a classifier with the extended training data set.Step 6. Classify the object by the classifiers working with different attributes by Eq. (3).Step 7. Estimate the optimal weighting vector ^α by Eq. (7).Step 8. Discount the classification result of each classifier with ^α by Eq. (6).Step 9. Combine the discounted classification results by DS rule as Eq. (8).Step 10.Make class decision based on the combination result and pignistic probability by Eq. (9).end for Ensure:Specific class decisions of all query patterns

Discussions on parameter tuning: The parameter λ involved in Eq. (2) is used to tune the value of outlier thresholds and controls the number of objects selected as the significantly abnormal objects. It will yield quite low thresholds when λ is too small, and some patterns may be wrongly considered as abnormal objects. This is harmful for learning the classifier using the extended training data set. When the value of λ is big, it will lead to high thresholds. Sufficient significantly abnormal objects cannot be found if the thresholds are too big, and we cannot obtain a good classifier. So the tuning of this parameter depends on the actual applications. Of course,according to the results of experimental, the suitable value λ can be selected. The cross validation technique can be employed to estimate the value of λ. The appropriate value of λ should be able to produce good detection performance.In experiment section, we evaluate the influence of λ based on some benchmark data sets.

3. Experimental applications

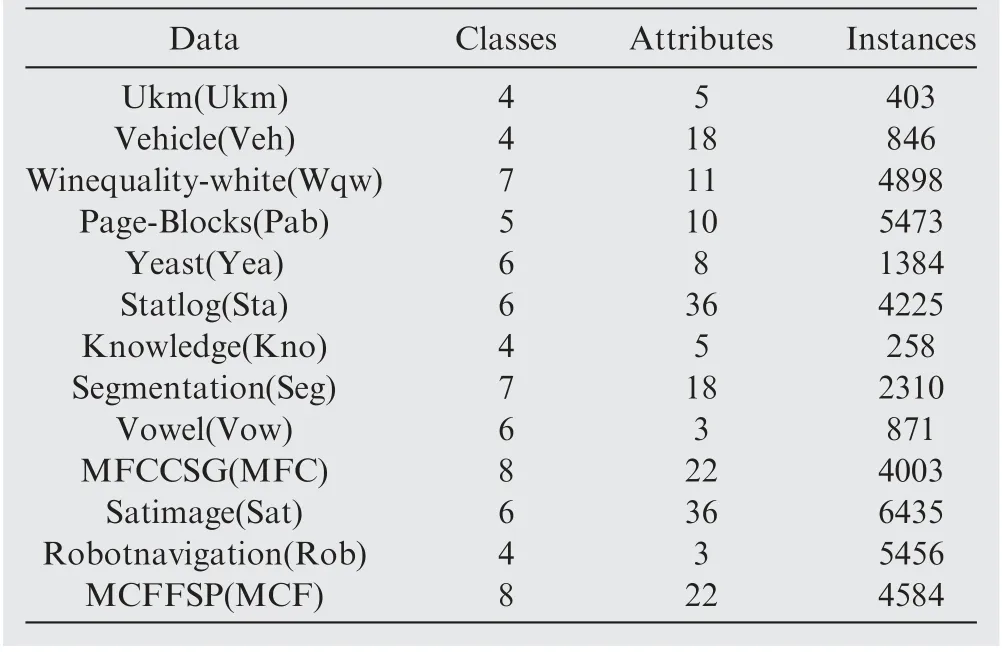

In this section, thirteen real data sets from UCI repository(http://archive.ics.uci.edu/ml) are used to evaluate the performance of the proposed method with respect to other related methods. The basic knowledge of the thirteen real data sets are shown in Table 1. The base classifiers can be chosen according to the actual applications.In this work,several well known classifiers, i.e. Random Forest (RF),Decision Tree Classifier (DTC)and Support Vector Machine (SVM),were selected,since they are often used in pattern classification.MATLAB software is employed for programming on computer with Intel(R) Core(TM) i7-9700 CPU @3.00 GHz.

For detecting abnormal objects, our proposed Progressive Detection Method (PDM) will be compared with Hard Thresholding Method (HTM) and One-class SVM (OCSVM).In HTM,the threshold for each class is set the same as the proposed method. When the minimum distance dbetween the object xand the whole training data set Y is greater than the corresponding threshold t,the object xwill be considered as the abnormal object.The object xwill be classified to the class ωif dis smaller than t.In OCSVM,it computes a binary function which is supposed to capture regions in input space where the probability density locations, i.e. a function such that most of the data will be located in the region where thefunction is nonzero.In OCSVM, the classes that have appeared in the FoD of the training data set are regarded as the positive class, and the abnormal objects are classified into the negative class. The specific class of objects that have prior information in the training data set can be detected in a supervised manner.The Majority Voting(MV)method is employed to combine the classification results with HTM and OCSVM.If the object is considered most likely belongs to a particular class say ωwith one classifier,then ωwill receive voting score 1 by this classifier.When the object is classified to the negative class (i.e. composed by a set of classes) like ωwith maximum probability, then all the classes (including abnormal class)except ωwill receive voting score 1. The object will be finally classified according to the voting results.

Table 1 Basic information of the used data sets.

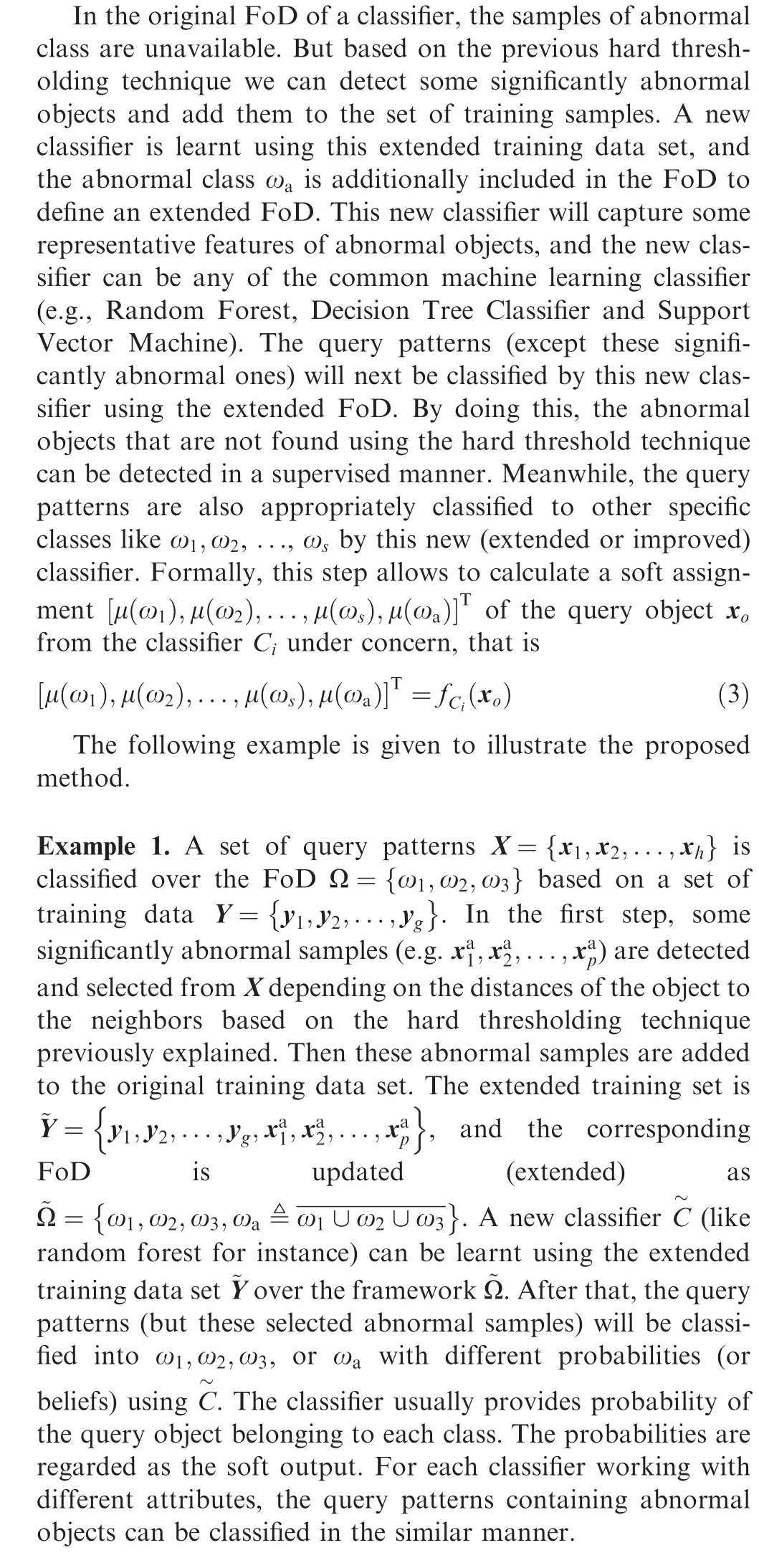

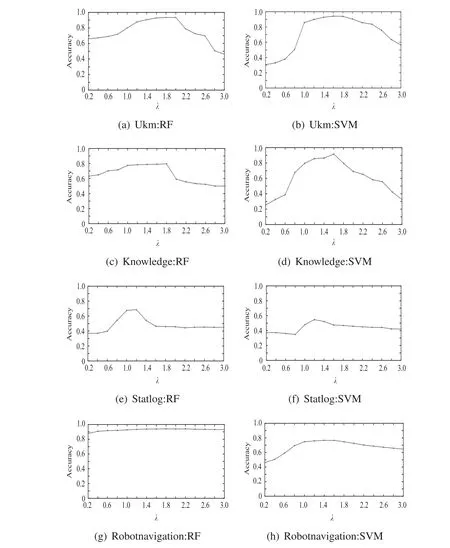

The significant abnormal objects are detected based on K nearest neighbors. The detection performance is tested with the K ranging from 2 to 10.For the tuning parameter λ,it controls the outlier thresholds, and its influence on the classification performance is evaluated. The classification accuracy based on four benchmark data sets and two classifiers is shown in Fig. 2. One can see that the classification accuracy is improved with the proper value of λ.This is a reasonable result because a big value of λ will lead to a small number of significantly objects, and we cannot obtain a good classifier.Whereas a small value of λ will yield quite low thresholds,and some patterns may be wrongly considered as abnormal objects. The reliability of a classifier learned by the extended training data set containing the wrongly labeled abnormal objects is low. One can find that our method generally produces good performances with λ ∈[1,1.5]. We have set λ=1.2 in the following experiments based on our experiences.

The k-fold cross validation is often used to evaluate the classification performance, but k remains a free parameter.The simplest 2-fold cross validation is used here, since the training and test sets are large,and each pattern can be respectively used for training and testing on each fold.For each fold,our testing program is randomly run ten times. In this work,the attributes of the data are randomly divided into several different subsets, and the basic classifiers (RF, DTC and SVM)are respectively learnt using different subsets of attributes.For example, a data set containing seven attributes is randomly divided into two subsets: one with three attributes and the other with four attributes. Then two basic classifiers are respectively learnt using these two subsets of attributes.

In the thirteen data sets tested, the abnormal objects come from one class for the four or five-class data sets, i.e., Ukm,Veh, Pab. The abnormal objects are picked from two classes in the six or seven-class data set, i.e., Yea, Seg. The abnormal objects are taken from three classes in the eight-class data set,i.e., MCF. When the abnormal objects are from one or two classes, the specific classes of all the abnormal objects will be identified via the fusion procedure. Two specific classes and one outlier-class will be identified when the abnormal object contains three classes.

Fig. 2 Accuracy with respect to λ value on benchmark data set.

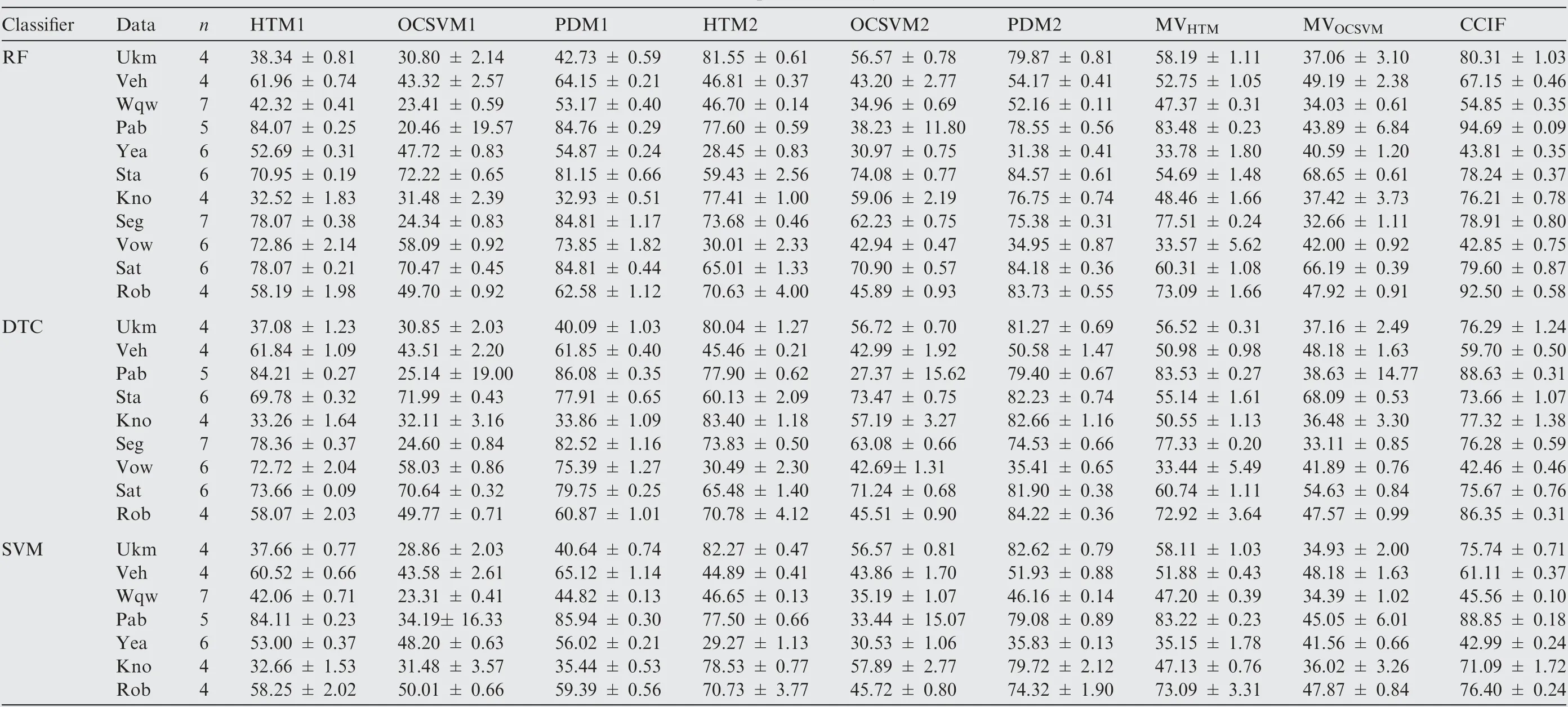

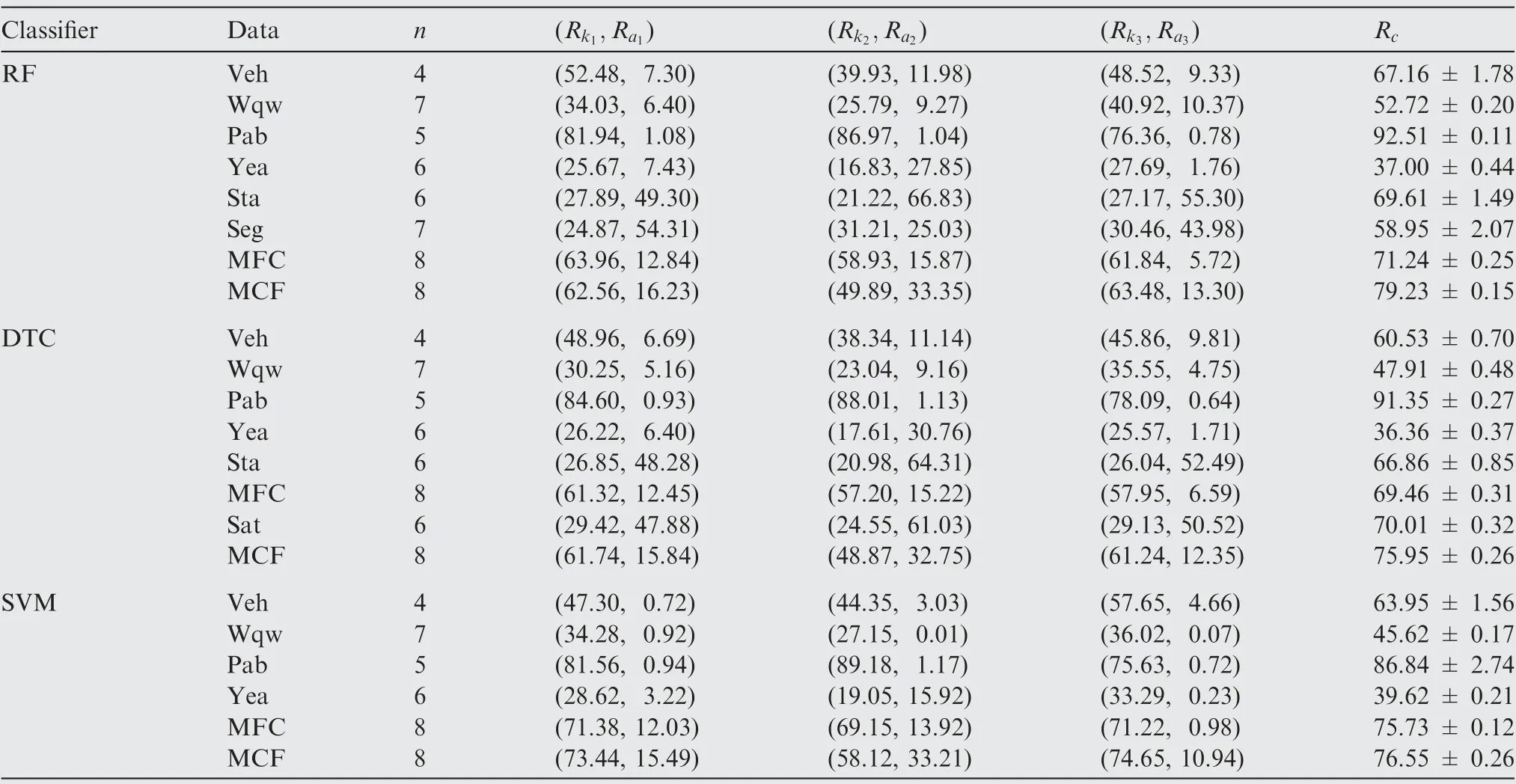

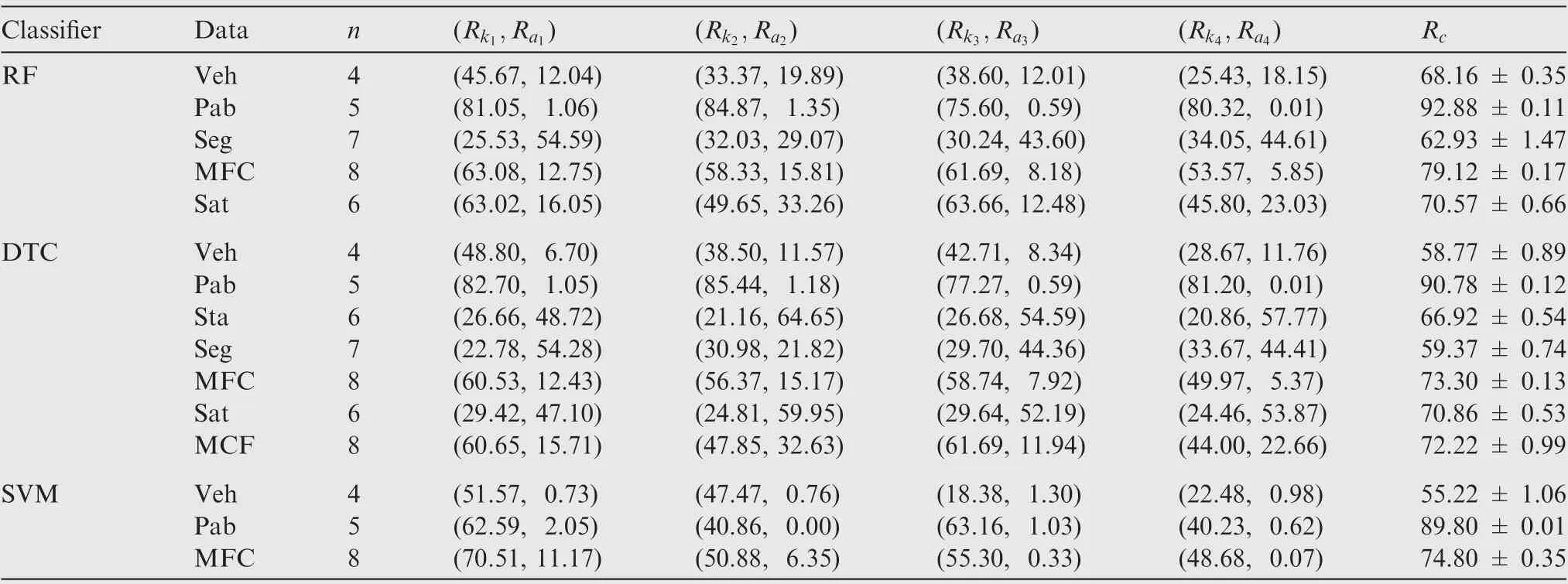

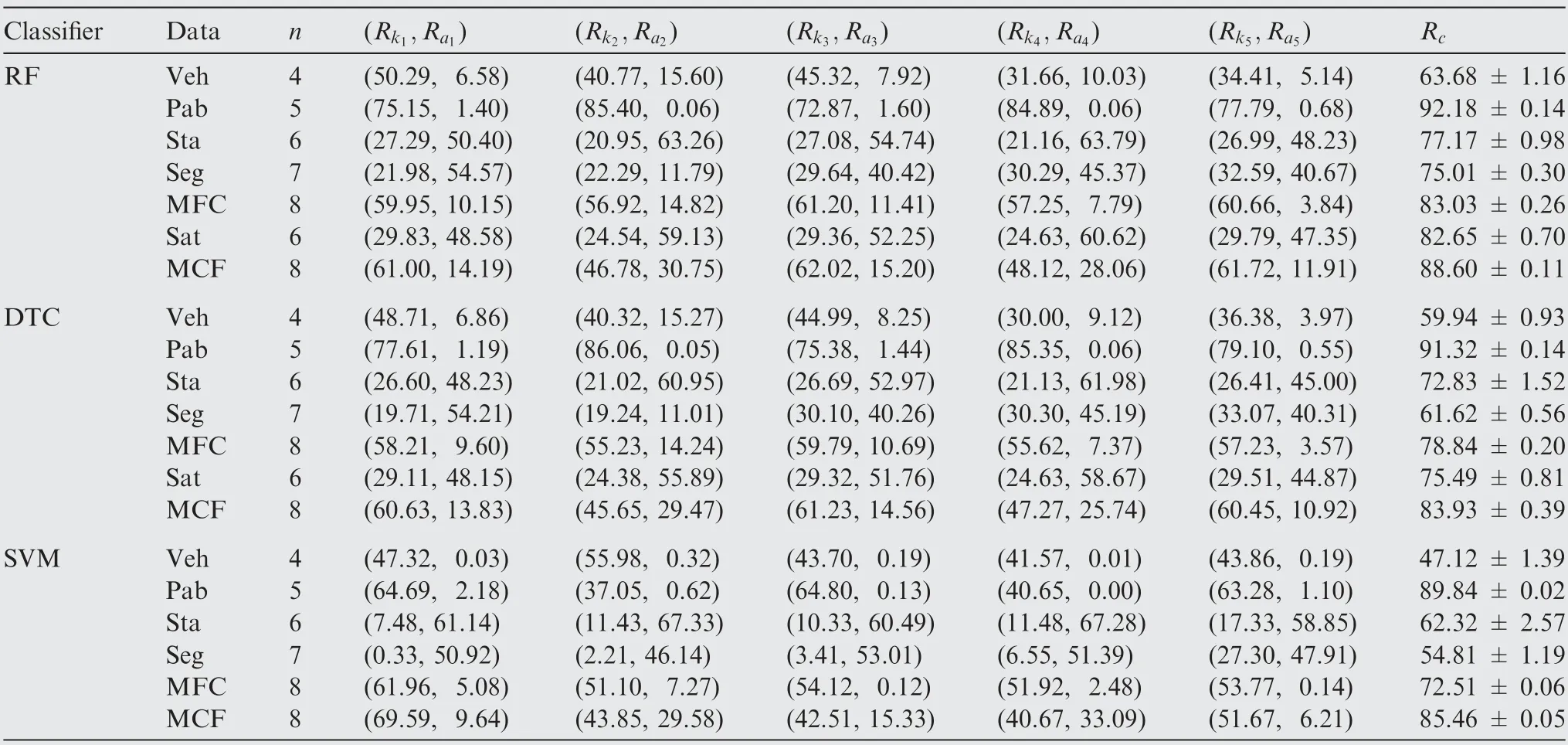

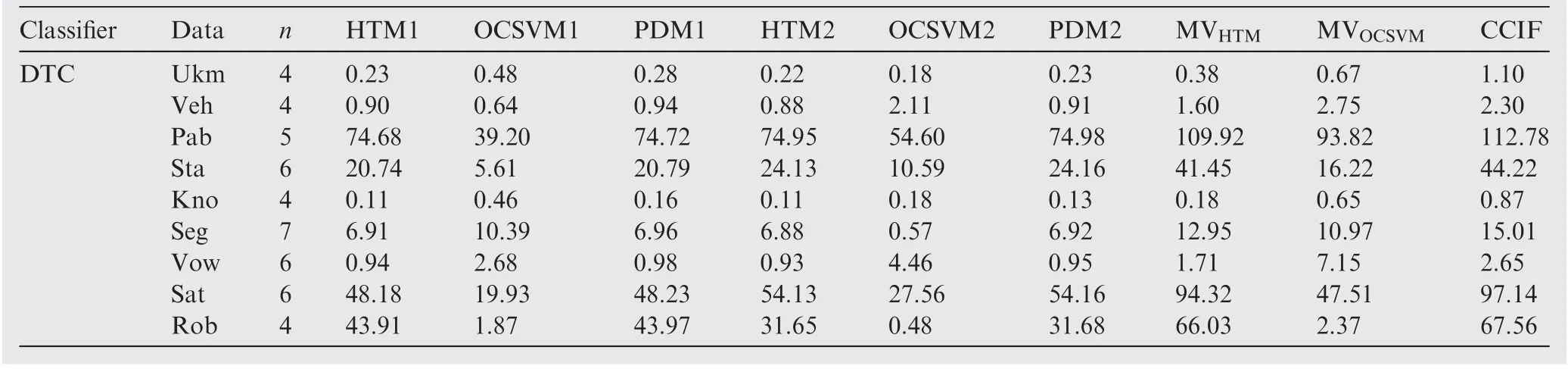

It is considered that I(I=2,3,4,5)classifiers working with the incomplete FoD will be combined for pattern classification.The first step of CCIF is considered as Progressive Detection Method (PDM) for dealing with abnormal objects with individual classifier.The abnormal objects can be also detected by HTM and OCSVM.PDM will be compared with HTM and OCSVM here.The MV method is used for the combination of multiple classifiers, and it will be compared with CCIF in this experiment. MVand MVrepresent the MV method is employed to combine the classification results with HTM and OCSVM, respectively. The classification accuracy of I(I=2,3,4,5) classifiers are shown in the Tables 2-5, where the n value is the number of classes contained in the data set. In the Table 2, the classification results of all methods are presented in detail. The results of each classifier with incomplete FoD and weighted combination are shown in the Tables 3-5. In the Table 2, the general classification accuracy defined by R=N/Tis shown for each classifier. Nis the number of object being correctly classified to the known classes and abnormal class, and Tis the total number of object to classify. In the Tables 3-5, the refined classification accuracy of the objects are shown for each classifier. The classification accuracy of the objects belonging to the known classes is denoted by R(i being the index of classifier), whereas the accuracy of the abnormal objects is denoted by R. The classification accuracy of the combination results by CCIF is denoted by R. It is defined by R=N/T, and R=N/T,and R=N/T.Nis the number of the objects correctly classified to the known classes by classifier i, and Nis the number of objects correctly committed to the abnormal class.Nis the number of objects correctly classified based on the combination results.The mean value of classification accuracy with variance is calculated based on the ten times random tests with K ranging from 2 to 10.Because OCSVM method isnot affected by the value of K,the variance is computed by ten random tests.

Table 2 Classification results of different methods based on two classifiers with incomplete frames (%).

Table 3 Classification results of CCIF method based on three classifiers with incomplete frames (%).

Table 4 Classification results of CCIF method based on four classifiers with incomplete frames (%).

The results in the Tables 2-5 show that the proposed CCIF generally produces higher accuracy than other methods in most cases. In HTM, an object is detected by only comparing the minimum distance with the threshold, and if the distance measure of one object is smaller than the given threshold,the object will be directly committed to a specific class. This cannot well handle with the uncertainty of classification. Our new method produces higher classification accuracy than OCSVM. This is because the significant abnormal objects are selected and added to the training data set for improving the classification accuracy.In the first step of CCIF as PDM,some significantly abnormal objects are automatically added to the training data set. We use these abnormal objects to learn a new classifier, which captures the significant features of the abnormal objects. Then the objects with small distance measure will be further (progressively) classified by the new classifier.So CCIF can efficiently improve the detection accuracy of the abnormal objects in progressive detection manner comparing with HTM.

In the class identification step, the specific classes of the abnormal objects detected by the individual classifiers can be clearly identified by the weighted DS combination in CCIF.In MCF data set, the abnormal objects are taken from three classes, and one of the three classes is not included in anyFoD of the individual classifiers.So the query patterns that are classified as abnormal objects by all the individual classifiers will be still considered as abnormal object after the fusion. If one object is assigned to abnormal class by several classifiers,but it is confidently classified into a particular class by another classifier, then the specific class of this object can be identified by the fusion. We also find that the classification accuracy of all the query patterns is generally higher than the individual classifiers. Moreover, the classification accuracy of CCIF is also higher than that of MV in the Table 2. It indicates that the proposed weighted DS combination rule can take full advantages of the complementary knowledge among the different classifiers, and the optimization of classifier weight in CCIF is helpful for improving the accuracy. In the Tables 3-5, one sees that the classification accuracy of CCIF is significantly higher than the accuracy of each individual classifier with respect to the known classes. It means that the patterns considered as abnormal objects by individual classifiers can be correctly classified to the specific classes by the proposed CCIF method.So CCIF generally produces much more refined(specific) classification results via the proper combination of classifiers. These experiments demonstrate the interest and effectiveness of the proposed CCIF method.

Table 5 Classification results of CCIF method based on five classifiers with incomplete frames (%).

Table 6 Program running time of different methods with two classifiers based on DTC (second).

For evaluating the computational cost of the CCIF method, the program running time of different methods with decision tree base classifier in the experiment are shown in Table 6. One can see that the computational burden of CCIF method is heavier than other methods. This is mainly because it is time consuming for seeking the K-nearest neighbor of the objects and for estimating the weighting factors of different classifiers. Nevertheless, our method can well detect and identify abnormal objects, and it generally produces higher classification accuracy than other methods. So the heavy computational burden is the necessary price we have to pay for the high classification accuracy.

4. Conclusions

We have proposed a new method for Combining Classifiers working with Incomplete Frameworks (CCIF) based on belief functions theory in order to well identify the abnormal objects.The method mainly consists of two steps: (A) the progressive detection of abnormal objects with individual classifier, and(B) weighted combination of classifiers to identify the specific classes of abnormal objects.Because it’s hard to directly detect all abnormal objects, a progressive detection method is developed. We firstly find some significantly abnormal objects relying on the distance of the object to the training data. These selected abnormal objects are added to the original training data set.We use such extended training data set to learn a classifier,which can capture some representative features of abnormal objects.Of course,a special abnormal class is added to the corresponding FoD of classifier. The query patterns expect these selected abnormal objects are classified by this classifier.By doing this, we can efficiently detect the abnormal objects via a supervised way, and the classification accuracy of query patterns can be also improved. The object can be treated by other individual classifiers working with different attribute space in the similar manner.However,it is hard to well identify the specific class of all the abnormal objects by any individual classifier. There generally exists important complementary knowledge among classifiers. A weighted evidential combination method is introduced to integrate these classifiers for refined classification of abnormal objects. This method can well characterize the uncertain and partially ignorant information using belief functions. The classifiers whose classification abilities vary are considered with different weights.So the classification result from each classifier is properly discounted by the corresponding weight before entering the DS combination procedure. The optimal weights are estimated by minimizing an error criteria. According to the combination result, we can clearly identify the specific class of the object that may be considered as abnormal object by individual classifier.Moreover, the classification accuracy can be also improved thanks to the complementarity of classifiers. In the experiments, thirteen UCI datasets are used to test the effectiveness of the proposed method with three base classifiers, i.e. RF,DTC,SVM.The experimental results show that our proposed method can well identify the specific class of the abnormal objects and also efficiently improve the classification accuracy.In our future work,we will attempt to further improve the performances of the proposed method using other evidential combination rules.

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

This work was partially supported by National Natural Science Foundation of China (Nos. U20B2067, 61790552,61790554), Shaanxi Science Fund for Distinguished Young Scholars, China (No. 2018JC-006).