An Effective Feature Generation and Selection Approach for Lymph Disease Recognition

Sunil Kr. Jha and Zulfiqar Ahmad

1School of Computer and Software, Nanjing University of Information Science and Technology, Nanjing, 210044, China

2Institute of Hydrobiology, Chinese Academy of Sciences, Wuhan, 430072, China

ABSTRACT Healthcare data mining plays an important role in disease diagnosis and recognition. There remains considerable scope to improve the performance of machine learning-based classification methods in healthcare data analysis. Selecting a substantial subset of features is one feasible way to improve the recognition results of classification methods in disease diagnosis prediction. In the present study, a novel combined approach of feature generation using latent semantic analysis (LSA) and selection using ranker search (RAS) is proposed to improve the performance of classification methods in lymph disease diagnosis prediction. The performance of the proposed combined approach (LSA-RAS) for feature generation and selection is validated using three function-based and two tree-based classification methods. The LSA-RAS selected features are compared with the original attributes and with the subsets of attributes and features chosen by nine other attribute and feature selection approaches on a widely used, open-access benchmark lymph disease dataset. The LSA-RAS selected features significantly improve the recognition accuracy of the classification methods in the diagnosis prediction of lymph disease. The tree-based classification methods achieve better recognition accuracy than the function-based classification methods. The best performance (recognition accuracy of 93.92%) is achieved by the logistic model tree (LMT) classification method using the feature subset generated by the proposed combined approach (LSA-RAS).

KEYWORDS Disease data mining; feature selection; classification; lymph; diagnosis

Nomenclature

1 Introduction

Machine learning approaches play a vital role in the development of computer-aided diagnosis systems [1–3]. A highly efficient machine learning-based soft disease diagnosis system provides an economical, non-invasive, and quick diagnostic facility for the patient. Such a system also eases the effort of physicians in decision-making and in interpreting disease diagnosis results. The lymphatic system supports the immune system, maintains the balance of body fluids, removes waste products, bacteria, and viruses, and supports the absorption of nutrients [4,5]. Any blockage or infection of the tissue in lymph vessels results in conditions such as lymphoma, lymphadenitis, and lymphedema [6]. Imaging techniques are used in the examination of lymph nodes [7,8]. Moreover, machine learning classification approaches can be used to improve the prediction accuracy of the initial status of the lymph node by modeling the measurements of imaging techniques and physical observations.

Generally, the classification techniques of machine learning are the backbone of a soft disease diagnosis system: they recognize the class of a specific disease by analyzing preliminary observations and instrumental measurements [9–11]. The performance of classification methods is affected by the size of the data, the number and nature of the attributes, noise and outliers in the data, and the uneven distribution of instances across attributes [12,13]. Consequently, addressing these issues is crucial for the real-time diagnosis and recognition of diseases by a machine learning-based system. Among these concerns, reducing the dimensionality (number of attributes) of a dataset is one of the significant steps for improving the disease recognition performance of a classification method [13–16]. The dimensionality of a disease dataset can be reduced in two ways: (i) selecting a significant subset of attributes from the original attribute set, and (ii) generating novel features by transforming the original attributes into a new feature space and subsequently selecting a significant subset of features. In the present study, both approaches to dimensionality reduction have been implemented for efficient recognition of lymph disease. Moreover, a novel approach of feature generation and selection has been implemented for the dimensionality reduction of the lymph dataset, and its effect on the recognition performance of five different classification methods has been examined. In addition, other feature generation methods such as principal component analysis (PCA), and attribute selection methods based on the genetic algorithm (GA), greedy forward and backward search, random search, and rank search, have been implemented for performance comparison with the proposed approach.

1.1 Literature Survey

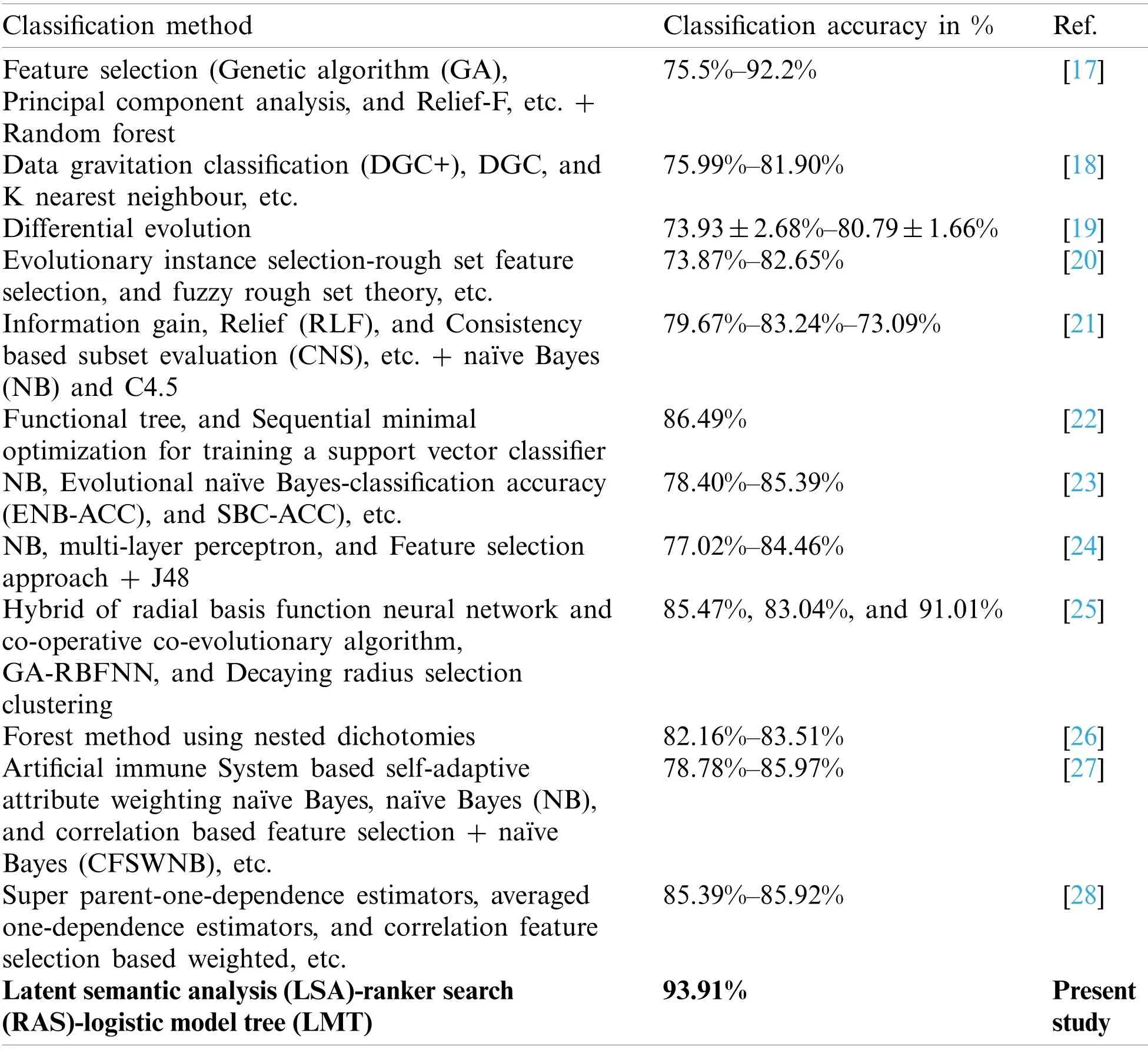

Machine learning classification approaches have been implemented in the recognition of lymph disease in past studies [17–28]. Mainly, single classification methods [19,22], classification methods combined with a feature selection approach [17,20,21,24], and combinations of several classification methods [18,23,25,27,28] have been used in the analysis of the lymph disease dataset. Tab. 1 presents a short review of the classification approaches used in the analysis of the lymph disease dataset. A category-wise analysis of the classification methods shows that tree-based classification methods have been used most often in lymph disease recognition [17,22,28]. The maximum accuracy of 92.2% has been achieved using selected features and a random forest (RF) classifier [17]. Artificial neural network (ANN) classification methods were implemented in some studies [24,25], such as the multi-layer perceptron (MLP) [24] and hybrids of a radial basis function neural network and an evolutionary algorithm [25]. The hybrid ANN method achieved a higher recognition accuracy (85.47%) than the MLP. The Bayesian classifiers [23,24] yield average recognition accuracy. In addition, some recent studies have implemented deep learning approaches in disease diagnosis, such as a convolutional long short-term memory neural network for heartbeat classification [29], atrial fibrillation detection using an adaptive residual network [30], and arrhythmia classification using fully connected neural networks [31].

Table 1: A review of previous approaches in lymph disease classification

1.2 Motivation and Contribution of Present Study

The literature survey shows that selected features improve the recognition performance of classification methods. Selecting an optimal set of features that yields the maximum lymph disease recognition accuracy remains an open challenge. With this motivation, an effective approach of feature generation (latent semantic analysis (LSA)) and selection (ranker search (RAS)) is proposed, which results in the maximum lymph disease recognition accuracy of the classification methods. The main contributions of the present study include the following:

• An efficient approach of dimensionality reduction using the combination of feature generation and selection approaches.

• An effective recognition approach of lymph disease using the combination of an optimal subset of selected features.

• Comprehensive performance comparison of the proposed feature generation and selection method with other methods of attribute selection.

• Performance validation of the proposed approach using function-based and tree-based classification methods.

• Comparison of the maximum recognition accuracy of the classification methods, using the feature subset selected with the proposed approach, with the methods in the reviewed literature.

Section 2 describes the lymph disease dataset, and Section 3 presents the proposed approach. The analysis results are presented in Section 4 and discussed in Section 5, and Section 6 concludes the study.

2 Experimental Lymph Dataset

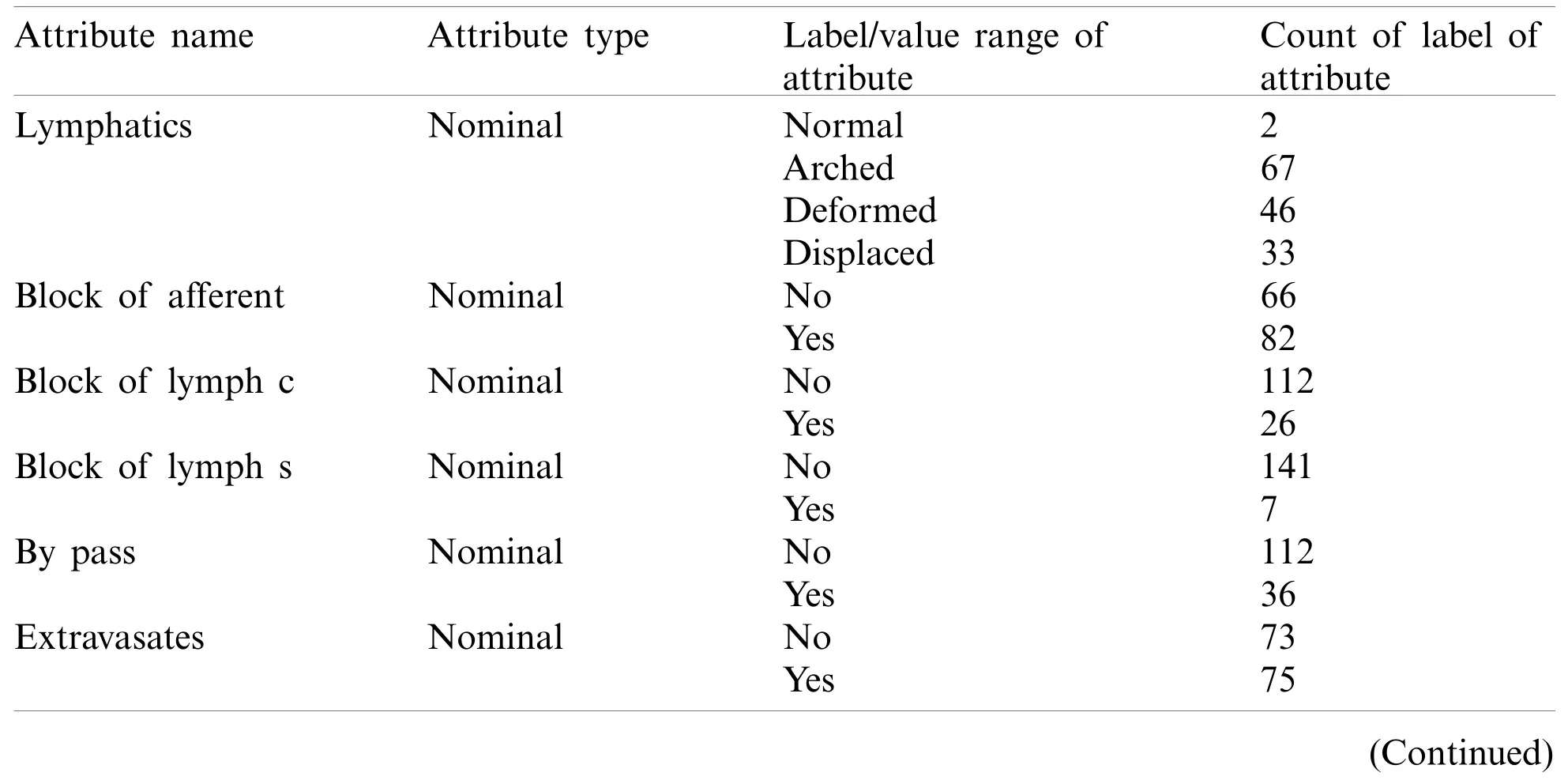

The lymphography dataset was accessed from the University of California Irvine (UCI) machine learning repository [32]. A description of the lymphography dataset is available in Tab. 2. It contains two instances of normal cases and eighty-one, sixty-one, and four instances of metastases, malign lymph, and fibrosis cases of lymph disease, respectively (148 instances in total). Fifteen nominal attributes (lymphatics, block of afferent, block of lymph c, etc.) and three numerical attributes (lymph nodes diminish, lymph nodes enlarge, and number of nodes) have been recorded for each instance, without missing values.

Table 2: Details of the lymph disease dataset

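Table 2 (continued)

| Attribute name | Attribute type | Label/value range of attribute | Count of label of attribute |
|---|---|---|---|
| Regeneration of | Nominal | No | 138 |
| | | Yes | 10 |
| Early uptake | Nominal | No | 44 |
| | | Yes | 104 |
| Changes in lymph | Nominal | Bean | 6 |
| | | Oval | 77 |
| | | Round | 65 |
| Defect in node | Nominal | No | 3 |
| | | Lacunar | 49 |
| | | Lacunar marginal | 46 |
| | | Lacunar central | 50 |
| Changes in node | Nominal | No | 6 |
| | | Lacunar | 42 |
| | | Lacunar marginal | 75 |
| | | Lacunar central | 25 |
| Changes in structure | Nominal | No | 2 |
| | | Grainy | 14 |
| | | Drop-like | 19 |
| | | Coarse | 31 |
| | | Diluted | 28 |
| | | Reticular | 2 |
| | | Stripped | 7 |
| | | Faint | 45 |
| Special forms | Nominal | No | 28 |
| | | Chalices | 43 |
| | | Vesicles | 77 |
| Dislocation of | Nominal | No | 50 |
| | | Yes | 98 |
| Exclusion of node | Nominal | No | 31 |
| | | Yes | 117 |
| Lymph nodes diminish | Numeric | 1.0–1.2 | 142 |
| | | 1.8–2.0 | 3 |
| | | 2.8–3.0 | 3 |
| Lymph nodes enlarge | Numeric | 1.0–1.6 | 13 |
| | | 1.6–2.2 | 72 |
| | | 2.8–3.4 | 43 |
| | | 3.4–4.0 | 20 |
| Number of nodes | Numeric | 1.0–2.2 | 94 |
| | | 2.2–3.3 | 18 |
| | | 3.3–4.5 | 10 |
| | | 4.5–5.7 | 8 |
| | | 5.7–6.8 | 8 |
| | | 6.8–8.0 | 10 |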

3 Feature Generation, Selection, and Classification

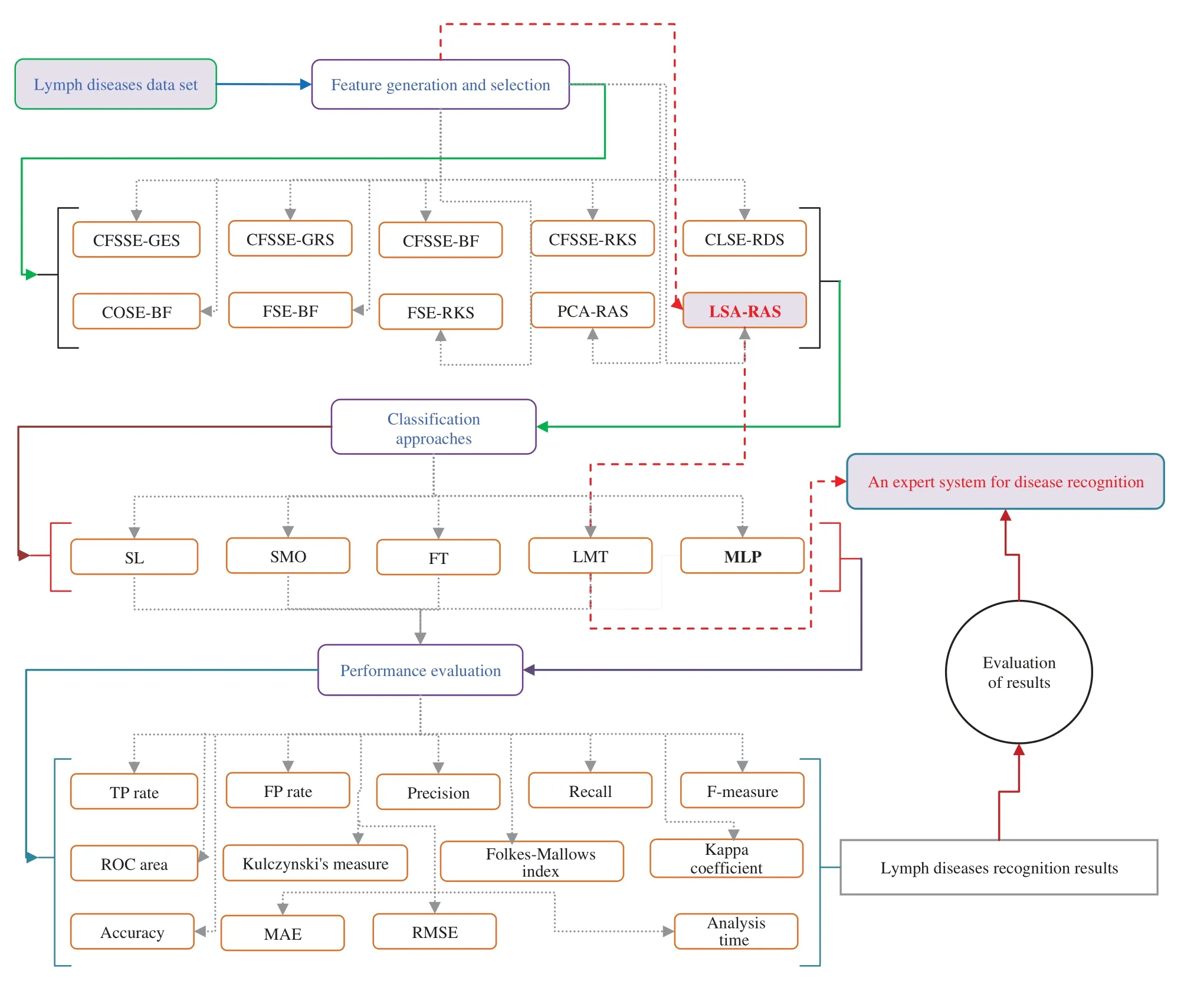

The LSA method generates an effective set of features by combining the original attributes. The RAS selects an optimal subset of features from the LSA generated set. Subsequently, the selected optimal subset of features improves the recognition accuracy of the MLP, simple linear logistic regression (SL), sequential minimal optimization (SMO), functional tree (FT), and logistic model tree (LMT) classification methods. Fig. 1 presents a schematic diagram of the analysis and validation procedures. A PC (64-bit Windows 10, Intel(R) Core(TM) i5-4590 CPU @ 3.30 GHz, 8 GB RAM) was used to implement the attribute selection, feature generation and selection, and classification methods, and their combinations, in WEKA [33]. Short descriptions of the attribute selection, feature generation and selection, and classification methods follow.

Figure 1: An overview of attribute selection, feature generation and selection, classification, and performance evaluation methods of the lymph disease
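The generate-then-rank idea behind LSA-RAS can be illustrated with a minimal NumPy sketch. This is not the WEKA implementation used in the study: the one-hot encoding of nominal attributes, the helper names `one_hot` and `lsa_ranked_features`, and the toy rows are all assumptions made for illustration. The sketch decomposes the encoded data with a singular value decomposition and keeps the latent variables with the largest singular values.

```python
import numpy as np

def one_hot(rows):
    """One-hot encode rows of nominal attribute labels into a 0/1 matrix."""
    n_cols = len(rows[0])
    # Map every (column, label) pair to one output column, in sorted order.
    keys = sorted({(j, row[j]) for row in rows for j in range(n_cols)})
    index = {key: i for i, key in enumerate(keys)}
    encoded = np.zeros((len(rows), len(keys)))
    for r, row in enumerate(rows):
        for j, label in enumerate(row):
            encoded[r, index[(j, label)]] = 1.0
    return encoded

def lsa_ranked_features(matrix, n_keep):
    """Project instances onto the n_keep top-ranked latent variables."""
    u, s, _ = np.linalg.svd(matrix, full_matrices=False)
    # NumPy returns singular values in descending order, so ranking
    # reduces to keeping the first n_keep latent variables.
    return u[:, :n_keep] * s[:n_keep], s

# Toy rows (hypothetical values, not taken from the lymphography dataset).
rows = [("no", "oval"), ("yes", "round"), ("no", "round"), ("yes", "bean")]
features, singular_values = lsa_ranked_features(one_hot(rows), n_keep=2)
print(features.shape)  # (4, 2)
```

In the study the number of retained latent variables is 13 (Tab. 3); the value 2 here is only for the toy data.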

3.1 The Proposed LSA-RAS Approach of Feature Generation and Selection

3.2 Functions and Tree-Based Classification Approaches

Three function-based classifiers (MLP, SL, and SMO) and two tree-based classifiers (FT and LMT) were implemented to test the efficiency of the LSA-RAS selected feature subset and the other feature subsets using 10-fold cross-validation.
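As a reminder of how 10-fold cross-validation partitions the 148 instances, the following sketch generates the train/test index splits in plain Python. It is a simplified, non-stratified version; the function name `k_fold_indices` and the seed are assumptions, and WEKA's own fold construction may differ.

```python
import random

def k_fold_indices(n_instances, k=10, seed=1):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_instances))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index starting at position i,
    # so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

splits = list(k_fold_indices(148, k=10))
print([len(test) for _, test in splits])
```

Each instance appears in exactly one test fold, so every classifier is evaluated once on every instance.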

3.2.1 MLP Classifier

The MLP is a systematic arrangement of artificial neurons in different layers (input, hidden, and output). The input of a neuron is defined as $Y=\sum_{n} W_n X_n + b$, using the weights $W_n$ and bias $b$ of attributes $X_n$ [36]. In the present study, the sigmoid activation function $O = 1/(1+\exp(-Y)) = 1/\left(1+\exp\left(-\left(\sum_{n} W_n X_n + b\right)\right)\right)$ was used to compute the output of neurons in the hidden layer. The linear activation function was used to calculate the output of neurons in the output layer. The MLP uses a feed-forward back-propagation strategy to update the weights and bias of each neuron until the error is minimized. The weight is updated using the error gradient ($\delta_j$) and learning rate ($\eta$) in the delta rule as $w_{ij}(p+1)=w_{ij}(p)+\Delta w_{ij}(p)$, where $\Delta w_{ij}(p)=\eta\,\delta_j\,x_{ji}$ and $\delta_j=(t_j-o_j)\,o_j\,(1-o_j)$. Using a momentum term ($\alpha$), the weight update ($\Delta w_{ij}$) is defined as $\Delta w_{ij}(p)=\eta\,\delta_j\,x_{ji}+\alpha\,\Delta w_{ij}(p-1)$. The error $(t_j-o_j)$ decreases with the number of training epochs. The optimal MLP classifier was built using $\eta=0.3$, $\alpha=0.2$, 500 training epochs, and a hidden layer size of (attributes + classes)/2 [33]. Moreover, a decaying value of $\eta$ (dividing $\eta$ by the current epoch number) was used to limit divergence from the target. Normalized values of the attributes and the classes (−1 to +1) are used in the training and validation. A nominal-to-binary filter was used for nominal attributes [33].
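The delta rule with momentum described above can be sketched for a single sigmoid neuron. This is an illustrative reduction, not the WEKA MLP: there is no hidden layer and no learning-rate decay, the function names are assumptions, and the training data is a toy logical-OR problem rather than the lymph data. The values of the learning rate, momentum, and epoch count follow the text.

```python
import math

def sigmoid(y):
    """Sigmoid activation O = 1 / (1 + exp(-Y))."""
    return 1.0 / (1.0 + math.exp(-y))

def train_neuron(samples, eta=0.3, alpha=0.2, epochs=500):
    """Train one sigmoid neuron with the delta rule and a momentum term."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    prev_dw = [0.0] * n_inputs  # previous weight updates, for momentum
    prev_db = 0.0
    for _ in range(epochs):
        for x, target in samples:
            out = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # Error gradient: delta = (t - o) * o * (1 - o)
            delta = (target - out) * out * (1.0 - out)
            for i, xi in enumerate(x):
                dw = eta * delta * xi + alpha * prev_dw[i]
                weights[i] += dw
                prev_dw[i] = dw
            db = eta * delta + alpha * prev_db
            bias += db
            prev_db = db
    return weights, bias

# Toy problem (logical OR), chosen only because it is linearly separable.
samples = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
           ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
weights, bias = train_neuron(samples)
predictions = [round(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias))
               for x, _ in samples]
print(predictions)
```

The momentum term reuses a fraction of the previous update, which smooths the trajectory of the weights across successive instances.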

3.2.2 SL Classifier

3.2.3 SMO

3.2.4 FT Classifier

FT [38] uses logistic regression functions at the inner nodes and/or leaves and a constructor function (generalized linear model (GLM)) to build the decision tree. The GLM combines the original attributes to generate novel attributes. First, the constructor function is used to build the initial model. In the second step, the model is mapped to new attributes whose number equals the number of classes in the dataset. The new attributes represent the class membership probability of an instance, computed using the constructor function. A merit function is used to evaluate the new attributes together with the original attributes. The FT was built using the following parameters: 150 boosting iterations, a minimum of 15 instances for the splitting of nodes, and β = 0 [33].

3.2.5 LMT Classifier

LMT combines linear logistic regression (low variance, high bias) and tree induction (high variance, low bias) classification methods [39]. Logistic regression functions are generated at every node of the tree using the LogitBoost algorithm. An information gain criterion was used for splitting the tree, and after the tree was fully grown, the CART algorithm was used for pruning. Heuristic cross-validation was used to control the number of LogitBoost iterations and avoid overfitting. The additive logistic regression of the LogitBoost algorithm for each class $M$ is defined as $L_M(x)=\sum_{i=1}^{n}\beta_i x_i+\beta_0$. The posterior probability at a leaf node is defined as $P(M\mid x)=\exp(L_M(x))/\sum_{M'}\exp(L_{M'}(x))$. The optimal performance of LMT was achieved with a minimum of 15 instances for the splitting of nodes, 150 boosting iterations, and β = 0 (weight trimming value) [33].
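The leaf posterior is a softmax over the per-class linear functions, which the following sketch makes concrete. The coefficient values and the function name `leaf_posteriors` are hypothetical; in LMT the coefficients would come from LogitBoost, which is not reproduced here.

```python
import math

def leaf_posteriors(x, class_models):
    """Return P(M | x) for each class M from its linear function L_M(x)."""
    scores = {
        label: sum(b * xi for b, xi in zip(betas, x)) + beta0
        for label, (betas, beta0) in class_models.items()
    }
    # Subtract the largest score before exponentiating for numerical stability;
    # this does not change the resulting probabilities.
    top = max(scores.values())
    exp_scores = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exp_scores.values())
    return {label: e / total for label, e in exp_scores.items()}

# Hypothetical coefficients (betas, beta0) for two classes and two features.
class_models = {
    "metastases": ([1.0, -0.5], 0.2),
    "malign lymph": ([-0.8, 0.9], 0.0),
}
posteriors = leaf_posteriors([0.5, 1.0], class_models)
print(posteriors)
```

The class with the largest linear score always receives the largest posterior, and the posteriors sum to one.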

3.3 Additional Attributes and Feature Selection Methods

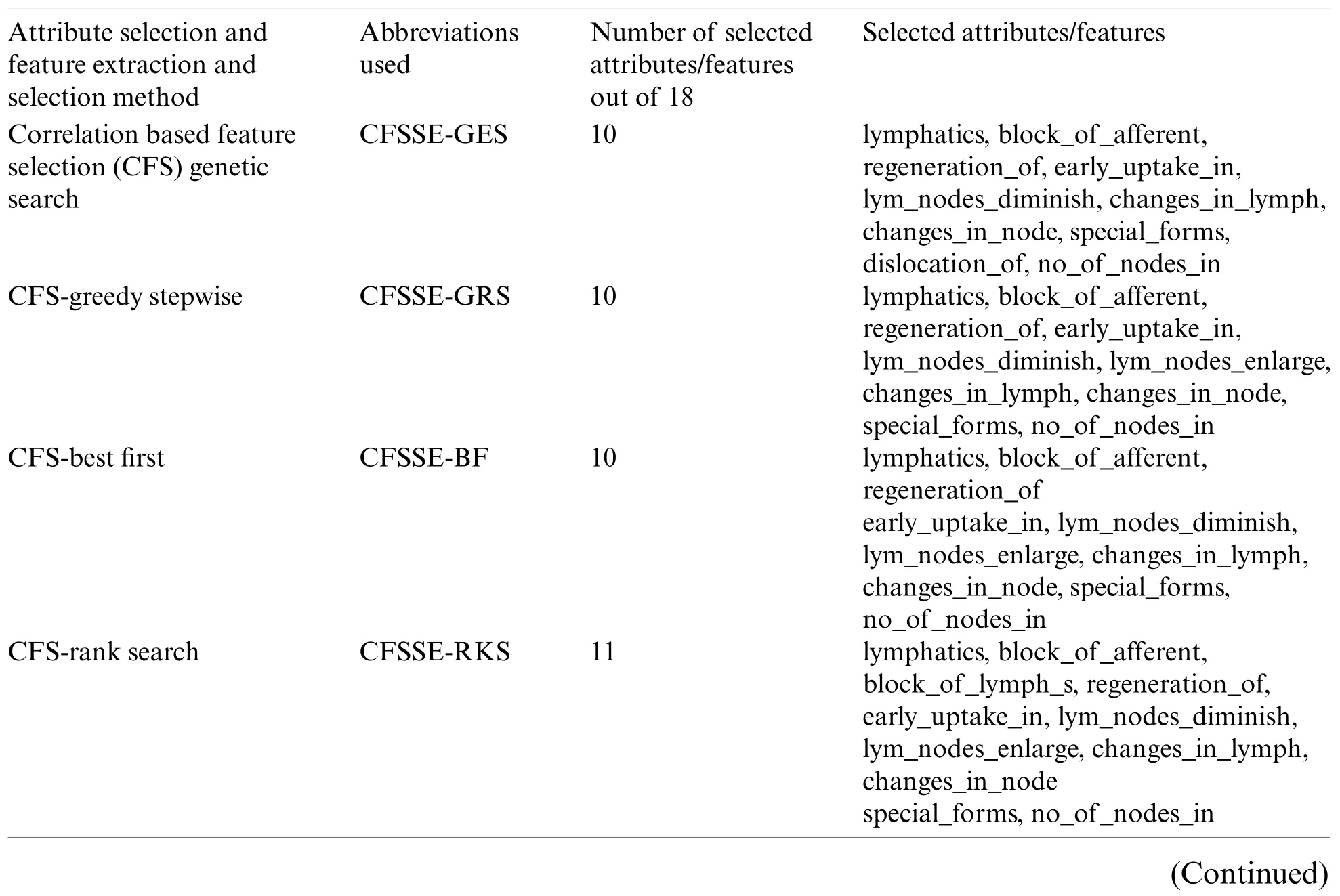

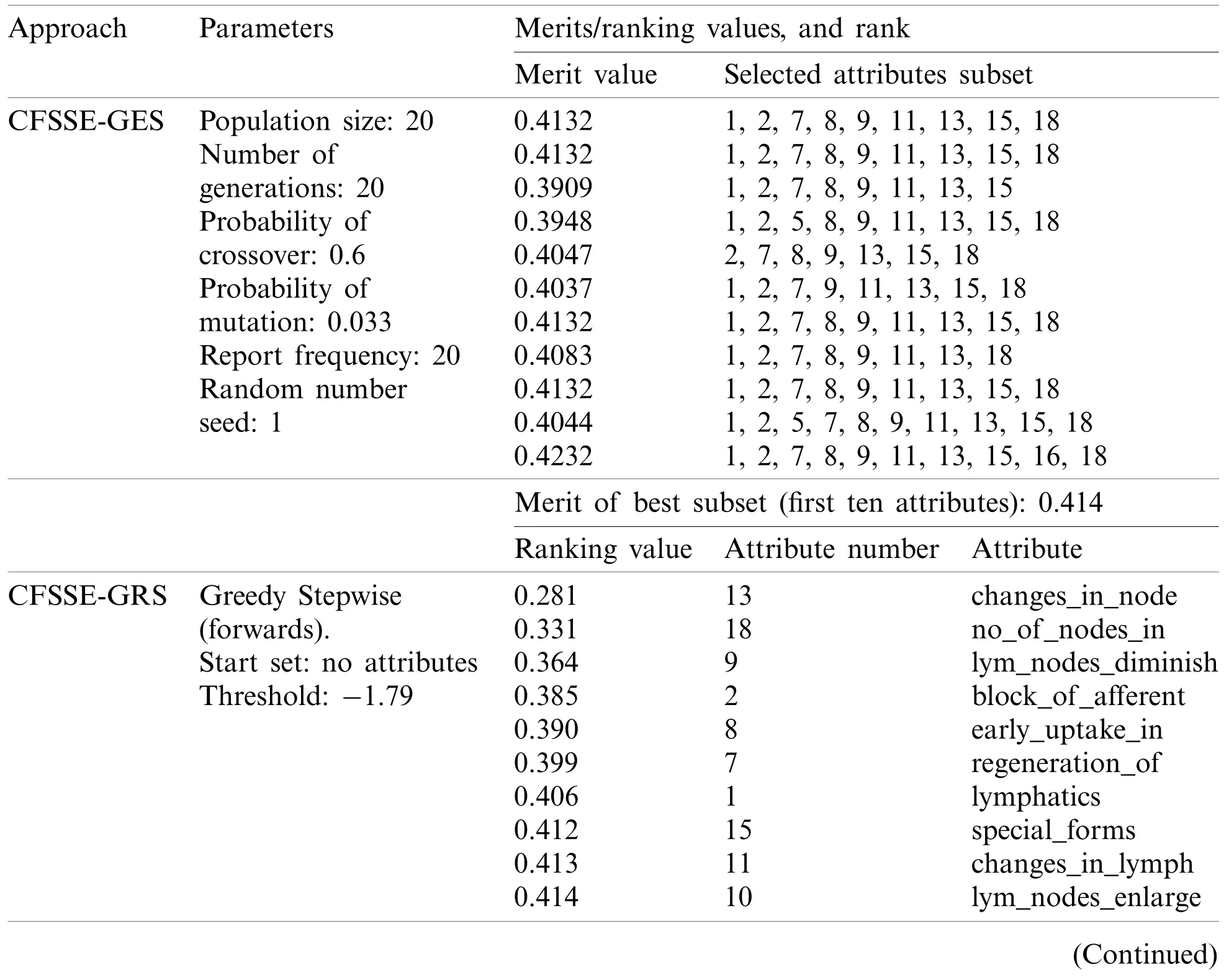

Nine feature selection methods (Tab. 3) were used in the performance comparison analysis. The correlation-based feature selection genetic search (CFS-GES) method combines two approaches: correlation-based feature selection (CFS) and genetic search (GES). The CFS uses a correlation measure to select features that are highly correlated with the class and weakly correlated with the other features.

Table 3: Attribute and feature selection methods, and selected attributes and features

Table 3 (continued)

| Attribute selection and feature extraction and selection method | Abbreviations used | Number of selected attributes/features out of 18 | Selected attributes/features |
|---|---|---|---|
| Classifier subset evaluation random search | CLSE-RDS | 9 | block_of_afferent, block_of_lymph_c, block_of_lymph_s, extravasates, changes_in_lym, defect_in_node, changes_in_node, special_forms, no_of_nodes_in |
| Consistency subset evaluation best first | COSE-BF | 9 | lymphatics, block_of_afferent, block_of_lymph_c, changes_in_lymph, defect_in_node, changes_in_node, changes_in_stru, special_forms, exclusion_of_no |
| Filtered subset evaluation best first | FSE-BF | 10 | lymphatics, block_of_afferent, regeneration_of, early_uptake_in, lym_nodes_diminish, lym_nodes_enlarge, changes_in_lymph, changes_in_node, special_forms, no_of_nodes_in |
| Filtered subset evaluation rank search | FSE-RKS | 11 | lymphatics, block_of_afferent, block_of_lymph_s, regeneration_of, early_uptake_in, lym_nodes_diminish, lym_nodes_enlarge, changes_in_lymph, changes_in_node, special_forms, no_of_nodes_in |
| Principal component analysis ranker search | PCA-RAS | 25 | Principal components (PC1–PC25) |
| Latent semantic analysis ranker search | LSA-RAS | 13 | Latent variables (LV1–LV13) |

On the basis of these correlation values, a merit measure $M_s$ of a feature subset is defined as $M_s=k\,\overline{r_{cf}}/\sqrt{k+k(k-1)\,\overline{r_{ff}}}$, where $k$ is the number of features in the subset, and $\overline{r_{cf}}$ and $\overline{r_{ff}}$ denote the average feature-class and feature-feature correlations, respectively [33]. The GES method implements a simple genetic algorithm to search for an optimal set of attributes. Selection, crossover, and mutation operators are used in the GA to mimic the process of natural evolution [36]. The GES method was built using an initial population size of 20, a crossover probability of 0.6, 20 generations, and a mutation probability of 0.033 [33]. The GES method was used for the ranking of the attributes in combination with CFS (Tab. 3). The greedy stepwise (GRS) method selects attributes in either the forward or the backward direction. The best subset of attributes is selected by including attributes step by step for as long as the merit of the feature subset increases. A forward selection approach was implemented in the selection of an optimal subset of attributes. The GRS method was used in combination with the CFS method for attribute ranking and selection of an optimal subset of attributes (Tab. 3).
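The CFS merit rewards subsets whose features correlate with the class and penalizes redundancy among the features. A minimal sketch, assuming the standard form of the merit (subset size k, average feature-class correlation, average feature-feature correlation) and using hypothetical correlation values:

```python
import math

def cfs_merit(k, r_cf, r_ff):
    """CFS merit of a subset of k features.

    r_cf: average feature-class correlation of the subset.
    r_ff: average feature-feature correlation within the subset.
    """
    return (k * r_cf) / math.sqrt(k + k * (k - 1) * r_ff)

# Hypothetical correlations: same relevance, different redundancy.
low_redundancy = cfs_merit(k=10, r_cf=0.3, r_ff=0.1)
high_redundancy = cfs_merit(k=10, r_cf=0.3, r_ff=0.5)
print(low_redundancy > high_redundancy)  # True
```

For a single feature (k = 1) the merit reduces to its feature-class correlation, which is why search methods such as GRS start from the most class-correlated attribute.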

Redundant attributes are discarded using a threshold value of −1.80 [33]. In the best first (BF) method, a hill-climbing approach with backtracking was used to select the optimal attributes. A forward search and a search termination threshold of 5 were used in the BF method in the present analysis [33]. The BF method was used in combination with the CFS, consistency subset evaluation, and filtered subset evaluation methods (Tab. 3). The rank search (RKS) approach uses a forward selection search to generate an optimal attribute subset of maximum merit. Attributes are included one at a time, the best attribute at each step, to generate an optimal subset of attributes. An attribute evaluator (gain ratio, with a starting point of 0 and a step size of 1) was used to evaluate the attribute subset after each inclusion, for as long as the merit of the attribute subset increases [21]. The RKS method was combined with the CFS and filtered subset evaluation (FSE) methods for attribute ranking and selection (Tab. 3).

Classifier subset evaluation (CLSE) uses a classification method in the selection of an optimal subset of attributes. The CLSE uses the ZeroR classification approach to compute the merit of the feature subset. ZeroR predicts the mean for a numeric class and the mode for a nominal class. This concept is used to compute the merit of an attribute subset in CLSE [33]. The random search (RDS) method selects random subsets of attributes in finding the optimal subset. The other parameters of the RDS method were a seed of 1 for random number generation and a search over 25% of the search space [33]. The RDS was used in combination with the CLSE (Tab. 3).

Consistency subset evaluation (COSE) selects an optimal subset of attributes on the basis of its level of consistency in the class values. The consistency ($C_s$) of an attribute subset is defined as $C_s=1-\frac{\sum_{i=0}^{J}\left(|D_i|-|M_i|\right)}{N}$, where $N$ represents the number of instances in the dataset, $s$ denotes the attribute subset, $J$ stands for the number of distinct combinations of attribute values, and $|D_i|$ and $|M_i|$ denote the frequency and the cardinality of the majority class of the $i$th attribute value combination, respectively [21]. The COSE was used in combination with the BF method (Tab. 3).

Filtered subset evaluation (FSE) combines CFS with a random subsample filter in the selection of an optimal subset of attributes. An initial subset of features is selected at random by the random subsample filter and used as the input of the CFS method. A spread value is always defined in the FSE to control the effect of the least and most frequent classes [40,41]. The FSE was used in combination with the BF and RKS methods to select an optimal subset of features (Tab. 3).

The principal component analysis (PCA) method does not select attributes directly; instead, it first transforms the original attributes into a novel principal component (PC) space and then selects a significant subset of the resulting features using a ranking method. The original attributes are projected along the PC directions to obtain the novel subset of features. The PC component matrix $PC_{m\times k}$ of an original data matrix $O_{m\times n}$ ($m$ instances and $n$ attributes) is obtained as $O_{m\times n}=PC_{m\times k}\,L_{k\times n}+R_{m\times n}$, where $R_{m\times n}$ is the residual matrix [36]. The loading matrix $L_{k\times n}$ denotes the significance of the attributes in the formation of the PC components. The RAS method is combined with the PCA to select an ideal subset of PC components [33].
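The consistency measure used by COSE can be made concrete with a short sketch. It assumes the usual reading of the measure: instances are grouped by their values on the attribute subset, and every instance outside the majority class of its group counts as inconsistent. The function name and the toy instances are hypothetical.

```python
from collections import Counter, defaultdict

def consistency(instances, labels, subset):
    """Consistency of an attribute subset: 1 - sum(|D_i| - |M_i|) / N."""
    groups = defaultdict(Counter)
    for row, label in zip(instances, labels):
        # Instances sharing the same values on the subset form one group D_i.
        key = tuple(row[j] for j in subset)
        groups[key][label] += 1
    # |D_i| - |M_i|: group size minus the count of its majority class.
    inconsistent = sum(sum(c.values()) - max(c.values()) for c in groups.values())
    return 1.0 - inconsistent / len(instances)

# Toy instances (hypothetical): attribute 1 determines the class, attribute 0 does not.
instances = [("no", "oval"), ("no", "round"), ("yes", "oval"), ("yes", "round")]
labels = ["metastases", "malign lymph", "metastases", "malign lymph"]
print(consistency(instances, labels, subset=[1]))  # 1.0
print(consistency(instances, labels, subset=[0]))  # 0.5
```

A merit of 1, as reported for COSE-BF in Tab. 4, therefore means that no two instances agree on the selected attributes while differing in class.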

3.4 Performance Assessment Measures of Classification Approaches

The performance of the classification approaches is evaluated on the basis of the average values of the true positive (TP) rate, false positive (FP) rate, precision, recall, Kulczynski’s measure (arithmetic mean of precision and recall), Fowlkes-Mallows index (geometric mean of precision and recall), F-measure (harmonic mean of precision and recall), Kappa coefficient, receiver operating characteristic (ROC) area, and analysis time. The Kappa coefficient is computed using the number of instances in a row ($x_{i.}$), column ($x_{.i}$), and diagonal ($x_{ii}$) of the confusion matrix of the classification method and the total number of instances in the dataset ($N$) as $k=\left(N\sum_i x_{ii}-\sum_i x_{i.}x_{.i}\right)/\left(N^2-\sum_i x_{i.}x_{.i}\right)$ [40]. The TP, FP, false negative (FN), and true negative (TN) counts are used to compute the ROC area as $\mathrm{ROC\ area}=\left(1+\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})-\mathrm{FP}/(\mathrm{FP}+\mathrm{TN})\right)/2$ [18].
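The measures above can be computed directly from a confusion matrix. The sketch below assumes the standard definition of the Kappa coefficient and the single-point ROC area; the function names and the two-class counts are hypothetical and chosen only so the arithmetic is easy to follow.

```python
import math

def kappa(confusion):
    """Kappa coefficient from a square confusion matrix (rows: actual class)."""
    n = sum(sum(row) for row in confusion)
    diagonal = sum(confusion[i][i] for i in range(len(confusion)))
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    chance = sum(r * c for r, c in zip(row_totals, col_totals))
    return (n * diagonal - chance) / (n * n - chance)

def combined_measures(precision, recall):
    """Arithmetic, geometric, and harmonic means of precision and recall."""
    return {
        "kulczynski": (precision + recall) / 2,
        "fowlkes_mallows": math.sqrt(precision * recall),
        "f_measure": 2 * precision * recall / (precision + recall),
    }

def roc_area(tp, fp, fn, tn):
    """Single-point ROC area: (1 + TP rate - FP rate) / 2."""
    return (1 + tp / (tp + fn) - fp / (fp + tn)) / 2

# Hypothetical two-class confusion matrix (not taken from Tab. 7).
toy = [[40, 10], [5, 45]]
print(kappa(toy))  # 0.7
measures = combined_measures(precision=0.9, recall=0.8)
print(measures)
print(roc_area(tp=45, fp=10, fn=5, tn=40))
```

For any precision and recall, the F-measure is never larger than the Fowlkes-Mallows index, which in turn is never larger than Kulczynski's measure, so the three differ only when precision and recall are unbalanced.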

4 Validation Results of the Proposed Approach and Comparative Analysis Results

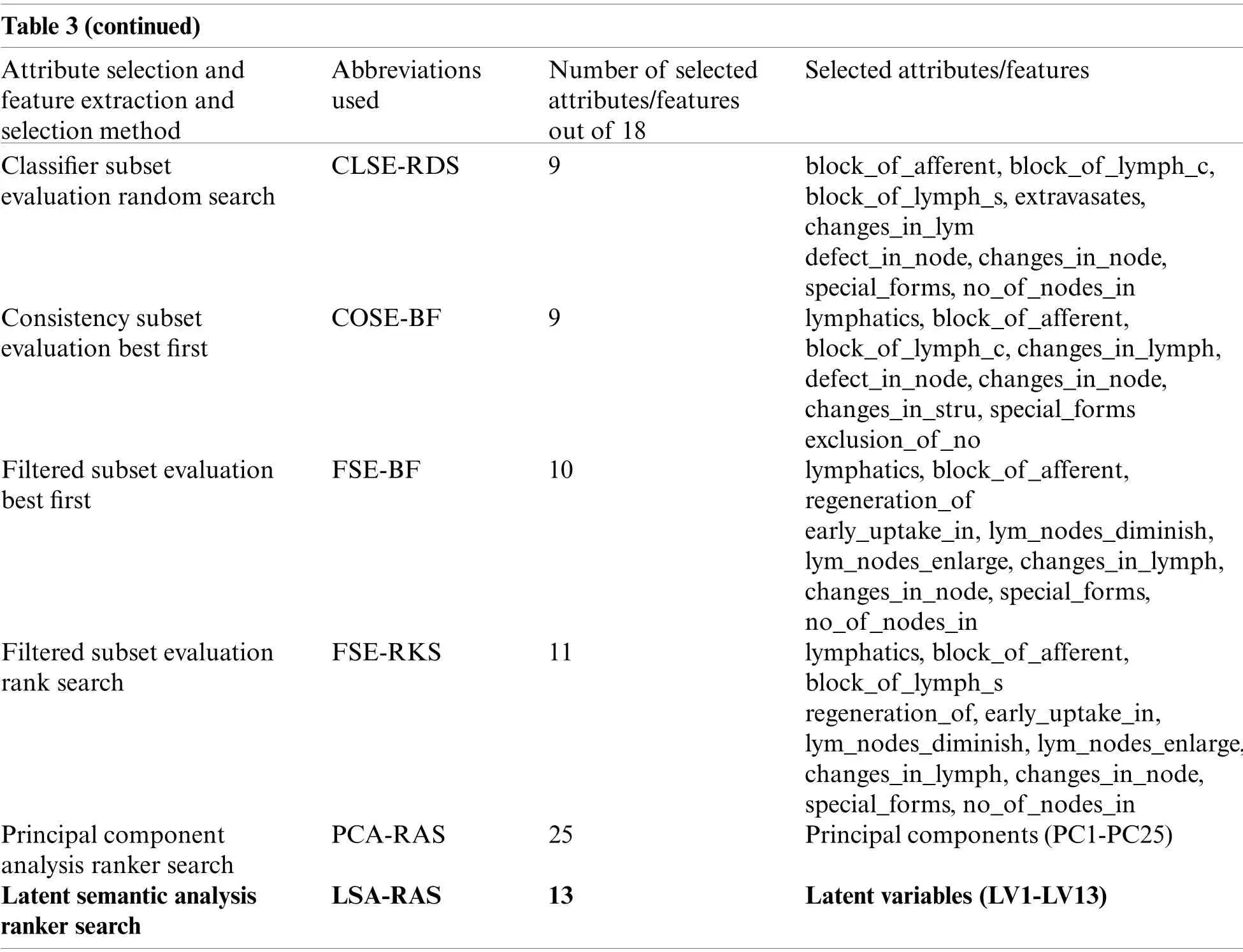

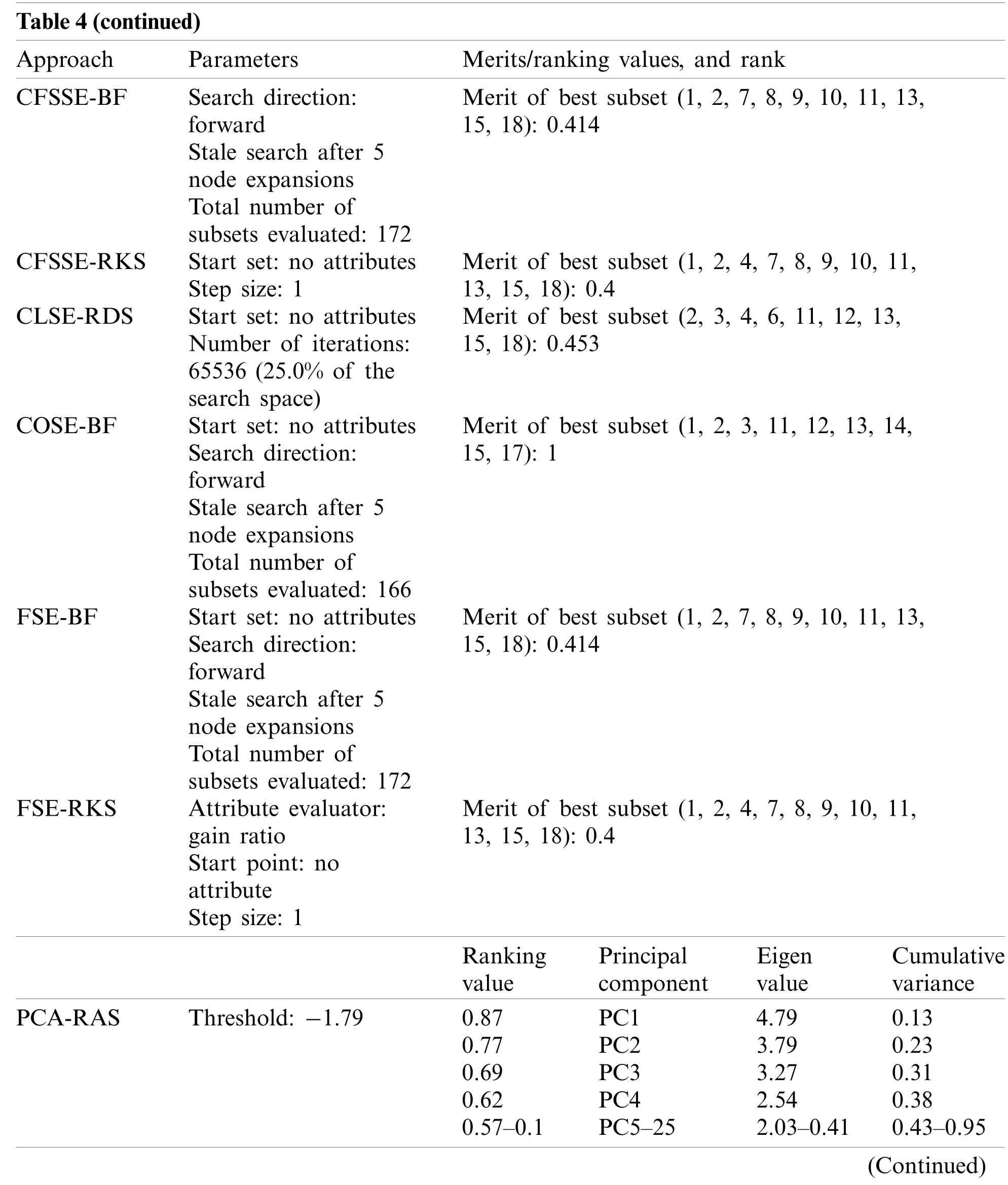

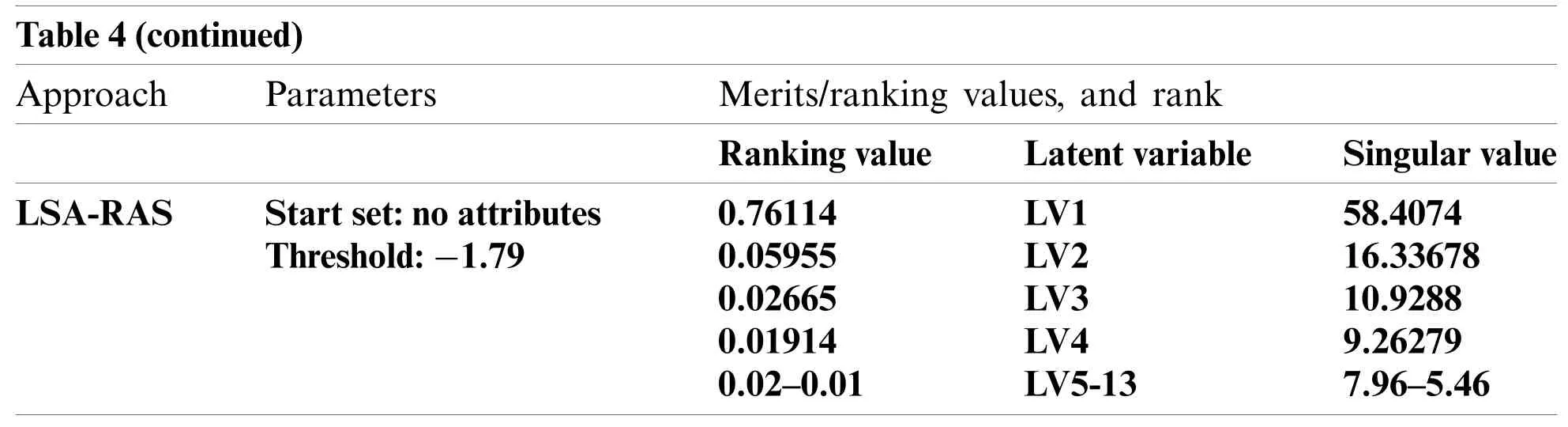

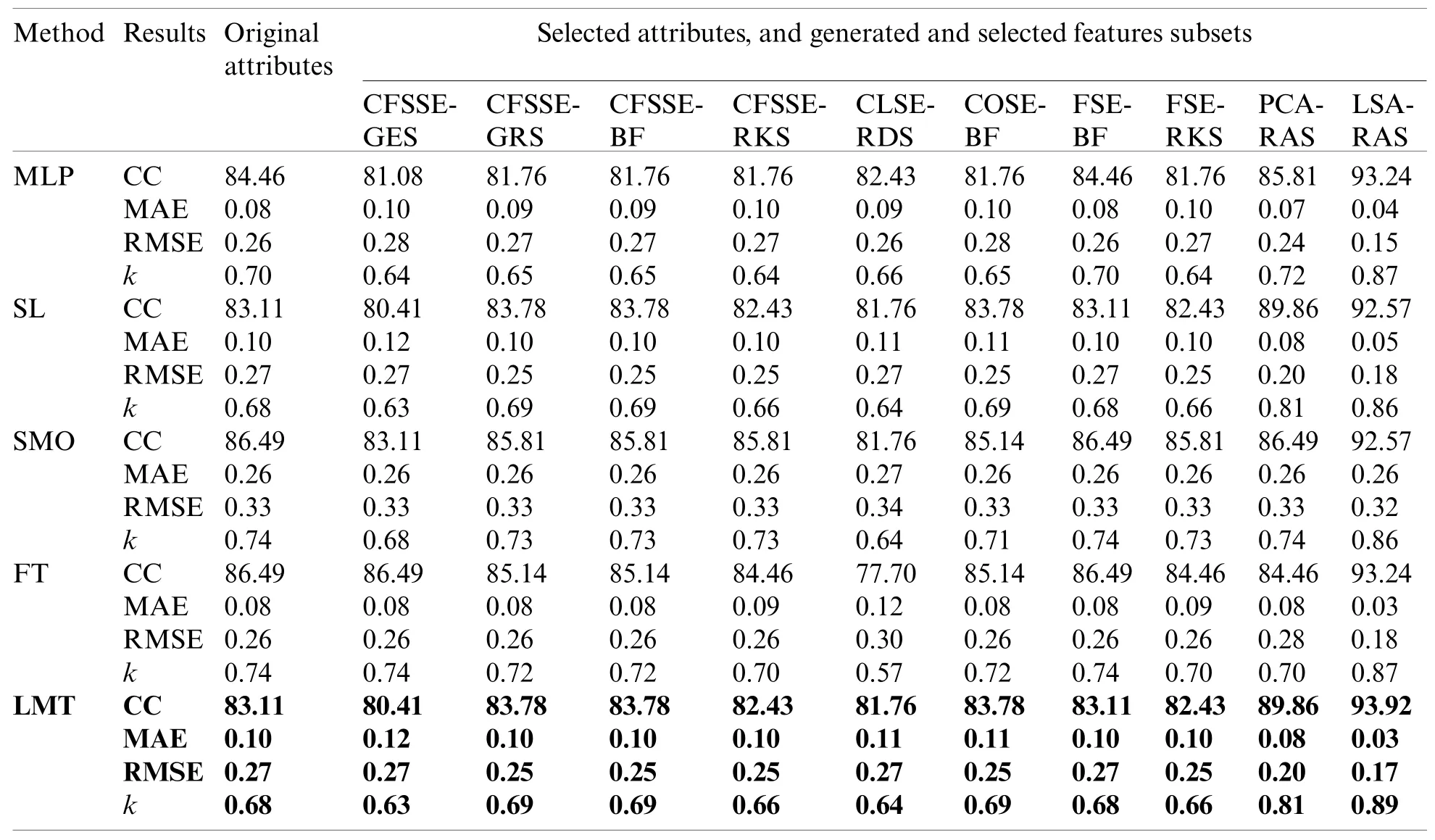

Tab. 3 summarizes the attributes and features selected by the different approaches. The CFSSE-RKS method selects the maximum number of attributes (11 out of 18), and PCA-RAS generates the maximum number of features. The CFSSE-GES, CFSSE-GRS, and CFSSE-BF methods select a similar number of attributes (Tab. 3). The CFSSE-GES and CFSSE-GRS methods select different attribute subsets, while the CFSSE-GRS and CFSSE-BF methods select the same attribute subset. It is also noticeable that the PCA-RAS and LSA-RAS methods select the optimal subset of features considering the contributions of all attributes, while the rest of the methods in Tab. 3 select an optimal subset of the original attributes. The parametric details of the attribute/feature selection methods and the merits/rankings of the selected subsets of attributes/features are summarized in Tab. 4. The performance of the classification methods using the selected subsets of attributes/features is summarized in Tab. 5. Tab. 6 presents the performance evaluation metrics of the classification methods.

Table 4: Merits and ranking values of selected attributes and features

Table 4 (continued)

| Approach | Parameters | Merits/ranking values, and rank |
|---|---|---|
| CFSSE-BF | Search direction: forward; Stale search after 5 node expansions; Total number of subsets evaluated: 172 | Merit of best subset (1, 2, 7, 8, 9, 10, 11, 13, 15, 18): 0.414 |
| CFSSE-RKS | Start set: no attributes; Step size: 1 | Merit of best subset (1, 2, 4, 7, 8, 9, 10, 11, 13, 15, 18): 0.4 |
| CLSE-RDS | Start set: no attributes; Number of iterations: 65536 (25.0% of the search space) | Merit of best subset (2, 3, 4, 6, 11, 12, 13, 15, 18): 0.453 |
| COSE-BF | Start set: no attributes; Search direction: forward; Stale search after 5 node expansions; Total number of subsets evaluated: 166 | Merit of best subset (1, 2, 3, 11, 12, 13, 14, 15, 17): 1 |
| FSE-BF | Start set: no attributes; Search direction: forward; Stale search after 5 node expansions; Total number of subsets evaluated: 172 | Merit of best subset (1, 2, 7, 8, 9, 10, 11, 13, 15, 18): 0.414 |
| FSE-RKS | Attribute evaluator: gain ratio; Start point: no attribute; Step size: 1 | Merit of best subset (1, 2, 4, 7, 8, 9, 10, 11, 13, 15, 18): 0.4 |

| Approach | Parameters | Ranking value | Principal component | Eigenvalue | Cumulative variance |
|---|---|---|---|---|---|
| PCA-RAS | Threshold: −1.79 | 0.87 | PC1 | 4.79 | 0.13 |
| | | 0.77 | PC2 | 3.79 | 0.23 |
| | | 0.69 | PC3 | 3.27 | 0.31 |
| | | 0.62 | PC4 | 2.54 | 0.38 |
| | | 0.57–0.1 | PC5–25 | 2.03–0.41 | 0.43–0.95 |

Notes: attribute no. 1: lymphatics, 2: block_of_afferent, 3: block_of_lymph_c, 4: block_of_lymph_s, 5: by_pass, 6: extravasates, 7: regeneration_of, 8: early_uptake_in, 9: lym_nodes_diminish, 10: lym_nodes_enlar, 11: changes_in_lym, 12: defect_in_node, 13: changes_in_node, 14: changes_in_stru, 15: special_forms, 16: dislocation_of, 17: exclusion_of_no, and 18: no_of_nodes_in.

Table 5: Performance of the classification methods using different features subsets in the lymph disease recognition

The LSA-RAS generated feature subset yields the maximum accuracy of the classification methods (Tab. 5). Among the three function-based classification methods, the maximum classification accuracy (93.24%) and the minimum mean absolute error (MAE) (0.04) were achieved by the MLP. Of the two tree-based methods, the LMT classifier achieved a higher accuracy (93.92%) and a higher kappa coefficient (0.89) than the FT method.

Table 6: Evaluation metrics of classification methods using selected attribute and features subsets in the lymph disease recognition

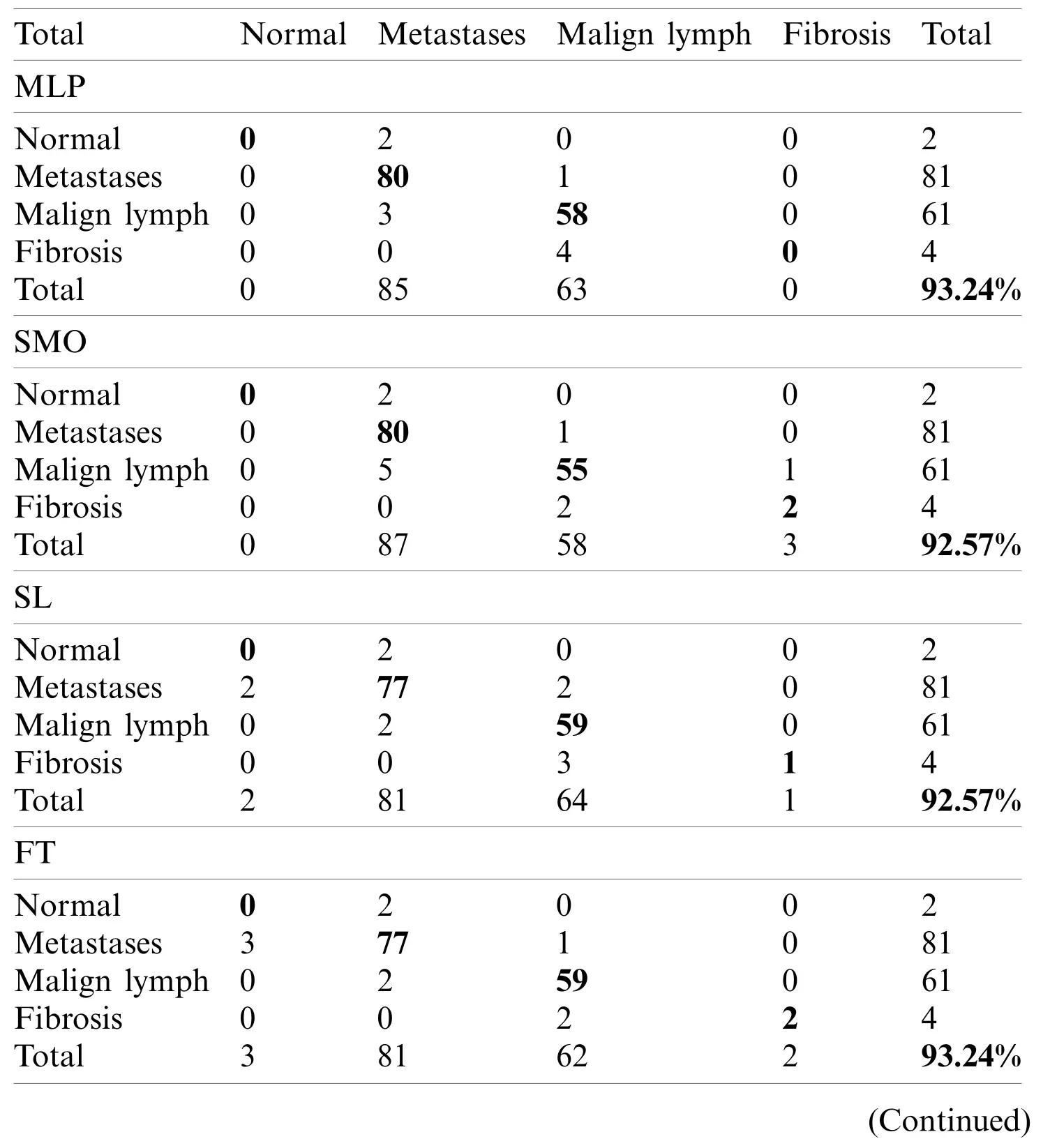

The LSA-RAS selected feature subset improves the classification accuracy of the LMT classifier by 10.81% over the original attributes. Moreover, with most of the selected attribute subsets, the accuracy of the classification methods is lower than or comparable to that obtained with the original attributes; the exceptions are the PCA-RAS and LSA-RAS selected feature subsets. For each of the classification methods, the LSA-RAS selected feature subset yields better evaluation measures (maximum average values of the TP rate, precision, recall, F-measure, ROC area, Kulczynski’s measure, and Fowlkes-Mallows index, and the minimum average value of the FP rate) than the other selected attribute subsets, the other selected feature subset, and the original attributes. Furthermore, the LMT classification method using the LSA-RAS selected feature subset has the best values of these evaluation metrics among all the classification methods. A detailed class confusion matrix of each of the classification methods using the best performing LSA-RAS selected feature subset is given in Tab. 7. The MLP classifier recognizes 138 out of 148 instances of lymph disease correctly. Its largest number of correctly identified instances is in the metastases class (80 out of 81, accuracy of 98.77%). Fig. 2 presents the error curve of the MLP method using three attribute subsets and one feature subset.

Table 7: The class confusion matrix in recognition of the lymph diseases using the LSA-RAS features subset

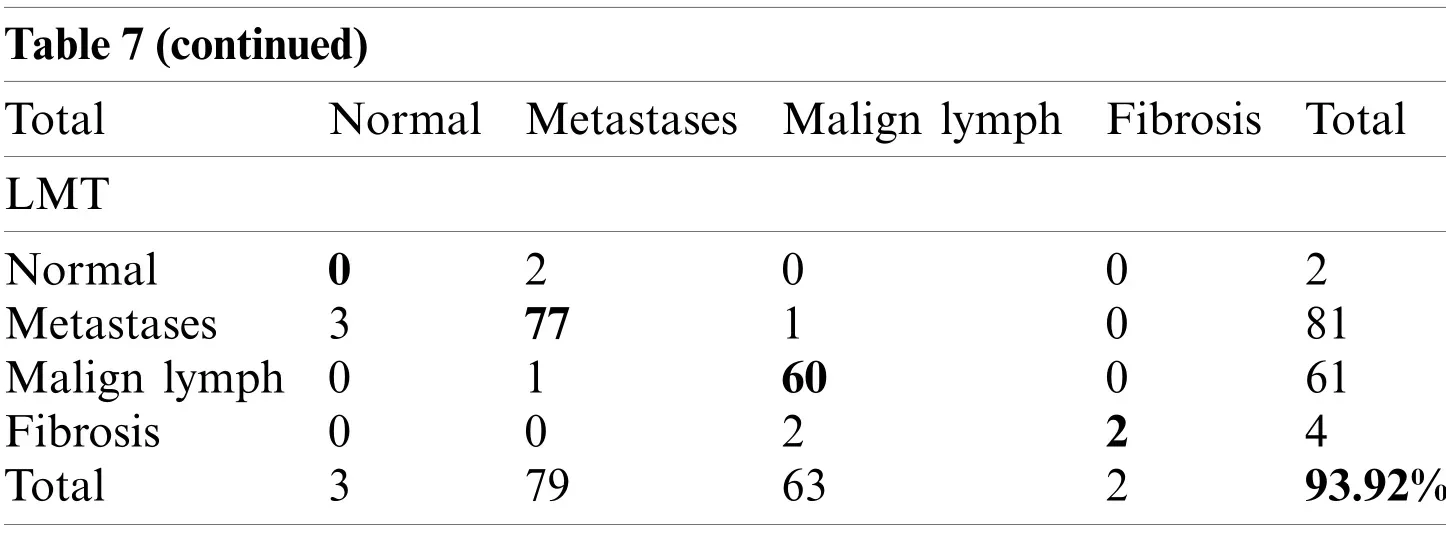

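Table 7 (continued)

| Classifier | Actual class | Normal | Metastases | Malign lymph | Fibrosis | Total |
|---|---|---|---|---|---|---|
| LMT | Normal | 0 | 2 | 0 | 0 | 2 |
| | Metastases | 3 | 77 | 1 | 0 | 81 |
| | Malign lymph | 0 | 1 | 60 | 0 | 61 |
| | Fibrosis | 0 | 0 | 2 | 2 | 4 |
| | Total | 3 | 80 | 63 | 2 | 93.92% |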
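The overall and class-wise accuracies of LMT can be recomputed from the confusion matrix above. The sketch assumes that rows are the actual classes and columns the predicted classes, which is consistent with the row totals matching the class sizes of the dataset; the function names are illustrative.

```python
classes = ["normal", "metastases", "malign lymph", "fibrosis"]

# LMT confusion matrix from Tab. 7 (rows assumed actual, columns predicted).
lmt = [
    [0, 2, 0, 0],
    [3, 77, 1, 0],
    [0, 1, 60, 0],
    [0, 0, 2, 2],
]

def accuracy(confusion):
    """Fraction of instances on the diagonal of the confusion matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / sum(sum(row) for row in confusion)

def class_recall(confusion):
    """Per-class fraction of actual instances that were predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]

print(round(100 * accuracy(lmt), 2))  # 93.92
for name, recall in zip(classes, class_recall(lmt)):
    print(name, round(100 * recall, 2))
```

The class-wise values make the effect of class imbalance visible: the two small classes (normal and fibrosis) contribute little to the overall accuracy even when they are poorly recognized.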

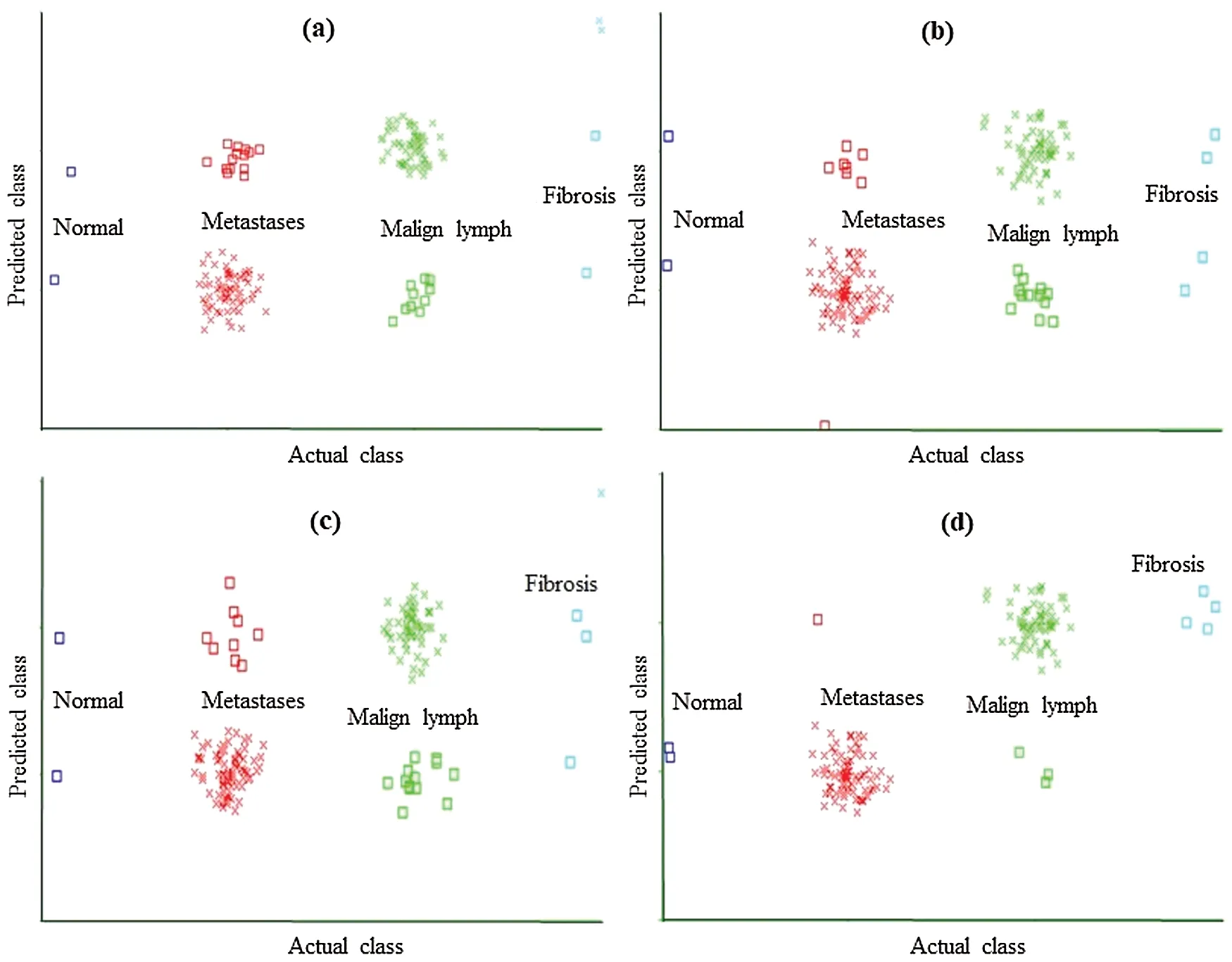

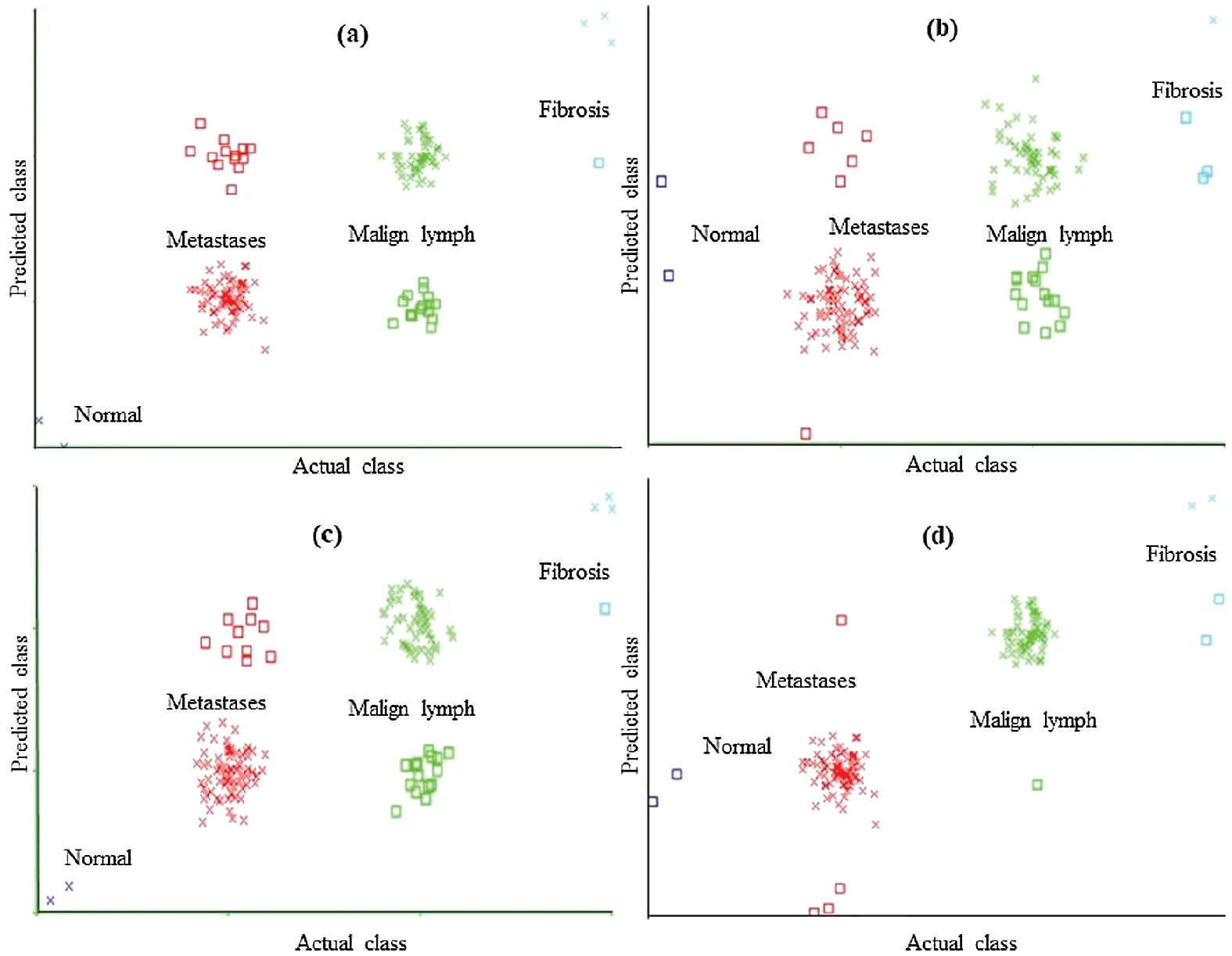

Figure 2: Classification error curve of MLP using (a) CFSSE-GES, (b) CLSE-RDS, (c) FSE-RKS, and (d) LSA-RAS selected attribute and feature subsets

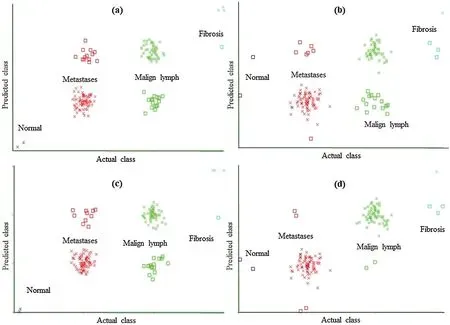

The classification error in Fig. 2 is denoted by the square symbol. The LSA-RAS feature subset results in the minimum classification error (10) of the MLP compared with the other three attribute subsets (Fig. 2d), which agrees with the confusion matrix of the MLP in Tab. 7. The CFSSE-GES selected attribute subset results in the maximum error of the MLP (Fig. 2a). The error curve of the SL is presented in Fig. 3. The SL classifier recognizes 137 out of 148 instances of lymph disease correctly. Its largest number of correctly identified instances is in the malign lymph class (59 out of 61, accuracy of 96.72%, Tab. 7). The LSA-RAS selected feature subset results in the minimum classification error (11) of the SL compared with the other three attribute subsets (Fig. 3d), which agrees with the confusion matrix of the SL in Tab. 7. The maximum error of the SL was obtained for the CFSSE-GES selected attribute subset (Fig. 3a). Fig. 4 presents the error curve of the SMO classification method. Like the SL, the SMO classification method recognizes 137 out of 148 instances of lymph disease correctly, though the confusion matrices differ slightly (Tab. 7). Its largest number of correctly identified instances is in the metastases class (80 out of 81, accuracy of 98.77%, Tab. 7). The LSA-RAS selected feature subset results in the minimum classification error (11) of the SMO compared with the other three attribute subsets (Fig. 4d), which agrees with the confusion matrix of the SMO in Tab. 7.

Figure 3: Classification error curve of SL using (a) CFSSE-GES, (b) CLSE-RDS, (c) FSE-RKS, and (d) LSA-RAS selected attribute and feature subsets

Figure 4: Classification error curve of SMO using (a) CFSSE-GES, (b) CLSE-RDS, (c) FSE-RKS, and (d) LSA-RAS selected attribute and feature subsets

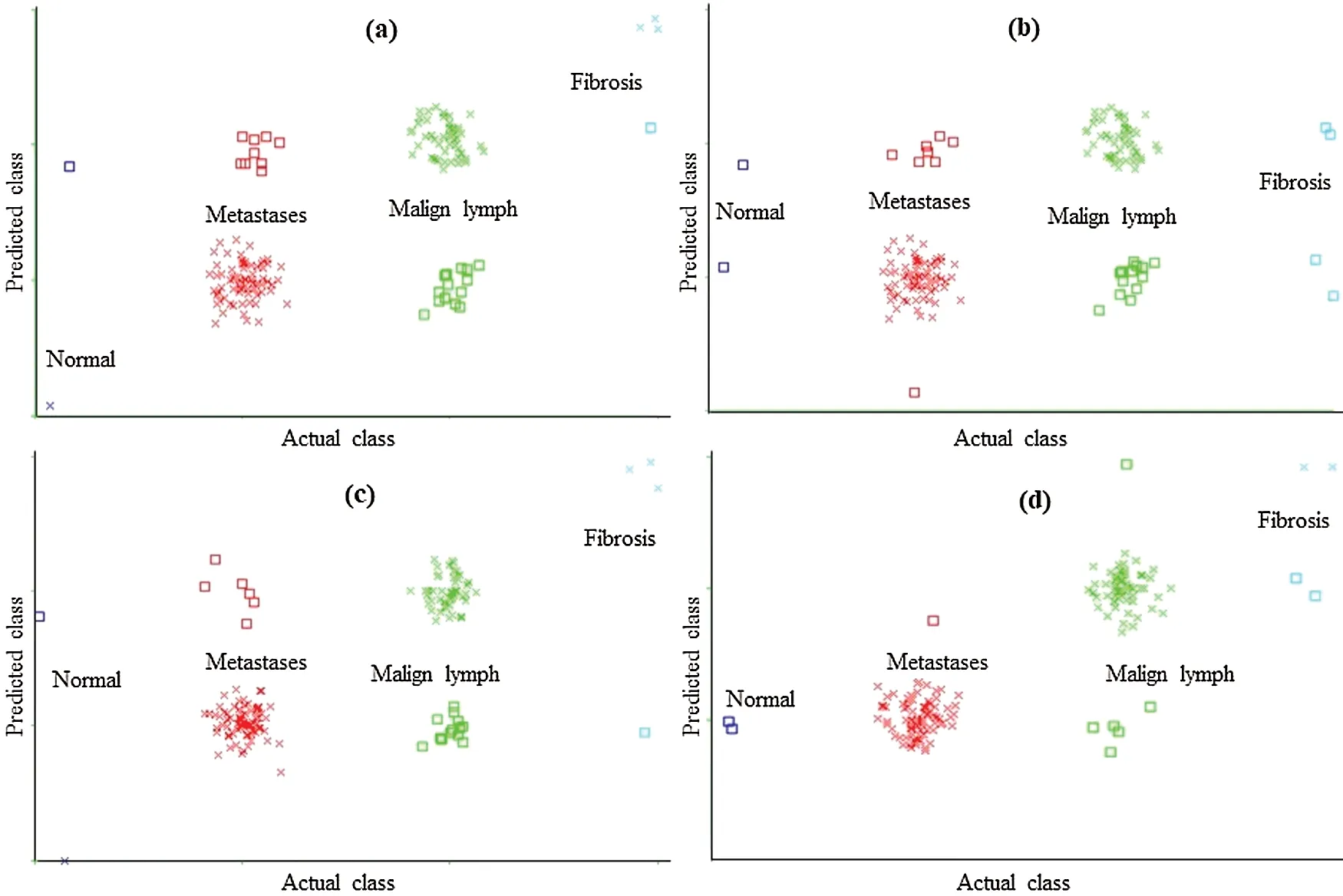

The CLSE-RDS selected attribute subset results in the maximum error of the SMO (Fig. 4a). The error curve of the FT classification method using the CFSSE-GES, CLSE-RDS, and FSE-RKS selected attribute subsets and the LSA-RAS selected feature subset is presented in Fig. 5. The FT classification method identifies 138 out of 148 instances of lymph disease correctly (confusion matrix in Tab. 7). Its largest number of correctly identified instances is in the malign lymph class (59 out of 61, accuracy of 96.72%). The LSA-RAS selected feature subset results in the minimum classification error (10) of the FT classifier compared with the other three attribute subsets (Fig. 5d), which agrees with the confusion matrix of the FT in Tab. 7. CFSSE-GES and FSE-BF have the maximum, and similar, errors (Figs. 5a and 5c). Fig. 6 presents the error curve of the LMT classification method. The error curve in Fig. 6d shows that 139 out of 148 instances are correctly identified by the LMT method using the LSA-RAS selected feature subset, which agrees with the confusion matrix of the LMT method in Tab. 7. Its largest number of correctly identified instances relative to class size is in the malign lymph class (60 out of 61, accuracy of 98.36%). The CFSSE-GES selected attribute subset results in the maximum error of the LMT (Fig. 6a). The LSA-RAS selected feature subset improves the area under the ROC curve of the classification methods compared with any other selected attribute subset, the other feature subset, and the original attributes (Tab. 6). Furthermore, the maximum average area under the ROC curve was achieved by the LMT using the LSA-RAS selected feature subset.

Figure 5: Classification error curve of FT using (a) CFSSE-GES, (b) CLSE-RDS, (c) FSE-RKS, and (d) LSA-RAS selected attribute and feature subsets

Figure 6: Classification error curve of LMT using (a) CFSSE-GES, (b) CLSE-RDS, (c) FSE-RKS, and (d) LSA-RAS selected attribute and feature subsets

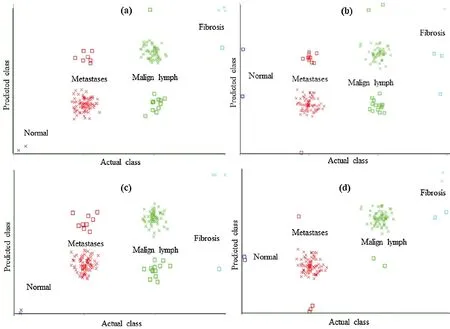

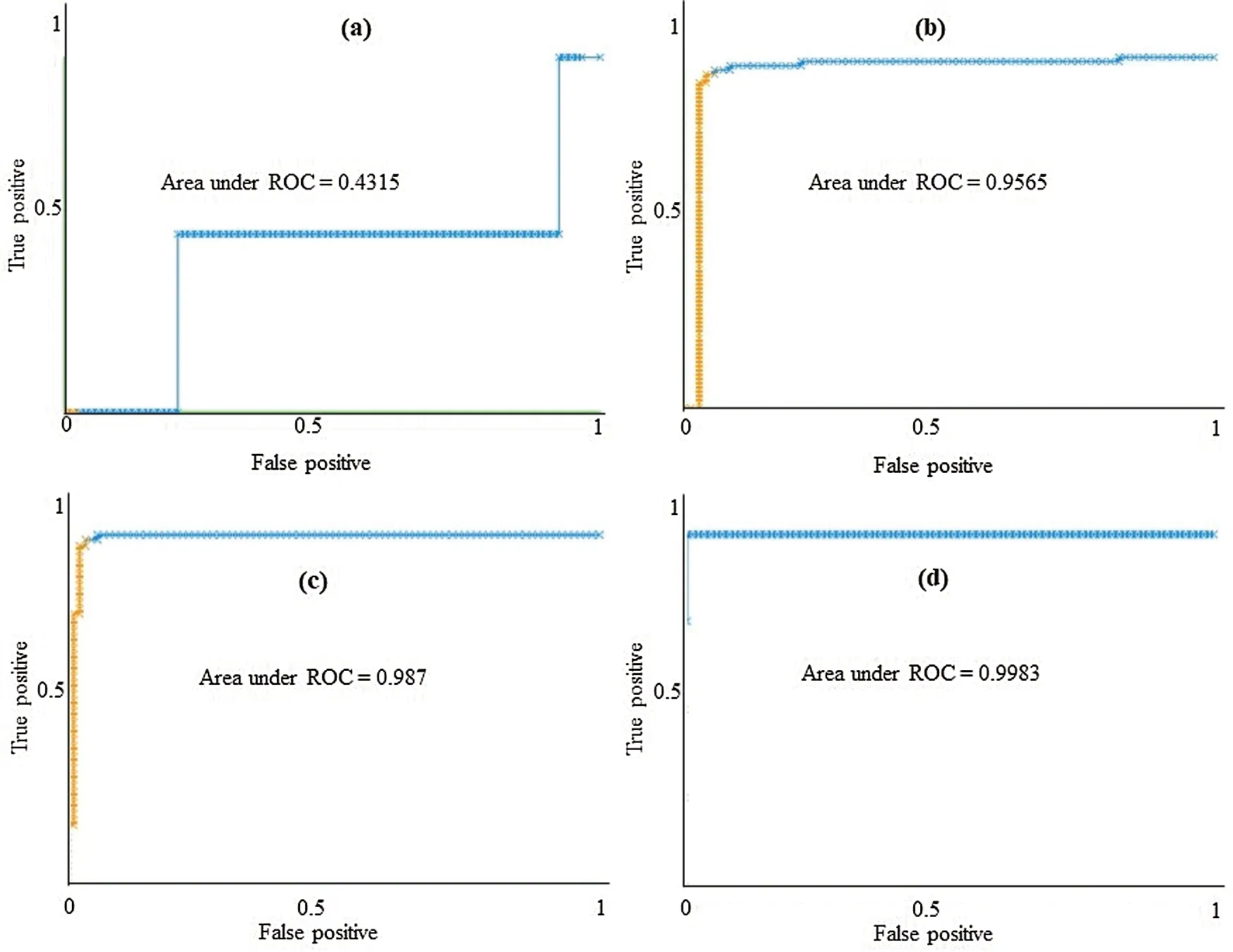

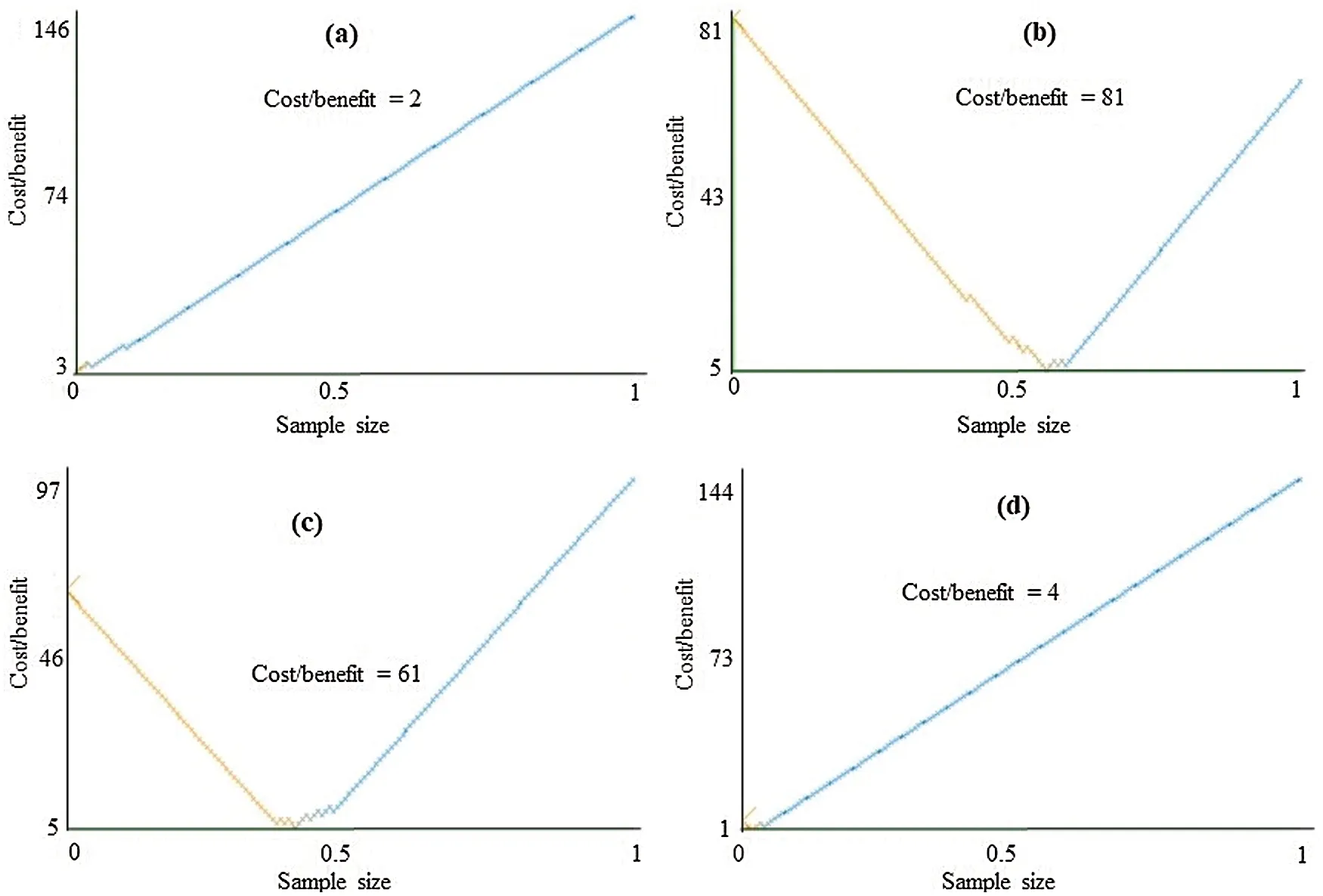

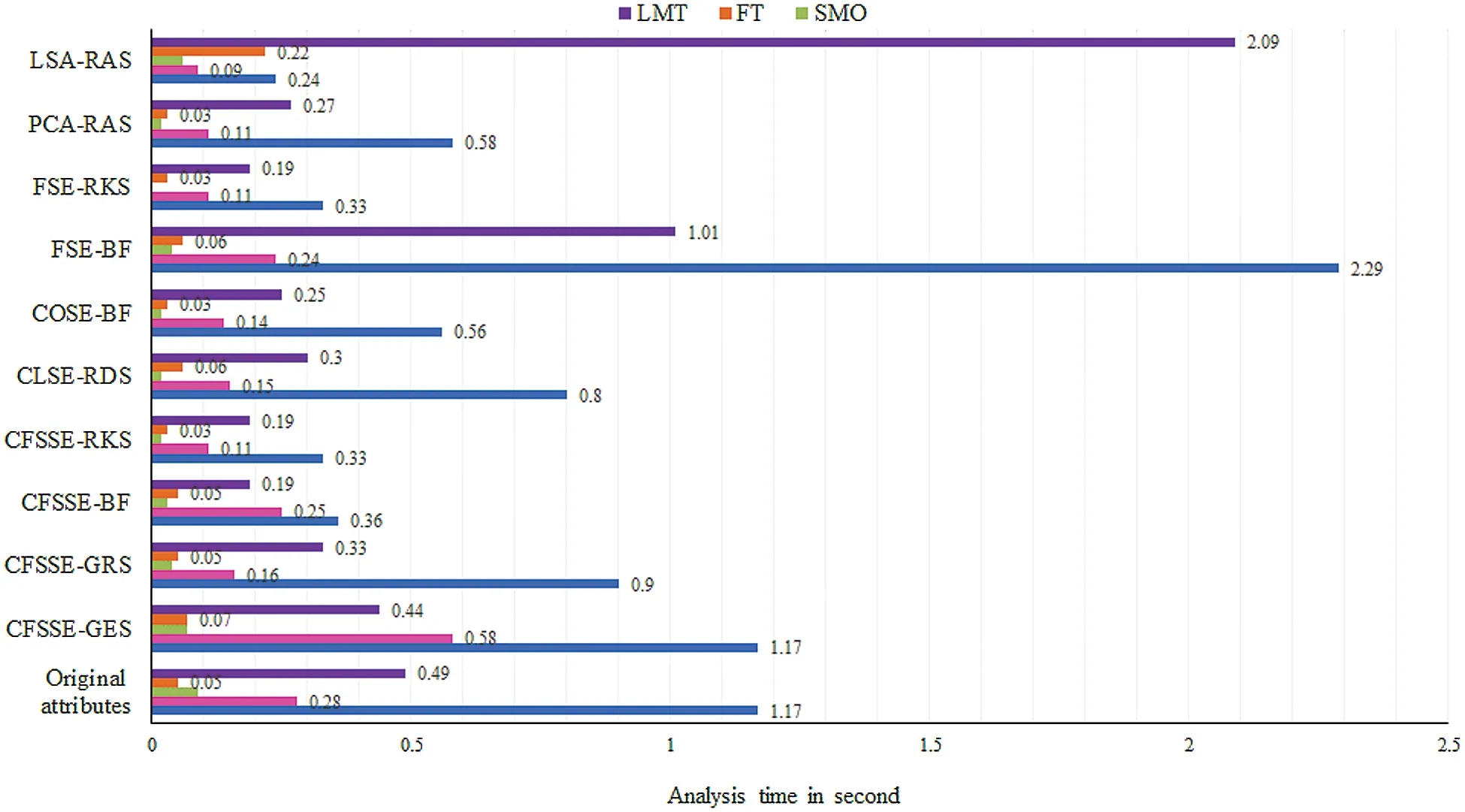

The class-wise area under the ROC curve of LMT using the LSA-RAS selected feature subset is shown in Fig. 7. The three positive classes (metastases, malign lymph, and fibrosis) of the lymph disease have an area under the ROC curve ≥ 0.96. The minimum ROC area (0.43) was obtained for the normal class, while the fibrosis class has the maximum ROC area (0.998). Fig. 8 presents the cost/benefit curve of LMT using the LSA-RAS selected feature subset for the normal class and the three disease classes. The cost/benefit curve shows the error rate on the Y-axis and the probability of belonging to the positive class on the X-axis. The normal and fibrosis classes have higher errors (100% and 50%, respectively); consequently, their cost curves have a positive slope. The remaining two classes, metastases and malign lymph, have a lower error rate. The analysis times of the classification methods using the original attributes, selected attributes, and selected features are presented in Fig. 9. The MLP classification method has the maximum analysis time of 2.29 s using the FSE-BF selected attribute subset, and the SMO method has the minimum analysis time of 0.01 s using the FSE-RKS selected attribute subset. Moreover, across the original attributes, selected attributes, and selected features, the MLP has the maximum average analysis time of 0.793 s and the SMO has the minimum average analysis time of 0.037 s.

Figure 7: The area under the ROC curve of LMT using LSA-RAS selected feature subset for (a) normal, (b) metastases, (c) malign lymph, and (d) fibrosis classes of lymph diseases

Figure 8: Cost/Benefit curve of LMT using LSA-RAS selected feature subset for (a) normal, (b) metastases, (c) malign lymph, and (d) fibrosis classes of lymph diseases

Figure 9: Analysis time of classification methods using original attributes and selected attributes, and selected and generated feature subsets

5 Discussions of Validation and Comparative Analysis Results

The PCA-RAS and LSA-RAS methods consider the contribution of all attributes in the generation and selection of an optimal subset of novel features. This is the reason for the better performance of the classification methods in lymph disease recognition using the PCA-RAS and LSA-RAS selected feature subsets than using the original attributes and the other selected attribute subsets. The better class recognition performance of the LSA-RAS selected features over the PCA-RAS selected features is due to the majority of nominal attributes (15 out of 18) in the lymph disease dataset.

The PCA minimizes the correlation and maximizes the variance of the three original numerical attributes, while the LSA effectively measures the textual coherence of most of the nominal attributes and generates novel latent variables that result in a significant improvement in the accuracy of the classification methods. The poor performance of the eight attribute selection methods (Tab. 3) in class recognition of the lymph diseases is due to the selection of only a few significant attributes of the original data. This may cause the loss of the class identity information carried by the discarded attributes, and hence the lower recognition performance of the classification methods using the selected attributes than using the original attributes and the PCA-RAS and LSA-RAS selected feature subsets. The analysis results in Figs. 2–8 and Tabs. 2–7 confirm the better performance of the tree-based than the function-based classification methods using the LSA-RAS selected feature subset. Specifically, the LMT achieved better recognition accuracy than the rest of the classification methods, and the performance of the FT is comparable to that of the MLP and better than that of the SL and SMO methods. The use of the efficient LSA-RAS selected feature subset is the reason for the improved recognition accuracy of each of the classification methods. The improved performance of classification methods in recognition of lymph disease in combination with feature selection methods is also discussed in some past studies summarized in Tab. 1, such as the performance improvement of RF using GA, PCA, and ReliefF, etc. (maximum accuracy of 92.2% using the GA selected feature subset) [17]; the maximum accuracy of 82.65% of the classification method using the rough set selected feature subset [20]; improved accuracy of the NB and C4.5 classification methods using information gain (IG), relief, and consistency-based subset evaluation (CNS), etc. (maximum accuracy of 83.24%) [21]; improved accuracy of the NB, MLP, and J48 classification methods using the IG, gain ratio, and symmetrical, etc. (maximum accuracy of 84.46%) [24]; and improved accuracy (84.94 ± 8.42%) of the NB method using the artificial immune system self-adaptive attribute weighting method [27], etc.

Neither the LSA nor the combination of the LSA with RAS has been implemented for lymph disease recognition in previously published research. However, the LSA method has been implemented in different applications [41–45], including topic modeling [41], remote sensing image fusion [42], patient-physician communication [43], essay evaluation [44], and psychosis prediction [45], etc. In the LSA, the semantic information is obtained by combining the likelihood of co-occurrence. Also, the latent variables attempt to link the nominal attributes of an instance to their respective class maximally, which improves the performance of the classification methods. The improved performance of the RAS method in feature selection is due to its ability to combine the entropy, gain-ratio, and relief criteria. The combination of these three criteria reduces the redundancy in the selected feature subset. Some of them have been used independently in the feature selection of the lymph dataset [17,21,24], such as reliefF (accuracy of 84.2%) [17], information gain and reliefF (accuracy of 82.63% and 81.47%) [21], and information gain, gain ratio, and reliefF (accuracy of 77.02%–80.40%) [24], etc. Among the three function-based classification methods, the MLP results in the maximum accuracy using the LSA-RAS selected feature subset. The tree-based LMT achieved the maximum recognition accuracy using the same feature subset. The best accuracy of a tree-based classification method and the improved accuracy of function-based classification methods are also confirmed in earlier studies [17,22,24–26], such as the best recognition accuracy of 92.2% of the random forest method [17], the maximum accuracy of 86.49% of the SMO and FT methods using the original attributes of the lymph dataset [22], the accuracy of 84.46% of MLP using chi-square selected and original attributes [24], the training accuracy of 85.47% of the hybrid radial basis function neural network [25], and the maximum accuracy of 83.51% of ensembles of decision trees [26], etc.
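To make the entropy-based ranking criteria mentioned above concrete, the following minimal sketch computes the information gain and the gain ratio of nominal attributes with respect to the class label and sorts the attributes by gain ratio. It illustrates only these two standard criteria under stated assumptions: it is not the RAS implementation used in this study, it omits the relief criterion, and the function names, attribute names, and toy data are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(values):
    """Shannon entropy (in bits) of a list of nominal values."""
    total = len(values)
    return -sum((c / total) * log2(c / total) for c in Counter(values).values())


def info_gain(attribute, labels):
    """Reduction in class entropy after splitting on a nominal attribute."""
    total = len(labels)
    remainder = 0.0
    for value in set(attribute):
        subset = [y for x, y in zip(attribute, labels) if x == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder


def gain_ratio(attribute, labels):
    """Information gain normalized by the entropy of the attribute itself."""
    split_info = entropy(attribute)
    if split_info == 0:  # attribute takes a single value: nothing to rank
        return 0.0
    return info_gain(attribute, labels) / split_info


def rank_attributes(columns, labels):
    """Return attribute names sorted by decreasing gain ratio."""
    scores = {name: gain_ratio(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical toy data: one attribute that separates the classes, one that does not.
toy_labels = ["metastases", "metastases", "malign", "malign"]
toy_columns = {
    "attr_noise": ["yes", "no", "yes", "no"],
    "attr_informative": ["low", "low", "high", "high"],
}
ranking = rank_attributes(toy_columns, toy_labels)
print(ranking)
```

Normalizing by the attribute entropy penalizes attributes with many distinct values, which is why the gain ratio is often preferred to the raw information gain when nominal attributes differ in cardinality.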

The better performance of the tree-based classification methods, LMT and FT, is due to the smaller number of adjustable parameters after using the significant subset of features selected by the LSA-RAS method, the reduced noise of the original attributes in the latent variables, and the negligible influence of noise, etc. The improved performance of the MLP method is due to the reduced uncertainty of the input and output when using the LSA-RAS selected feature subset. Among the feature selection methods implemented for the recognition of the lymph disease in previous studies [17,20,21,24,27], the best accuracy was achieved by the combination of the GA and the random forest classification method [17]. The proposed LSA-RAS-LMT approach in the present study achieved a higher recognition accuracy for the lymph disease than previously published reports. A significant improvement in the accuracy of the LMT (10.81%), SL (9.46%), and MLP (8.78%) has been achieved (Tab. 5). The analysis time of the LSA-RAS-LMT approach in the present analysis of the lymph dataset was 2.09 s (Fig. 9). It is in between the analysis times of 0.02 s–11.77 s reported in [20] and 0.0004 s–0.0051 s reported in [28] (Linux cluster node, Intel(R) Xeon(R) @ 3.33 GHz, and 3 GB memory). The area under the ROC curve of the LSA-RAS-LMT approach in the present analysis is equal to 0.97 (Tab. 6). It is higher than the area under the ROC curve of other approaches [17,19,23,27], such as 0.843–0.954 [17], 80.48 [19], 91.3757 ± 3.25–91.8005 ± 3.61 [23], and 92.99 ± 4.15–95.01 ± 4.87 [27]. The LSA-RAS-LMT method has the maximum value of the kappa coefficient (0.89) (Tab. 5) in the present analysis. It is also higher than the earlier achieved values of the kappa coefficient [17,18], such as 0.512–0.879 [17] and 0.500–0.629 [18].
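For reference, the kappa coefficient compared above measures the agreement between predicted and actual classes after correcting for the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement. The following minimal sketch computes it from two label lists. The function name `cohen_kappa` and the toy labels are illustrative assumptions and do not reproduce the values in Tab. 5.

```python
from collections import Counter


def cohen_kappa(actual, predicted):
    """Cohen's kappa: chance-corrected agreement between two label lists."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(actual)

    # Observed agreement: fraction of instances classified correctly.
    observed = sum(a == p for a, p in zip(actual, predicted)) / n

    # Chance agreement: product of the marginal class frequencies, summed over classes.
    actual_counts = Counter(actual)
    predicted_counts = Counter(predicted)
    expected = sum(actual_counts[c] * predicted_counts[c] for c in actual_counts) / (n * n)

    if expected == 1.0:  # only one class present in both lists
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical toy example (not predictions from the study).
toy_actual = ["metastases", "metastases", "metastases", "malign", "malign", "malign"]
toy_predicted = ["metastases", "metastases", "malign", "malign", "malign", "malign"]
toy_kappa = cohen_kappa(toy_actual, toy_predicted)
print(round(toy_kappa, 4))
```

In this toy case five of six instances agree (p_o = 5/6) while the chance agreement is p_e = 0.5, so kappa is lower than the raw accuracy; the same effect explains why kappa is reported alongside accuracy for imbalanced class distributions.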

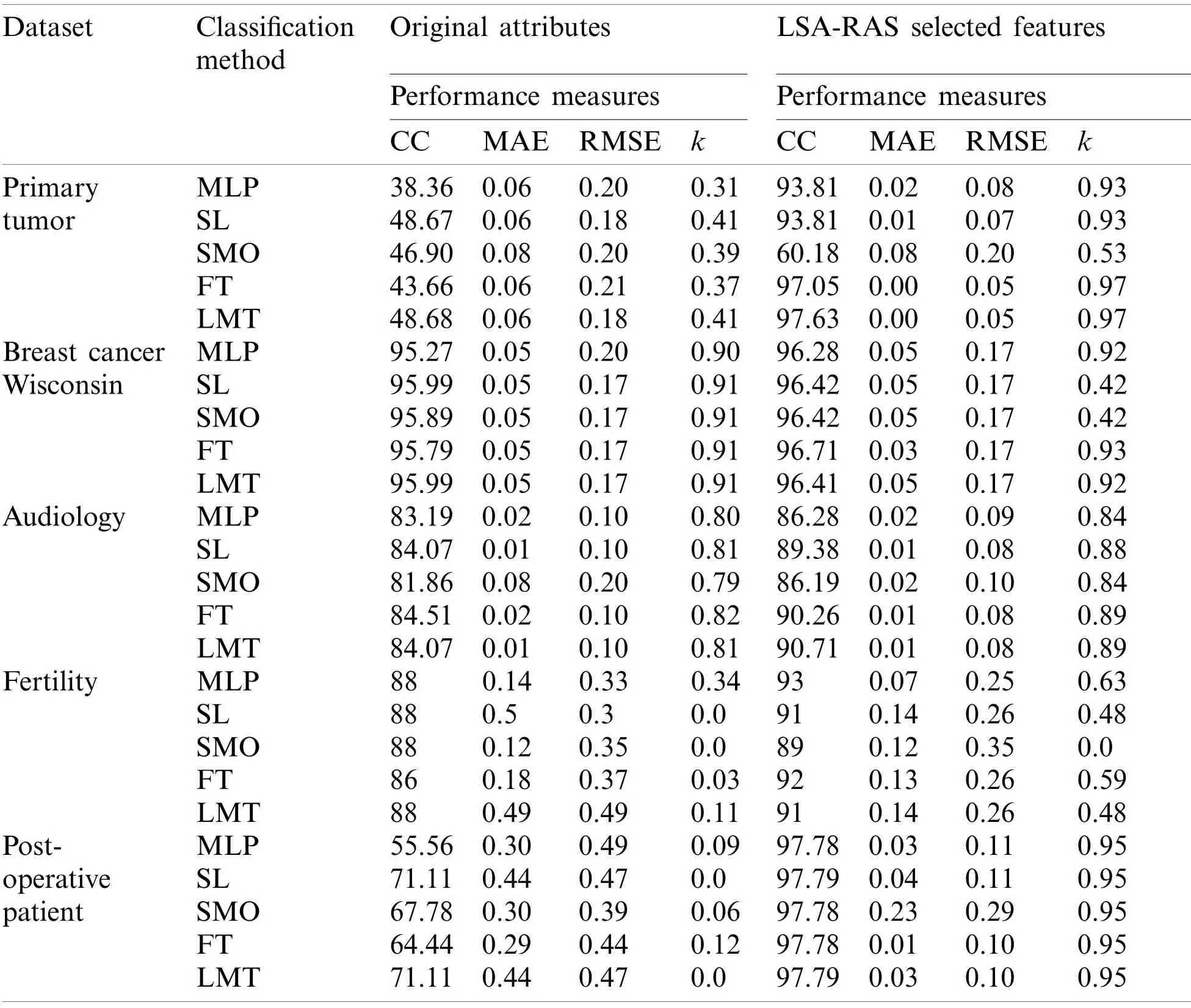

Moreover, the LSA-RAS approach has been validated in the recognition of other benchmark disease datasets (primary tumor, breast cancer, audiology, fertility, and post-operative patient) [32]. The performance of the classification approaches is summarized in Tab. 8. The LSA-selected feature subset results in improved accuracy of each of the classification methods compared with the original attributes. Specifically, a major improvement in the accuracy of MLP in the primary tumor (55.45%), SL and SMO in the post-operative patient (26.61%), and FT (53.39%) and LMT (48.95%) in the primary tumor has been observed. The LMT classifier has an improved recognition performance in the analysis of most of the disease datasets. Deep neural networks such as convolutional and recurrent neural networks have been used successfully in past studies, mainly in the preprocessing and classification of image, text, and continuous data [11,12,29–31]. However, the lymph and other disease datasets selected in the present study contain discrete values of numeric and nominal attributes; therefore, the direct implementation of deep neural networks and their comparison with the proposed approach is not feasible. Nevertheless, this possibility needs to be explored in future research.

Table 8: Performance of the classification methods in recognition of other diseases

6 Conclusions and Research Scope

In the present study, a competent feature generation and selection method (LSA-RAS) for lymph disease recognition has been implemented and validated. The LSA-RAS method results in improved accuracy of different classification methods. The tree-based methods achieved better performance than the function-based classification methods using the LSA-RAS selected feature subset. Furthermore, the hybrid approach (LSA-RAS-LMT), which combines feature generation and selection with a classification method, achieved the maximum recognition accuracy and improved values of other evaluation metrics compared with other approaches available in the published literature. The LSA-RAS-LMT approach is efficient in the recognition of the lymph disease and analogous disease datasets. Future research will focus on further improvement in the accuracy of the classification methods for lymph disease recognition.

Acknowledgement: This work is supported by the Startup Foundation for Introducing Talent of NUIST. The authors acknowledge the anonymous reviewers for their valued comments and suggestions.

Funding Statement: This work is supported by the Startup Foundation for Introducing Talent of NUIST, Project No. 2243141701103.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

Computer Modeling in Engineering & Sciences, 2021, Issue 11
