An evaluation of moving shadow detection techniques

Mosin Russell, Ju Jia Zou, and Gu Fang

© The Author(s) 2016. This article is published with open access at Springerlink.com

DOI 10.1007/s41095-016-0058-0 Vol. 2, No. 3, September 2016, 195–217

Shadows of moving objects may cause serious problems in many computer vision applications, including object tracking and object recognition. In common object detection systems, shadows have characteristics similar to those of the objects that cast them, so they can easily be misclassified as either part of a moving object or an independent moving object. Various methods have been introduced to deal with the problem of misclassifying shadows as foreground. This paper addresses the main problematic situations associated with shadows and provides a comprehensive performance comparison of up-to-date methods that have been proposed to tackle these problems. The evaluation is carried out using benchmark datasets that have been selected and modified to suit the purpose. This survey also suggests how to select shadow detection methods for different scenarios.

Keywords: moving shadow detection; problematic situations; shadow features; shadow detection methods

1 Introduction

Shadows play an important role in our understanding of the world and provide rich visual information about the properties of objects, scenes, and lights. The human vision system is capable of recognizing and extracting shadows from complex scenes and uses shadow information to automatically perform various tasks, such as perceiving the position, size, and shape of objects, and understanding the structure of the 3D scene geometry and the location and intensity of the light sources. For the past decades, researchers in computer vision and related fields have been trying to find a mechanism for machines to mimic the human vision system in handling visual data and performing the associated tasks. However, the problem is far from solved, and all these tasks remain challenging.

Shadows are involved in many low-level computer vision applications and image processing tasks such as shadow detection, removal, extraction, correction, and mapping. In many video applications, shadows need to be detected and removed for the purpose of object tracking [1], classification [2, 3], size and position estimation [4], behaviour recognition [5], and structural health monitoring [6]. In still image processing, shadow feature extraction is applied to obtain features that are useful in object shape estimation [7], 3D object extraction [8], building detection [9], illumination estimation [10], illumination direction estimation [11], and camera parameter estimation [12]. Shadow detection and correction (i.e., shadow compensation or de-shadowing) involve complex image processing techniques to produce a shadow-free image, which can be useful in many applications including reconstruction of surfaces [13], illumination correction [14], and face detection and recognition [15]. In contrast to shadow detection, some applications, such as rendering soft shadows for 3D objects [16] and creating shadow mattes in cel animation [17], require rendering shadows to add more spatial detail within and among objects and to produce images with a natural, realistic look. Shadow detection and mapping also need to be considered in some recent image processing applications such as PatchNet [18], timeline editing [19], and many other visual media processing applications [20]. Examples of these applications are shown in Fig. 1.

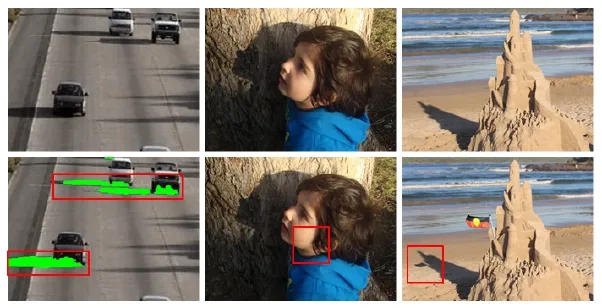

Fig. 1 Examples of shadow applications: (first column) detection of moving shadows in a frame of the sequence Hwy I [21] using the method reported in Ref. [22] (shadows are highlighted in green), (second column) manual detection and correction of shadows for a still image, and (third column) manual detection and mapping of shadows for an outdoor still image.

Compared with detecting moving shadows in a video, detecting still shadows in a single image is more difficult because less information is available in a single image than in a video. In both cases, various features (such as intensities, colors, edges, gradients, and textures) are used to identify shadow points. In moving shadow detection, these features from the current frame are compared with those of the corresponding background image to determine whether they are similar. In still image shadow detection, these features are often used along with other geometric features (such as locations of light sources and object shapes) to detect shadows.

In this paper, we focus on shadow detection and removal in image sequences (also called moving shadow detection) and introduce a novel taxonomy that categorizes up-to-date moving shadow detection methods based on various criteria, including the properties of moving objects and their cast shadows, the image features used, and the spatial level used for analysis and classification. The major contributions of this paper are listed below:

• The main problematic situations associated with shadows are addressed, and common datasets are organized and classified based on these problematic situations.

• A unique way to analyse and classify most key papers published in the literature is introduced.

• A quantitative performance comparison among classes of methods is provided in terms of shadow detection rate and discrimination rate.

• Since the last survey on shadow detection methods by Sanin et al. [23], more than 140 papers that need to be evaluated have been published. This survey focuses on the most recent methods.

The rest of the paper is organized as follows. Section 2 discusses the general concept of shadow modelling and possible problematic situations present in real-world scenes. Details of the datasets used for comparison are given in Section 3. Quantitative metrics used for performance evaluation are discussed in Section 4. Section 5 discusses the existing moving shadow detection methods in detail, followed by their performance evaluation, with advantages and disadvantages, in Section 6. Section 7 concludes the paper.

2 Understanding shadow

Understanding the physical formation of shadows is essential to solving many problems in the applications mentioned in the previous section. At the beginning of this section, the basic ideas of shadow formation and modelling are discussed. The main properties and assumptions for moving shadows are summarised in Section 2.2. In Section 2.3, various problematic situations for moving shadow detection are discussed.

2.1 Cast shadow model

Shadows are considered a problem of local or regional illumination changes. In other words, when an object is placed between the light source and the background surface, it prevents the light from reaching the region(s) adjacent to the foreground object, causing a change in illumination in that region. Due to multi-lighting effects, changes in illumination (with respect to the background) are more significant in the centre regions of the shadow (areas linked to the self-shadow of the foreground object) than at its outer boundaries. Accordingly, shadows can be further classified into two regions, namely umbra and penumbra. The umbra is the darker region of the cast shadow, where the direct (dominant) light is totally blocked and only the ambient lights illuminate the region. The penumbra is the lighter region, where the dominant light is only partially blocked, so both light sources (dominant and ambient) illuminate the area. An example of cast shadow analysis is illustrated in Fig. 2.

These parts of the detected foreground mask can be analysed in terms of the illumination of the light sources and the surface reflectance of these regions. Using the Kubelka–Munk theory [24], the intensity $S_t(p)$ of each pixel in an image plane obtained by a camera can be expressed as

$$S_t(p) = i_t(p)\, r_t(p) \tag{1}$$

where $0 < i_t(p) < \infty$ is the irradiance term, which indicates the amount of source illumination received by point $p$ on the surface $S$ at time $t$, and $0 < r_t(p) < 1$ is a coefficient measuring the amount of that illumination reflected by the same point.

Fig. 2 Shadow model.

The coefficient $0 < T_D < 1$ measures the amount of light energy available from the dominant light at time $t$; it represents the global change in illumination of the dominant light source over time. $T(p)$ determines the amount of the available dominant-light energy received by point $p$ at time $t$; it represents the local change in illumination at point $p$ over time. Theoretically, point $p$ belongs to a cast shadow when $T(p) < 1$. Furthermore, point $p$ is considered to belong to the penumbra region of the cast shadow when $0 < T(p) < 1$, or to the umbra region when $T(p) = 0$.
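The relations above can be illustrated with a minimal Python sketch. It assumes only Eq. (1) and the labelling rule for $T(p)$ stated in this section; the function names, the assumed range [0, 1] for $T(p)$, and the numeric values are hypothetical, and the relation linking $T_D$ and $T(p)$ to the irradiance is not modelled.

```python
def pixel_intensity(irradiance: float, reflectance: float) -> float:
    """Eq. (1): observed intensity S_t(p) = i_t(p) * r_t(p)."""
    if irradiance <= 0:
        raise ValueError("irradiance i_t(p) must be positive")
    if not 0 < reflectance < 1:
        raise ValueError("reflectance r_t(p) must lie in (0, 1)")
    return irradiance * reflectance


def shadow_region(t_p: float) -> str:
    """Label point p from the local dominant-light coefficient T(p)."""
    if not 0 <= t_p <= 1:
        raise ValueError("T(p) is assumed to lie in [0, 1]")
    if t_p == 1:
        return "lit"       # dominant light fully received
    if t_p > 0:
        return "penumbra"  # dominant light partially blocked
    return "umbra"         # T(p) == 0: dominant light totally blocked


# Same surface (same reflectance) before and during a shadow:
# only the irradiance drops, so the observed intensity drops too.
lit = pixel_intensity(200.0, 0.5)
shaded = pixel_intensity(80.0, 0.5)
print(lit, shaded)                                   # 100.0 40.0
print([shadow_region(t) for t in (1.0, 0.4, 0.0)])   # ['lit', 'penumbra', 'umbra']
```

Because the reflectance term is held fixed, the drop in intensity comes entirely from the irradiance term, which is the basis of the intensity-reduction and reflectance-constancy properties listed next.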

2.2 Properties of moving shadows

There are many properties associated with shadows; however, only those directly related to detecting cast shadows in images are mentioned here. Each method proposed in the literature relies on at least one of the following properties to detect shadows:

• Intensity reduction: The intensity of a background point is reduced when a shadow occurs, because the irradiance term $i_t(p)$ in Eq. (1) receives less light from the dominant light source. The strength of the ambient light sources around the object determines how much darker the point becomes.

• Linear attenuation: When the spectral power distributions (SPDs) of the dominant and the ambient light sources are similar, the background color is maintained when a shadow occurs. In other words, linear attenuation exists for the three color channels (R, G, B), i.e., all three channels of a shadowed point are attenuated by the same factor with respect to the background.

• Non-linear attenuation: When the SPDs of the dominant and the ambient light sources are different, the background colors change when a shadow occurs, depending on the color of the ambient light.

• Reflectance-constancy: Textures and patterns of background regions do not change over time, i.e., the object reflectance term $r_t(p)$ in Eq. (1) does not change when a shadow occurs.

• Size-property: The size of the shadow mainly depends on the direction of illumination, the size of the moving object, and the number of available light sources.

• Shape-property: The shape of the shadow depends on the shape of the object that casts it and on the direction of illumination.

• Shadow direction: For a single point light source, there is only one shadow direction. However, multiple shadow directions occur if there is more than one light source in the scene.

• Motion attitude: Shadows follow the same motion as the objects that cast them.
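As a minimal sketch, the intensity-reduction and linear-attenuation properties can be checked per pixel by comparing the frame and background RGB values channel by channel. The function name and the tolerance `max_spread` are hypothetical choices, not taken from any specific method reviewed here.

```python
def is_achromatic_shadow_candidate(frame_rgb, background_rgb, max_spread=0.05):
    """Check intensity reduction and linear attenuation for one pixel.

    frame_rgb, background_rgb: (R, G, B) tuples of the same pixel in the
    current frame and in the background image.
    max_spread: hypothetical tolerance on how much the per-channel
    attenuation ratios may differ from each other.
    """
    if any(b <= 0 for b in background_rgb):
        return False  # ratio undefined for black background channels
    ratios = [f / b for f, b in zip(frame_rgb, background_rgb)]
    darker = all(r < 1.0 for r in ratios)              # intensity reduction
    uniform = max(ratios) - min(ratios) <= max_spread  # linear attenuation
    return darker and uniform


background = (120, 160, 200)
print(is_achromatic_shadow_candidate((60, 80, 100), background))    # True: every channel halved
print(is_achromatic_shadow_candidate((60, 80, 150), background))    # False: blue attenuated less
print(is_achromatic_shadow_candidate((130, 170, 210), background))  # False: brighter
```

A pixel rejected only by the uniformity test may still be a shadow under non-linear attenuation (a chromatic shadow), which is why such a test alone is not sufficient in every scene.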

2.3 Problematic situations

In the following, the most common problematic cases for moving cast shadows are addressed. These problematic cases can be defined based on two factors: (i) the color and intensity of the lights that illuminate the shadow and non-shadow regions, and (ii) the reflectance properties of the foreground regions, the cast shadow, and the corresponding background regions. A foreground region is a region of the current frame belonging to a moving object. A background region is a region of the background image without any moving object. Each case is elaborated below.

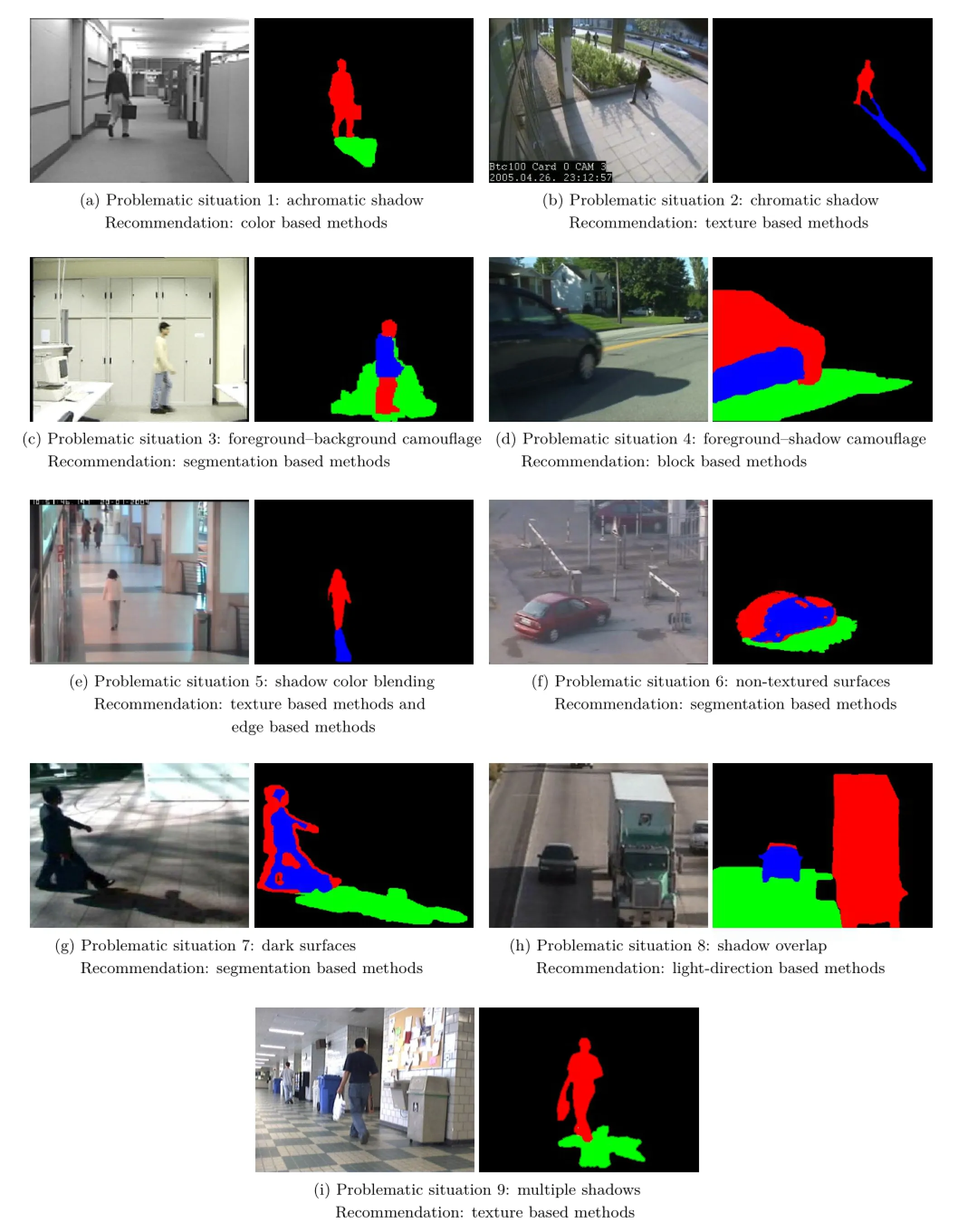

• Achromatic shadow: The SPDs of the two light sources, the dominant light and the ambient light, are similar for both penumbra and umbra.

• Chromatic shadow: Some parts of the cast shadow are illuminated by light of a different color from that of the dominant light.

• Foreground–background camouflage: Some parts of the foreground region have a similar appearance in color or texture to the corresponding background.

• Foreground–shadow camouflage: When the dominant light is strong and the object surface has high luminance (such as metal), shadow points are reflected back by the self-shadow parts of the foreground object. As a result, the boundary between the foreground object and its cast shadow is not clear.

• Shadow color blending: When the reflectance of the background surface is high, light from some parts of the foreground object is bounced off the background, causing color blending in the background.

• Non-textured surfaces: Some internal parts of the foreground object or the background surface are non-textured or flat.

• Dark surfaces: Some parts of the foreground or background are dark due to low reflectance.

• Shadow overlap: An object is partially or fully covered by the shadow cast by a nearby object.

• Multiple shadow: When two or more light sources are present in the scene, objects are likely to cast more than one shadow.

3 Datasets

Many datasets for detecting cast shadows of foreground objects have been developed. Based on the environments in which the scenes were captured, datasets can be categorised into two types: outdoor and indoor. Compared to indoor scenes, detecting moving cast shadows is relatively more difficult in outdoor scenes, where many issues must be considered, such as noisy backgrounds, illumination changes, and the effects of colored lights (e.g., diffused light reflected from the sky).

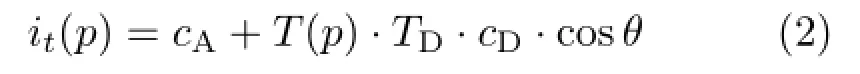

Table 1 summarises various publicly available datasets for indoor and outdoor situations. Some of these datasets have been modified to represent at least one problematic situation in moving cast shadows. The first sequence, Campus (D1), shows an outdoor environment where the shadows are relatively large and their strength is weak due to multi-light effects. Sequence Hwy I (D2) represents a case of shadow overlap in heavy traffic, with strong and large cast shadows. Sequence Hwy III (D3) is another traffic scene with different shadow strengths, sizes, and characteristics, and different problematic situations. Laboratory (D4) is a typical indoor environment in which strong camouflage exists between parts of the walking person and the background. Intelligent Room (D5) is a good example of an indoor scene with dark surfaces in the foreground and background images. The Corridor sequence (D6) shows the problem of shadow color blending caused by strong background reflection.

Table 1 Details of datasets D1–D12 (for each sequence: name, sample frame, scene type (indoor/outdoor), class (vehicle/people), frame size, length (frames), shadow strength, shadow size, and which of the problematic situations of Section 2.3 it contains)

Hwy II (D7) represents traffic scenes captured in strong daylight, where shadow camouflage exists. Seam (D8) shows another outdoor environment where the shadows of people are strong. The Bungalows sequence (D9) shows a traffic scene captured with a narrow-angle camera and is a good example of foreground–shadow camouflage. PeopleInShade (D10) is a challenging sequence for many reasons, including non-textured and dark surfaces, foreground–background camouflage, and foreground–shadow camouflage. The last two sequences, Hall Monitor (D11) and Hallway (D12), represent two indoor surveillance cases where the problems of dark surfaces and multiple shadows exist.

Change detection masks (CDMs) along with ground truth images for sequences Campus, Hwy I, Hwy III, Laboratory, Intelligent Room, Corridor, and Hallway are available in Ref. [21], for sequences Bungalows and PeopleInShade in Ref. [26], and for sequences Hwy II and Seam in Ref. [27] and Ref. [28], respectively.

4 Quantitative metrics

Two quantitative metrics, the shadow detection rate (η) and the shadow discrimination rate (ξ), are widely used to evaluate the performance of shadow detection methods:

$$\eta = \frac{TP_S}{TP_S + FN_S}, \qquad \xi = \frac{TP_F}{TP_F + FN_F}$$

where $TP_S$, $FN_S$, $TP_F$, and $FN_F$ are the shadow's true positives (shadow pixels correctly classified as shadow points), the shadow's false negatives (shadow pixels incorrectly classified as foreground object points), the object's true positives (pixels belonging to foreground objects that are correctly classified as foreground object points), and the object's false negatives (foreground object pixels incorrectly classified as shadow points), respectively. The shadow detection rate (η) is the proportion of shadow pixels successfully identified as shadows ($TP_S$) out of the total number of shadow pixels ($TP_S + FN_S$). A good detection rate requires most pixels in this region to be detected as shadows. On the other hand, the shadow discrimination rate (ξ) focuses on the area occupied by the foreground object. It measures the ratio of the number of correctly detected foreground object points ($TP_F$) to the total number of ground truth points belonging to the foreground object ($TP_F + FN_F$). Similarly, it is preferable to correctly detect almost all the foreground pixels in this region.
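Both rates can be computed directly from per-pixel ground-truth and predicted labels. The sketch below is a minimal illustration that assumes a hypothetical one-character label encoding; pixels predicted as background fall under none of the four counts defined above and are therefore ignored, and a sequence with no shadow (or no foreground) pixels would make the corresponding denominator zero.

```python
def confusion_counts(truth, predicted):
    """Count TP_S, FN_S, TP_F, FN_F from per-pixel labels.

    Labels (hypothetical encoding): 'S' = shadow, 'F' = foreground object,
    anything else = background. A pixel contributes only if it is labelled
    S or F in both sequences, matching the definitions of eta and xi.
    """
    tp_s = fn_s = tp_f = fn_f = 0
    for t, p in zip(truth, predicted):
        if t == "S" and p == "S":
            tp_s += 1
        elif t == "S" and p == "F":
            fn_s += 1
        elif t == "F" and p == "F":
            tp_f += 1
        elif t == "F" and p == "S":
            fn_f += 1
    return tp_s, fn_s, tp_f, fn_f


def shadow_rates(tp_s, fn_s, tp_f, fn_f):
    """Return (eta, xi): shadow detection rate and shadow discrimination rate."""
    eta = tp_s / (tp_s + fn_s)
    xi = tp_f / (tp_f + fn_f)
    return eta, xi


truth = "SSSSFFFF"
predicted = "SSSFFFSS"
counts = confusion_counts(truth, predicted)
print(counts)                 # (3, 1, 2, 2)
print(shadow_rates(*counts))  # (0.75, 0.5)
```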

5 Review of existing methods

Many shadow detection and removal algorithms have been proposed in the literature, in which different techniques are used to separate the foreground object from its shadow. Prati et al. [29] provided a survey on shadow detection methods that is mainly based on the type of algorithm used. They organised the contributions reported in the literature into four main classes, namely parametric, non-parametric, model based, and non-model based. Al-Najdawi et al. [30] proposed a four-layer taxonomy that is complementary to that in Ref. [29]. Their survey is mainly based on object/environment dependency and the implementation domain of the algorithms. Sanin et al. [23] stated that the selection of features has a greater influence on the shadow detection results than the selection of algorithms. Thus, they classified shadow detection methods into four main categories: chromaticity based, geometry based, physical properties based, and texture based methods.

Fig. 3 Classification of moving shadow detection.

This paper introduces a different, systematic way of classifying existing shadow detection algorithms, mainly based on the type of properties used for classification. Since the properties of the two main components in the change detection mask, the moving object and the cast shadow, play important roles in separating them, the existing methods can accordingly be divided into two main categories (see Fig. 3): object shape-property based and shadow-property based methods. Depending on the type of main feature used, shadow-property based methods can be further subdivided into two groups: light-direction based and image-feature based methods. Light-direction based methods work on the geometric formation of cast shadows and the light source to find useful geometric features, such as the location and direction of the light source and the location of the cast shadow in the background. Meanwhile, image-feature based methods work directly on analysing 2D images and extract color and texture independently of the scene type, object type, or other geometric features. Because the vast majority of work belongs to this category, image-feature based methods are further subdivided, based on the spatial features used in their final classification, into pixel based and region based methods. In pixel based methods, regardless of the type of image features (such as color, edge, and texture) used in the analysis stage, the final classification is made on individual pixels. Due to the importance of these three features, color based, edge based, and texture based methods are studied separately. On the other hand, region based methods effectively take advantage of contextual information and accordingly segment the image into regions. They can be further subdivided into segmentation based methods and block based methods.

Compared with other classifications, the proposed classification provides a better grasp of existing shadow detection methods by taking more features into account to cover more papers in the literature, and by analysing and evaluating the methods under all major problematic situations in shadow detection. In the following, shape based, light-direction based, color based, edge based, texture based, segmentation based, and block based methods are discussed in detail.

5.1 Shape based methods

Shape based methods use the properties of the foreground objects, such as shape and size, to detect their cast shadows. They model the foreground object using various geometric features, which can be either obtained from a priori knowledge about the foreground object or extracted from the input images without depending on the background reference. They are mainly designed to detect shadows cast by a specific type of foreground object, such as humans [31] or vehicles [32]. Typical shape based methods are summarised in Table 2.

Hsieh et al. [33] proposed a coarse-to-fine Gaussian shadow algorithm to eliminate shadows of pedestrians.Several geometric features are utilized in their model,including the object orientation, centre position of the shadow region,and the intensity mean.

Yoneyama et al. [32] used vehicle–shadow orientations to distinguish shadows from moving vehicles. The method is based on a joint 2D vehicle/shadow model projected onto the 2D image plane. They explicitly divided the 2D vehicle/shadow model into six types, each corresponding to one location of the shadow in the foreground mask. These geometric properties are estimated from the input frames without a priori knowledge of the light source or camera calibration information.

Bi et al. [34] introduced a shadow detection method based on the geometric properties of the human body and its approximate location. In the first step, the human body shape property is analysed and used to determine the location of the cast shadow. In the second step, an image orientation information measure is used to divide the image pixels into two regions, namely smooth and edge regions. The two measurements, shape analysis and the ratio of pixels, are then fused in the final classification.

Fang et al. [35] exploited spectral and geometric properties to detect shadows in video sequences. In the first stage, candidate shadow points are segmented using the spectral properties of shadows. Feature points of the occluding function are then detected using a wavelet transform. In the last stage, the occluding line formed from the feature points is detected to separate objects from their shadows.

Table 2 Summary of shape based methods for shadow detection

Chen et al. [31] proposed a three-stage algorithm to detect cast shadows of pedestrians in an upright posture. In the first stage, a support vector machine (SVM) classifier is trained and applied to the foreground mask to compute possible shadow points. A linear classifier is then adopted to divide the foreground mask into human and shadow subregions. In the last stage, the shadow region is reconstructed with the aid of the background image.

5.2 Light-direction based methods

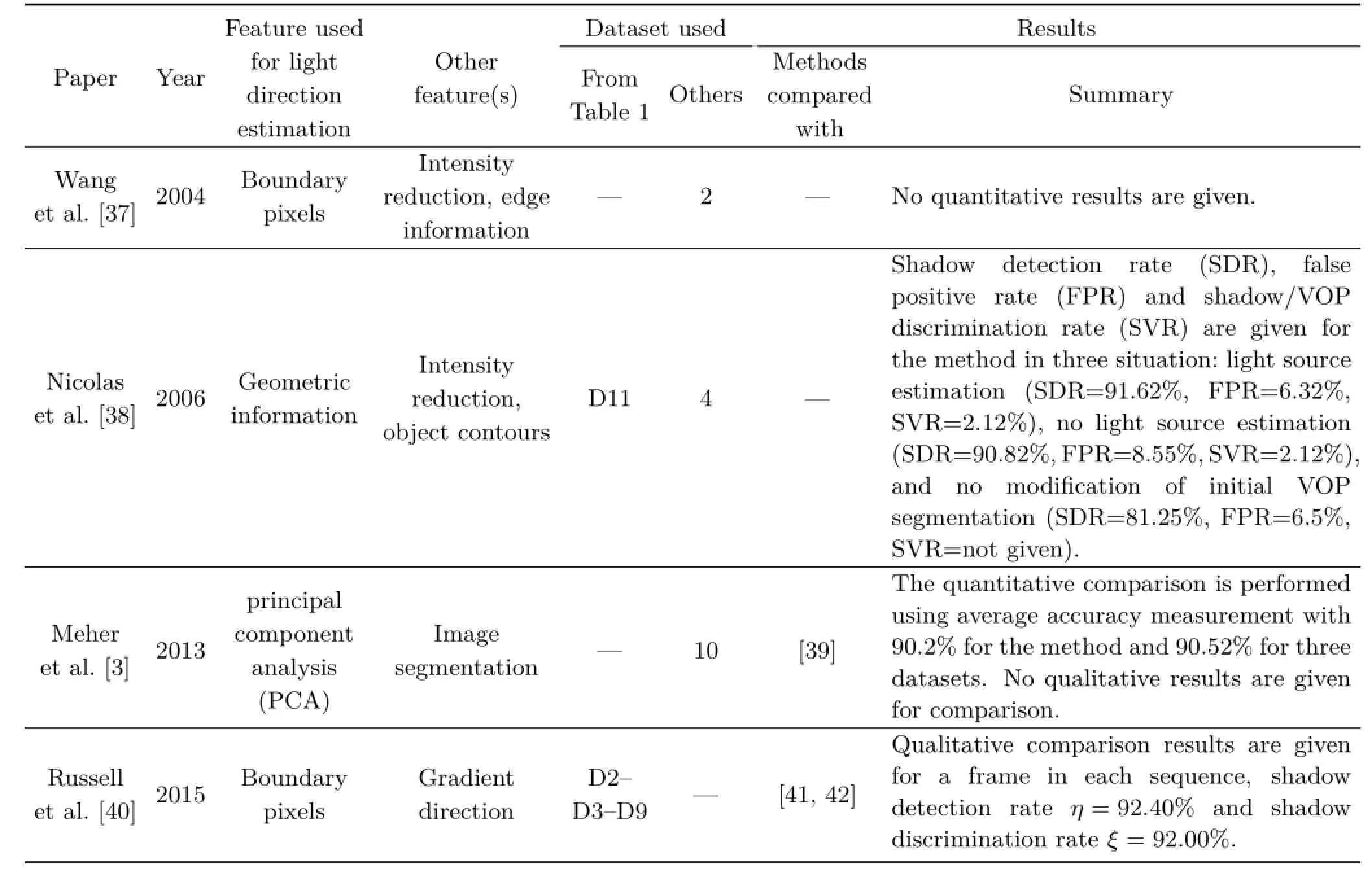

Some methods use geometric information, such as the location and direction of the light source(s) and shadows, to detect shadows cast by moving objects. These geometric measurements can be extracted from the input images or obtained from prior information about the scene. These methods mainly depend on geometric features and use other image features to enhance the detection results. Typical light-direction based methods are summarised in Table 3.

Nicolas et al. [38] stated that estimating the position of the light source can improve the detection results. Based on that, they proposed a method that jointly estimates the projection of the light source on the image plane and segments moving cast shadows in natural video sequences. The light source position is estimated by exploiting the geometric relations between the light source and the object/shadow regions on the 2D image plane. For each incoming video frame, shadow–foreground discrimination is performed based on the estimated light source position and the video object contours. This method relies strongly on two assumptions: (i) the light source is unique and (ii) the background surface is flat.

Wang et al. [37] presented a method for detecting and removing shadows that is mainly based on detecting the cast shadow direction. In the method, the shadow direction is computed using a number of sampling points taken from shadow candidates. An edge map is then used to isolate the foreground object from its shadows. They applied some rules to recover parts of the vehicles that are (i) darker than their corresponding backgrounds and (ii) located in the self-shadow regions.

Meher et al. [3] used light source direction estimation to detect cast shadows of vehicles for the purpose of classification. In the first step, image segmentation is performed on the moving regions using a mean-shift algorithm. Principal component analysis (PCA) is then used to determine the direction of moving shadow regions and separate them from vehicle regions.
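The PCA step can be sketched as finding the major axis of the pixel coordinates of a region. This minimal Python sketch assumes the region has already been segmented (the mean-shift step is omitted) and uses the closed-form orientation of the leading eigenvector of the 2×2 coordinate covariance matrix; the function name is hypothetical, and this is an illustration rather than the implementation of Meher et al. [3].

```python
import math


def principal_direction(points):
    """Orientation (degrees, in (-90, 90]) of the major axis of a pixel region.

    points: list of (x, y) pixel coordinates belonging to one region, e.g. a
    candidate shadow region. The angle is measured from the +x axis; with
    image coordinates (y pointing down) a positive angle points down-right.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Entries of the 2x2 covariance matrix of the coordinates.
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed-form angle of the eigenvector with the largest eigenvalue.
    return math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy))


diagonal_region = [(i, i) for i in range(10)]
horizontal_region = [(i, 3) for i in range(10)]
print(round(principal_direction(diagonal_region), 6))    # 45.0
print(round(principal_direction(horizontal_region), 6))  # 0.0
```

Comparing this orientation for a candidate shadow region with that of the vehicle region is one way such a direction could be used to separate the two; how the direction is then used is specific to each method.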

Recently, Russell et al. [40] developed a method for detecting moving shadows of vehicles in real-time applications. The method uses the illumination direction and intensity measurements of neighbouring pixels along a scan line to detect the cast shadow lines of vehicles.

Table 3 Summary of light-direction based methods for shadow detection

5.3 Color based methods

Color based methods use color information to describe the change in the value and appearance of a pixel when a shadow occurs. In these methods, two features, namely intensity and invariant measurements, are combined to identify points that become darker than their corresponding background while maintaining their color consistency. Algorithms based on color techniques attempt to use suitable color spaces to separate the brightness of each pixel from its chromaticity. Comparative surveys of different color spaces used for shadow detection can be found in Refs. [63–65]. Table 4 shows the common color spaces used in shadow detection algorithms.

Cucchiara et al.[43] introduced the hue–saturation–value(HSV)color space as a better choice compared to the RGB color space in shadow detection.Their method is based on the observation that shadows lower the pixel's value(V)and saturation(S)but barely change its hue component (H).
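A minimal sketch of this HSV test for a single pixel follows, using Python's standard `colorsys` module. It follows the commonly cited form of the Cucchiara et al. rule (the value ratio lies in [α, β], saturation does not increase by more than τ_S, and hue changes by at most τ_H); the function name and the threshold defaults are hypothetical and would need tuning per scene.

```python
import colorsys


def hsv_shadow(frame_rgb, background_rgb,
               alpha=0.4, beta=0.9, tau_s=0.1, tau_h=0.1):
    """Return True if the frame pixel looks like a shadowed background pixel.

    RGB inputs are 0-255 tuples. alpha, beta, tau_s and tau_h are
    hypothetical defaults; hue and saturation use colorsys's [0, 1] scale.
    """
    h_f, s_f, v_f = colorsys.rgb_to_hsv(*(c / 255 for c in frame_rgb))
    h_b, s_b, v_b = colorsys.rgb_to_hsv(*(c / 255 for c in background_rgb))
    if v_b == 0:
        return False
    value_ok = alpha <= v_f / v_b <= beta   # darker, but not too dark
    saturation_ok = (s_f - s_b) <= tau_s     # saturation must not rise much
    dh = abs(h_f - h_b)
    hue_ok = min(dh, 1 - dh) <= tau_h        # hue is circular
    return value_ok and saturation_ok and hue_ok


background = (200, 150, 100)
print(hsv_shadow((120, 90, 60), background))   # True: uniformly darkened (x0.6)
print(hsv_shadow((190, 145, 95), background))  # False: barely darker
print(hsv_shadow((60, 90, 120), background))   # False: hue changed
```

Note that hue is undefined for grey pixels (`colorsys` reports 0), so the hue test is unreliable on achromatic or very dark surfaces, one of the problematic situations listed in Section 2.3.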

More recently,Guan[53]proposed a shadow detection method for color video sequences using multi-scale wavelet transforms and temporal motion analysis.The method exploits the hue–saturation–value(HSV)color space instead of introducing complex color models.

Similarly,Salvador et al.[44]adopted a new color space model,c1c2c3,for detecting shadow points in both still images and videos. In the method, the features of the invariant color c1c2c3 of each candidate point from a pre-defined set are compared with the features of the reference point.Thus,a candidate point is labelled as shadow if the value of its c1c2c3 has not changed with respect to the reference.
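The c1c2c3 features are commonly defined as c1 = arctan(R / max(G, B)), c2 = arctan(G / max(R, B)), and c3 = arctan(B / max(R, G)), which are unchanged when all three channels are scaled by the same factor. The sketch below applies that definition to a single candidate/reference pair; the tolerance `tol`, the extra darker-than-reference check, and the function names are hypothetical, and the candidate-selection stage of Salvador et al. [44] is not reproduced.

```python
import math


def c1c2c3(rgb):
    """Photometric invariant colour features of one RGB pixel."""
    r, g, b = (float(c) for c in rgb)
    # atan2(y, x) equals arctan(y / x) for x > 0 and avoids dividing by zero.
    return (math.atan2(r, max(g, b)),
            math.atan2(g, max(r, b)),
            math.atan2(b, max(r, g)))


def is_shadow_c1c2c3(candidate_rgb, reference_rgb, tol=0.05):
    """Label the candidate as shadow if it is darker and its c1c2c3 is unchanged."""
    darker = sum(candidate_rgb) < sum(reference_rgb)
    unchanged = all(abs(a - b) <= tol
                    for a, b in zip(c1c2c3(candidate_rgb), c1c2c3(reference_rgb)))
    return darker and unchanged


reference = (200, 100, 50)
print(is_shadow_c1c2c3((100, 50, 25), reference))   # True: same colour, half the intensity
print(is_shadow_c1c2c3((100, 100, 25), reference))  # False: colour has changed
```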

Melli et al. [49] proposed a shadow–vehicle discrimination method for traffic scenes. They asserted that the YCbCr color space is more suitable for shadow–foreground discrimination, mainly for separating the road surface from shadow regions.

Table 4 Summary of color based methods for shadow detection

Cavallaro et al. [50] used the normalized RGB (nRGB) color space to obtain shadow-free images. The main idea of using this color space is that the values of the normalized components (usually labelled rgb) change little for points under local or global illumination changes. Similar to nRGB, normalized r-g (nR-G) was proposed by Lo and Yang [51] to separate brightness and color for each pixel in the foreground mask region. They stated that the normalized values of the two channels (red and green) remain roughly the same under different illumination conditions.
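Normalized chromaticity can be sketched as dividing each channel by R + G + B. Because b = 1 − r − g, the two values r and g carry all the chromatic information, which is the idea behind the nR-G space. The function names and the tolerance `tol` below are hypothetical.

```python
def normalized_rg(rgb):
    """Return (r, g) = (R, G) / (R + G + B); b is implied as 1 - r - g."""
    total = sum(rgb)
    if total == 0:
        return (0.0, 0.0)  # black pixel: chromaticity undefined
    red, green, _ = rgb
    return (red / total, green / total)


def chromaticity_preserved(frame_rgb, background_rgb, tol=0.02):
    """True if the normalized r and g of the two pixels differ by at most tol."""
    fr, fg = normalized_rg(frame_rgb)
    br, bg = normalized_rg(background_rgb)
    return abs(fr - br) <= tol and abs(fg - bg) <= tol


print(chromaticity_preserved((60, 80, 100), (120, 160, 200)))  # True: same chromaticity
print(chromaticity_preserved((60, 80, 100), (200, 80, 100)))   # False: redder background
```

Chromaticity alone does not say whether the pixel became darker, so in practice a test like this is combined with an intensity-reduction check.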

Sun and Li[54]proposed a method for detecting cast shadows of vehicles using combined color spaces. In the method,HSI and c1c2c3 color spaces are used to detect possible shadow points.A rough result is then obtained by synthesizing the above two results. In the final step,some morphological operations are used to improve the accuracy of the detection result.

Ishida et al. [66] used the UV components of the YUV color space along with the normalized vector distance, peripheral increment sign correlation, and edge information to detect shadows in image sequences. They stated that the differences in the U and V components between each shaded pixel and its corresponding background are small, whereas those in a moving object region become large.

Dai et al.[57]introduced a method to detect shadows using multiple color spaces and multi-scale images.Their color features include chromaticity difference in HSV,invariant photometric color in c1c2c3,and salient color information in RGB.

Wang et al.[61]proposed a method for shadow detection using online sub-scene shadow modelling and object inner-edge analysis.In the method, accumulating histograms are computed using the chromaticity differences in hue,saturation,and intensity(HSI)between foreground and background regions.

5.4 Edge based methods

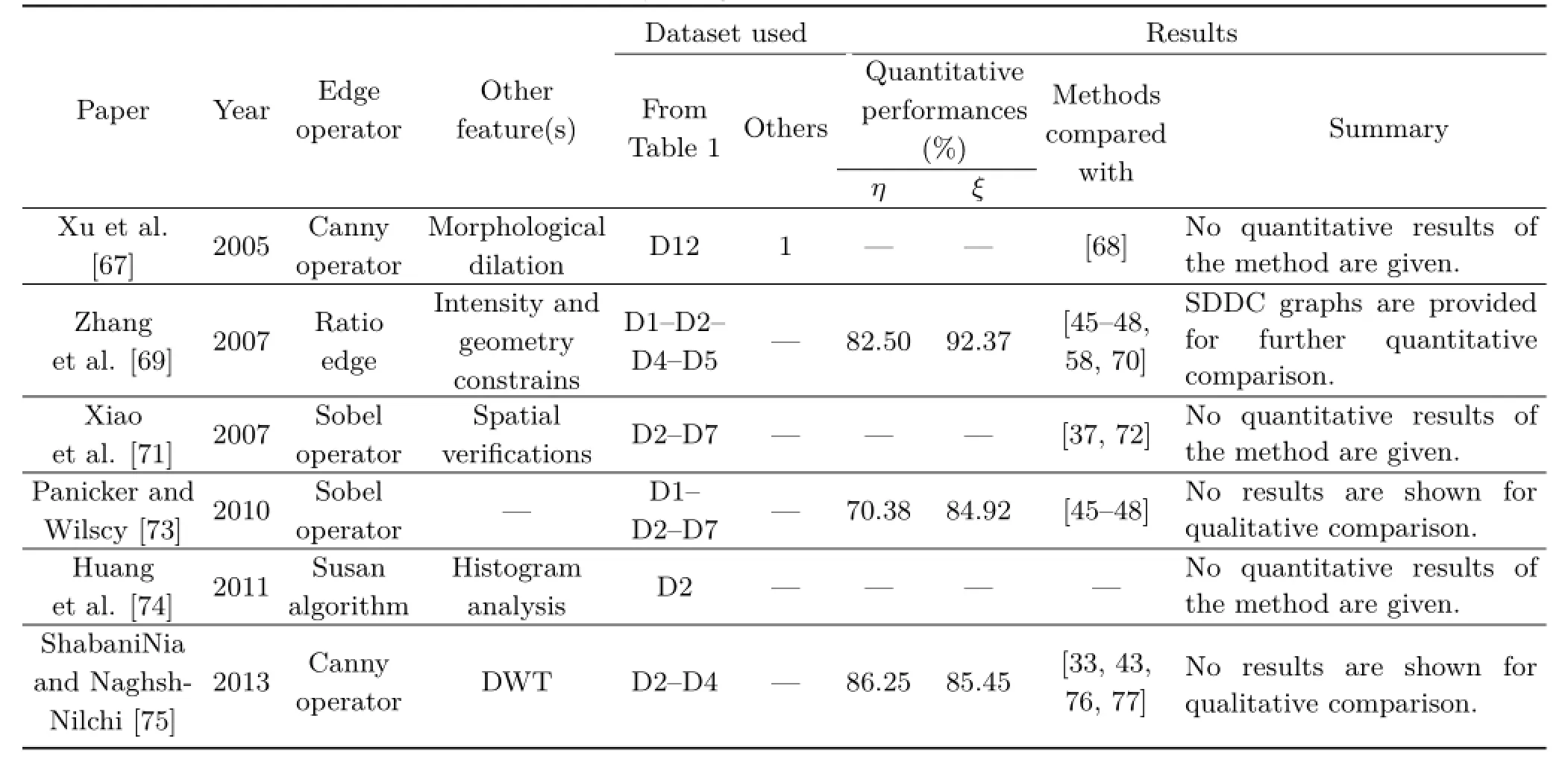

Edge information is a very useful feature for detecting shadow regions. Edges are largely invariant to changes in illumination, i.e., the edges in a shadow region are similar to those in the corresponding region under direct light. Edge information is therefore useful when a pixel in the current frame has brightness or intensity values similar to those of the corresponding background. Common edge detection operators include the Prewitt, Sobel, Canny, and Roberts operators. Typical edge based methods are summarised in Table 5.

Xu et al. [67] used static edge correlation and seed region formation to detect shadow regions in indoor sequences. The method involves a number of techniques, including (a) generating the initial change detection mask (CDM), (b) applying Canny edge detection to the given frame, (c) detecting moving edges using multi-frame integration, and (d) using morphological dilation to enhance the output results.

Zhang et al.[69]proved that the ratio edge is illumination invariant.In the first stage,the possible shadow points are modelled in a mask based on intensity constraint and the physical properties of shadows.The ratio edge between the intensity of a pixel and its neighbouring pixels is then computed for the given frame and the background image.In the final stage,geometric heuristics are imposed to improve the quality of the results.
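To see why a ratio between neighbouring intensities is illumination invariant, consider a shadow that scales a small neighbourhood by a common factor k: both the numerator and the denominator are scaled by k, so the ratio is unchanged. The sketch below uses a hypothetical simplified ratio (a pixel divided by the mean of its 4-neighbours), not the exact ratio edge definition of Zhang et al. [69], and a hypothetical tolerance.

```python
def ratio_edge(img, x, y):
    """Hypothetical simplified ratio: pixel (x, y) over the mean of its 4-neighbours."""
    neighbours = (img[y - 1][x], img[y + 1][x], img[y][x - 1], img[y][x + 1])
    mean = sum(neighbours) / 4
    return img[y][x] / mean if mean > 0 else 0.0


def ratio_edge_consistent(frame, background, x, y, tol=0.05):
    """True if the local ratio is (nearly) the same in frame and background."""
    return abs(ratio_edge(frame, x, y) - ratio_edge(background, x, y)) <= tol


background = [[100, 120, 100],
              [110, 90, 130],
              [100, 140, 100]]
shadowed = [[v * 0.5 for v in row] for row in background]  # uniform attenuation
object_px = [row[:] for row in background]
object_px[1][1] = 200                                      # centre replaced by an object

print(ratio_edge_consistent(shadowed, background, 1, 1))   # True
print(ratio_edge_consistent(object_px, background, 1, 1))  # False
```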

Xiao et al.[71]used Sobel edge detection to eliminate shadows of the moving vehicles.Sobel edge detector is applied on the binary change detection mask(to detect the boundary of the whole mask)and the given frame masked with the change detection mask(to detect inner edges of the vehicles).The edges from the vehicles are then extracted from the two results.In the final step,spatial verifications are applied to reconstruct the vehicle's shape.
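The idea of separating inner object edges from the boundary of the change detection mask can be sketched in pure Python with a 3×3 Sobel operator. This is a simplified illustration under stated assumptions: border pixels are left at zero, the function names and the threshold are hypothetical, and the spatial verification step of Xiao et al. [71] is not included.

```python
import math


def sobel_magnitude(img):
    """Gradient magnitude of a 2D list using 3x3 Sobel kernels (border left at 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = math.hypot(gx, gy)
    return out


def inner_edges(frame, mask, threshold=100.0):
    """Edges of the masked frame that do not lie on the mask boundary.

    frame: 2D list of grey levels; mask: 2D list of 0/1 change-detection values.
    threshold: hypothetical minimum gradient magnitude for an edge.
    """
    h, w = len(frame), len(frame[0])
    masked = [[frame[y][x] if mask[y][x] else 0 for x in range(w)] for y in range(h)]
    boundary = sobel_magnitude([[255 if m else 0 for m in row] for row in mask])
    edges = sobel_magnitude(masked)
    return [[edges[y][x] > threshold and boundary[y][x] == 0 for x in range(w)]
            for y in range(h)]


# 7x8 toy example: the mask covers rows 1-5 and columns 1-6, and the object
# has an internal step edge between columns 3 and 4.
mask = [[1 if 1 <= y <= 5 and 1 <= x <= 6 else 0 for x in range(8)] for y in range(7)]
frame = [[50 if x <= 3 else 150 for x in range(8)] for _ in range(7)]
result = inner_edges(frame, mask)
print(result[3][3], result[3][4])  # True True   (internal step edge)
print(result[3][1], result[3][2])  # False False (mask boundary / flat interior)
```

A flat cast shadow inside the mask produces no inner edges in this scheme, which is why the surviving edges can be attributed to the object; conversely, non-textured object parts also produce none, so some form of shape reconstruction is needed afterwards.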

Table 5 Summary of edge based methods for shadow detection

Similarly,Panicker and Wilscy[73]proposed a method which uses the edge information to detect moving shadows for traffic sequences.In the first stage,the edge information for both the foreground and the background masks is extracted by using the Sobel operator.The two edge maps are then correlated to eliminate the boundary of the cast shadow,resulting in preserving the internal edge of the object.In the final stage,the object shape is reconstructed by using the object interior edges.

Huang et al. [74] proposed a simple histogram analysis of edge-pixel statistics to detect and segment shadow areas in traffic sequences. The statistical characteristics of edge pixels, detected using the SUSAN algorithm [78], are analysed to detect shadow pixels.

ShabaniNia and Naghsh-Nilchi [75] introduced a shadow detection method that is mainly based on edge information. In the first step, static edges of the change detection mask are detected using the Canny operator. In the second step, a wavelet transform is applied to obtain a noise-free image, followed by the watershed transform to segment the different parts of an object, including its shadows. The segmented parts are then marked as shadow or foreground using the chromaticity of the background.

5.5 Texture based methods

Background textures are largely preserved under varying illumination, whereas the foreground object produces patterns and edges that differ from those of its shadow or the corresponding background. A summary of typical texture based methods is given in Table 6.

Leone and Distante [77] presented a new approach for detecting the shadows of moving objects in visual surveillance environments. Potential shadow points are detected using adaptive background subtraction. The similarities between small textured patches are then measured using Gabor functions to improve the detection results.

Yang et al. [41] proposed a method to detect shaded points by exploiting color constancy among and within pixels as well as temporal consistency between adjacent frames. Because the inter-pixel relationship is used as an additional metric to support classification, the method performs better than other pixel based methods.

Qin et al. [79] proposed a shadow detection method using a local texture descriptor called scale invariant local ternary patterns (SILTP). Texture and color features are learned and modelled using a mixture of Gaussians. The contextual constraint from Markov random field (MRF) modelling is further applied to obtain the maximum a posteriori (MAP) estimate of the cast shadows.
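A minimal NumPy sketch of the SILTP operator as it is commonly defined: each of the eight neighbours contributes two bits, 01 if it is brighter than (1 + τ) times the centre, 10 if it is darker than (1 - τ) times the centre, and 00 otherwise. Because both thresholds scale with the centre intensity, multiplying a patch by a constant (a simple shadow model) leaves the codes unchanged. The value τ = 0.05 is an assumption, and the mixture-of-Gaussians and MRF stages of [79] are not shown.

```python
import numpy as np

# The 8 neighbours, clockwise from the top-left; neighbour k occupies bits 2k and 2k+1.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def siltp(img, tau=0.05):
    """SILTP code per pixel: 01 = neighbour brighter, 10 = darker, 00 = similar."""
    img = img.astype(float)
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    code = np.zeros((h, w), dtype=np.int64)
    for k, (dy, dx) in enumerate(OFFSETS):
        neighbour = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        bits = np.where(neighbour > (1 + tau) * img, 1,
                        np.where(neighbour < (1 - tau) * img, 2, 0))
        code |= bits.astype(np.int64) << (2 * k)
    return code
```

Comparing SILTP codes of the frame and the background at the same location then gives a texture cue that is insensitive to uniform darkening.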

Liu and Adjeroh [82] proposed a texture based method to detect shadow points in video. Potential shadow points are first detected using intensity reduction features. A gradient confidence weight is then used to describe the texture information within a 3×3 pixel window centred at the point.

Khare et al. [84] used the discrete wavelet transform (DWT) to describe texture information in the horizontal and vertical image dimensions. Shadow points are detected by computing several wavelet decompositions in the HSV color space.

The local binary pattern (LBP) is used in Ref. [87] as a local texture descriptor for detecting shadows in surveillance scenarios. Besides LBP, other features such as the intensity ratio and color distortion are also used in a statistical learning framework to enhance the detection result.
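To illustrate how an LBP descriptor can compare a candidate region with the background, the sketch below computes basic 8-neighbour LBP codes and the histogram intersection of two patches. It is a generic LBP sketch in NumPy, not the framework of Ref. [87]: the intensity-ratio and color-distortion features and the statistical learning stage are omitted, and the 256-bin histogram and the intersection measure are assumptions.

```python
import numpy as np

# The 8 neighbours, clockwise from the top-left; neighbour k sets bit k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp(img):
    """Basic 8-neighbour LBP codes (0..255), replicating edge pixels at the border."""
    img = img.astype(float)
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    code = np.zeros((h, w), dtype=np.int64)
    for k, (dy, dx) in enumerate(OFFSETS):
        neighbour = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        code |= (neighbour >= img).astype(np.int64) << k
    return code


def lbp_similarity(patch_a, patch_b):
    """Histogram intersection of normalized 256-bin LBP histograms (1.0 = identical)."""
    ha = np.bincount(lbp(patch_a).ravel(), minlength=256).astype(float)
    hb = np.bincount(lbp(patch_b).ravel(), minlength=256).astype(float)
    ha /= ha.sum()
    hb /= hb.sum()
    return float(np.minimum(ha, hb).sum())
```

Since LBP only records the sign of intensity differences, a uniformly darkened patch produces the same codes as the background, whereas a flat object over a textured background does not.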

Huerta et al. [89] proposed a multi-stage texture based approach to detect shadows in videos. In the first stage, candidate shadow regions are formed using intensity reduction. Chromatic shadow detection is then performed using gradients and chrominance angles.

5.6 Segmentation based methods

Segmentation based methods attempt to find similarity in intensity, color, or texture among neighbouring pixels to form independent regions. In general, these methods consist of two main stages: selecting candidate shadow points and correlating regions. Candidate shadow points are usually selected on individual pixels by employing spectral features such as intensity reduction [90], chromaticity [1], luminance ratio [42], intensity-color [22], etc. These candidate points often form one or more independent candidate shadow regions. In the next stage, region correlation is performed based on various measurements, including texture, intensity, color, etc. Typical segmentation based methods are summarised in Table 7.
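The two-stage structure can be sketched as follows: candidate shadow points are selected with a simple intensity-reduction test (the frame-to-background ratio must fall in an assumed range of [0.4, 0.95]), and the candidates are grouped into 4-connected regions by breadth-first search. The ratio bounds are hypothetical, and the region-correlation stage, which differs from method to method, is left out.

```python
from collections import deque

import numpy as np


def candidate_shadow_mask(frame, background, low=0.4, high=0.95):
    """Pixels darker than the background by a bounded ratio (intensity reduction)."""
    ratio = frame.astype(float) / np.maximum(background.astype(float), 1.0)
    return (ratio >= low) & (ratio <= high)


def label_regions(mask):
    """4-connected component labelling by breadth-first search; 0 marks non-candidates."""
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    current = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and labels[y, x] == 0:
                current += 1  # start a new candidate region
                labels[y, x] = current
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = current
                            queue.append((ny, nx))
    return labels, current
```

Each labelled region would then be passed to a region-level test (texture, intensity, or color correlation with the background).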

Javed and Shah [90] proposed a five-stage algorithm for detecting shadow points in the RGB color space. Firstly, a shadow mask is created containing all pixels whose intensity values are significantly reduced. In the second stage, the vertical and horizontal gradients of each pixel are computed. Shadow candidate regions are then formed based on color segmentation. In the fourth stage, the gradient direction of each region in the current frame is correlated with that of the background. Classification is performed in the final stage by comparing the correlation results with a predetermined threshold: regions with a high gradient correlation are classified as shadows.
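For illustration, the gradient-direction correlation of the fourth and fifth stages can be approximated by the mean cosine of the angle between the frame and background gradients inside a region. This is a stand-in for the correlation measure of [90], whose exact form is not given here; the threshold of 0.9 and the minimum-magnitude filter are assumptions.

```python
import numpy as np


def gradient_direction_similarity(frame, background, region, min_mag=1e-3):
    """Mean cosine of the angle between frame and background gradients inside `region`."""
    fy, fx = np.gradient(frame.astype(float))
    by, bx = np.gradient(background.astype(float))
    f_mag = np.hypot(fx, fy)
    b_mag = np.hypot(bx, by)
    # Ignore pixels where either gradient is too weak to have a meaningful direction.
    valid = region & (f_mag > min_mag) & (b_mag > min_mag)
    if not valid.any():
        return 0.0
    cos = (fx * bx + fy * by)[valid] / (f_mag * b_mag)[valid]
    return float(cos.mean())


def classify_region(frame, background, region, threshold=0.9):
    """True (shadow) when gradient directions agree strongly with the background."""
    return gradient_direction_similarity(frame, background, region) > threshold
```

A uniformly darkened region keeps every gradient direction, so its similarity is close to 1; an object whose texture differs from the background lowers it.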

Table 6 Summary of texture based methods for shadow detection

Toth et al. [52] proposed a shadow detection method that is mainly based on color and shading information. The foreground image is first divided into subregions using a mean-shift color segmentation algorithm. A significance test is then performed to classify each pixel as foreground or shadow. The final classification depends on whether the majority of the pixels inside each subregion were classified as shadow in the previous stage: a subregion is considered shadow if the number of shaded points exceeds 50% of the total number of pixels inside it.
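The final majority-vote step is straightforward to express. In this sketch the per-pixel significance test is assumed to have already produced a boolean shadow map, and the mean-shift subregions are given as an integer label image with 0 marking pixels outside the foreground; both inputs are hypothetical. A subregion is labelled shadow only when its shadow fraction strictly exceeds 50%.

```python
import numpy as np


def subregion_majority(labels, shadow_pixels, fraction=0.5):
    """Mark a whole subregion as shadow when more than `fraction` of its pixels are shadow.

    labels: integer array of subregion ids (0 = outside the foreground).
    shadow_pixels: boolean array produced by a per-pixel shadow test.
    """
    out = np.zeros(labels.shape, dtype=bool)
    for region_id in np.unique(labels):
        if region_id == 0:
            continue
        region = labels == region_id
        if shadow_pixels[region].mean() > fraction:
            out |= region
    return out
```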

Table 7 Summary of segmentation based methods for shadow detection

Sanin et al. [1] stated that selecting a larger region, which ideally contains all the shadow points, provides better texture information than smaller regions. Based on this, chromaticity information is used to select possible shadow points. Connected components are then extracted to form candidate regions, followed by computing gradient information to remove foreground regions that have been incorrectly detected as shadows. The method makes two assumptions: (i) the candidate shadow regions are isolated from each other and do not share boundaries, and (ii) each region contains either shadow points or foreground object points.

Amato et al. [42] proposed a method to detect both achromatic and chromatic moving shadows. Their method is based on the observation that local constancy holds for any pair of pixels belonging to a shadow region, whereas foreground pixels do not have this property. In the method, the intensities of the background pixels are divided by the intensities of the given frame in RGB space. Gradient constancy is then applied to detect possible shadow regions. In the final stage, regions with low gradients in this ratio, i.e., high local constancy, are considered shadows. Like other approaches, this method assumes that the foreground object has a different texture from that of the shadow region.
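A simplified sketch of the ratio-constancy idea, assuming the frame and background are RGB arrays of shape H×W×3: the per-channel ratio background/frame is computed, and its mean gradient magnitude within a region serves as an inverse measure of local constancy. Under a shadow that attenuates each channel by a locally constant factor, the ratio is nearly flat. The threshold `max_gradient` is hypothetical, and the exact constancy measure of [42] is not reproduced here.

```python
import numpy as np


def ratio_gradient(frame, background, eps=1e-6):
    """Per-pixel gradient magnitude of the ratio background / frame, averaged over RGB."""
    ratio = background.astype(float) / (frame.astype(float) + eps)  # H x W x 3
    gy, gx = np.gradient(ratio, axis=(0, 1))
    return np.hypot(gx, gy).mean(axis=2)


def is_shadow_region(frame, background, region, max_gradient=0.05):
    """Low mean ratio gradient (high local constancy) inside `region` => shadow."""
    return bool(ratio_gradient(frame, background)[region].mean() < max_gradient)
```

Because each channel has its own ratio, a chromatic shadow that darkens the channels by different but locally constant factors still passes the test.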

Russell et al. [22] used color segmentation to divide the change detection mask (CDM) into subregions. Three features, namely, intensity, spatial color constancy, and temporal color constancy, are used to distinguish shaded regions from objects. After an initial clustering divides the mask into subregions, three quantities are computed for each region: the intensity mean, an invariant color constancy measurement, and a temporal color constancy measurement. An initial classification is made based on these three measurements, and inter-region relationships among neighbouring regions are then used to enhance the final detection result.

5.7 Block based methods

Unlike segmentation based methods, block based methods form regions from fixed, equal-size blocks without relying on color or texture information. To determine whether a block lies under shadow, color and texture information is compared between its pixels and the corresponding background pixels. Typical block based methods are summarised in Table 8.

Zhang et al. [92] assumed that the normalized coefficients of an orthogonal transform of an image block are illumination invariant. Based on this, they used the normalized coefficients of five kinds of orthogonal transform, namely, the discrete Fourier transform (DFT), discrete cosine transform (DCT), singular value decomposition (SVD), Haar transform, and Hadamard transform, to distinguish between a moving object and its cast shadow. Intensity and geometry information is used to refine the detection results.
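To illustrate why normalized transform coefficients can separate shadows from objects, the sketch below uses only one of the five transforms, the orthonormal 2D DCT-II, and normalizes each block's coefficients by its DC coefficient (an assumption; the normalization in [92] may differ). If a shadow scales a block's intensities by a constant, the normalized coefficients are unchanged. The tolerance is hypothetical, and the intensity and geometry refinement is not shown.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis: row k holds the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)
    return m


def normalized_dct(block):
    """2D DCT-II of a square block, divided by its DC coefficient (assumed normalization)."""
    c = dct_matrix(block.shape[0])
    coeffs = c @ block.astype(float) @ c.T
    return coeffs / coeffs[0, 0]


def block_is_shadow(frame_block, bg_block, tol=0.05):
    """Shadow if normalized coefficients match the background's within `tol` (hypothetical)."""
    diff = normalized_dct(frame_block) - normalized_dct(bg_block)
    return bool(np.abs(diff).max() < tol)
```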

Table 8 Summary of block based methods for shadow detection

Song and Tai [60] developed a shadow-region based, statistical, nonparametric approach to construct a new model for shadow detection of all pixels in an image frame. The color ratio between the illuminated regions and the shaded regions is used as an index to establish the model for different shadow pixels.

Celik et al. [93] divided the image into eight non-overlapping homogeneous blocks. A brightness ratio histogram of each block is used to determine whether the block is part of the moving object or of its shadow.
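A brightness-ratio histogram test for a single block might look like the sketch below. The frame-to-background ratio of each pixel is histogrammed, and the block is treated as shadow when most of the histogram mass lies in an assumed darkening range. The bin count, the range [0.3, 0.9), and the 80% mass requirement are hypothetical; the decision rule of [93] is not reproduced here.

```python
import numpy as np


def brightness_ratio_histogram(frame_block, bg_block, bins=20, max_ratio=2.0):
    """Normalized histogram of per-pixel frame/background brightness ratios in one block."""
    ratio = frame_block.astype(float) / np.maximum(bg_block.astype(float), 1.0)
    hist, edges = np.histogram(np.clip(ratio, 0.0, max_ratio), bins=bins, range=(0.0, max_ratio))
    return hist / hist.sum(), edges


def block_is_shadow(frame_block, bg_block, low=0.3, high=0.9, min_mass=0.8):
    """Shadow if at least `min_mass` of the histogram lies in bins centred in [low, high)."""
    hist, edges = brightness_ratio_histogram(frame_block, bg_block)
    centres = 0.5 * (edges[:-1] + edges[1:])
    in_range = (centres >= low) & (centres < high)
    return bool(hist[in_range].sum() >= min_mass)
```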

Bullkich et al. [88] assumed that shadow pixels are related to background pixels through a non-linear tone mapping. A matching by tone mapping (MTM) model is developed to evaluate the distances between suspected foreground pixels and background pixels. Regions with a low MTM distance are considered shadows.
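The MTM distance has a closed form when the tone mapping is restricted to a piecewise-constant function over intensity bins: the best mapping assigns each bin of the pattern the mean of the corresponding window pixels, and the distance is the remaining within-bin variation divided by the total variation of the window. The sketch below follows that formulation, assuming the background patch is the pattern `p` and the frame patch is the window `w`; the number of bins is a hypothetical choice.

```python
import numpy as np


def mtm_distance(p, w, bins=8):
    """Matching-by-tone-mapping distance from pattern p (background) to window w (frame).

    A piecewise-constant tone map is fitted by averaging w within each intensity bin
    of p; the residual is normalized by the total variation of w (0 = perfect match).
    """
    p = np.asarray(p, dtype=float).ravel()
    w = np.asarray(w, dtype=float).ravel()
    total = ((w - w.mean()) ** 2).sum()
    if total == 0:
        return 0.0  # a constant window is trivially a (constant) tone mapping of p
    edges = np.linspace(p.min(), p.max(), bins + 1)
    idx = np.clip(np.digitize(p, edges[1:-1]), 0, bins - 1)
    residual = 0.0
    for j in range(bins):
        sel = idx == j
        if sel.any():
            residual += ((w[sel] - w[sel].mean()) ** 2).sum()
    return float(residual / total)
```

Any monotone or non-monotone intensity mapping that is constant within each bin gives a distance near 0, which is why a non-linear shadow response can still be matched.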

In Ref. [95], the change detection mask is divided into 8×8 blocks and the 2D cepstrum is applied to check whether the current frame preserves the background texture and color. Pixel based analysis is then performed within each shaded block for further classification.

Dai and Han [96] used affinity propagation to detect moving cast shadows in videos. In the first stage, the foreground image is divided into non-overlapping blocks and color information in the HSV color space is extracted from each block. Affinity propagation is then used to cluster the foreground blocks adaptively, and subregions are generated after coarse segmentation. In the last stage, texture features are extracted from the irregular subregions and compared with those of the corresponding backgrounds to detect the subregions that are similar to the background.

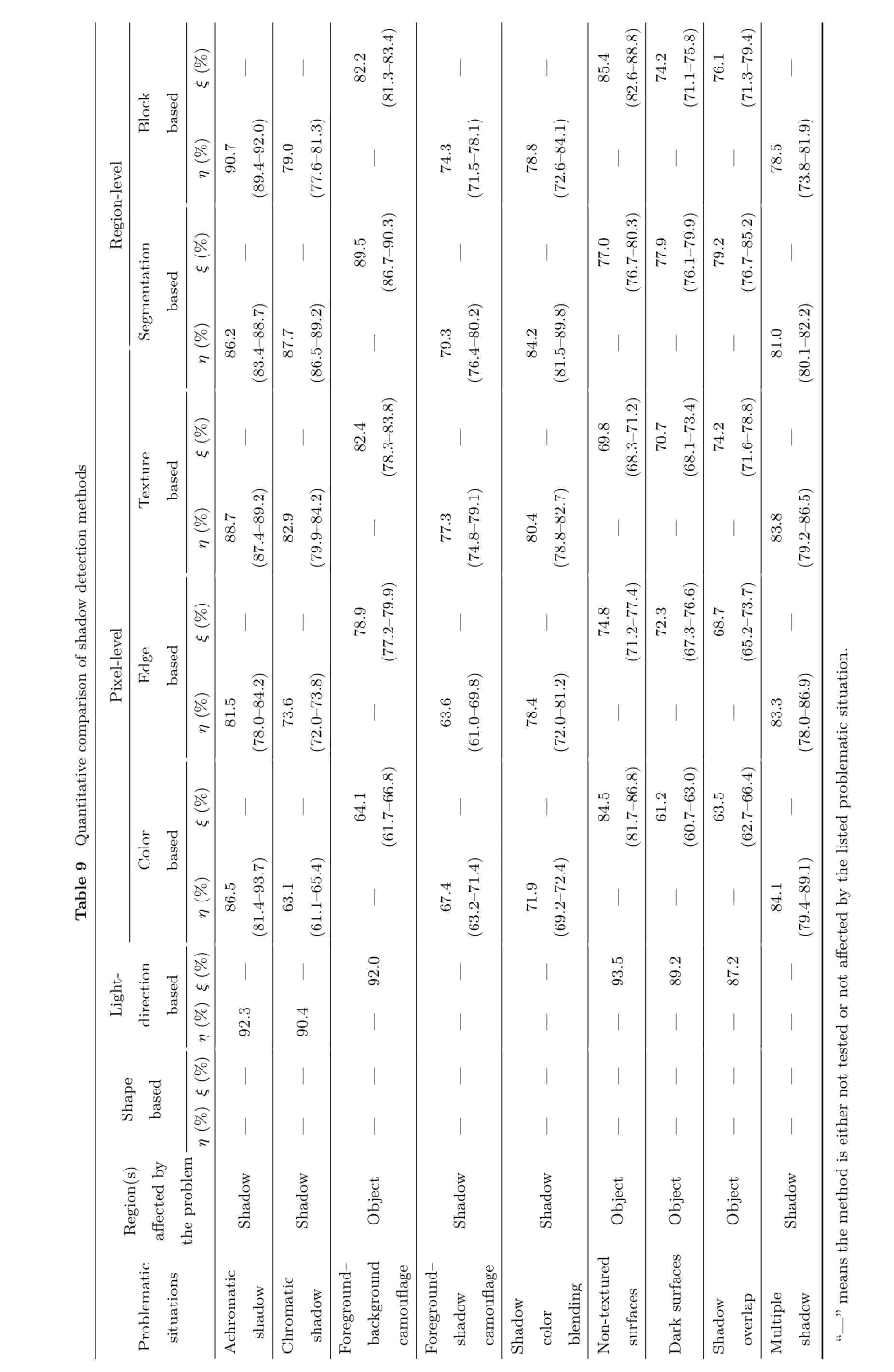

6 Performance evaluation

Table 9 provides the quantitative performance evaluation, in terms of the average shadow detection rate (η) and the average shadow discrimination rate (ξ), for each class of methods with respect to the problematic shadow situations. These rates are calculated from the results stated in the original publications. For example, achromatic shadows have been tested in four papers on color based methods. Since achromatic shadows do not affect the shadow discrimination rate, only the shadow detection rate has been calculated for each of these papers. Three rates, namely, the lowest rate, the highest rate, and a simple average over all four methods, are then reported in Table 9.
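For reference, a sketch of how the two rates can be computed from labelled masks, in the form commonly used following Prati et al. [29]: η = TP_S/(TP_S + FN_S) and ξ = TP_F/(TP_F + FN_F), where TP_F counts ground-truth object pixels that are still labelled as object, so object pixels wrongly labelled as shadow are not counted. The label convention (0 background, 1 object, 2 shadow) is an assumption of this sketch. The `summarise` helper mirrors the lowest/highest/simple-average reporting described above.

```python
import numpy as np

# Hypothetical label convention for this sketch.
BACKGROUND, OBJECT, SHADOW = 0, 1, 2


def shadow_rates(pred, truth):
    """Return (eta, xi): shadow detection rate and shadow discrimination rate."""
    tp_s = np.sum((truth == SHADOW) & (pred == SHADOW))
    fn_s = np.sum((truth == SHADOW) & (pred != SHADOW))
    tp_f = np.sum((truth == OBJECT) & (pred == OBJECT))
    fn_f = np.sum((truth == OBJECT) & (pred != OBJECT))
    eta = tp_s / (tp_s + fn_s) if tp_s + fn_s else float("nan")
    xi = tp_f / (tp_f + fn_f) if tp_f + fn_f else float("nan")
    return float(eta), float(xi)


def summarise(rates):
    """Lowest rate, highest rate, and simple average over a list of per-paper rates."""
    return min(rates), max(rates), sum(rates) / len(rates)
```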

Clearly (as indicated in Table 9), the shadow detection rate is affected by the presence of achromatic shadows, chromatic shadows, foreground–shadow camouflage, shadow color blending, and multiple shadows. Meanwhile, the problems of foreground–background camouflage, non-textured surfaces, dark surfaces, and shadow overlap affect the shadow discrimination rate.

The first two classes, shape based methods and light-direction based methods, mainly rely on the geometric relationships between the objects and the shadows in the scene. They can provide accurate results when these geometric features and their assumptions are valid (from Table 9, a maximum shadow detection rate of η=92.3% and a maximum shadow discrimination rate of ξ=93.5% are reported for light-direction based methods). However, they may fail when these geometric relationships change. In addition, these methods impose strong geometric assumptions that make them applicable only in specific situations, or they may require human interaction or prior knowledge about the scene and the moving objects.

Color based methods can provide a reasonably high shadow detection rate (lowest η=81.4%, highest η=93.7%, average η=86.5%) in indoor environments in which only achromatic shadows are present. However, color based methods fail to recognize most shadow points when other problematic situations are present. For example, from Table 9, the average shadow detection rates (η) obtained for color based methods are 63.1%, 67.4%, and 71.9% for chromatic shadows, foreground–shadow camouflage, and shadow color blending, respectively. To examine the performance of these methods under other problems that are directly related to the foreground objects, quantitative results for the shadow discrimination rate were also obtained. Except for non-textured surfaces, color based methods clearly fail to cope with foreground–background camouflage (average ξ=64.1%), dark surfaces (average ξ=61.2%), and shadow overlap (average ξ=63.5%). In general, color based methods are easy to implement and, owing to their low computational complexity, are suitable for real-time applications.

Edge based methods provide better results than color based methods in the presence of chromatic shadows (lowest shadow detection rate η=72.0%, highest η=73.8%, average η=73.6%) and foreground–background camouflage (lowest shadow discrimination rate ξ=77.2%, highest ξ=79.9%, average ξ=78.9%). However, these methods are not suitable for other situations such as foreground–shadow camouflage (average η=63.6%) and shadow overlap (average ξ=68.7%).

Among pixel based methods, texture based methods outperform color based and edge based methods in terms of both the shadow detection rate and the shadow discrimination rate for all the problematic situations except non-textured surfaces (average ξ=69.8%) and dark surfaces (average ξ=70.7%).

Region based methods are designed to deal with noise, camouflage, and dark surfaces by taking advantage of spatial image features and forming independent regions. However, region based methods are computationally expensive and generally not suitable for real-time applications. Compared to pixel based methods, block based methods provide better results in the presence of achromatic shadows (average η=90.7%), non-textured surfaces (average ξ=85.4%), dark surfaces (average ξ=74.2%), and shadow overlap (average ξ=76.1%).

Table 9 Quantitative comparison of shadow detection methods for the listed problematic situations. Columns: shape based and light-direction based methods; pixel-level methods (color based, edge based, texture based); region-level methods (segmentation based, block based); each reports η(%) and ξ(%) as an average with the lowest–highest range in parentheses. Rows: achromatic shadow, chromatic shadow, foreground–background camouflage, foreground–shadow camouflage, shadow color blending, non-textured surfaces, dark surfaces, shadow overlap, and multiple shadows, each marked by whether the problem affects the shadow or the object.

Among all the methods, segmentation based methods achieved the highest performance in different scenarios, except for achromatic shadows (average η=86.2%), non-textured surfaces (average ξ=77.0%), and multiple shadows (average η=81.0%). The results show the ability of these methods to handle chromatic shadows (average η=87.7%), foreground–background camouflage (average ξ=89.5%), foreground–shadow camouflage (average η=79.3%), shadow color blending (average η=84.2%), dark surfaces (average ξ=77.9%), and shadow overlap (average ξ=79.2%).

Figure 4 gives an overall picture of all the problematic situations together with the recommended methods for dealing with them. For a simple problem, such as achromatic shadows, color based methods can be used. Texture based and edge based methods can be used for chromatic shadows, shadow color blending, and multiple shadows. Light-direction based methods can provide accurate results in situations with shadow overlap if the light direction can be estimated correctly. Block based methods perform better than other methods when there is foreground–shadow camouflage. For the remaining cases, namely, foreground–background camouflage, non-textured surfaces, and dark surfaces, segmentation based methods are the best choice for detecting shadows.

7 Conclusions

This paper highlighted possible problematic situations for moving shadow detection and reviewed recent work addressing these problems. A new classification was provided that groups the existing methods into seven main classes, namely, shape based, light-direction based, color based, edge based, texture based, segmentation based, and block based methods. Quantitative metrics were used to evaluate the overall performance of each class on common moving shadow detection benchmark datasets consisting of twelve video sequences. The overall performance evaluation of each category, with respect to the problematic situations, showed that each category has its own strengths and weaknesses.

Shape based methods are mainly designed to detect shadows in specific applications. They can provide accurate results when all the geometric features and their assumptions are valid. In addition, these methods can be used to detect the shadows of foreground objects in still images, as they do not depend on the background. However, their applications are limited and they may fail when the geometric relationships change. Light-direction based methods rely strongly on the shadow/light direction to detect shadows. These methods can provide good results when there is a single strong light source in the scene. However, they are not reliable in other situations, especially when there are multiple light sources in the scene.

Color based methods perform well for simple achromatic shadows, non-textured surfaces, and multiple shadows in the scene. However, they may fail in other problematic shadow situations and in the presence of image pixel noise. Edge based methods can provide good shadow detection results in situations with achromatic shadows, chromatic shadows, and multiple shadows; however, they may fail in other situations. Texture based methods can be selected as the best choice among pixel-level analysis methods for detecting the shadows of moving objects. They can provide reasonable results in situations with chromatic shadows, camouflage, and multiple shadows.

Block based methods perform better than pixel-level analysis methods for non-textured surfaces and dark surfaces, and can be selected to detect shadows at low levels of illumination. Compared to all other methods, segmentation based methods provided the best results in almost all situations and can be selected as the best way of detecting moving shadows in videos.

This survey can help researchers to explore various contributions of shadow detection methods with their strengths and weaknesses and can guide them to select a proper technique for a specific application.

Fig.4 Examples of problematic situations and recommended methods to deal with them.

Acknowledgements

This work is supported by the Western Sydney University Postgraduate Research Award Program.

[1]Sanin,A.;Sanderson,C.;Lovell,B.C.Improved shadow removal for robust person tracking in surveillance scenarios.In:Proceedings of the 20th International Conference on Pattern Recognition,141–144,2010.

[2]Asaidi,H.; Aarab,A.; Bellouki,M.Shadow elimination and vehicles classification approaches in traffic video surveillance context.Journal of Visual Languages and Computing Vol.25,No.4,333–345, 2014.

[3]Meher,S.K.;Murty,M.N.Efficient method of moving shadow detection and vehicle classification. AEU-International Journal of Electronics and Communications Vol.67,No.8,665–670,2013.

[4]Johansson,B.;Wiklund,J.;Forssén,P.-E.;Granlund,G.Combining shadow detection and simulation for estimation of vehicle size and position.Pattern Recognition Letters Vol.30,No.8,751–759,2009.

[5]Candamo,J.;Shreve,M.;Goldgof,D.B.;Sapper,D.B.;Kasturi,R.Understanding transit scenes:A survey on human behavior-recognition algorithms.IEEE Transactions on Intelligent Transportation Systems Vol.11,No.1,206–224,2010.

[6]Gandhi,T.;Chang,R.;Trivedi,M.M.Video and seismic sensor-based structural health monitoring: Framework,algorithms,and implementation.IEEE Transactions on Intelligent Transportation Systems Vol.8,No.2,169–180,2007.

[7]Zhang,R.;Tsai,P.-S.;Cryer,J.E.;Shah,M. Shape-from-shading:A survey.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.21,No. 8,690–706,1999.

[8]Norman,J.F.;Lee,Y.;Phillips,F.;Norman,H.F.; Jennings,L.R.;McBride,T.R.The perception of 3-D shape from shadows cast onto curved surfaces.Acta Psychologica Vol.131,No.1,1–11,2009.

[9]Ok,A.O.Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts.ISPRS Journal of Photogrammetry and Remote Sensing Vol.86,21–40,2013.

[10]Sato,I.;Sato,Y.;Ikeuchi,K.Illumination from shadows.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.25,No.3,290–300,2003.

[11]Liu,Y.;Gevers,T.;Li,X.Estimation of sunlight direction using 3D object models.IEEE Transactions on Image Processing Vol.24,No.3,932–942,2015.

[12]Wu,L.;Cao,X.;Foroosh,H.Camera calibration and geo-location estimation from two shadow trajectories. Computer Vision and Image Understanding Vol.114, No.8,915–927,2010.

[13]Iiyama,M.;Hamada,K.;Kakusho,K.;Minoh,M. Usage of needle maps and shadows to overcome depth edges in depth map reconstruction.In:Proceedings of the 19th International Conference on Pattern Recognition,1–4,2008.

[14]Levine,M.D.;Bhattacharyya,J.Removing shadows. Pattern Recognition Letters Vol.26,No.3,251–265, 2005.

[15]Deng,W.;Hu,J.;Guo,J.;Cai,W.;Feng,D.Robust, accurate and efficient face recognition from a single training image:A uniform pursuit approach.Pattern Recognition Vol.43,No.5,1748–1762,2010.

[16]Cai,X.-H.;Jia,Y.-T.;Wang,X.;Hu,S.-M.;Martin, R.R.Rendering soft shadows using multilayered shadow fins.Computer Graphics Forum Vol.25,No. 1,15–28,2006.

[17]Petrović,L.;Fujito,B.;Williams,L.;Finkelstein,A.Shadows for cel animation.In:Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques,511–516,2000.

[18]Hu,S.-M.;Zhang,F.-L.;Wang,M.;Martin,R.R.;Wang,J.PatchNet:A patch-based image representation for interactive library-driven image editing.ACM Transactions on Graphics Vol.32,No.6,Article No.196,2013.

[19]Lu,S.-P.;Zhang,S.-H.;Wei,J.;Hu,S.-M.;Martin, R.R.Timeline editing of objects in video.IEEE Transactions on Visualization and Computer Graphics Vol.19,No.7,1218–1227,2013.

[20]Hu,S.-M.;Chen,T.;Xu,K.;Cheng,M.-M.;Martin, R.R.Internet visual media processing:A survey with graphics and vision applications.The Visual Computer Vol.29,No.5,393–405,2013.

[21]Datasets with ground truths.Available at http://arma.sourceforge.net/shadows/.

[22]Russell,A.;Zou,J.J.Moving shadow detection based on spatial-temporal constancy.In:Proceedings of the 7th International Conference on Signal Processing and Communication Systems,1–6,2013.

[23]Sanin,A.;Sanderson,C.;Lovell,B.C.Shadow detection:A survey and comparative evaluation of recent methods.Pattern Recognition Vol.45,No.4, 1684–1695,2012.

[24]Kubelka,P.New contributions to the optics of intensely light-scattering materials.Part I.Journal of the Optical Society of America Vol.38,No.5,448–457, 1948.

[25]Phong,B.T.Illumination for computer generated pictures.Communications of the ACM Vol.18,No. 6,311–317,1975.

[26]Change detection datasets with ground truths. Available at http://www.changedetection.net/.

[27]Cvrr-aton datasets with ground truths.Available at http://cvrr.ucsd.edu/aton/shadow/.

[28]FgSh benchmark datasets with ground truths.Available at http://web.eee.sztaki.hu/~bcsaba/FgShBenchmark.htm.

[29]Prati,A.;Mikic,I.;Trivedi,M.M.;Cucchiara,R.Detecting moving shadows:Algorithms and evaluation.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.25,No.7,918–923,2003.

[30]Al-Najdawi,N.;Bez,H.E.;Singhai,J.;Edirisinghe, E.A.A survey of cast shadow detection algorithms. Pattern Recognition Letters Vol.33,No.6,752–764, 2012.

[31]Chen,C.-C.;Aggarwal,J.K.Human shadow removal with unknown light source.In:Proceedings of the 20th International Conference on Pattern Recognition, 2407–2410,2010.

[32]Yoneyama,A.;Yeh,C.-H.;Kuo,C.-C.J.Robust vehicle and traffic information extraction for highway surveillance.EURASIP Journal on Applied Signal Processing Vol.2005,2305–2321,2005.

[33]Hsieh,J.-W.;Hu,W.-F.;Chang,C.-J.;Chen,Y.-S.Shadow elimination for effective moving object detection by Gaussian shadow modeling.Image and Vision Computing Vol.21,No.6,505–516,2003.

[34]Bi,S.;Liang,D.;Shen,X.;Wang,Q.Human cast shadow elimination method based on orientation information measures.In:Proceedings of IEEE International Conference on Automation and Logistics,1567–1571,2007.

[35]Fang,L.Z.;Qiong,W.Y.;Sheng,Y.Z.A method to segment moving vehicle cast shadow based on wavelet transform.Pattern Recognition Letters Vol.29,No.16, 2182–2188,2008.

[36]Nadimi,S.;Bhanu,B.Moving shadow detection using a physics-based approach.In:Proceedings of the 16th International Conference on Pattern Recognition,Vol. 2,701–704,2002.

[37]Wang,J.M.;Chung,Y.C.;Chang,C.L.;Chen,S. W.Shadow detection and removal for traffic images. In:Proceedings of IEEE International Conference on Networking,Sensing and Control,Vol.1,649–654, 2004.

[38]Nicolas,H.;Pinel,J.M.Joint moving cast shadows segmentation and light source detection in video sequences.Signal Processing:Image Communication Vol.21,No.1,22–43,2006.

[39]Joshi,A.J.;Papanikolopoulos,N.P.Learning to detect moving shadows in dynamic environments. IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.30,No.11,2055–2063,2008.

[40]Russell,M.;Zou,J.J.;Fang,G.Real-time vehicle shadow detection.Electronics Letters Vol.51,No.16, 1253–1255,2015.

[41]Yang,M.-T.;Lo,K.-H.;Chiang,C.-C.;Tai,W.-K. Moving cast shadow detection by exploiting multiple cues.IET Image Processing Vol.2,No.2,95–104, 2008.

[42]Amato,A.;Mozerov,M.G.;Bagdanov,A.D.;González,J.Accurate moving cast shadow suppression based on local color constancy detection.IEEE Transactions on Image Processing Vol.20,No.10,2954–2966,2011.

[43]Cucchiara,R.;Grana,C.;Piccardi,M.;Prati,A. Detecting moving objects,ghosts and shadows in video streams.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.25,No.10,1337–1342,2003.

[44]Salvador,E.;Cavallaro,A.;Ebrahimi,T.Cast shadow segmentation using invariant color features.Computer Vision and Image Understanding Vol.95,No.2,238–259,2004.

[45]Stander,J.;Mech,R.;Ostermann,J.Detection of moving cast shadows for object segmentation.IEEE Transactions on Multimedia Vol.1,No.1,65–76,1999.

[46]Cucchiara,R.;Grana,C.;Neri,G.;Piccardi,M.; Prati,A.The Sakbot system for moving object detection and tracking.In:Video-Based Surveillance Systems.Remagnino,P.;Jones,G.A.;Paragios,N.; Regazzoni,C.S.Eds.Springer US,145–157,2002.

[47]Haritaoglu,I.;Harwood,D.;Davis,L.S.W4: Real-time surveillance of people and their activities. IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.22,No.8,809–830,2000.

[48]Mikic,I.;Cosman,P.C.;Kogut,G.T.;Trivedi,M.M. Moving shadow and object detection in traffic scenes. In:Proceedings of the 15th International Conference on Pattern Recognition,Vol.1,321–324,2000.

[49]Melli,R.;Prati,A.;Cucchiara,R.;de Cock,L. Predictive and probabilistic tracking to detect stopped vehicles.In:Proceedings of the 17th IEEE Workshops on Application of Computer Vision,Vol.1,388–393, 2005.

[50]Cavallaro, A.; Salvador, E.; Ebrahimi, T. Shadow-aware object-based video processing.IEE Proceedings—Vision,Image and Signal Processing Vol.152,No.4,398–406,2005.

[51]Lo,K.-H.; Yang,M.-T.Shadow detection by integrating multiple features.In:Proceedings of the 18th International Conference on Pattern Recognition, Vol.1,743–746,2006.

[52]Toth,D.;Stuke,I.;Wagner,A.;Aach,T.Detection of moving shadows using mean shift clustering and a significance test.In: Proceedings of the 17th International Conference on Pattern Recognition,Vol. 4,260–263,2004.

[53]Guan,Y.-P.Spatio-temporal motion-based foreground segmentation and shadow suppression.IET Computer Vision Vol.4,No.1,50–60,2010.

[54]Sun,B.;Li,S.Moving cast shadow detection of vehicle using combined color models.In:Proceedings of Chinese Conference on Pattern Recognition,1–5, 2010.

[55]Cucchiara,R.;Grana,C.;Piccardi,M.;Prati, A.;Sirotti,S.Improving shadow suppression in moving object detection with HSV color information. In:Proceedings of IEEE Intelligent Transportation Systems,334–339,2001.

[56]Ishida,S.;Fukui,S.;Iwahori,Y.;Bhuyan,M. K.;Woodham,R.J.Shadow model construction with features robust to illumination changes.In: Proceedings of the World Congress on Engineering, Vol.3,2013.

[57]Dai,J.;Qi,M.;Wang,J.;Dai,J.;Kong,J.Robust and accurate moving shadow detection based on multiple features fusion.Optics&Laser Technology Vol.54, 232–241,2013.

[58]Horprasert,T.;Harwood,D.;Davis,L.S.A statistical approach for real-time robust background subtraction and shadow detection.In: Proceedings of IEEE International Conference on Computer Vision,Vol.99, 1–19,1999.

[59]Choi,J.;Yoo,Y.J.;Choi,J.Y.Adaptive shadow estimator for removing shadow of moving object. Computer Vision and Image Understanding Vol.114, No.9,1017–1029,2010.

[60]Song,K.-T.;Tai,J.-C.Image-based traffic monitoring with shadow suppression.Proceedings of the IEEE Vol. 95,No.2,413–426,2007.

[61]Wang,J.;Wang,Y.;Jiang,M.;Yan,X.;Song,M.Moving cast shadow detection using online sub-scene shadow modeling and object inner-edges analysis.Journal of Visual Communication and Image Representation Vol.25,No.5,978–993,2014.

[62]Huang,C.H.;Wu,R.C.An online learning method for shadow detection.In:Proceedings of the 4th Pacific-Rim Symposium on Image and Video Technology,145–150,2010.

[63]Kumar,P.;Sengupta,K.;Lee,A.A comparative study of different color spaces for foreground and shadow detection for traffic monitoring system.In: Proceedings of the IEEE 5th International Conference on Intelligent Transportation Systems,100–105,2002.

[64]Shan,Y.;Yang,F.;Wang,R.Color space selection for moving shadow elimination.In:Proceedings of the 4th International Conference on Image and Graphics, 496–501,2007.

[65]Subramanyam,M.;Nallaperumal,K.;Subban,R.; Pasupathi,P.;Shashikala,D.;Kumar,S.;Devi,G. S.A study and analysis of colour space selection for insignificant shadow detection.International Journal of Engineering Research and Technology(IJERT)Vol. 2,No.12,2476–2480,2013.

[66]Ishida,S.;Fukui,S.;Iwahori,Y.;Bhuyan,M.K.; Woodham,R.J.Shadow detection by three shadow models with features robust to illumination changes. Procedia Computer Science Vol.35,1219–1228,2014.

[67]Xu,D.;Li,X.;Liu,Z.;Yuan,Y.Cast shadow detection in video segmentation.Pattern Recognition Letters Vol.26,No.1,91–99,2005.

[68]Chien,S.-Y.;Ma,S.-Y.;Chen,L.-G.Efficient moving object segmentation algorithm using background registration technique.IEEE Transactions on Circuits and Systems for Video Technology Vol.12,No.7,577–586,2002.

[69] Zhang, W.; Fang, X. Z.; Yang, X. K.; Wu, Q. M. J. Moving cast shadows detection using ratio edge. IEEE Transactions on Multimedia Vol. 9, No. 6, 1202–1214, 2007.

[70] Martel-Brisson, N.; Zaccarin, A. Moving cast shadow detection from a Gaussian mixture shadow model. In: Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Vol. 2, 643–648, 2005.

[71] Xiao, M.; Han, C.-Z.; Zhang, L. Moving shadow detection and removal for traffic sequences. International Journal of Automation and Computing Vol. 4, No. 1, 38–46, 2007.

[72] Wu, Y.-M.; Ye, X.-Q.; Gu, W.-K. A shadow handler in traffic monitoring system. In: Proceedings of IEEE 55th Vehicular Technology Conference, Vol. 1, 303–307, 2002.

[73] Panicker, J.; Wilscy, M. Detection of moving cast shadows using edge information. In: Proceedings of the 2nd International Conference on Computer and Automation Engineering, Vol. 5, 817–821, 2010.

[74] Huang, S.; Liu, B.; Wang, W. Moving shadow detection based on Susan algorithm. In: Proceedings of IEEE International Conference on Computer Science and Automation Engineering, Vol. 3, 16–20, 2011.

[75] ShabaniNia, E.; Naghsh-Nilchi, A. R. Robust watershed segmentation of moving shadows using wavelets. In: Proceedings of the 8th Iranian Conference on Machine Vision and Image Processing, 381–386, 2013.

[76] Huang, J.-B.; Chen, C.-S. Moving cast shadow detection using physics-based features. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2310–2317, 2009.

[77] Leone, A.; Distante, C. Shadow detection for moving objects based on texture analysis. Pattern Recognition Vol. 40, No. 4, 1222–1233, 2007.

[78] Smith, S. M.; Brady, J. M. SUSAN—A new approach to low level image processing. International Journal of Computer Vision Vol. 23, No. 1, 45–78, 1997.

[79] Qin, R.; Liao, S.; Lei, Z.; Li, S. Z. Moving cast shadow removal based on local descriptors. In: Proceedings of the 20th International Conference on Pattern Recognition, 1377–1380, 2010.

[80] Martel-Brisson, N.; Zaccarin, A. Kernel-based learning of cast shadows from a physical model of light sources and surfaces for low-level segmentation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 1–8, 2008.

[81] Martel-Brisson, N.; Zaccarin, A. Learning and removing cast shadows through a multidistribution approach. IEEE Transactions on Pattern Analysis and Machine Intelligence Vol. 29, No. 7, 1133–1146, 2007.

[82] Liu, Y.; Adjeroh, D. A statistical approach for shadow detection using spatio-temporal contexts. In: Proceedings of IEEE International Conference on Image Processing, 3457–3460, 2010.

[83] Huang, J.-B.; Chen, C.-S. A physical approach to moving cast shadow detection. In: Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing, 769–772, 2009.

[84] Khare, M.; Srivastava, R. K.; Khare, A. Moving shadow detection and removal—A wavelet transform based approach. IET Computer Vision Vol. 8, No. 6, 701–717, 2014.

[85] Jung, C. R. Efficient background subtraction and shadow removal for monochromatic video sequences. IEEE Transactions on Multimedia Vol. 11, No. 3, 571–577, 2009.

[86] Khare, M.; Srivastava, R. K.; Khare, A. Daubechies complex wavelet-based computer vision applications. In: Recent Developments in Biometrics and Video Processing Techniques. Srivastava, R.; Singh, S. K.; Shukla, K. K. Eds. IGI Global, 138–155, 2013.

[87] Dai, J.; Han, D.; Zhao, X. Effective moving shadow detection using statistical discriminant model. Optik—International Journal for Light and Electron Optics Vol. 126, No. 24, 5398–5406, 2015.

[88] Bullkich, E.; Ilan, I.; Moshe, Y.; Hel-Or, Y.; Hel-Or, H. Moving shadow detection by nonlinear tone-mapping. In: Proceedings of the 19th International Conference on Systems, Signals and Image Processing, 146–149, 2012.

[89] Huerta, I.; Holte, M. B.; Moeslund, T. B.; Gonzàlez, J. Chromatic shadow detection and tracking for moving foreground segmentation. Image and Vision Computing Vol. 41, 42–53, 2015.

[90] Javed, O.; Shah, M. Tracking and object classification for automated surveillance. In: Lecture Notes in Computer Science, Vol. 2353. Heyden, A.; Sparr, G.; Nielsen, M.; Johansen, P. Eds. Springer Berlin Heidelberg, 343–357, 2002.

[91] Cucchiara, R.; Grana, C.; Piccardi, M.; Prati, A. Detecting objects, shadows and ghosts in video streams by exploiting color and motion information. In: Proceedings of the 11th International Conference on Image Analysis and Processing, 360–365, 2001.

[92] Zhang, W.; Fang, X. S.; Xu, Y. Detection of moving cast shadows using image orthogonal transform. In: Proceedings of the 18th International Conference on Pattern Recognition, Vol. 1, 626–629, 2006.

[93] Celik, H.; Ortigosa, A. M.; Hanjalic, A.; Hendriks, E. A. Autonomous and adaptive learning of shadows for surveillance. In: Proceedings of the 9th International Workshop on Image Analysis for Multimedia Interactive Services, 59–62, 2008.

[94] Benedek, C.; Szirányi, T. Shadow detection in digital images and videos. In: Computational Photography: Methods and Applications. Lukac, R. Ed. Boca Raton, FL, USA: CRC Press, 283–312, 2011.

[95] Cogun, F.; Cetin, A. Moving shadow detection in video using cepstrum. International Journal of Advanced Robotic Systems Vol. 10, DOI: 10.5772/52942, 2013.

[96] Dai, J.; Han, D. Region-based moving shadow detection using affinity propagation. International Journal of Signal Processing, Image Processing and Pattern Recognition Vol. 8, No. 3, 65–74, 2015.

Mosin Russell received his Master of Engineering (Honours) degree from Western Sydney University. He is currently pursuing his Ph.D. degree in computer vision. His research interests include digital image processing, computer vision applications, machine learning, and computer graphics.

Ju Jia Zou received his Ph.D. degree in digital image processing from the University of Sydney in 2001. He was a research associate and then an Australian Postdoctoral Fellow at the University of Sydney from 2000 to 2003, working on projects funded by the Australian Research Council. He is currently a senior lecturer at the University of Western Sydney. His research interests include digital image processing, pattern recognition, signal processing, and their applications to structural health monitoring and geotechnical characterisation.

Gu Fang received his Ph.D. degree from the University of Sydney in Australia. He is an associate professor in mechatronics engineering at Western Sydney University and the director of academic program (DAP) in engineering postgraduate courses. His main research interests include computer vision in robotic applications, neural network and fuzzy logic control in robotics, robotic welding, and particle swarm optimisation in robotics.

Open Access: The articles published in this journal are distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Other papers from this open access journal are available free of charge from http://www.springer.com/journal/41095. To submit a manuscript, please go to https://www.editorialmanager.com/cvmj.

1 School of Computing, Engineering and Mathematics, Western Sydney University, Locked Bag 1797, Penrith, NSW 2751, Australia. E-mail: M. Russell, a.russell@westernsydney.edu.au; J. J. Zou, j.zou@westernsydney.edu.au; G. Fang, g.fang@westernsydney.edu.au.

Manuscript received: 2016-04-06; accepted: 2016-07-20

Computational Visual Media, 2016, Issue 3