Real-Time Color Enhancement Method Used for Intelligent Mobile Terminals

Jin Hui

(Solution Marketing Department of Product Marketing System, ZTE Corporation, Shenzhen 518057, P. R. China)

Abstract: In certain environments and under some conditions, the video images captured by intelligent mobile video phones appear dark, and their colors are not bright or saturated enough. This paper presents an adaptive method that enhances the brightness, visibility and color performance of video images according to the properties and function parameters of the specific hardware. Experimental results show that the method can enhance the colors and contrast of video images, based on estimated quality feature values of each frame, without using an extra Digital Signal Processor (DSP).

Being a core service of 3G systems, mobile video call service has attracted widespread interest. The quality of video images during a video call has a direct impact on the popularization and extension of the service, which may trigger a wave of video applications in the future.

In the video processing field, one main objective of video enhancement is to improve the subjective visual effect.

Equipment manufacturers often use color enhancement chips, function curves or matrix conversion methods to enhance images. The enhancement parameters discussed in References [1, 2] are fixed values, which are averages estimated over video sequences of various scenes. They cannot adapt to different situations. To address this problem, some researchers have proposed a wavelet transform-based color image enhancement algorithm that works in a color space selected according to the sensitivity of human eyes to brightness and color as well as the characteristics of human visual psychology. References [3-6] discuss color correction of static images, and Reference [7] adopts smooth parameter transitions between frames in a video sequence.

Traditional enhancement methods have the following two problems:

(1) The enhancement parameter is not optimal for all scenes. For example, a curve parameter that improves the contrast of a natural scene may damage the visual effect of image sequences of a human face.

(2) Color reproduction is hard to achieve because traditional color reproduction techniques act on the entire image rather than on the colors of selected objects.

For mobile terminals, people expect the best subjective quality enhancement at minimum cost and lowest power consumption. The method presented here has been verified and applied on intelligent TD-SCDMA terminals. Running on the mobile terminal platform, it addresses the color problems that affect video call image quality in real time without adding extra hardware or overhead, and it enhances brightness and visibility in low-illumination scenes, thus offering users a better video call experience.

1 ADLE

Being portable, mobile terminals are often used to capture video images in entertainment venues that are dark or poorly lit, such as coffee shops. Because of the poor light, the captured images or videos are often difficult to make out. Moreover, when the captured video sequences are viewed on the Liquid Crystal Display (LCD) of a mobile terminal, the grey levels of the original sequence cannot be reproduced because of the special optoelectronic characteristics of liquid crystal, that is, the non-linear relationship between input and output. Therefore, visibility may be reduced further.

This paper proposes the Adaptive Dark-Lightness Enhancement (ADLE) method to solve these problems.

1.1 Luminance Enhancement Method

Because of the Gamma characteristic of the hardware, Gamma correction can be used to enhance the presentation of images. For a dim environment, a Gamma value less than 1 can be set to enhance brightness and visibility; for normal lighting scenes, a Gamma value around 1 can be set to compensate for the brightness loss caused by the darkening effect of Gamma correction. If the surroundings are bright or even washed out, the visual effect of an image is reduced by a lack of contrast and depth. In this case, Gamma can be set greater than 1 to stretch the image's dynamic range and enhance its sense of depth and the visibility of details.

The Gamma correction algorithm normally processes the three primary colors, i.e. Red, Green and Blue (RGB), separately. Experiments show that directly correcting the Y component of the YUV color space produces a display effect similar to correction in RGB space, but computes faster. In mobile terminals, video sequences use the YUV color space before and after coding/decoding. To enhance the visibility of images in dark scenes, we can therefore process the Y component directly.

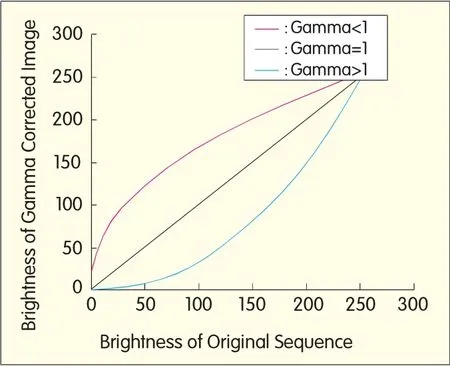

Figure 1 illustrates three Gamma correction curves, where the X axis is the brightness of the original sequence and the Y axis is the brightness of the Gamma-corrected image. The curves correspond to three cases, i.e. Gamma<1, Gamma=1 and Gamma>1, which indicate increased, unchanged and decreased brightness respectively.
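The three cases can be checked numerically with a minimal sketch. It assumes the standard power-law form of Gamma correction on 8-bit luma, out = 255 × (in / 255)^Gamma, which the text does not spell out; the function names are illustrative only.

```python
def gamma_correct_luma(y, gamma):
    """Map one 8-bit luma value through out = 255 * (y / 255) ** gamma.

    The power-law form and the 0..255 normalization are assumptions;
    the article only names the Gamma values.
    """
    if not 0 <= y <= 255:
        raise ValueError("luma must be in 0..255")
    return round(255.0 * (y / 255.0) ** gamma)


def gamma_correct_plane(y_plane, gamma):
    """Correct a whole Y plane (flat list of ints) via a 256-entry table."""
    table = [gamma_correct_luma(v, gamma) for v in range(256)]
    return [table[v] for v in y_plane]


if __name__ == "__main__":
    # Gamma < 1 brightens, Gamma = 1 leaves the value unchanged,
    # Gamma > 1 darkens a mid-dark sample.
    for g in (0.7, 1.0, 1.5):
        print(g, gamma_correct_luma(64, g))
```

Because only the Y plane is touched, the chroma planes pass through unchanged, which is the speed advantage over per-channel RGB correction mentioned above.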

1.2 Scene Detection Policy

In Gamma correction, if a single fixed Gamma value is used to correct images that are too dark or too bright, the following two problems may arise:

(1) It is hard to accurately correct all images captured in various scenes. For example, a single Gamma value cannot ensure good correction for images captured in both dark and dim scenes.

(2) Correction with a fixed Gamma value is prone to step changes for scenes near the threshold, which leads to flicker. For example, for images near the threshold between a dark scene and a normal lighting scene, a small change may cause the corrected images to change dramatically as the processing switches from dark scene to normal lighting scene. (This phenomenon is especially evident in the transition from a dark scene to a normal lighting scene.)

To address these problems, we develop a scene detection mechanism and design 11 continuous Gamma values. The Gamma value is set according to the darkness of the scene and the scene detection policies, which produces a smooth transition band when the darkness changes. Therefore, no flicker occurs. The mechanism works as follows:

First, the mechanism judges whether the scene has normal brightness. If the number of pixels whose brightness is less than 100 is no more than 7/8 of the pixels of the entire image, the scene is a normal brightness scene and the Gamma value is set to 0.9. Otherwise, the scene is dark.

Second, the mechanism determines the degree of darkness. The criterion is the number of pixels whose brightness is no more than a brightness scale (GEscale), which starts at 50 and increases in steps of 5. If this number is greater than 7/8 of the total pixels of the image, the corresponding Gamma value (which starts at 0.7 and increases in steps of 0.02) is set.

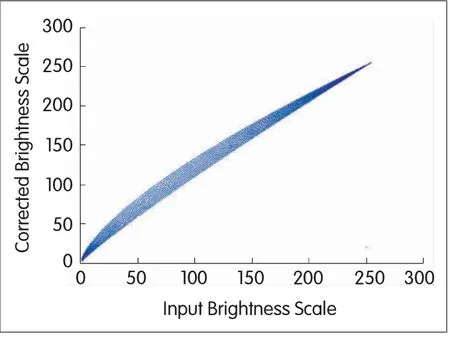

Third, it constructs a Look-Up Table (LUT) for the 11 Gamma values. The mechanism uses the 11 Gamma values (ranging from 0.7 to 0.9 in steps of 0.02) to compute the corrected brightness for every brightness scale (0-255). Figure 2 shows the mapping curves for the 11 Gamma values, where the X axis is the input brightness scale and the Y axis is the corrected brightness scale. (As very bright scenes rarely occur in actual applications, they are not considered here.)

From Figure 2, it can be seen that the corrected brightness changes gradually from one Gamma value to the next (for a given input, a smaller Gamma value gives a brighter output). The correction of continuously changing scenes is therefore also a gradual process, which avoids abrupt changes.
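The three steps of the mechanism can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the power-law LUT form, the pairing of brightness scales 50, 55, ..., 100 with Gamma values 0.70, 0.72, ..., 0.90, and all function and constant names are assumptions made for the example.

```python
# Sketch of the ADLE steps (all names are illustrative).
LEVELS = 11
GAMMAS = [round(0.70 + 0.02 * i, 2) for i in range(LEVELS)]  # 0.70 .. 0.90
SCALES = [50 + 5 * i for i in range(LEVELS)]                 # 50 .. 100 (assumed pairing)
NORMAL_GAMMA = 0.9


def build_lut(gamma):
    """256-entry table for out = 255 * (in / 255) ** gamma (assumed form)."""
    return [round(255.0 * (v / 255.0) ** gamma) for v in range(256)]


# Step 3: one precomputed table per Gamma value.
LUTS = {g: build_lut(g) for g in GAMMAS}


def select_gamma(y_plane):
    """Pick a Gamma value for one frame from its luma samples (0..255)."""
    total = len(y_plane)
    if total == 0:
        raise ValueError("empty frame")
    limit = total * 7 / 8

    # Step 1: normal brightness if at most 7/8 of the pixels are below 100.
    if sum(1 for v in y_plane if v < 100) <= limit:
        return NORMAL_GAMMA

    # Step 2: the first brightness scale that covers more than 7/8 of the
    # pixels decides the level; darker frames match earlier and get a
    # smaller Gamma value.
    for scale, gamma in zip(SCALES, GAMMAS):
        if sum(1 for v in y_plane if v <= scale) > limit:
            return gamma
    return NORMAL_GAMMA  # not reached for a dark frame; kept as a safeguard


def enhance_frame(y_plane):
    """Correct the luma plane through the table chosen for this frame."""
    lut = LUTS[select_gamma(y_plane)]
    return [lut[v] for v in y_plane]
```

Since neighbouring darkness levels differ by only 0.02 in Gamma, a frame that crosses one brightness scale moves to an adjacent table rather than jumping between two very different corrections, which is the property the text relies on to avoid flicker.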

▲Figure 1. Gamma correction curves.

2 Color Enhancement

2.1 Color Cast

During video calls, the video capture system of an intelligent handset may suffer from color cast, which degrades the subjective impression of a video image. Figure 3 shows two pictures of a human face taken in the same environment: the left one is taken with a Single Lens Reflex camera and the right one with the video capture system of the ZTE U980 (for TD-SCDMA) smart handset. A comparison shows that the colors of the left picture are lifelike, which means the camera has strong color reproduction capability, while the overall color temperature of the right picture is a little low and the image is shifted towards red. Color cast has various causes, including:

(1) The capture environment, such as photographic materials, exposure and the color temperature of the light source.

(2) Transmission, conversion and editing between software, as well as differences in color spaces. Compared with human eyes, a machine records color temperature more faithfully. For example, it can register colors at high color temperature (e.g. cyan), causing shadowy areas to shift towards cyan or blue. In contrast, human eyes adapt to the on-site color temperature and the human brain compensates for color cast. For example, under fluorescent light, people see only white and do not notice the blue-green tint. Besides, human eyes are insensitive to ultraviolet and cannot perceive the colors of high color temperature on a cloudy or snowy day. This is known as visual persistence.

The purpose of correcting color cast is to make the color information recorded by machines the same as what human eyes see. In other words, it corrects the colors that machines record but human eyes do not notice.

▲Figure 2. Curves of Gamma values from 0.7 to 0.9.

(3) Differences in the white balance of monitors. Color cast may occur when an image without color cast is displayed on a non-standard monitor. The principle of automatic white balancing is as follows: the average color value of a scene is assumed to lie within a specific range; if the measured result is outside this range, the related parameters of the capture system are adjusted until the measured value falls within the range. This processing can be performed in YUV space or RGB space.
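The white balancing principle can be illustrated in YUV space with a small sketch. It is a simplification under assumptions that are not in the text: the expected range is taken as a band around the neutral chroma value 128, the tolerance of 4 is arbitrary, and a one-shot offset applied to the captured planes stands in for the adjustment of capture-system parameters. All names are hypothetical.

```python
def chroma_offsets(u_plane, v_plane, neutral=128, tolerance=4):
    """Return (du, dv) that bring the mean chroma back to the neutral value.

    A component whose mean already lies within +/- tolerance of neutral
    is left alone (offset 0). Both neutral and tolerance are assumed values.
    """
    def offset(plane):
        mean = sum(plane) / len(plane)
        if abs(mean - neutral) <= tolerance:
            return 0
        return round(neutral - mean)

    return offset(u_plane), offset(v_plane)


def shift_plane(plane, delta):
    """Add delta to every sample, clamped to the 8-bit range."""
    return [max(0, min(255, v + delta)) for v in plane]


if __name__ == "__main__":
    u = [120, 118, 122, 120]   # mean below neutral
    v = [140, 142, 138, 140]   # mean above neutral (reddish cast)
    du, dv = chroma_offsets(u, v)
    print(shift_plane(u, du), shift_plane(v, dv))
```

A real capture pipeline would repeat the measurement after each parameter change, as the text describes, rather than apply a single correction.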

2.2 Attenuation of Dynamic Video Capture Range

To analyze the dynamic video capture range of a mobile terminal's camera in indoor, fluorescent-light and normal-lighting environments, we collect some color cards and focus our analysis on the color values of the blocks around the four corners. First, a reference system is selected that performs better than the capture system of the mobile camera in terms of subjective effect. As the video capture system of the Sony Ericsson K800 handset produces a good subjective effect, it is used as the reference for studying the attenuation of the dynamic video capture range of the ZTE U980 in our experiments.

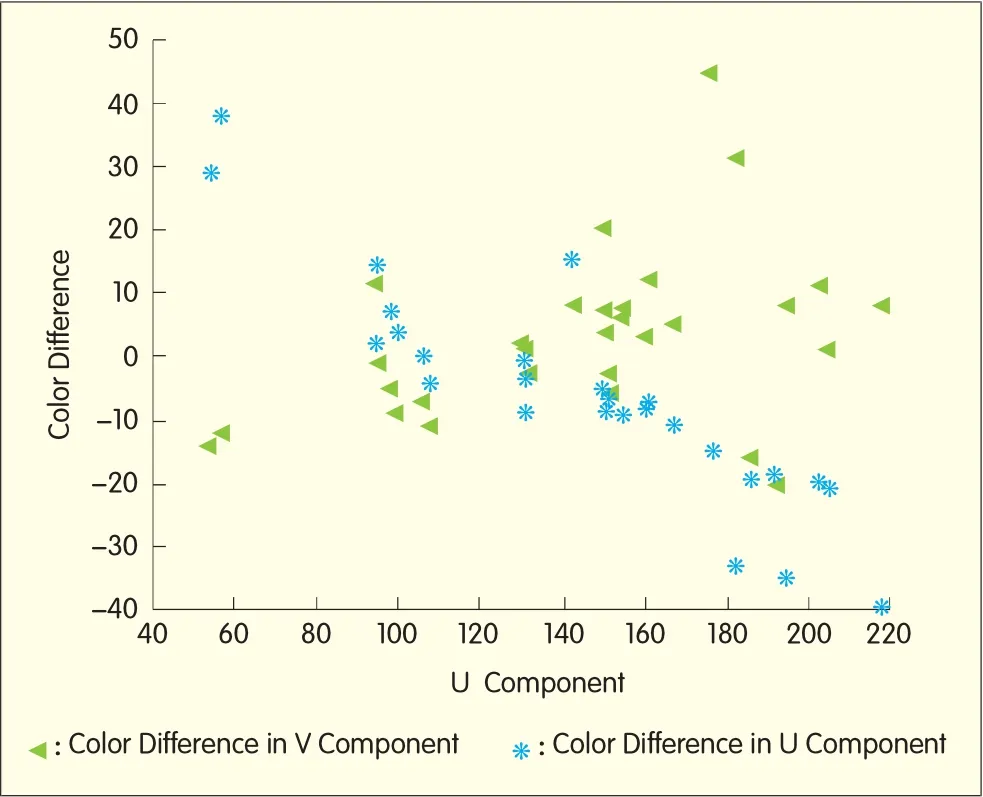

Then the U980 and the K800 are used to capture images of Kodak's color card under the same conditions, and the color blocks of the two captured images are compared for color difference in YUV space. The color difference is expressed as the differences in the U and V components, i.e. the differences between the U/V components of the same color block in the two images. The comparison results are shown in Figure 4 and Figure 5.

Figure 4 shows the differences plotted on the U-component plane, where triangles are color differences in the V component, stars are color differences in the U component, and the X axis is the value of the U component. As can be seen, the color differences in the V component are irregular, but the color differences in the U component decrease progressively. Moreover, when the U component is relatively high or low, the absolute values of the color differences in the U component are large, while in the middle range of U values the absolute values are small.

Figure 5 shows the differences plotted on the V-component plane, where triangles are color differences in the V component, stars are color differences in the U component, and the X axis is the value of the V component. A similar conclusion can be drawn: when the V component is relatively high or low, the absolute values of the color differences in the V component are large, while in the middle range of V values the absolute values are small.

Increasing the number of sampling points yields a more detailed color difference diagram, which allows color correction and enhancement to produce a better effect. Of course, color enhancement should not go too far, and parameters should be adjusted in a continuous manner.

2.3 Color Space Conversion

Humans hold memory colors for some preferred natural objects, and these are often more pleasing than the true colors of the objects. The color reproduction method aims to reproduce satisfying colors of natural objects such as green foliage, blue sky, skin and red apples for consumers and clients, rather than to preserve the actual color information of these objects.

The color space conversion lookup table can be generated in three steps:

·Adjust color components to correct the color cast problem of captured video sequences;

·Adjust the contrast to increase the color's brightness and saturation;

·Render colors of special objects to enhance subjective visual effect.

The parameters of a color space conversion algorithm depend on the hardware and the external light environment. The experimental conditions for the algorithm discussed in this paper are as follows:

·Hardware: ZTE U980, which has a front-facing camera and an LCD monitor;

·External light environment: indoor, fluorescent light and normal lighting.

(1) Color Component Adjustment

▲Figure 3. Color cast of video capture system of mobile terminal.

Hue and saturation are two attributes of a color. Each color is determined by three components, Y, U and V, so color component adjustment involves all three.

◀Figure 4. Differences in U component.

Analysis of images captured by the ZTE U980's front-facing camera shows that the capture sensor is too sensitive to the red component and not sensitive enough to the blue component. Therefore, in YUV space, the Y, U and V components should be adjusted to reduce the red component and strengthen the blue. Here, nonlinear Gamma correction is adopted with the following settings: for the V component, Gamma is set to 1.03; for the U component, Gamma is set to 0.95; and the Y component is adjusted based on linear segments.
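A minimal sketch of the chroma adjustment follows. The Gamma values 0.95 and 1.03 come from the text; applying them as a power law over the full 0..255 range is an assumption, since the normalization is not given, and the names are illustrative.

```python
def component_lut(gamma):
    """256-entry table for out = 255 * (c / 255) ** gamma (assumed form)."""
    return [round(255.0 * (c / 255.0) ** gamma) for c in range(256)]


U_LUT = component_lut(0.95)  # Gamma < 1 raises U, strengthening blue
V_LUT = component_lut(1.03)  # Gamma > 1 lowers V, reducing red


def adjust_chroma(u, v):
    """Map one (U, V) pair through the two component tables."""
    return U_LUT[u], V_LUT[v]


if __name__ == "__main__":
    # A neutral chroma pair moves slightly towards blue and away from red.
    print(adjust_chroma(128, 128))
```

Both tables are built once, so the per-pixel cost is two table reads.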

(2) Contrast Adjustment

The luminance component is adjusted by adjusting the contrast. In the experiments, we use a segmented adjustment method because it can adjust the contrast both globally and locally to improve subjective image quality.

In the case of low-ratio contrast stretching (i.e. brightness less than 80), Gamma is set to 1.03; in the case of high-ratio contrast stretching (i.e. brightness greater than 170), Gamma is set to 0.97; and in other cases (i.e. brightness from 80 to 170), Gamma is set to 0.99.
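The segmented adjustment can be sketched as a single luma table. The thresholds and Gamma values are those given above; the power-law form, the handling of the boundary values 80 and 170, and the function names are assumptions. The text does not say how the segments are joined, so this sketch simply switches Gamma at the thresholds.

```python
def segment_gamma(y):
    """Gamma value for a luma level, following the three brightness ranges."""
    if y < 80:
        return 1.03   # dark range: pushed slightly darker
    if y > 170:
        return 0.97   # bright range: pushed slightly brighter
    return 0.99       # middle range (80..170)


def build_contrast_lut():
    """256-entry luma table, out = 255 * (y / 255) ** segment_gamma(y)."""
    return [round(255.0 * (y / 255.0) ** segment_gamma(y)) for y in range(256)]


CONTRAST_LUT = build_contrast_lut()


def adjust_contrast(y_plane):
    """Apply the segmented table to a flat list of luma samples."""
    return [CONTRAST_LUT[y] for y in y_plane]
```

Darkening the low range while brightening the high range widens the spread between shadows and highlights, which is the contrast stretch the text describes.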

For the specific scene of indoor skin color captured by the ZTE U980 camera, the correction focuses on the skin color, color cast and brightness of images so that they are pleasing to human eyes. The correction is performed in the YUV gamut, mapping the three components Y, U and V separately.

(3) Rendering of Specific Colors

As human eyes have a limited ability to discern color changes, they cannot distinguish two colors with a small chromaticity difference. Only when the chromaticity difference reaches a certain value can human eyes notice it. The smallest chromaticity difference that human eyes can notice is called the Just Noticeable Difference (JND).

The International Commission on Illumination (CIE) chromaticity diagram indicates that the JNDs of human eyes differ at different locations and in different directions. In 1942, David MacAdam et al. conducted experiments to measure the JND at 25 points on the chromaticity diagram and in 5-9 directions at each point. The measured results formed ellipses of different sizes and orientations, which are called MacAdam ellipses. Average human eyes treat the colors within each MacAdam ellipse as the same color.

Based on JND and the MacAdam ellipse, this paper explains its color enhancement algorithm using several preferred colors and gamuts as examples. Under common fluorescent light, we first conduct experiments to measure the central points of the MacAdam ellipses of the preferred color gamuts, including facial skin, red, blue, green, black and yellow. Then we approximate each MacAdam ellipse by a circle and apply enhancement processing to the gamut.

Under our specific experimental environment and based on the experimental values, we measure the central point of the ellipse of each color and adjust the U and V components of the color as follows.

·Facial skin: central point of the ellipse is (105, 150); within a radius of 20, let u=u+3, v=v-6;

·Yellow: central point of the ellipse is (60, 155); within a radius of 15, let u=u-15, v=v+10;

·Red: central point of the ellipse is (120, 165); within a radius of 15, let u=u, v=v+3;

·Hair: central point of the ellipse is (115, 120); within a radius of 10, let u=u-2, v=v-2;

·Green: central point of the ellipse is (99, 92); within a radius of 15, let u=u-8, v=v-15;

·Blue: central point of the ellipse is (150, 112); within a radius of 15, let u=u-2, v=v-2;

·White: central point of the ellipse is (132, 125); within a radius of 10, let u=u+2, v=v-2.
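The adjustments listed above can be sketched as a per-pixel rule in the (U, V) plane. The centers, radii and offsets are the values in the list; everything else is an assumption for illustration: the circle boundary is treated as inclusive, results are clamped to 0..255, and where two circles overlap (for example facial skin and red) the first match in list order wins, since the text does not say how overlaps are resolved.

```python
# (name, center_u, center_v, radius, delta_u, delta_v)
REGIONS = [
    ("facial skin", 105, 150, 20, 3, -6),
    ("yellow", 60, 155, 15, -15, 10),
    ("red", 120, 165, 15, 0, 3),
    ("hair", 115, 120, 10, -2, -2),
    ("green", 99, 92, 15, -8, -15),
    ("blue", 150, 112, 15, -2, -2),
    ("white", 132, 125, 10, 2, -2),
]


def clamp(value):
    """Keep a component inside the 8-bit range."""
    return max(0, min(255, value))


def render_preferred_color(u, v):
    """Shift (u, v) if it falls inside one of the circular regions."""
    for _name, cu, cv, radius, du, dv in REGIONS:
        if (u - cu) ** 2 + (v - cv) ** 2 <= radius ** 2:
            return clamp(u + du), clamp(v + dv)
    return u, v  # outside every region: left unchanged


if __name__ == "__main__":
    print(render_preferred_color(105, 150))  # center of the skin region
    print(render_preferred_color(10, 10))    # outside all regions
```

Because the rule depends only on (U, V), it can be evaluated once for all 256 × 256 chroma pairs and stored as a table, in line with the lookup table approach the paper adopts.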

▶Figure 5. Differences in V component.

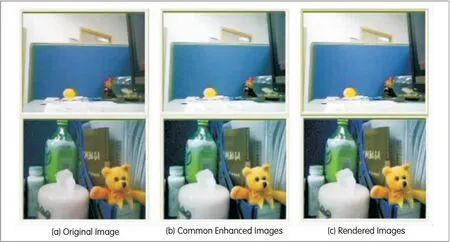

▲Figure 6. Original images vs. enhanced images.

◀Figure 7. Visibility enhancement effect.

▲Figure 8. Color enhancement effect.

Figure 6 compares images before and after correction. The left picture is from the original image sequence captured with the ZTE U980, the middle one is the picture with color enhanced, and the right one is the picture with the blue, green and yellow gamuts enhanced by our proposed algorithm.

Because it adopts the lookup table method, our color space conversion algorithm is efficient enough to meet the processing capability requirement of the ZTE U980, and the visual effect after enhancement is much better than that of the original image. Besides, experiments show that the algorithm is stable and robust.

3 Experiment Results

On a PC with a 3.2 GHz CPU, 1 GB of DDR memory and a VC6.0 Release build, when the ADLE algorithm is used to process video sequences of Quarter Common Intermediate Format (QCIF) images of 176×144 pixels, the average processing time is 0.18 s per frame.

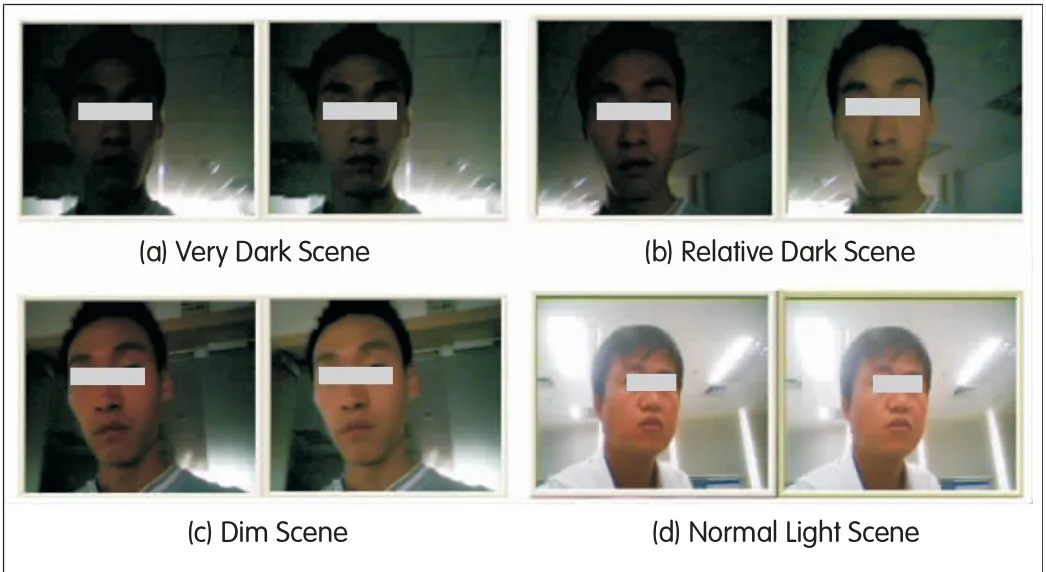

Figure 7 compares original images with enhanced ones in different scenes. As shown in Figure 7(a), (b) and (c), which correspond to very dark, relatively dark and dim scenes respectively, the visibility enhancement is gradual, without any jump or flicker. In the normal lighting scene (Figure 7(d)), the enhanced picture is not washed out. This shows that the algorithm performs adaptive scene detection and enhancement.

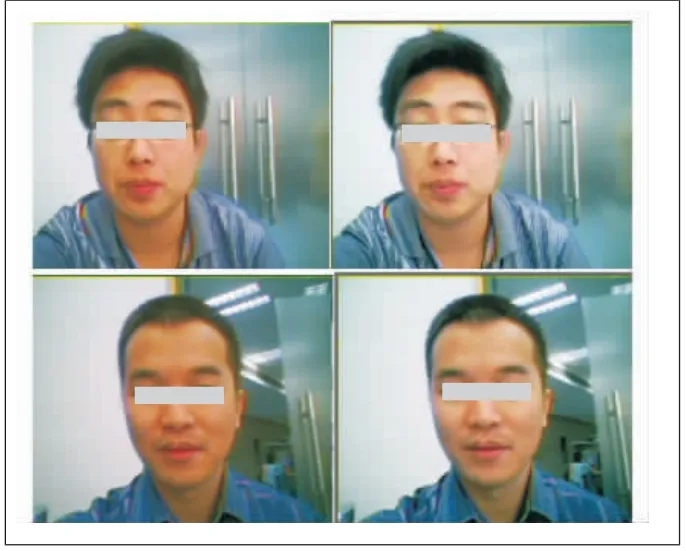

In the color enhancement experiments, the average processing time for a QCIF image of 176×144 pixels is 0.065 s per frame. The experimental results are shown in Figure 8, where the left picture is from the original sequence and the right one is from the enhanced sequence. Comparing the two pictures, one can see that the original image is slightly shifted towards red, with a poor sense of depth and uneven brightness distribution, so the subjective impression is dim and dull. The right one is bright, has a sense of depth, and its brightness is evenly distributed. In particular, the skin color and the background (wall) are greatly improved. In addition, no flicker or spots are found in the processed sequences. Therefore, the enhanced pictures are more pleasing to human eyes than the original ones.
